Matthew Cooper

Widespread Increases in Future Wildfire Risk to Global Forest Carbon Offset Projects Revealed by Explainable AI

May 03, 2023

Abstract: Carbon offset programs are critical in the fight against climate change. One emerging threat to the long-term stability and viability of forest carbon offset projects is wildfires, which can release large amounts of carbon and limit the efficacy of associated offsetting credits. However, analysis of wildfire risk to forest carbon projects is challenging because existing models for forecasting long-term fire risk are limited in predictive accuracy. Therefore, we propose an explainable artificial intelligence (XAI) model trained on 7 million global satellite wildfire observations. Validation results suggest substantial potential for high-resolution, enhanced-accuracy projections of global wildfire risk, and the model outperforms the U.S. National Center for Atmospheric Research's leading fire model. Applied to a collection of 190 global forest carbon projects, we find that fire exposure is projected to increase 55% [37-76%] by 2080 under a mid-range scenario (SSP2-4.5). Our results indicate that the large wildfire damages to carbon projects seen in the past decade are likely to become more frequent as forests become hotter and drier. In response, we hope the model can help wildfire managers, policymakers, and carbon market analysts preemptively quantify and mitigate long-term permanence risks to forest carbon projects.

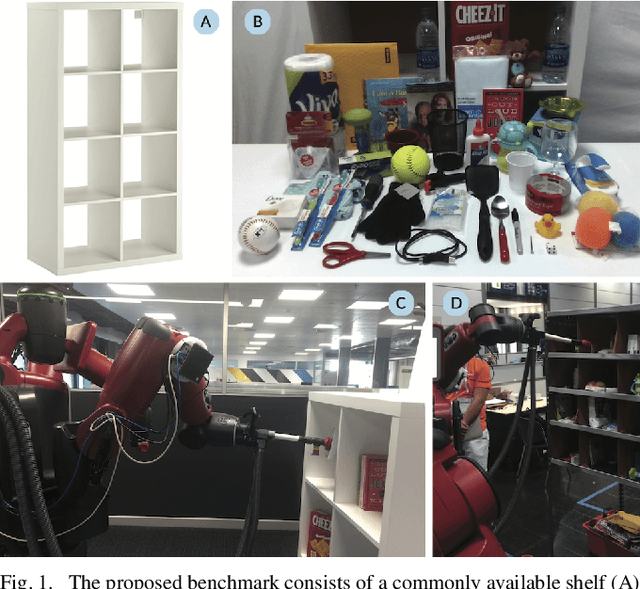

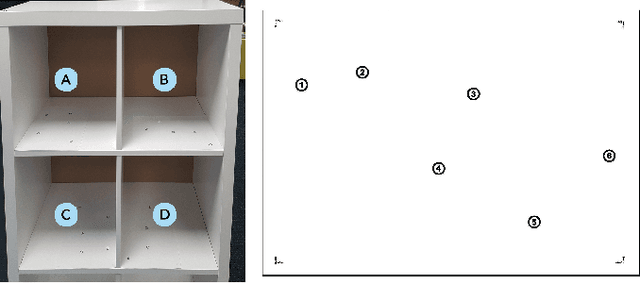

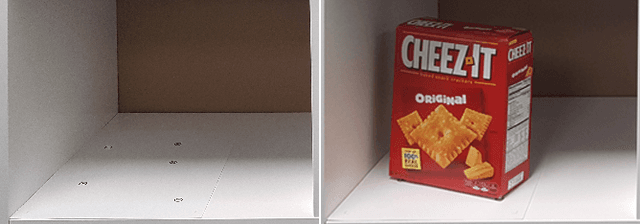

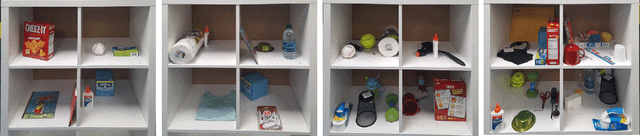

The ACRV Picking Benchmark: A Robotic Shelf Picking Benchmark to Foster Reproducible Research

Dec 14, 2016

Abstract: Robotic challenges like the Amazon Picking Challenge (APC) or the DARPA Challenges are an established and important way to drive scientific progress. They make research comparable on a well-defined benchmark with equal test conditions for all participants. However, such challenge events occur only occasionally, are limited to a small number of contestants, and the test conditions are very difficult to replicate after the main event. We present a new physical benchmark challenge for robotic picking: the ACRV Picking Benchmark (APB). Designed to be reproducible, it consists of a set of 42 common objects, a widely available shelf, and exact guidelines for object arrangement using stencils. A well-defined evaluation protocol enables the comparison of *complete* robotic systems, including perception and manipulation, instead of sub-systems only. Our paper also describes and reports results achieved by an open baseline system based on a Baxter robot.
