Christophe Andrieu

Comparison of Markov chains via weak Poincaré inequalities with application to pseudo-marginal MCMC

Dec 10, 2021

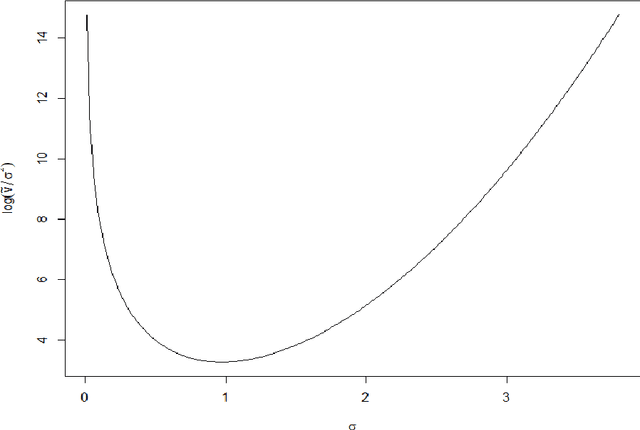

Abstract: We investigate the use of a certain class of functional inequalities known as weak Poincaré inequalities to bound convergence of Markov chains to equilibrium. We show that this enables the straightforward and transparent derivation of subgeometric convergence bounds for methods such as the Independent Metropolis-Hastings sampler and pseudo-marginal methods for intractable likelihoods, the latter being subgeometric in many practical settings. These results rely on novel quantitative comparison theorems between Markov chains. Associated proofs are simpler than those relying on drift/minorization conditions and the tools developed allow us to recover and further extend known results as particular cases. We are then able to provide new insights into the practical use of pseudo-marginal algorithms, analyse the effect of averaging in Approximate Bayesian Computation (ABC) and the use of products of independent averages, and also to study the case of lognormal weights relevant to particle marginal Metropolis-Hastings (PMMH).
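For readers unfamiliar with pseudo-marginal methods, the following is a minimal sketch of a pseudo-marginal Metropolis-Hastings sampler on a toy problem. It is not taken from the paper: the target (an unnormalised standard normal), the noise model (an average of `n_avg` lognormal weights with mean 1), and all function and parameter names are assumptions chosen for illustration. The point it shows is that the noisy density estimate at the current state is stored and reused, not recomputed, which is what keeps the exact target invariant.

```python
import math
import random


def noisy_density(theta, n_avg, sigma, rng):
    """Unbiased, strictly positive estimate of an unnormalised N(0, 1) density.

    Toy noise model (an assumption for illustration): the exact value is
    multiplied by the average of n_avg lognormal weights, each with mean 1.
    """
    exact = math.exp(-0.5 * theta * theta)
    weights = [
        math.exp(sigma * rng.gauss(0.0, 1.0) - 0.5 * sigma ** 2)
        for _ in range(n_avg)
    ]
    return exact * sum(weights) / n_avg


def pseudo_marginal_mh(n_iter, n_avg=10, sigma=1.0, step=1.0, seed=0):
    """Random-walk pseudo-marginal MH; returns (chain, acceptance_rate)."""
    rng = random.Random(seed)
    theta = 0.0
    est = noisy_density(theta, n_avg, sigma, rng)  # estimate kept with the state
    chain = []
    accepted = 0
    for _ in range(n_iter):
        prop = theta + step * rng.gauss(0.0, 1.0)  # symmetric proposal
        prop_est = noisy_density(prop, n_avg, sigma, rng)
        # Accept with probability min(1, prop_est / est). The current
        # estimate `est` is reused here and never refreshed.
        if rng.random() * est < prop_est:
            theta, est = prop, prop_est
            accepted += 1
        chain.append(theta)
    return chain, accepted / n_iter


if __name__ == "__main__":
    chain, rate = pseudo_marginal_mh(5000)
    print("acceptance rate:", rate)
    print("sample mean:", sum(chain) / len(chain))
```

Increasing `n_avg` reduces the variance of the weights and typically raises the acceptance rate, at the price of more work per iteration. In this toy setting the chain tends to get stuck for long stretches when `sigma` is large and `n_avg` is small, which is the kind of behaviour the abstract's discussion of averaging and lognormal weights is concerned with.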

Nonreversible MCMC from conditional invertible transforms: a complete recipe with convergence guarantees

Dec 31, 2020

Abstract: Markov Chain Monte Carlo (MCMC) is a class of algorithms to sample complex and high-dimensional probability distributions. The Metropolis-Hastings (MH) algorithm, the workhorse of MCMC, provides a simple recipe to construct reversible Markov kernels. Reversibility is a tractable property which implies a less tractable but essential property here, invariance. Reversibility is however not necessarily desirable when considering performance. This has prompted recent interest in designing kernels breaking this property. At the same time, an active stream of research has focused on the design of novel versions of the MH kernel, some nonreversible, relying on the use of complex invertible deterministic transforms. While standard implementations of the MH kernel are well understood, the aforementioned developments have not received the same systematic treatment to ensure their validity. This paper fills the gap by developing general tools to ensure that a class of nonreversible Markov kernels, possibly relying on complex transforms, has the desired invariance property and leads to convergent algorithms. This leads to a set of simple and practically verifiable conditions.
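The statement that reversibility implies invariance can be checked in two lines. The notation below is an assumption, not the paper's: $\pi$ is the target distribution on a space $\mathsf{X}$, $P$ is the Markov kernel, and $A$ is any measurable set. Integrating the detailed balance identity over $x \in \mathsf{X}$ and $y \in A$ gives invariance:

```latex
\begin{align*}
\pi(\mathrm{d}x)\,P(x,\mathrm{d}y) &= \pi(\mathrm{d}y)\,P(y,\mathrm{d}x)
  && \text{(reversibility, i.e. detailed balance)} \\
\int_{\mathsf{X}} \pi(\mathrm{d}x)\,P(x,A)
  &= \int_{A} \pi(\mathrm{d}y)\,P(y,\mathsf{X})
   = \pi(A)
  && \text{(invariance, since } P(y,\mathsf{X}) = 1\text{)}
\end{align*}
```

The converse does not hold: a kernel can leave $\pi$ invariant without satisfying detailed balance, which is the room that nonreversible constructions exploit.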

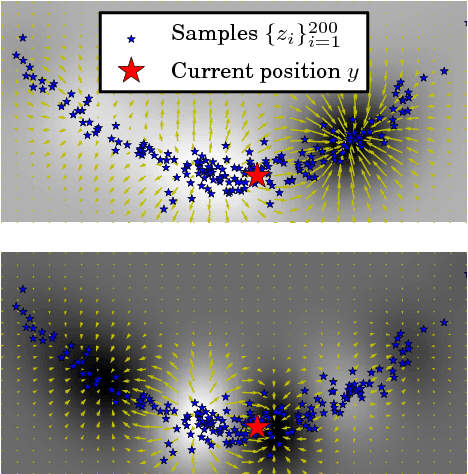

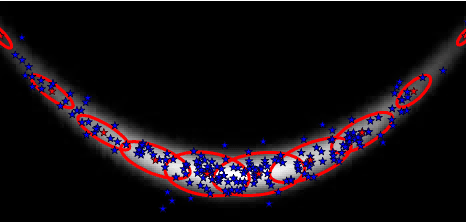

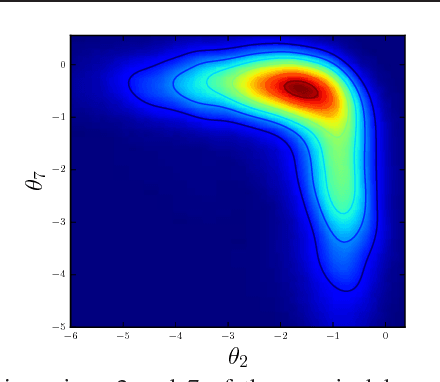

Kernel Adaptive Metropolis-Hastings

Jun 12, 2014

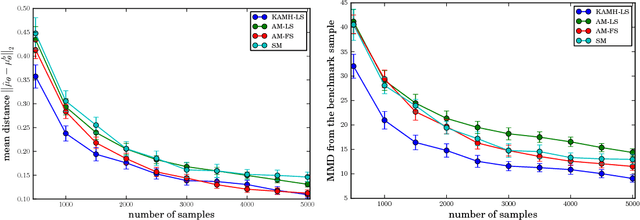

Abstract: A Kernel Adaptive Metropolis-Hastings algorithm is introduced, for the purpose of sampling from a target distribution with strongly nonlinear support. The algorithm embeds the trajectory of the Markov chain into a reproducing kernel Hilbert space (RKHS), such that the feature space covariance of the samples informs the choice of proposal. The procedure is computationally efficient and straightforward to implement, since the RKHS moves can be integrated out analytically: our proposal distribution in the original space is a normal distribution whose mean and covariance depend on where the current sample lies in the support of the target distribution, and adapts to its local covariance structure. Furthermore, the procedure requires neither gradients nor any other higher order information about the target, making it particularly attractive for contexts such as Pseudo-Marginal MCMC. Kernel Adaptive Metropolis-Hastings outperforms competing fixed and adaptive samplers on multivariate, highly nonlinear target distributions, arising in both real-world and synthetic examples. Code may be downloaded at https://github.com/karlnapf/kameleon-mcmc.

Reversible Jump MCMC Simulated Annealing for Neural Networks

Jan 16, 2013

Abstract: We propose a novel reversible jump Markov chain Monte Carlo (MCMC) simulated annealing algorithm to optimize radial basis function (RBF) networks. This algorithm enables us to maximize the joint posterior distribution of the network parameters and the number of basis functions. It performs a global search in the joint space of the parameters and number of parameters, thereby surmounting the problem of local minima. We also show that by calibrating a Bayesian model, we can obtain the classical AIC, BIC and MDL model selection criteria within a penalized likelihood framework. Finally, we show theoretically and empirically that the algorithm converges to the modes of the full posterior distribution in an efficient way.
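For reference, the model selection criteria named in the abstract are usually written in the following textbook forms. These are the standard definitions, not the paper's derivation, and the symbols are assumptions: $\hat{L}_k$ is the maximised likelihood of a model with $k$ free parameters and $n$ is the number of observations. Each criterion is a negative log-likelihood plus a penalty that grows with $k$, which is what "penalized likelihood framework" refers to:

```latex
\begin{align*}
\mathrm{AIC}(k) &= -2 \log \hat{L}_k + 2k \\
\mathrm{BIC}(k) &= -2 \log \hat{L}_k + k \log n \\
\mathrm{MDL}(k) &= -\log \hat{L}_k + \tfrac{k}{2} \log n
  \qquad \text{(two-part code form, equal to } \tfrac{1}{2}\,\mathrm{BIC}(k)\text{)}
\end{align*}
```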
