Christoffer Sloth

Manipulation via Force Distribution at Contact

Feb 03, 2026

Abstract:Efficient and robust trajectories play a crucial role in contact-rich manipulation, which demands accurate modeling of object-robot interactions. Many existing approaches rely on point contact models due to their computational efficiency. Such simple contact models are inherently limited for achieving human-like, contact-rich manipulation, as they fail to capture key frictional dynamics and torque generation observed in human manipulation. This study introduces a Force-Distributed Line Contact (FDLC) model for contact-rich manipulation and compares it against conventional point contact models. A bi-level optimization framework is constructed, in which the lower level solves an optimization problem for contact force computation, and the upper level applies iLQR for trajectory optimization. Through this framework, the limitations of point contact are demonstrated, and the benefits of the FDLC in generating efficient and robust trajectories are established. The effectiveness of the proposed approach is validated by a box rotating task, demonstrating that FDLC enables trajectories generated via non-uniform force distributions along the contact line, while requiring lower control effort and less motion of the robot.
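To illustrate why a force distribution along a contact line can generate torque where a single point contact cannot, the following minimal sketch sums discretized normal forces along a line. It is not the FDLC model from the paper: the function name `net_wrench`, the discretization into sample points, and the choice of the line's midpoint as the torque reference are all assumptions made for illustration.

```python
def net_wrench(positions, normal_forces):
    """Return (total normal force, torque about the line's origin).

    positions: signed distances of the sample points along the contact
        line, measured from the torque reference point (hypothetical setup).
    normal_forces: normal force applied at each sample point.
    """
    if len(positions) != len(normal_forces):
        raise ValueError("positions and normal_forces must have equal length")
    total_force = sum(normal_forces)
    # Each force contributes a moment equal to its lever arm times its magnitude.
    torque = sum(s * f for s, f in zip(positions, normal_forces))
    return total_force, torque


# Three sample points on a 0.2 m contact line, centred at the origin.
points = [-0.1, 0.0, 0.1]
uniform = net_wrench(points, [1.0, 1.0, 1.0])      # symmetric load, no net torque
non_uniform = net_wrench(points, [0.5, 1.0, 1.5])  # same total force, non-zero torque
```

In this toy setup both distributions carry the same total normal force, but only the non-uniform one produces a net moment about the midpoint, which is the qualitative effect the abstract attributes to non-uniform force distributions.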

Solving Robotics Tasks with Prior Demonstration via Exploration-Efficient Deep Reinforcement Learning

Sep 04, 2025

Abstract:This paper proposes an exploration-efficient Deep Reinforcement Learning with Reference policy (DRLR) framework for learning robotics tasks that incorporates demonstrations. The DRLR framework is developed based on an algorithm called Imitation Bootstrapped Reinforcement Learning (IBRL). We propose to improve IBRL by modifying the action selection module. The proposed action selection module provides a calibrated Q-value, which mitigates the bootstrapping error that otherwise leads to inefficient exploration. Furthermore, to prevent the RL policy from converging to a sub-optimal policy, SAC is used as the RL policy instead of TD3. The effectiveness of our method in mitigating bootstrapping error and preventing overfitting is empirically validated by learning two robotics tasks: bucket loading and open drawer, which require extensive interactions with the environment. Simulation results also demonstrate the robustness of the DRLR framework across tasks with both low and high state-action dimensions, and varying demonstration qualities. To evaluate the developed framework on a real-world industrial robotics task, the bucket loading task is deployed on a real wheel loader. The sim2real results validate the successful deployment of the DRLR framework.
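For context, the action selection step that the abstract proposes to modify can be sketched as follows. This shows only the baseline idea commonly associated with IBRL, picking between the imitation policy's action and the RL policy's action according to a Q-function; it does not implement the calibrated Q-value described above. The names `select_action`, `il_policy`, `rl_policy`, and `q_function` are hypothetical placeholders.

```python
def select_action(state, il_policy, rl_policy, q_function):
    """Pick whichever candidate action the critic scores higher.

    il_policy, rl_policy: callables mapping a state to an action.
    q_function: callable mapping (state, action) to a scalar value estimate.
    """
    candidates = [il_policy(state), rl_policy(state)]
    # Greedy choice over the two proposals; ties go to the imitation action.
    return max(candidates, key=lambda action: q_function(state, action))


# Toy usage with scalar states and actions (purely illustrative).
chosen = select_action(
    state=1.0,
    il_policy=lambda s: 0.2,
    rl_policy=lambda s: 0.8,
    q_function=lambda s, a: -(a - 0.7) ** 2,  # critic prefers actions near 0.7
)
```

Because the choice depends entirely on the Q-estimates, an inaccurate critic can favour the wrong proposal, which is one way to read the abstract's motivation for calibrating the Q-value.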

Robust Adaptive Time-Varying Control Barrier Function with Application to Robotic Surface Treatment

Jun 17, 2025

Abstract:Set invariance techniques such as control barrier functions (CBFs) can be used to enforce time-varying constraints such as keeping a safe distance from dynamic objects. However, existing methods for enforcing time-varying constraints often overlook model uncertainties. To address this issue, this paper proposes a CBF-based robust adaptive controller design that enforces time-varying constraints while considering parametric uncertainty and additive disturbances. To this end, we first leverage Robust adaptive Control Barrier Functions (RaCBFs) to handle model uncertainty, along with the concept of Input-to-State Safety (ISSf) to ensure robustness towards input disturbances. Furthermore, to alleviate the inherent conservatism in robustness, we also incorporate a set membership identification scheme. We demonstrate the proposed method on robotic surface treatment that requires time-varying force bounds to ensure uniform quality, in numerical simulation and on a real robotic setup, showing that the quality is formally guaranteed within an acceptable range.
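As a point of reference for the time-varying constraint mentioned above, the sketch below implements a plain (non-robust, non-adaptive) CBF safety filter for a single-integrator model x_dot = u with one constraint h(x, t) >= 0. It uses the standard closed-form solution of the quadratic program min ||u - u_nom||^2 subject to grad_h . u + dh/dt + alpha * h >= 0. The model choice, the linear class-K term `alpha * h`, and the function name `cbf_filter` are assumptions for illustration; the RaCBF, ISSf, and set membership components of the paper are not represented.

```python
def cbf_filter(u_nom, grad_h, dh_dt, h, alpha):
    """Minimally modify u_nom so the time-varying CBF condition holds.

    u_nom: nominal input (list of floats) for the model x_dot = u.
    grad_h: gradient of h with respect to x at the current state.
    dh_dt: partial derivative of h with respect to time.
    h: current barrier value; alpha: positive gain on h.
    """
    # Left-hand side of the CBF condition evaluated at the nominal input.
    residual = sum(g * u for g, u in zip(grad_h, u_nom)) + dh_dt + alpha * h
    if residual >= 0.0:
        return list(u_nom)  # nominal input already satisfies the condition
    norm_sq = sum(g * g for g in grad_h)
    if norm_sq == 0.0:
        raise ValueError("constraint cannot be enforced: grad_h is zero")
    # Project u_nom onto the boundary of the admissible half-space.
    scale = residual / norm_sq
    return [u - scale * g for u, g in zip(u_nom, grad_h)]


# Moving toward the boundary at 1.0 while h = 0.5 and alpha = 1.0.
u_safe = cbf_filter(u_nom=[1.0, 0.0], grad_h=[-1.0, 0.0], dh_dt=0.0, h=0.5, alpha=1.0)
```

In this example the nominal input is scaled back along the first axis so that the condition holds with equality; a moving boundary would enter through a non-zero `dh_dt`.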

Towards Data-Driven Model-Free Safety-Critical Control

Jun 07, 2025

Abstract:This paper presents a framework for enabling safe velocity control of general robotic systems using data-driven model-free Control Barrier Functions (CBFs). Model-free CBFs rely on an exponentially stable velocity controller and a design parameter (e.g. alpha in CBFs); this design parameter depends on the exponential decay rate of the controller. However, in practice, the decay rate is often unavailable, making it non-trivial to use model-free CBFs, as it requires manual tuning of alpha. To address this, a Neural Network is used to learn the Lyapunov function from data, and the maximum decay rate of the system's built-in velocity controller is subsequently estimated. Furthermore, to integrate the estimated decay rate with model-free CBFs, we derive a probabilistic safety condition that incorporates a confidence bound on the violation rate of the exponential stability condition, using the Chernoff bound. This enhances robustness against uncertainties in stability violations. The proposed framework has been tested on a UR5e robot in multiple experimental settings, and its effectiveness in ensuring safe velocity control with model-free CBFs has been demonstrated.
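The idea of placing a confidence bound on a violation rate can be sketched with a Hoeffding-type inequality, a common Chernoff-style concentration bound. This is only an assumed stand-in: the abstract does not state which form of the bound the paper uses, and the function name `violation_rate_upper_bound` and its parameters are hypothetical. Given independent samples, the true violation rate exceeds the returned value with probability at most `delta`.

```python
import math


def violation_rate_upper_bound(num_violations, num_samples, delta):
    """One-sided upper confidence bound on a violation probability.

    Uses Hoeffding's inequality: P(p - p_hat >= t) <= exp(-2 * n * t**2),
    solved for t at confidence level 1 - delta. Assumes i.i.d. samples.
    """
    if num_samples <= 0 or not 0.0 < delta < 1.0:
        raise ValueError("need num_samples > 0 and 0 < delta < 1")
    p_hat = num_violations / num_samples  # empirical violation rate
    margin = math.sqrt(math.log(1.0 / delta) / (2.0 * num_samples))
    return min(1.0, p_hat + margin)


# 10 violations of the stability condition observed in 1000 samples, 95% confidence.
bound = violation_rate_upper_bound(num_violations=10, num_samples=1000, delta=0.05)
```

The margin shrinks as the number of samples grows, so more data yields a tighter bound on how often the learned stability condition may be violated.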

Imitation Learning-Based Path Generation for the Complex Assembly of Deformable Objects

May 30, 2025

Abstract:This paper investigates how learning can be used to ease the design of high-quality paths for the assembly of deformable objects. Object dynamics plays an important role when manipulating deformable objects; thus, detailed models are often used when conducting motion planning for deformable objects. We propose to use human demonstrations and learning to enable motion planning of deformable objects with only simple dynamical models of the objects. In particular, we use offline collision-free path planning to generate a large number of reference paths based on a simple model of the deformable object. Subsequently, we execute the collision-free paths on a robot with compliant control such that a human can slightly modify the path to complete the task successfully. Finally, based on the virtual path data sets and the human-corrected ones, we use behavior cloning (BC) to create a dexterous policy that follows one reference path to finish a given task.

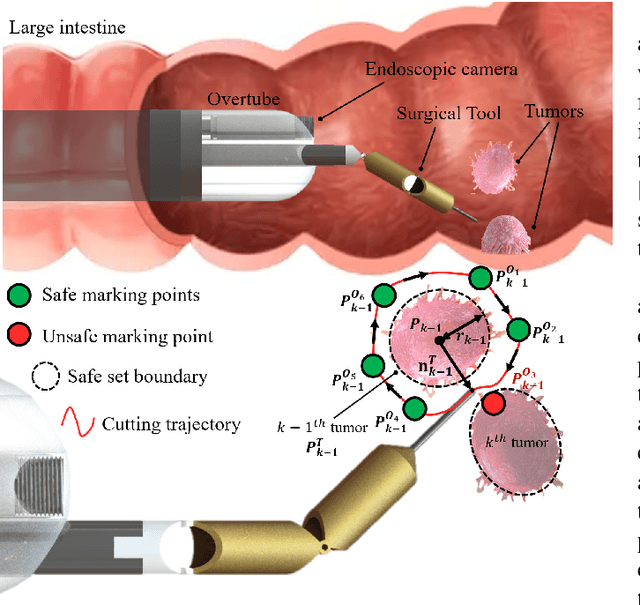

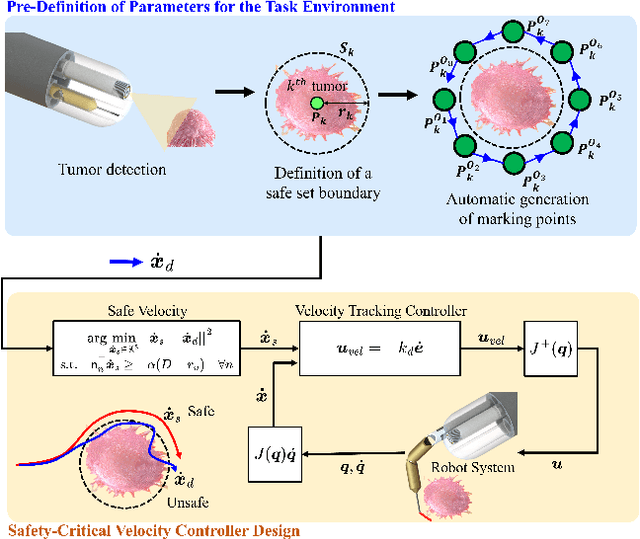

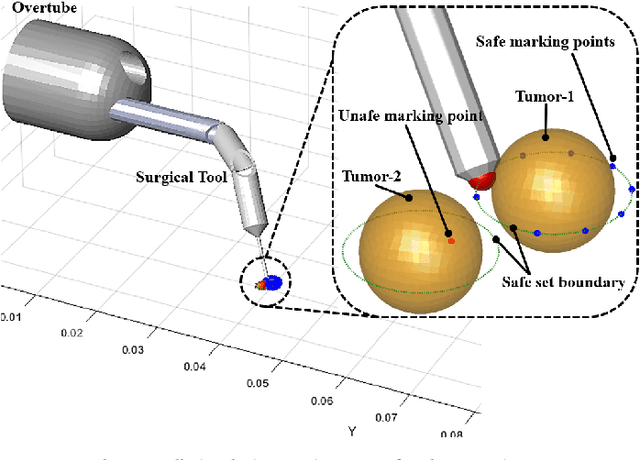

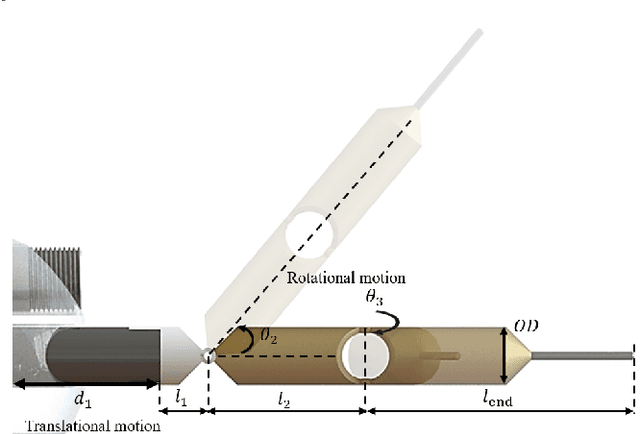

Safety-Ensured Control Framework for Robotic Endoscopic Task Automation

Mar 11, 2025

Abstract:There is growing interest in automating surgical tasks using robotic systems, such as endoscopy for treating gastrointestinal (GI) cancer. However, previous studies have primarily focused on detecting and analyzing objects or robots, with limited attention to ensuring safety, which is critical for clinical applications, where accidents can be caused by unsafe robot motions. In this study, we propose a new control framework that can formally ensure the safety of automating certain processes involved in endoscopic submucosal dissection (ESD), a representative endoscopic surgical method for the treatment of early GI cancer, by using an endoscopic robot. The proposed framework utilizes Control Barrier Functions (CBFs) to accurately identify the boundaries of individual tumors, even in close proximity within the GI tract, ensuring precise treatment and removal while preserving the surrounding normal tissue. Additionally, by adopting a model-free control scheme, safety assurance is made possible even in endoscopic robotic systems where dynamic modeling is challenging. We demonstrate the proposed framework in cases where the tumors to be removed are close to each other, showing that the safety constraints are enforced. We show that the model-free CBF-based controlled robot eliminates one tumor completely without damaging it, while not invading another nearby tumor.

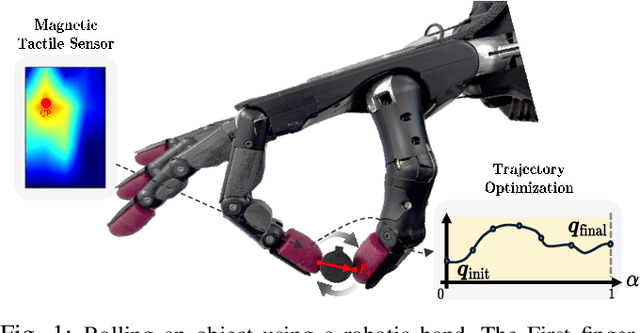

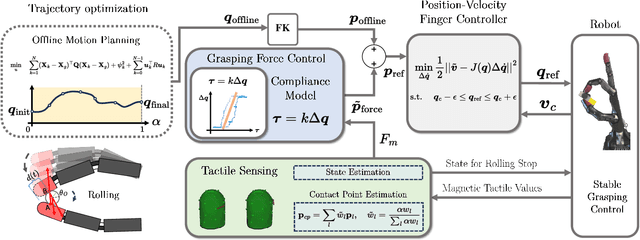

Trajectory Optimization for In-Hand Manipulation with Tactile Force Control

Mar 11, 2025

Abstract:The strength of the human hand lies in its ability to manipulate small objects precisely and robustly. In contrast, simple robotic grippers have low dexterity and fail to handle small objects effectively. This is why many automation tasks remain unsolved by robots. This paper presents an optimization-based framework for in-hand manipulation with a robotic hand equipped with compact Magnetic Tactile Sensors (MTSs). The small form factor of the robotic hand from Shadow Robot introduces challenges in estimating the state of the object while satisfying contact constraints. To address this, we formulate a trajectory optimization problem using Nonlinear Programming (NLP) for finger movements while ensuring that contact points change along the geometry of the fingers. Using the optimized trajectory from the solver, we implement and test an open-loop controller for rolling motion. To further enhance robustness and accuracy, we introduce a force controller for the fingers and a state estimator for the object utilizing MTSs. The proposed framework is validated through comparative experiments, showing that incorporating the force control with compliance consideration improves the accuracy and robustness of the rolling motion. Rolling an object with the force controller is 30% more likely to succeed than running an open-loop controller. The demonstration video is available at https://youtu.be/6J_muL_AyE8.

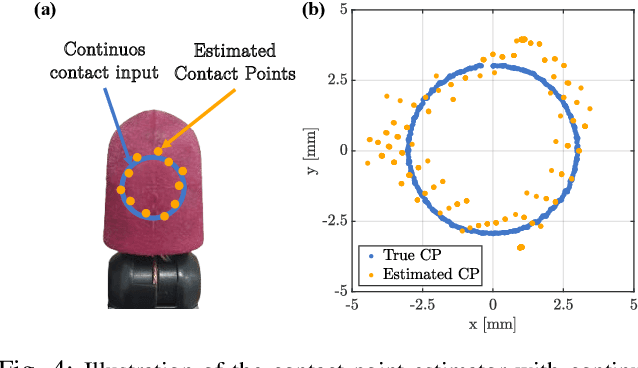

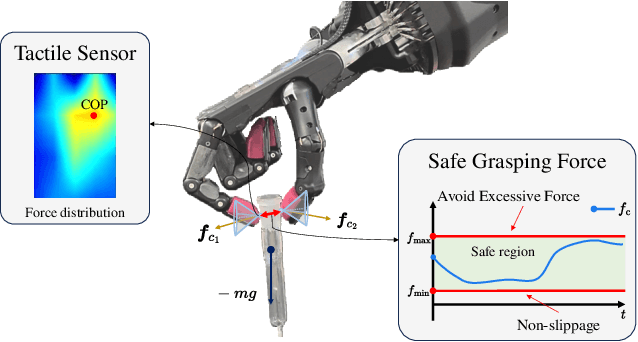

Robust Adaptive Safe Robotic Grasping with Tactile Sensing

Nov 12, 2024

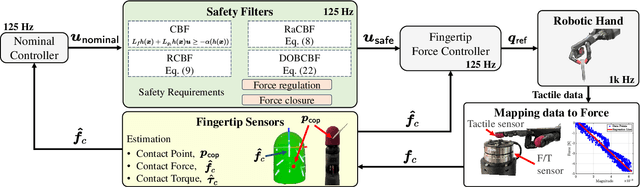

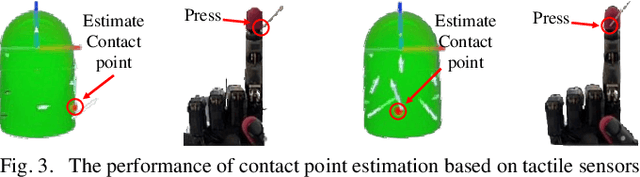

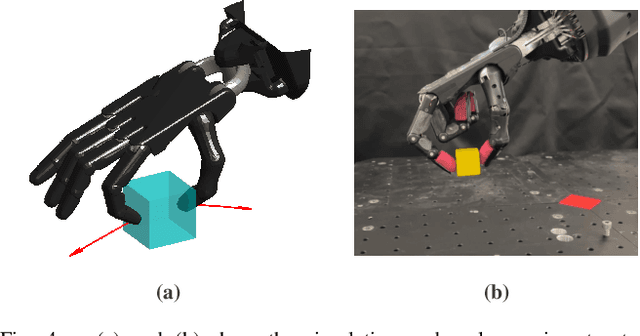

Abstract:Robotic grasping requires safe force interaction to prevent a grasped object from being damaged or slipping out of the hand. In this vein, this paper proposes an integrated framework for grasping with formal safety guarantees based on Control Barrier Functions. We first design contact force and force closure constraints, which are enforced by a safety filter to accomplish safe grasping with finger force control. For sensory feedback, we develop a technique to estimate contact point, force, and torque from tactile sensors at each finger. We verify the framework with various safety filters in a numerical simulation under a two-finger grasping scenario. We then experimentally validate the framework by grasping multiple objects, including fragile lab glassware, in a real robotic setup, showing that safe grasping can be successfully achieved in the real world. We evaluate the performance of each safety filter in the context of safety violation and conservatism, and find that disturbance observer-based control barrier functions provide superior performance for safety guarantees with minimum conservatism. The demonstration video is available at https://youtu.be/Cuj47mkXRdg.

Fast robust peg-in-hole insertion with continuous visual servoing

Nov 12, 2020

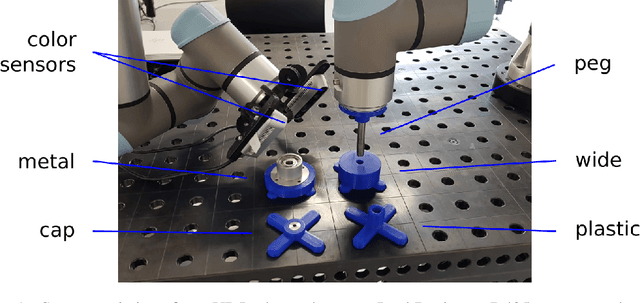

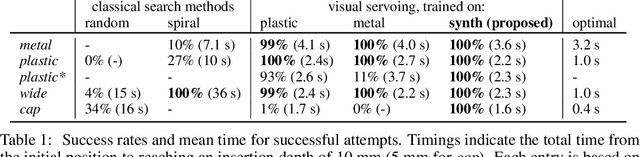

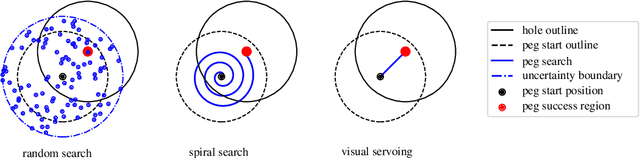

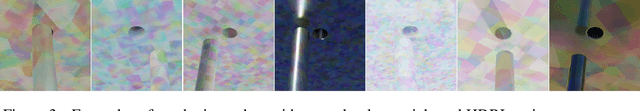

Abstract:This paper demonstrates a visual servoing method which is robust towards uncertainties related to system calibration and grasping, while significantly reducing the peg-in-hole time compared to classical methods and recent attempts based on deep learning. The proposed visual servoing method is based on peg and hole point estimates from a deep neural network in a multi-cam setup, where the model is trained on purely synthetic data. Empirical results show that the learnt model generalizes to the real world, allowing for higher success rates and lower cycle times than existing approaches.
