Chris Hokamp

NegotiationGym: Self-Optimizing Agents in a Multi-Agent Social Simulation Environment

Oct 05, 2025

Abstract:We design and implement NegotiationGym, an API and user interface for configuring and running multi-agent social simulations focused upon negotiation and cooperation. The NegotiationGym codebase offers a user-friendly, configuration-driven API that enables easy design and customization of simulation scenarios. Agent-level utility functions encode optimization criteria for each agent, and agents can self-optimize by conducting multiple interaction rounds with other agents, observing outcomes, and modifying their strategies for future rounds.

Narrative Studio: Visual narrative exploration using LLMs and Monte Carlo Tree Search

Apr 03, 2025

Abstract:Interactive storytelling benefits from planning and exploring multiple 'what if' scenarios. Modern LLMs are useful tools for ideation and exploration, but current chat-based user interfaces restrict users to a single linear flow. To address this limitation, we propose Narrative Studio -- a novel in-browser narrative exploration environment featuring a tree-like interface that allows branching exploration from user-defined points in a story. Each branch is extended via iterative LLM inference guided by system and user-defined prompts. Additionally, we employ Monte Carlo Tree Search (MCTS) to automatically expand promising narrative paths based on user-specified criteria, enabling more diverse and robust story development. We also allow users to enhance narrative coherence by grounding the generated text in an entity graph that represents the actors and environment of the story.
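As a rough illustration of how MCTS can decide which story branch to expand next, the sketch below uses the standard UCT selection rule. It is an assumption that Narrative Studio uses this exact rule; the `Node` class, the exploration constant `c`, and the scores are hypothetical, and the step that would score a branch against user-specified criteria is left out.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """One story continuation in the branching tree (hypothetical structure)."""
    text: str
    visits: int = 0
    total_score: float = 0.0
    children: list = field(default_factory=list)


def uct_score(parent: Node, child: Node, c: float = 1.4) -> float:
    """Standard UCT: mean score plus an exploration bonus for rarely visited branches."""
    if child.visits == 0:
        return float("inf")  # always try unvisited branches first
    exploit = child.total_score / child.visits
    explore = c * math.sqrt(math.log(parent.visits) / child.visits)
    return exploit + explore


def select_child(parent: Node, c: float = 1.4) -> Node:
    """Pick the child branch with the highest UCT score."""
    return max(parent.children, key=lambda ch: uct_score(parent, ch, c))


def backpropagate(path: list, score: float) -> None:
    """Credit an evaluation score to every node on the path from root to leaf."""
    for node in path:
        node.visits += 1
        node.total_score += score
```

In a full search loop, `select_child` would be applied repeatedly from the root down to a leaf, the leaf would be extended and scored, and `backpropagate` would update the statistics along that path.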

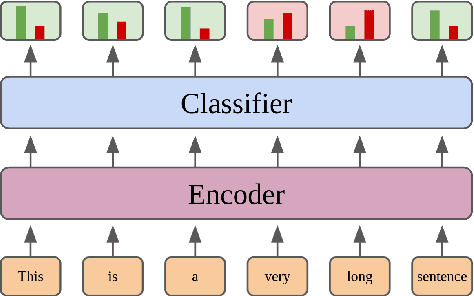

GLiREL -- Generalist Model for Zero-Shot Relation Extraction

Jan 06, 2025

Abstract:We introduce GLiREL (Generalist Lightweight model for zero-shot Relation Extraction), an efficient architecture and training paradigm for zero-shot relation classification. Inspired by recent advancements in zero-shot named entity recognition, this work presents an approach to efficiently and accurately predict zero-shot relationship labels between multiple entities in a single forward pass. Experiments using the FewRel and WikiZSL benchmarks demonstrate that our approach achieves state-of-the-art results on the zero-shot relation classification task. In addition, we contribute a protocol for synthetically generating datasets with diverse relation labels.

KGValidator: A Framework for Automatic Validation of Knowledge Graph Construction

Apr 24, 2024

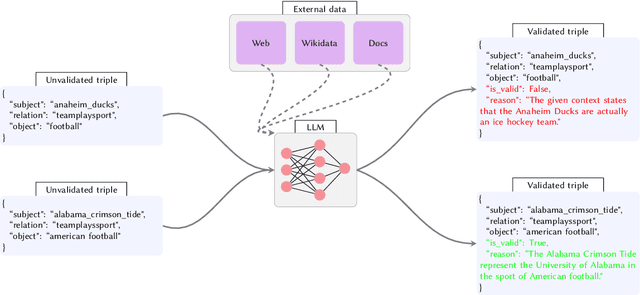

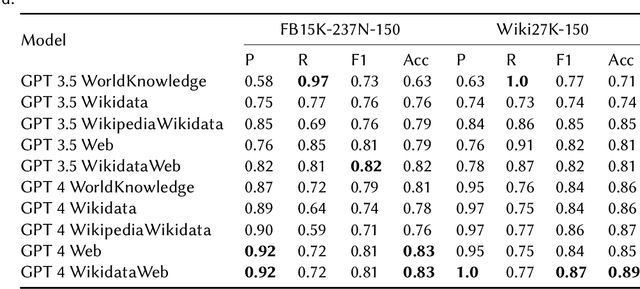

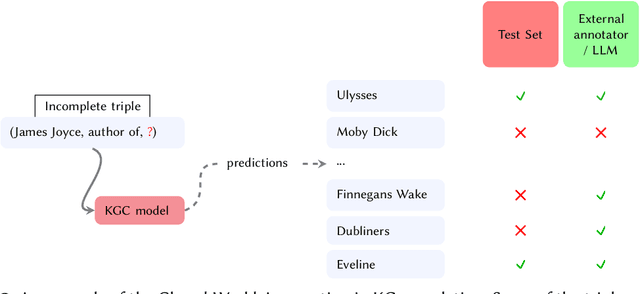

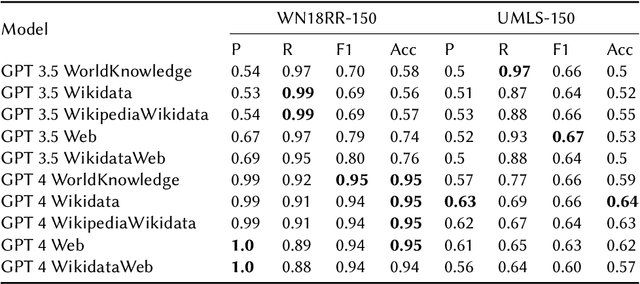

Abstract:This study explores the use of Large Language Models (LLMs) for automatic evaluation of knowledge graph (KG) completion models. Historically, validating information in KGs has been a challenging task, requiring large-scale human annotation at prohibitive cost. With the emergence of general-purpose generative AI and LLMs, it is now plausible that human-in-the-loop validation could be replaced by a generative agent. We introduce a framework for consistency and validation when using generative models to validate knowledge graphs. Our framework is based upon recent open-source developments for structural and semantic validation of LLM outputs, and upon flexible approaches to fact checking and verification, supported by the capacity to reference external knowledge sources of any kind. The design is easy to adapt and extend, and can be used to verify any kind of graph-structured data through a combination of model-intrinsic knowledge, user-supplied context, and agents capable of external knowledge retrieval.

News Signals: An NLP Library for Text and Time Series

Dec 18, 2023

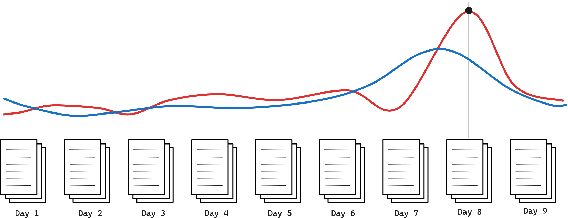

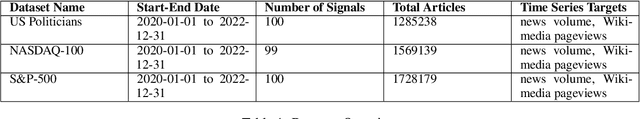

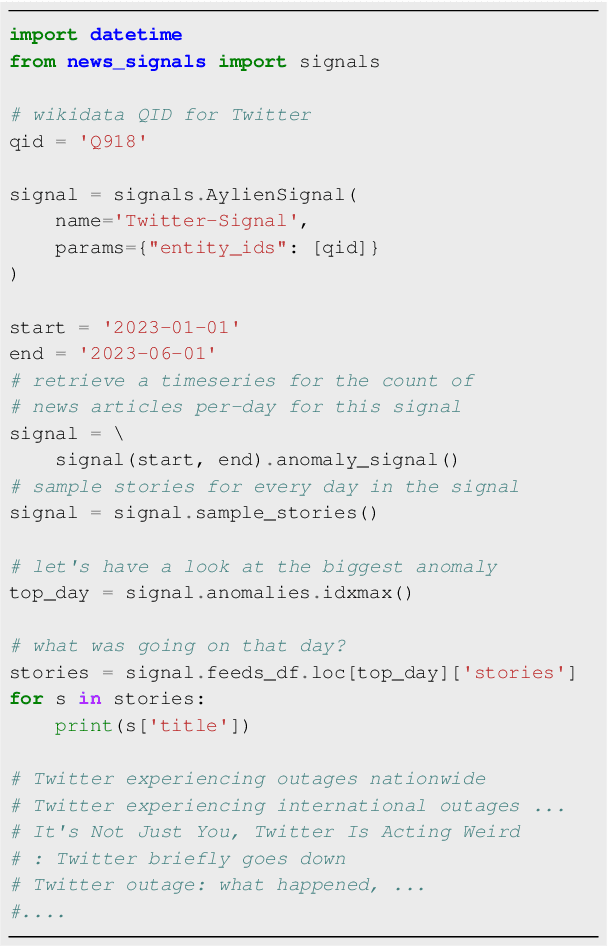

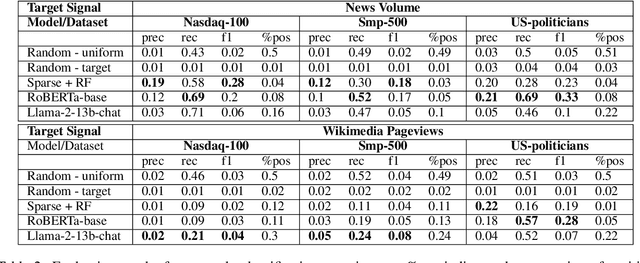

Abstract:We present an open-source Python library for building and using datasets where inputs are clusters of textual data, and outputs are sequences of real values representing one or more time series signals. The news-signals library supports diverse data science and NLP problem settings related to the prediction of time series behaviour using textual data feeds. For example, in the news domain, inputs are document clusters corresponding to daily news articles about a particular entity, and targets are explicitly associated real-valued time series: the volume of news about a particular person or company, or the number of pageviews of specific Wikimedia pages. Despite many industry and research use cases for this class of problem settings, to the best of our knowledge, News Signals is the only open-source library designed specifically to facilitate data science and research settings with natural language inputs and time series targets. In addition to the core codebase for building and interacting with datasets, we also conduct a suite of experiments using several popular Machine Learning libraries, which are used to establish baselines for time series anomaly prediction using textual inputs.

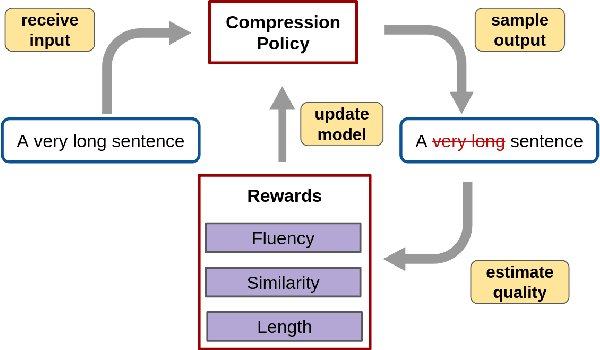

Efficient Unsupervised Sentence Compression by Fine-tuning Transformers with Reinforcement Learning

May 17, 2022

Abstract:Sentence compression reduces the length of text by removing non-essential content while preserving important facts and grammaticality. Unsupervised objective-driven methods for sentence compression can be used to create customized models without the need for ground-truth training data, while allowing flexibility in the objective function(s) that are used for learning and inference. Recent unsupervised sentence compression approaches use custom objectives to guide discrete search; however, guided search is expensive at inference time. In this work, we explore the use of reinforcement learning to train effective sentence compression models that are also fast when generating predictions. In particular, we cast the task as binary sequence labelling and fine-tune a pre-trained transformer using a simple policy gradient approach. Our approach outperforms other unsupervised models while also being more efficient at inference time.
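To make the "binary sequence labelling with policy gradient" framing concrete, here is a minimal toy sketch. It is not the paper's implementation: a table of per-token logits stands in for the fine-tuned transformer, and the reward (coverage of hand-marked important tokens minus a length penalty) is a hypothetical objective chosen only for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_mask(logits, rng):
    """Sample a keep (1) / drop (0) label per token from independent Bernoullis."""
    return [1 if rng.random() < sigmoid(z) else 0 for z in logits]


def reward(mask, important, target_len):
    """Hypothetical objective: coverage of important tokens minus a length penalty."""
    kept_important = sum(m for m, imp in zip(mask, important) if imp)
    coverage = kept_important / max(1, sum(important))
    length_penalty = abs(sum(mask) - target_len) / len(mask)
    return coverage - length_penalty


def reinforce_step(logits, important, target_len, rng, lr=0.5, n_samples=16):
    """One REINFORCE update with a mean-reward baseline."""
    samples = [sample_mask(logits, rng) for _ in range(n_samples)]
    rewards = [reward(m, important, target_len) for m in samples]
    baseline = sum(rewards) / len(rewards)
    grads = [0.0] * len(logits)
    for mask, r in zip(samples, rewards):
        for i, (a, z) in enumerate(zip(mask, logits)):
            # d/dz log Bernoulli(a; sigmoid(z)) = a - sigmoid(z)
            grads[i] += (r - baseline) * (a - sigmoid(z))
    return [z + lr * g / n_samples for z, g in zip(logits, grads)]


def train(tokens, important, target_len, steps=500, seed=0):
    """Learn per-token keep logits; a real model would predict them from the text."""
    rng = random.Random(seed)
    logits = [0.0] * len(tokens)
    for _ in range(steps):
        logits = reinforce_step(logits, important, target_len, rng)
    return logits


def compress(tokens, logits):
    """Inference is a single pass: keep tokens whose keep-probability exceeds 0.5."""
    return [t for t, z in zip(tokens, logits) if z > 0.0]
```

The point of the sketch is the asymmetry the abstract describes: training samples many candidate compressions, but inference needs only one labelling pass and no search.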

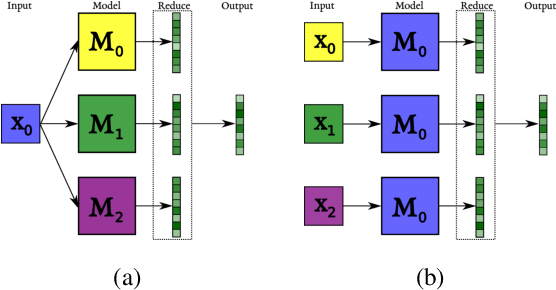

DynE: Dynamic Ensemble Decoding for Multi-Document Summarization

Jun 15, 2020

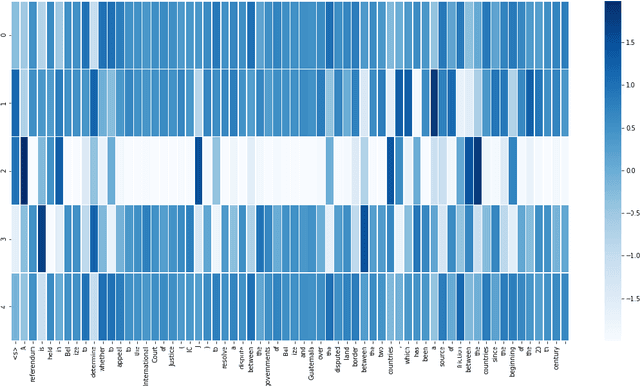

Abstract:Sequence-to-sequence (s2s) models are the basis for extensive work in natural language processing. However, some applications, such as multi-document summarization, multi-modal machine translation, and the automatic post-editing of machine translation, require mapping a set of multiple distinct inputs into a single output sequence. Recent work has introduced bespoke architectures for these multi-input settings, and developed models which can handle increasingly long inputs; however, the performance of special model architectures is limited by the available in-domain training data. In this work we propose a simple decoding methodology which ensembles the output of multiple instances of the same model on different inputs. Our proposed approach allows models trained for vanilla s2s tasks to be directly used in multi-input settings. This works particularly well when each of the inputs has significant overlap with the others, as when compressing a cluster of news articles about the same event into a single coherent summary, and we obtain state-of-the-art results on several multi-document summarization datasets.
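The decoding idea can be sketched as follows: at each step, the same model is run once per input with a shared output prefix, and the per-input next-token distributions are combined before a token is chosen. The abstract does not specify the combination rule or search strategy, so averaging log-probabilities and decoding greedily are assumptions here; `toy_step` is a hypothetical stand-in for a real single-input s2s decoder step.

```python
import math
from typing import Dict, List

VOCAB = ["storm", "hits", "coast", "city", "</s>"]


def toy_step(source: List[str], prefix: List[str]) -> Dict[str, float]:
    """Hypothetical single-input decoder step.

    Prefers to copy the source token at the current position (or end the
    sequence) and returns log-probabilities over VOCAB.
    """
    t = len(prefix)
    preferred = source[t] if t < len(source) else "</s>"
    scores = {tok: (2.0 if tok == preferred else 0.0) for tok in VOCAB}
    log_z = math.log(sum(math.exp(s) for s in scores.values()))
    return {tok: s - log_z for tok, s in scores.items()}


def ensemble_greedy_decode(step_fn, sources, max_len=10, eos="</s>"):
    """Decode one output sequence from several inputs with one shared prefix."""
    prefix: List[str] = []
    for _ in range(max_len):
        # One forward step per input, all conditioned on the same prefix.
        per_input = [step_fn(src, prefix) for src in sources]
        # Assumed combination rule: average log-probabilities across inputs.
        avg = {tok: sum(lp[tok] for lp in per_input) / len(per_input) for tok in VOCAB}
        best = max(VOCAB, key=lambda tok: avg[tok])
        if best == eos:
            break
        prefix.append(best)
    return prefix


cluster = [
    ["storm", "hits", "coast"],
    ["storm", "hits", "city"],
    ["storm", "hits", "coast"],
]
print(ensemble_greedy_decode(toy_step, cluster))  # ['storm', 'hits', 'coast']
```

Because the inputs mostly agree, the averaged distribution favors the tokens they share, which mirrors the abstract's observation that the approach works best when inputs overlap heavily.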

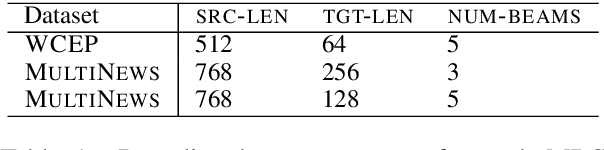

A Large-Scale Multi-Document Summarization Dataset from the Wikipedia Current Events Portal

May 20, 2020

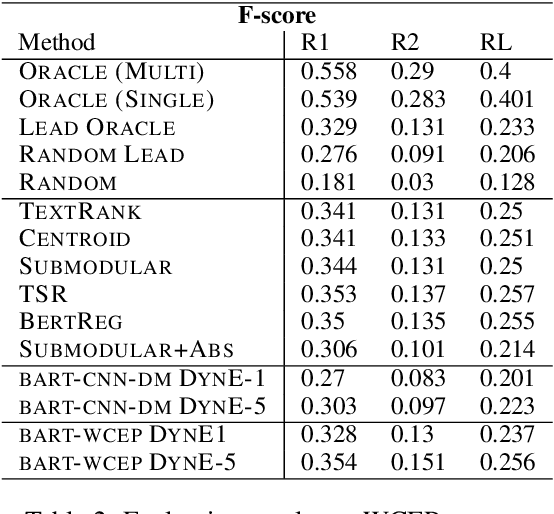

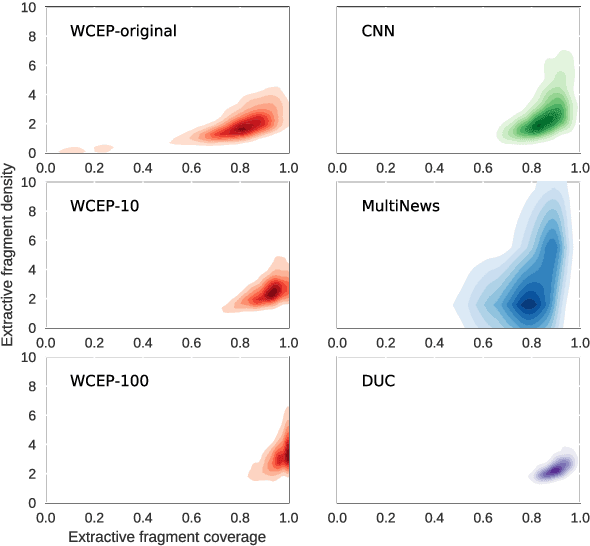

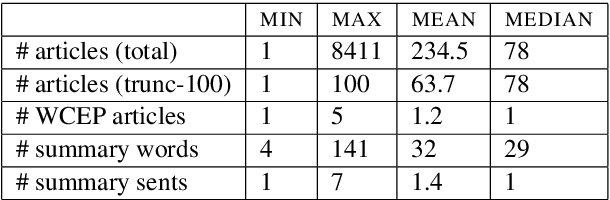

Abstract:Multi-document summarization (MDS) aims to compress the content in large document collections into short summaries and has important applications in story clustering for newsfeeds, presentation of search results, and timeline generation. However, there is a lack of datasets that realistically address such use cases at a scale large enough for training supervised models for this task. This work presents a new dataset for MDS that is large both in the total number of document clusters and in the size of individual clusters. We build this dataset by leveraging the Wikipedia Current Events Portal (WCEP), which provides concise and neutral human-written summaries of news events, with links to external source articles. We also automatically extend these source articles by looking for related articles in the Common Crawl archive. We provide a quantitative analysis of the dataset and empirical results for several state-of-the-art MDS techniques.

Task Selection Policies for Multitask Learning

Jul 14, 2019

Abstract:One of the questions that arises when designing models that learn to solve multiple tasks simultaneously is how much of the available training budget should be devoted to each individual task. We refer to any formalized approach to addressing this problem (learned or otherwise) as a task selection policy. In this work we provide an empirical evaluation of the performance of some common task selection policies in a synthetic bandit-style setting, as well as on the GLUE benchmark for natural language understanding. We connect task selection policy learning to existing work on automated curriculum learning and off-policy evaluation, and suggest a method based on counterfactual estimation that leads to improved model performance in our experimental settings.
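For readers unfamiliar with the bandit framing, the sketch below shows one very simple task selection policy: epsilon-greedy over tasks, where the reward for a task is a noisy measure of how much training on it helps. This is a generic baseline, not the counterfactual-estimation method the abstract proposes; the class name, the task names, and the synthetic Gaussian gains are all hypothetical.

```python
import random


class EpsilonGreedyTaskPolicy:
    """Mostly pick the task with the best running mean gain; sometimes explore."""

    def __init__(self, tasks, epsilon=0.1, seed=0):
        self.tasks = list(tasks)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {t: 0 for t in self.tasks}
        self.values = {t: 0.0 for t in self.tasks}

    def select(self):
        """Choose which task the next training batch is drawn from."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.tasks)
        return max(self.tasks, key=lambda t: self.values[t])

    def update(self, task, gain):
        """Incrementally update the running mean gain for the chosen task."""
        self.counts[task] += 1
        self.values[task] += (gain - self.values[task]) / self.counts[task]


def simulate(mean_gain, steps=1000, seed=0):
    """Synthetic bandit-style setting: each task yields a noisy gain around a fixed mean."""
    policy = EpsilonGreedyTaskPolicy(mean_gain, epsilon=0.1, seed=seed)
    env_rng = random.Random(seed + 1)
    for _ in range(steps):
        task = policy.select()
        gain = env_rng.gauss(mean_gain[task], 0.05)
        policy.update(task, gain)
    return policy


policy = simulate({"task_a": 0.2, "task_b": 0.8, "task_c": 0.5})
print(policy.counts)
```

In real multitask training the gain from a task changes as the model learns, so a policy with fixed running means like this one is only a starting point for the comparisons the abstract describes.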

Evaluating the Supervised and Zero-shot Performance of Multi-lingual Translation Models

Jun 24, 2019

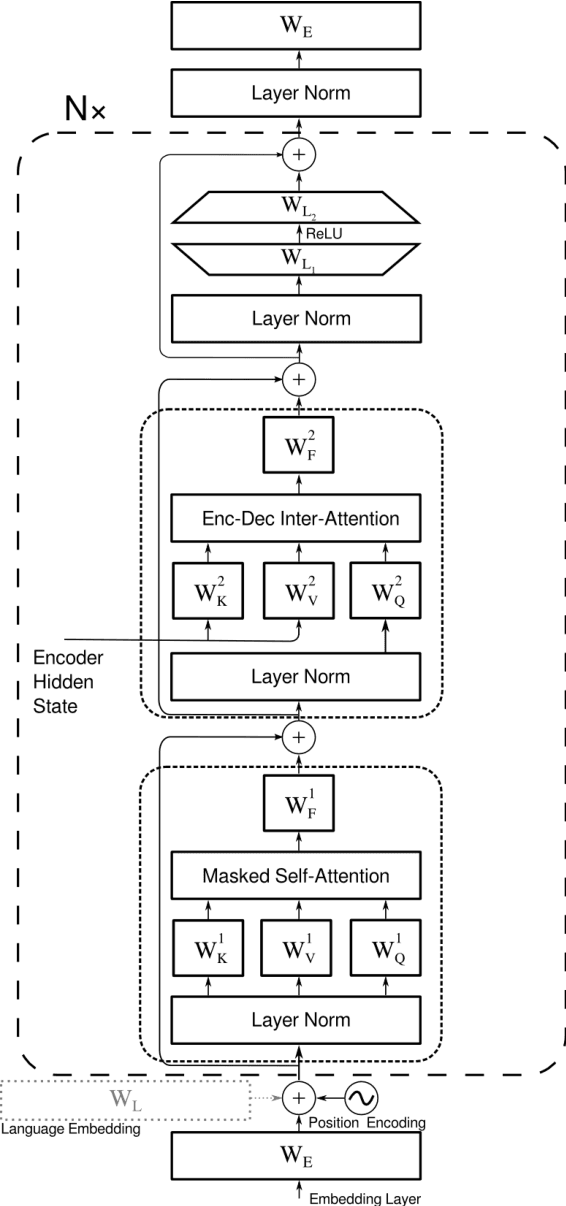

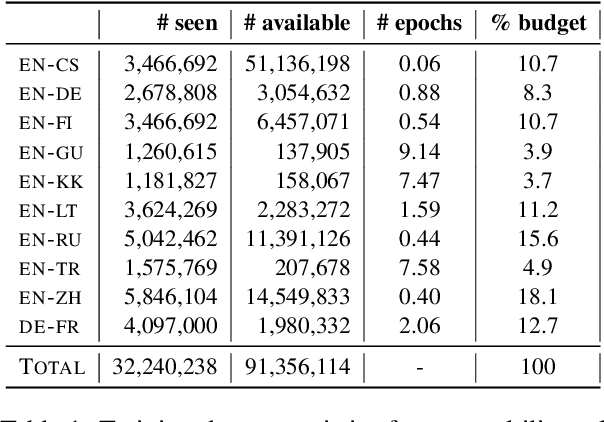

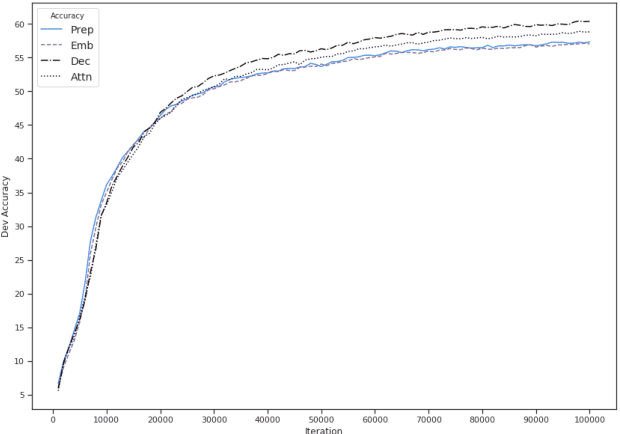

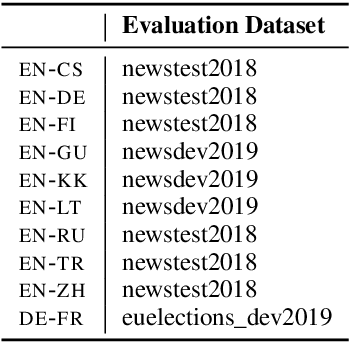

Abstract:We study several methods for full or partial sharing of the decoder parameters of multilingual NMT models. We evaluate both fully supervised and zero-shot translation performance in 110 unique translation directions using only the WMT 2019 shared task parallel datasets for training. We use additional test sets and re-purpose evaluation methods recently used for unsupervised MT in order to evaluate zero-shot translation performance for language pairs where no gold-standard parallel data is available. To our knowledge, this is the largest evaluation of multi-lingual translation yet conducted in terms of the total size of the training data we use, and in terms of the diversity of zero-shot translation pairs we evaluate. We conduct an in-depth evaluation of the translation performance of different models, highlighting the trade-offs between methods of sharing decoder parameters. We find that models which have task-specific decoder parameters outperform models where decoder parameters are fully shared across all tasks.
