Chloé Puget

On the Value of PHH3 for Mitotic Figure Detection on H&E-stained Images

Jun 28, 2024

Abstract: The count of mitotic figures (MFs) observed in hematoxylin and eosin (H&E)-stained slides is an important prognostic marker as it is a measure of tumor cell proliferation. However, the identification of MFs has a known low inter-rater agreement. Deep learning algorithms can standardize this task, but they require large amounts of annotated data for training and validation. Furthermore, label noise introduced during the annotation process may impede the algorithm's performance. Unlike H&E, the mitosis-specific antibody phospho-histone H3 (PHH3) specifically highlights MFs. Counting MFs on slides stained against PHH3 leads to higher agreement among raters and has therefore recently been used as a ground truth for the annotation of MFs in H&E. However, as PHH3 facilitates the recognition of cells that are indistinguishable with H&E staining alone, the use of this ground truth could potentially introduce noise into the H&E-related dataset, impacting model performance. This study analyzes the impact of PHH3-assisted MF annotation on inter-rater reliability and object-level agreement through an extensive multi-rater experiment. We found that the annotators' object-level agreement increased when using PHH3-assisted labeling. Subsequently, MF detectors were evaluated on the resulting datasets to investigate the influence of PHH3-assisted labeling on the models' performance. Additionally, a novel dual-stain MF detector was developed to investigate the interpretation shift of PHH3-assisted labels used in H&E, which clearly outperformed single-stain detectors. However, the PHH3-assisted labels did not have a positive effect on solely H&E-based models. The high performance of our dual-input detector reveals an information mismatch between the H&E and PHH3-stained images as the cause of this effect.

Deep Learning model predicts the c-Kit-11 mutational status of canine cutaneous mast cell tumors by HE stained histological slides

Jan 02, 2024

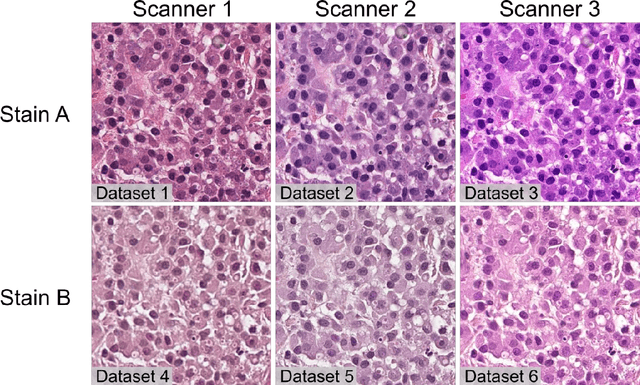

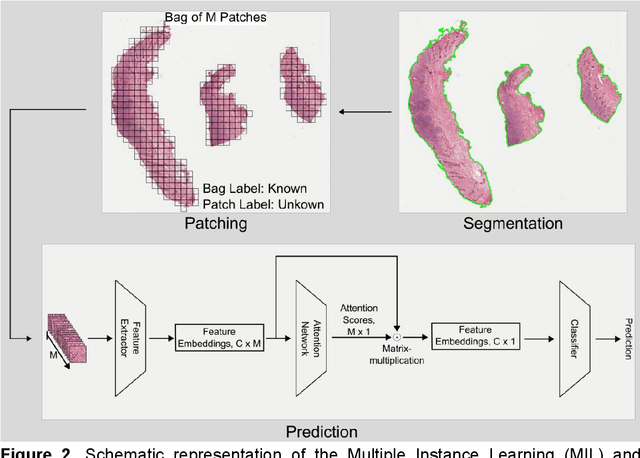

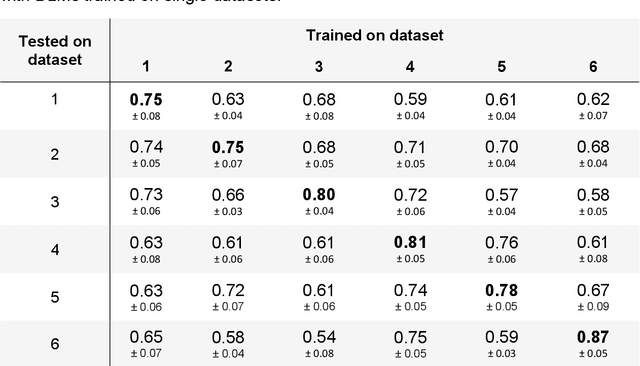

Abstract: Numerous prognostic factors are currently assessed histopathologically in biopsies of canine mast cell tumors (MCTs) to evaluate clinical behavior. In addition, PCR analysis of the c-Kit exon 11 mutational status is often performed to evaluate the potential success of a tyrosine kinase inhibitor therapy. This project aimed at training deep learning models (DLMs) to identify the c-Kit-11 mutational status of MCTs solely based on morphology without additional molecular analysis. HE slides of 195 mutated and 173 non-mutated tumors were stained consecutively in two different laboratories and scanned with three different slide scanners. This resulted in six different datasets (stain-scanner variations) of whole slide images. DLMs were trained with single and mixed datasets and their performance was assessed under scanner and staining domain shifts. The DLMs correctly classified HE slides according to their c-Kit 11 mutation status in, on average, 87% of cases for the best-suited stain-scanner variant. A relevant performance drop could be observed when the stain-scanner combination of the training and test dataset differed. Multi-variant datasets improved the average accuracy but did not reach the maximum accuracy of algorithms trained and tested on the same stain-scanner variant. In summary, DLM-assisted morphological examination of MCTs can predict c-Kit-exon 11 mutational status of MCTs with high accuracy. However, the recognition performance is impeded by a change of scanner or staining protocol. Larger data sets with higher numbers of scans originating from different laboratories and scanners may lead to more robust DLMs to identify c-Kit mutations in HE slides.

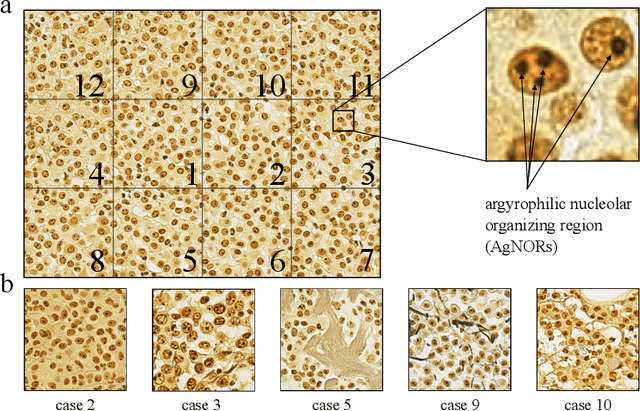

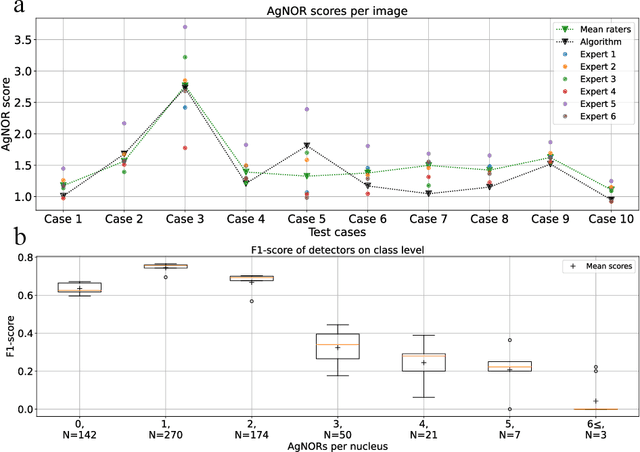

Deep Learning-Based Automatic Assessment of AgNOR-scores in Histopathology Images

Dec 15, 2022

Abstract: Nucleolar organizer regions (NORs) are parts of the DNA that are involved in RNA transcription. Due to the silver affinity of associated proteins, argyrophilic NORs (AgNORs) can be visualized using silver-based staining. The average number of AgNORs per nucleus has been shown to be a prognostic factor for predicting the outcome of many tumors. Since manual detection of AgNORs is laborious, automation is of high interest. We present a deep learning-based pipeline for automatically determining the AgNOR-score from histopathological sections. An additional annotation experiment was conducted with six pathologists to provide an independent performance evaluation of our approach. Across all raters and images, we found a mean squared error of 0.054 between the AgNOR-scores of the experts and those of the model, indicating that our approach offers performance comparable to humans.
