Chengyao Li

Variable Impedance Control for Floating-Base Supernumerary Robotic Leg in Walking Assistance

Nov 15, 2025

Abstract:In human-robot systems, ensuring safety during force control in the presence of both internal and external disturbances is crucial. As a typical loosely coupled floating-base robot system, the supernumerary robotic leg (SRL) system is particularly susceptible to strong internal disturbances. To address the challenge posed by the floating base, we investigated the dynamics model of the loosely coupled SRL and designed a hybrid position/force impedance controller to fit dynamic torque input. An efficient variable impedance control (VIC) method is developed to enhance human-robot interaction, particularly in scenarios involving external force disturbances. By dynamically adjusting impedance parameters, VIC improves the dynamic switching between rigidity and flexibility, so that it can adapt to unknown environmental disturbances in different states. An efficient, real-time, stability-guaranteed network for generating impedance parameters is specifically designed for the proposed SRL to achieve shock mitigation and high-rigidity support. Simulations and experiments validate the system's effectiveness, demonstrating its ability to maintain smooth signal transitions in flexible states while providing strong support forces in rigid states. This approach provides a practical solution for accommodating individual gait variations in interaction, and significantly advances the safety and adaptability of human-robot systems.
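The idea of switching between flexible and rigid behaviour by adjusting impedance parameters can be illustrated with a generic spring-damper law. This is a minimal sketch, not the paper's controller or its parameter-generating network: the function names, the blending variable `alpha`, and all numeric values are assumptions made for illustration. Fixing the damping ratio as stiffness changes is one common design choice and does not by itself provide the stability guarantee the abstract refers to.

```python
import math


def blend_stiffness(k_soft, k_rigid, alpha):
    """Interpolate stiffness between a compliant and a rigid setting.

    alpha is a hypothetical scalar in [0, 1]: 0 = fully compliant, 1 = fully rigid.
    """
    alpha = min(max(alpha, 0.0), 1.0)  # clamp so stiffness stays between the two bounds
    return (1.0 - alpha) * k_soft + alpha * k_rigid


def impedance_force(k, mass, x_des, x, v_des, v, zeta=1.0):
    """Spring-damper impedance law: F = K (x_d - x) + D (v_d - v).

    Damping is set to D = 2 * zeta * sqrt(K * m), so the damping ratio
    stays at zeta while the stiffness K is varied.
    """
    d = 2.0 * zeta * math.sqrt(k * mass)
    return k * (x_des - x) + d * (v_des - v)


# Illustrative values only (N/m, kg, m, m/s).
k = blend_stiffness(k_soft=200.0, k_rigid=5000.0, alpha=0.25)
force = impedance_force(k, mass=2.0, x_des=0.0, x=0.01, v_des=0.0, v=0.0)
```

Raising `alpha` toward 1 makes the same position error produce a larger restoring force, which corresponds to the rigid supporting state; lowering it gives the compliant state used for shock mitigation.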

Survey of the Detection and Classification of Pulmonary Lesions via CT and X-Ray

Dec 31, 2020

Abstract:In recent years, the prevalence of several pulmonary diseases, especially the coronavirus disease 2019 (COVID-19) pandemic, has attracted worldwide attention. These diseases can be effectively diagnosed and treated with the help of lung imaging. With the development of deep learning technology and the emergence of many public medical image datasets, the diagnosis of lung diseases via medical imaging has been further improved. This article reviews pulmonary CT and X-ray image detection and classification in the last decade. It also provides an overview of the detection of lung nodules, pneumonia, and other common lung lesions based on the imaging characteristics of various lesions. Furthermore, this review introduces 26 commonly used public medical image datasets, summarizes the latest technology, and discusses current challenges and future research directions.

Confidence Guided Stereo 3D Object Detection with Split Depth Estimation

Mar 11, 2020

Abstract:Accurate and reliable 3D object detection is vital to safe autonomous driving. Despite recent developments, the performance gap between stereo-based methods and LiDAR-based methods is still considerable. Accurate depth estimation is crucial to the performance of stereo-based 3D object detection methods, particularly for those pixels associated with objects in the foreground. Moreover, stereo-based methods suffer from high variance in the depth estimation accuracy, which is often not considered in the object detection pipeline. To tackle these two issues, we propose CG-Stereo, a confidence-guided stereo 3D object detection pipeline that uses separate decoders for foreground and background pixels during depth estimation, and leverages the confidence estimation from the depth estimation network as a soft attention mechanism in the 3D object detector. Our approach outperforms all state-of-the-art stereo-based 3D detectors on the KITTI benchmark.
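Using depth confidence as soft attention can be pictured as scaling each point's features by how much its depth estimate is trusted. The sketch below is an assumption-laden illustration, not the CG-Stereo implementation: the function name, the plain-list data layout, and the clamping to [0, 1] are all hypothetical.

```python
def apply_confidence_attention(features, confidences):
    """Scale each point's feature vector by its depth confidence.

    features: list of per-point feature lists (hypothetical layout).
    confidences: list of per-point confidences, expected in [0, 1].
    """
    if len(features) != len(confidences):
        raise ValueError("features and confidences must have the same length")
    weighted = []
    for feat, conf in zip(features, confidences):
        c = min(max(conf, 0.0), 1.0)  # clamp so the weight acts as a soft gate
        weighted.append([c * f for f in feat])
    return weighted


# Two points: the second has a less reliable depth estimate.
weighted = apply_confidence_attention([[1.0, 2.0], [4.0, 8.0]], [1.0, 0.5])
```

Unlike a hard threshold, low-confidence points are attenuated rather than discarded, so the detector can still use them when nothing better is available.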

Visual Measurement Integrity Monitoring for UAV Localization

Sep 18, 2019

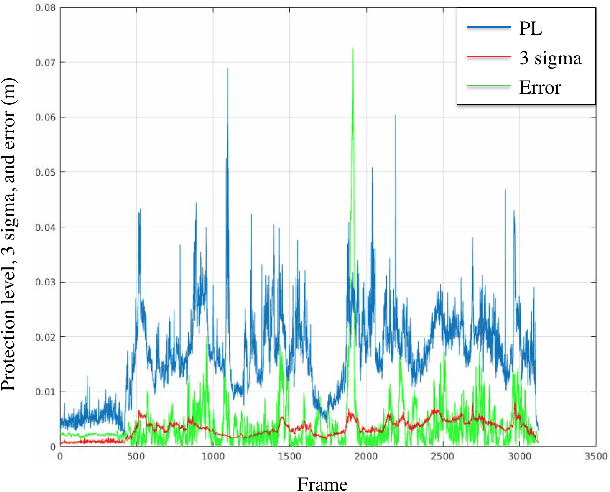

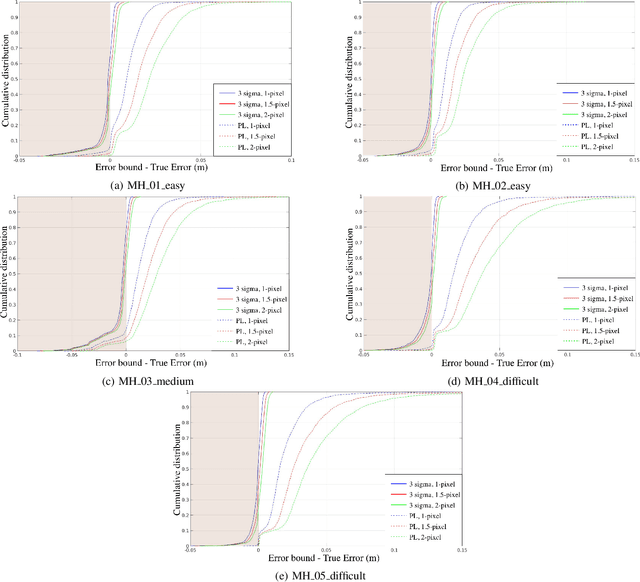

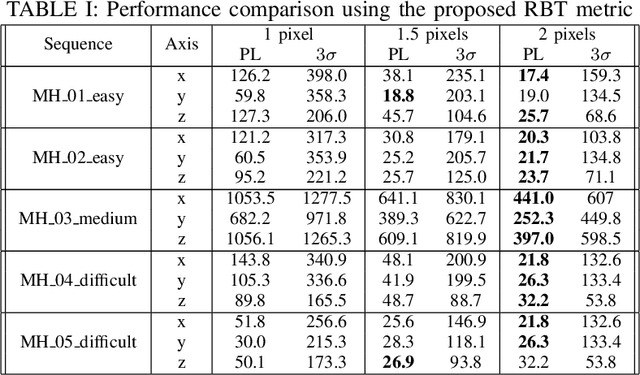

Abstract:Unmanned aerial vehicles (UAVs) have increasingly been adopted for safety, security, and rescue missions, for which they need precise and reliable pose estimates relative to their environment. To ensure mission safety when relying on visual perception, it is essential to have an approach to assess the integrity of the visual localization solution. However, to the best of our knowledge, such an approach does not exist for optimization-based visual localization. Receiver autonomous integrity monitoring (RAIM) has been widely used in global navigation satellite systems (GNSS) applications such as automated aircraft landing. In this paper, we propose a novel approach inspired by RAIM to monitor the integrity of optimization-based visual localization and calculate the protection level of a state estimate, i.e. the largest possible translational error in each direction. We also propose a metric that quantitatively evaluates the performance of the error bounds. Finally, we validate the protection level using the EuRoC dataset and demonstrate that the proposed protection level provides a significantly more reliable bound than the commonly used $3\sigma$ method.
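For reference, the $3\sigma$ baseline mentioned in the abstract bounds the error in one direction by three standard deviations of the estimator's reported uncertainty. The sketch below shows only that baseline and a simple check of whether a true error falls inside a bound; it does not reproduce the proposed protection level or the paper's evaluation metric, and the function names and numbers are assumptions.

```python
import math


def three_sigma_bound(variance):
    """Return the 3-sigma error bound for one translational direction."""
    if variance < 0.0:
        raise ValueError("variance must be non-negative")
    return 3.0 * math.sqrt(variance)


def is_bounded(error, bound):
    """True if the absolute error lies within the bound."""
    return abs(error) <= bound


# Hypothetical values: reported variance 0.04 m^2, so sigma = 0.2 m.
bound = three_sigma_bound(0.04)
inside = is_bounded(0.5, bound)    # error smaller than the bound
outside = is_bounded(-0.7, bound)  # error exceeds the bound
```

A bound is only useful for integrity monitoring if the true error rarely falls outside it, which is the failure case the `outside` example represents.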

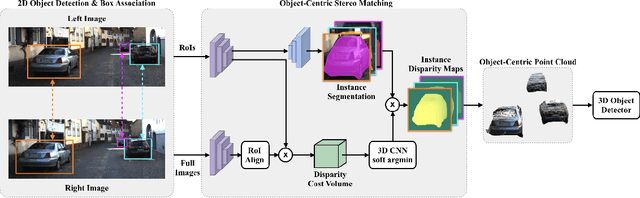

Object-Centric Stereo Matching for 3D Object Detection

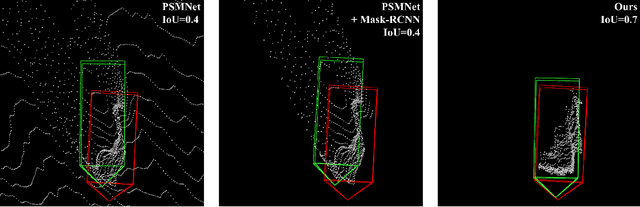

Sep 17, 2019

Abstract:Safe autonomous driving requires reliable 3D object detection: determining the 6 DoF pose and dimensions of objects of interest. Using stereo cameras to solve this task is a cost-effective alternative to the widely used LiDAR sensor. The current state-of-the-art for stereo 3D object detection takes the existing PSMNet stereo matching network, with no modifications, converts the estimated disparities into a 3D point cloud, and feeds this point cloud into a LiDAR-based 3D object detector. The issue with existing stereo matching networks is that they are designed for disparity estimation, not 3D object detection; the shape and accuracy of object point clouds are not the focus. Stereo matching networks commonly suffer from inaccurate depth estimates at object boundaries, which we define as streaking, because background and foreground points are jointly estimated. Existing networks also penalize disparity instead of the estimated position of object point clouds in their loss functions. We propose a novel 2D box association and object-centric stereo matching method that only estimates the disparities of the objects of interest to address these two issues. Our method achieves state-of-the-art results on the KITTI 3D and BEV benchmarks.
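The step of converting disparities into a 3D point cloud follows from standard rectified-stereo pinhole geometry: depth is $Z = fB/d$, and the pixel is then back-projected through the camera intrinsics. The sketch below shows that generic conversion for a single pixel; it is not the paper's pipeline, and the function name and camera parameters are hypothetical values chosen for easy arithmetic.

```python
def disparity_to_point(u, v, disparity, focal_px, baseline_m, cx, cy):
    """Back-project one pixel with a known disparity into camera coordinates.

    u, v: pixel coordinates; disparity and focal_px in pixels;
    baseline_m in metres; (cx, cy) is the principal point in pixels.
    """
    if disparity <= 0.0:
        raise ValueError("disparity must be positive")
    z = focal_px * baseline_m / disparity  # depth along the optical axis
    x = (u - cx) * z / focal_px            # lateral offset
    y = (v - cy) * z / focal_px            # vertical offset
    return x, y, z


# Hypothetical camera: 700 px focal length, 0.5 m baseline.
point = disparity_to_point(
    u=670.0, v=180.0, disparity=35.0,
    focal_px=700.0, baseline_m=0.5, cx=600.0, cy=180.0,
)
```

Because depth is inversely proportional to disparity, a fixed disparity error produces a larger position error for distant objects, which is one reason a loss on disparity and a loss on 3D position can behave differently.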
