Visual Measurement Integrity Monitoring for UAV Localization


Sep 18, 2019

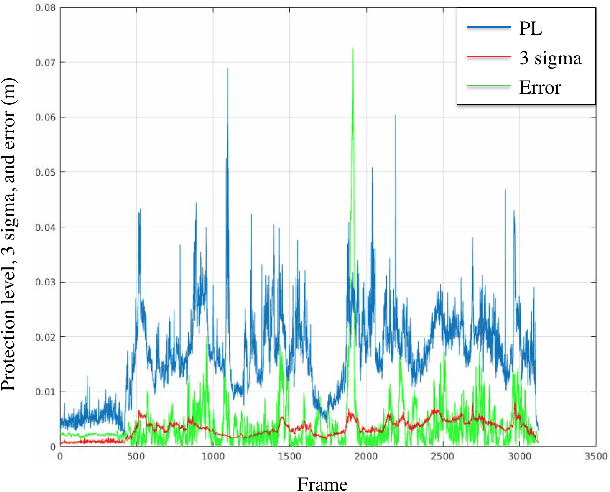

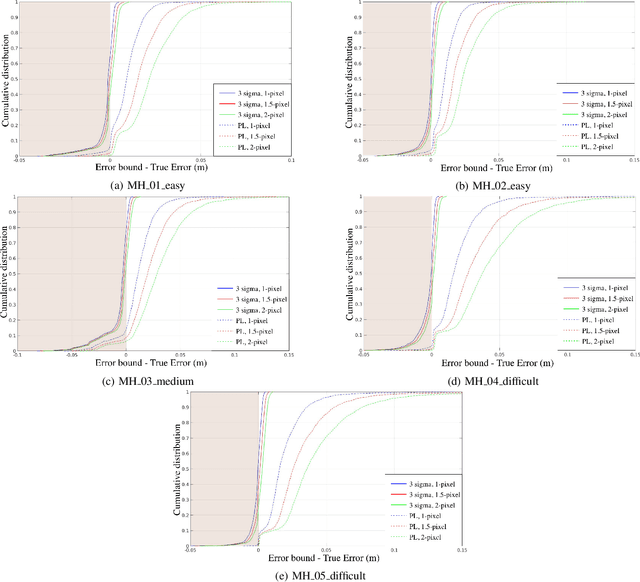

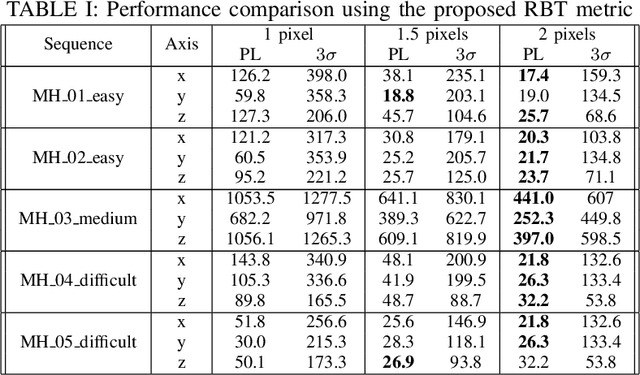

Unmanned aerial vehicles (UAVs) are increasingly used for safety, security, and rescue missions, which require precise and reliable pose estimates relative to the environment. To ensure mission safety when relying on visual perception, it is essential to be able to assess the integrity of the visual localization solution. However, to the best of our knowledge, no such approach exists for optimization-based visual localization. Receiver autonomous integrity monitoring (RAIM) has been widely used in global navigation satellite system (GNSS) applications such as automated aircraft landing. In this paper, we propose a novel approach inspired by RAIM to monitor the integrity of optimization-based visual localization and to calculate the protection level of a state estimate, i.e., the largest possible translational error in each direction. We also propose a metric that quantitatively evaluates the performance of the error bounds. Finally, we validate the protection level on the EuRoC dataset and demonstrate that it provides a significantly more reliable bound than the commonly used $3\sigma$ method.
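For readers unfamiliar with the $3\sigma$ baseline mentioned above, the following minimal sketch shows one common way such a bound is formed and checked: each axis is bounded by three times the square root of the estimated position variance, and a sample counts as bounded only if the true error stays within that bound on every axis. This is not the paper's protection level or its proposed evaluation metric. The function names (`three_sigma_bounds`, `fraction_bounded`) and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def three_sigma_bounds(variances):
    """Per-axis 3-sigma bound from per-axis position variances (assumed diagonal)."""
    return [3.0 * math.sqrt(v) for v in variances]


def fraction_bounded(errors, bounds):
    """Fraction of samples whose absolute error is within the bound on every axis."""
    inside = sum(
        all(abs(e) <= b for e, b in zip(err, bnd))
        for err, bnd in zip(errors, bounds)
    )
    return inside / len(errors)


# Hypothetical per-frame (x, y, z) variances in m^2 and true errors in m.
variances = [(0.0004, 0.0004, 0.0009), (0.0004, 0.0004, 0.0009)]
errors = [(0.01, -0.02, 0.05), (0.10, 0.00, 0.01)]

bounds = [three_sigma_bounds(v) for v in variances]
print(fraction_bounded(errors, bounds))
```

In this toy data the second frame has an x error of 0.10 m against a bound of about 0.06 m, so only half of the frames are bounded. A bound that is violated this way is the kind of failure an integrity monitor is meant to expose.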
