Chenghong Bian

Bridging Visual and Wireless Sensing: A Unified Radiation Field for 3D Radio Map Construction

Jan 27, 2026

Abstract:The emerging applications of next-generation wireless networks (e.g., immersive 3D communication, low-altitude networks, and integrated sensing and communication) necessitate high-fidelity environmental intelligence. 3D radio maps have emerged as a critical tool for this purpose, enabling spectrum-aware planning and environment-aware sensing by bridging the gap between physical environments and electromagnetic signal propagation. However, constructing accurate 3D radio maps requires fine-grained 3D geometric information and a profound understanding of electromagnetic wave propagation. Existing approaches typically treat optical and wireless knowledge as distinct modalities, failing to exploit the fundamental physical principles governing both light and electromagnetic propagation. To bridge this gap, we propose URF-GS, a unified radio-optical radiation field representation framework for accurate and generalizable 3D radio map construction based on 3D Gaussian splatting (3D-GS) and inverse rendering. By fusing visual and wireless sensing observations, URF-GS recovers scene geometry and material properties while accurately predicting radio signal behavior at arbitrary transmitter-receiver (Tx-Rx) configurations. Experimental results demonstrate that URF-GS achieves up to a 24.7% improvement in spatial spectrum prediction accuracy and a 10x increase in sample efficiency for 3D radio map construction compared with neural radiance field (NeRF)-based methods. This work establishes a foundation for next-generation wireless networks by integrating perception, interaction, and communication through holistic radiation field reconstruction.

Towards AI-Native Fronthaul: Neural Compression for NextG Cloud RAN

Jun 07, 2025

Abstract:The rapid growth of data traffic and the emerging AI-native wireless architectures in NextG cellular systems place new demands on the fronthaul links of Cloud Radio Access Networks (C-RAN). In this paper, we investigate neural compression techniques for the Common Public Radio Interface (CPRI), aiming to reduce the fronthaul bandwidth while preserving signal quality. We introduce two deep learning-based compression algorithms designed to optimize the transformation of wireless signals into bit sequences for CPRI transmission. The first algorithm utilizes a non-linear transformation coupled with scalar/vector quantization based on a learned codebook. The second algorithm generates a latent vector transformed into a variable-length output bit sequence via arithmetic encoding, guided by the predicted probability distribution of each latent element. Novel techniques such as a shared weight model for storage-limited devices and a successive refinement model for managing multiple CPRI links with varying Quality of Service (QoS) are proposed. Extensive simulation results demonstrate notable Error Vector Magnitude (EVM) gains with improved rate-distortion performance for both algorithms compared to traditional methods. The proposed solutions are robust to variations in channel conditions, modulation formats, and noise levels, highlighting their potential for enabling efficient and scalable fronthaul in NextG AI-native networks as well as aligning with the current 3GPP research directions.
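To make the codebook quantization and the EVM metric mentioned in the abstract above more concrete, here is a minimal sketch in plain Python. It is not the paper's algorithm: the learned non-linear transform is omitted, the 4-level `CODEBOOK` is a hand-picked placeholder for a learned one, and the function names (`quantize`, `dequantize`, `evm_percent`) are hypothetical. EVM is computed with the common RMS definition, normalized by the reference signal power.

```python
import math

def quantize(samples, codebook):
    """Map each real sample to the index of its nearest codeword."""
    return [min(range(len(codebook)), key=lambda k: abs(s - codebook[k]))
            for s in samples]

def dequantize(indices, codebook):
    """Recover the codeword values from their indices."""
    return [codebook[k] for k in indices]

def evm_percent(reference, reconstructed):
    """RMS error vector magnitude relative to the reference power, in percent."""
    err = sum(abs(r - x) ** 2 for r, x in zip(reference, reconstructed))
    ref = sum(abs(r) ** 2 for r in reference)
    return 100.0 * math.sqrt(err / ref)

# Assumption: a fixed 2-bit codebook standing in for a learned one.
CODEBOOK = [-0.75, -0.25, 0.25, 0.75]

# Toy complex baseband (I/Q) samples; I and Q are quantized separately.
iq = [0.9 + 0.1j, -0.3 + 0.6j, 0.2 - 0.8j, -0.7 - 0.2j]
i_idx = quantize([z.real for z in iq], CODEBOOK)
q_idx = quantize([z.imag for z in iq], CODEBOOK)
iq_hat = [complex(a, b) for a, b in zip(dequantize(i_idx, CODEBOOK),
                                        dequantize(q_idx, CODEBOOK))]

print("indices:", i_idx, q_idx)
print("EVM [%]:", evm_percent(iq, iq_hat))
```

With 2 bits per real dimension, each I/Q sample costs 4 bits on the link; a larger or better-fitted codebook trades more bits for a lower EVM.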

Realizing Fully-Connected Layers Over the Air via Reconfigurable Intelligent Surfaces

May 02, 2025

Abstract:By leveraging the waveform superposition property of the multiple access channel, over-the-air computation (AirComp) enables the execution of digital computations through analog means in the wireless domain, leading to faster processing and reduced latency. In this paper, we propose a novel approach to implement a neural network (NN) consisting of digital fully connected (FC) layers using physically reconfigurable hardware. Specifically, we investigate reconfigurable intelligent surface (RIS)-assisted multiple-input multiple-output (MIMO) systems to emulate the functionality of an NN for over-the-air inference. In this setup, both the RIS and the transceiver are jointly configured to manipulate the ambient wireless propagation environment, effectively reproducing the adjustable weights of a digital FC layer. We refer to this new computational paradigm as AirFC. We formulate an imitation error minimization problem between the effective channel created by the RIS and a target FC layer by jointly optimizing over-the-air parameters. To solve this non-convex optimization problem, an extremely low-complexity alternating optimization algorithm is proposed, where semi-closed-form/closed-form solutions for all optimization variables are derived. Simulation results show that the RIS-assisted MIMO-based AirFC can achieve competitive classification accuracy. Furthermore, it is also shown that a multi-RIS configuration significantly outperforms a single-RIS setup, particularly in line-of-sight (LoS)-dominated channels.

Over-the-Air Inference over Multi-hop MIMO Networks

May 01, 2025

Abstract:A novel over-the-air machine learning framework over multi-hop multiple-input and multiple-output (MIMO) networks is proposed. The core idea is to imitate fully connected (FC) neural network layers using multiple MIMO channels by carefully designing the precoding matrices at the transmitting nodes. A neural network dubbed PrototypeNet is employed consisting of multiple FC layers, with the number of neurons of each layer equal to the number of antennas of the corresponding terminal. To achieve satisfactory performance, we train PrototypeNet based on a customized loss function consisting of classification error and the power of latent vectors to satisfy transmit power constraints, with noise injection during training. Precoding matrices for each hop are then obtained by solving an optimization problem. We also propose a multiple-block extension when the number of antennas is limited. Numerical results verify that the proposed over-the-air transmission scheme can achieve satisfactory classification accuracy under a power constraint. The results also show that higher classification accuracy can be achieved with an increasing number of hops at a modest signal-to-noise ratio (SNR).
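The core idea above, choosing a precoder so that the channel itself applies an FC layer's weights, can be illustrated with a toy sketch. It rests on strong simplifying assumptions that are not from the paper: a real-valued, square, invertible 2x2 channel `H`, no noise, and no transmit power constraint, so the precoder is simply `P = H^{-1} W`. The paper instead obtains the precoding matrices by solving an optimization problem; all names and numbers here are hypothetical.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def inv2(M):
    """Inverse of a 2x2 matrix (assumes a non-zero determinant)."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

# Hypothetical real-valued 2x2 MIMO channel and target FC weight matrix.
H = [[2.0, 1.0], [1.0, 1.0]]
W = [[0.5, -1.0], [2.0, 0.0]]

# Precoder chosen so that the effective channel H @ P equals W.
P = matmul(inv2(H), W)

# Noise-free reception y = H (P x) then matches the FC layer output W x.
x = [[1.0], [2.0]]
y_air = matmul(H, matmul(P, x))
y_fc = matmul(W, x)
print(y_air, y_fc)
```

In a realistic setting the channel is complex-valued and noisy and the transmit power is bounded, so an exact match is generally not attainable and the imitation error has to be minimized instead.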

Sparse Regression Codes for Integrated Passive Sensing and Communications

Nov 08, 2024

Abstract:We propose a novel integrated sensing and communication (ISAC) system, where the base station (BS) passively senses the channel parameters using the information-carrying signals from a user. To simultaneously guarantee decoding and sensing performance, the user adopts sparse regression codes (SPARCs) with cyclic redundancy check (CRC) to transmit its information bits. The BS generates an initial coarse estimate of the channel parameters after receiving the pilot signal. Then, a novel iterative decoding and parameter sensing algorithm is proposed, where the correctly decoded codewords indicated by the CRC bits are utilized to improve the sensing and channel estimation performance at the BS. In turn, the improved estimate of the channel parameters leads to better decoding performance. Simulation results show the effectiveness of the proposed iterative decoding and sensing algorithm, where both the sensing and the communication performance are significantly improved within a few iterations. Extensive ablation studies concerning different channel estimation methods and numbers of CRC bits are carried out for a comprehensive evaluation of the proposed scheme.
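For readers unfamiliar with SPARCs, the sketch below shows the standard encoding structure: the message vector is split into `L` sections of `M` entries, each group of log2(M) bits selects one non-zero entry per section, and the codeword is the design matrix times that sparse vector. This is a generic illustration rather than the paper's system: CRC attachment, power allocation, and the channel are omitted, the non-zero value is fixed to 1.0, and the function name `sparc_encode` and all sizes are hypothetical.

```python
import random

def sparc_encode(bits, A, L, M):
    """Encode bits into a SPARC codeword A @ beta.

    beta has L sections of M entries; each section carries log2(M) bits
    through the position of its single non-zero entry.
    """
    bits_per_section = M.bit_length() - 1  # log2(M), M assumed a power of two
    assert 1 << bits_per_section == M
    assert len(bits) == L * bits_per_section
    beta = [0.0] * (L * M)
    for sec in range(L):
        chunk = bits[sec * bits_per_section:(sec + 1) * bits_per_section]
        idx = int("".join(str(b) for b in chunk), 2)
        beta[sec * M + idx] = 1.0  # simplification: unit amplitude everywhere
    codeword = [sum(row[j] * beta[j] for j in range(L * M)) for row in A]
    return beta, codeword

# Hypothetical sizes: 3 sections of 4 entries, codeword length 8.
L, M, n = 3, 4, 8
rng = random.Random(0)
# Gaussian design matrix with variance 1/n, a common choice for SPARCs.
A = [[rng.gauss(0.0, (1.0 / n) ** 0.5) for _ in range(L * M)] for _ in range(n)]

bits = [1, 0, 0, 1, 1, 1]  # selects entries 2, 1 and 3 of the three sections
beta, codeword = sparc_encode(bits, A, L, M)
print([j for j, b in enumerate(beta) if b != 0.0])
```

Because every valid message vector has exactly one non-zero entry per section, a decoder can exploit this sparsity structure when recovering the bits.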

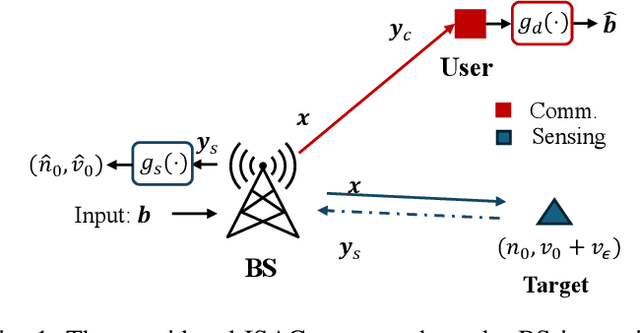

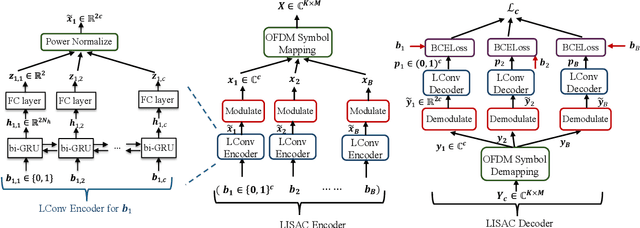

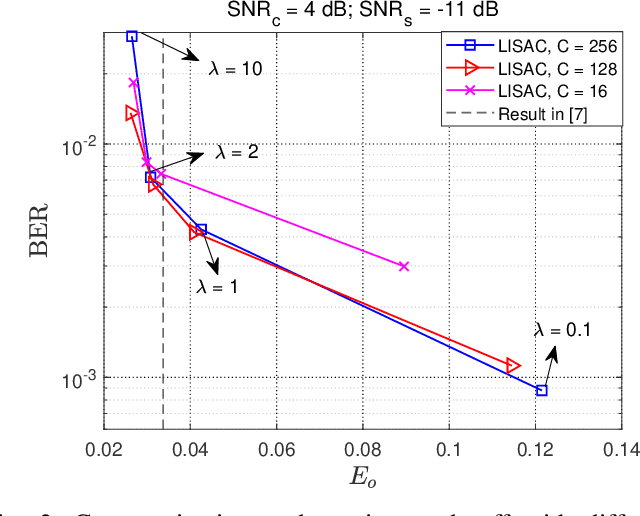

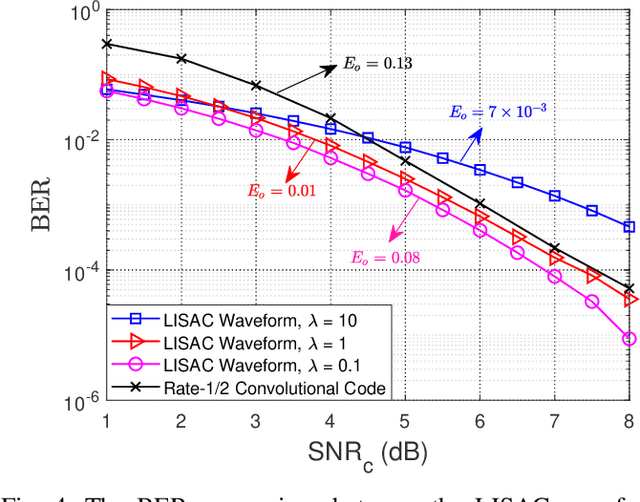

LISAC: Learned Coded Waveform Design for ISAC with OFDM

Oct 14, 2024

Abstract:We propose a novel deep learning-based method to design a coded waveform for an integrated sensing and communication (ISAC) system based on orthogonal frequency-division multiplexing (OFDM). Our ultimate goal is to design a coded waveform capable of providing satisfactory sensing performance of the target while maintaining high communication quality measured in terms of the bit error rate (BER). The proposed LISAC provides an improved waveform design with the assistance of deep neural networks for the encoding and decoding of the information bits. In particular, the transmitter, parameterized by a recurrent neural network (RNN), encodes the input bit sequence into the transmitted waveform for both sensing and communications. The receiver employs an RNN-based decoder to decode the information bits while the transmitter senses the target via maximum likelihood detection. We optimize the system considering both the communication and sensing performance. Simulation results show that the proposed LISAC waveform achieves a better trade-off curve compared to existing alternatives.

A Deep Joint Source-Channel Coding Scheme for Hybrid Mobile Multi-hop Networks

May 15, 2024

Abstract:Efficient data transmission across mobile multi-hop networks that connect edge devices to core servers presents significant challenges, particularly due to the variability in link qualities between wireless and wired segments. This variability necessitates a robust transmission scheme that transcends the limitations of existing deep joint source-channel coding (DeepJSCC) strategies, which often struggle at the intersection of analog and digital methods. Addressing this need, this paper introduces a novel hybrid DeepJSCC framework, h-DJSCC, tailored for effective image transmission from edge devices through a network architecture that includes initial wireless transmission followed by multiple wired hops. Our approach harnesses the strengths of DeepJSCC for the initial, variable-quality wireless link to avoid the cliff effect inherent in purely digital schemes. For the subsequent wired hops, which feature more stable and high-capacity connections, we implement digital compression and forwarding techniques to prevent noise accumulation. This dual-mode strategy is adaptable even in scenarios with limited knowledge of the image distribution, enhancing the framework's robustness and utility. Extensive numerical simulations demonstrate that our hybrid solution outperforms traditional fully digital approaches by effectively managing transitions between different network segments and optimizing for variable signal-to-noise ratios (SNRs). We also introduce a fully adaptive h-DJSCC architecture capable of adjusting to different network conditions and achieving diverse rate-distortion objectives, thereby reducing the memory requirements on network nodes.

Process-and-Forward: Deep Joint Source-Channel Coding Over Cooperative Relay Networks

Mar 15, 2024

Abstract:This paper introduces an innovative deep joint source-channel coding (DeepJSCC) approach to image transmission over a cooperative relay channel. The relay either amplifies and forwards a scaled version of its received signal, referred to as DeepJSCC-AF, or leverages neural networks to extract relevant features about the source signal before forwarding it to the destination, which we call DeepJSCC-PF (Process-and-Forward). In the full-duplex scheme, inspired by the block Markov coding (BMC) concept, we introduce a novel block transmission strategy built upon a novel vision transformer architecture. In the proposed scheme, the source transmits information in blocks, and the relay updates its knowledge about the input signal after each block and generates its own signal to be conveyed to the destination. To enhance practicality, we introduce an adaptive transmission model, which allows a single trained DeepJSCC model to adapt seamlessly to various channel qualities, making it a versatile solution. Simulation results demonstrate the superior performance of our proposed DeepJSCC compared to the state-of-the-art BPG image compression algorithm, even when operating at the maximum achievable rate of conventional decode-and-forward and compress-and-forward protocols, for both half-duplex and full-duplex relay scenarios.

Point Cloud in the Air

Jan 01, 2024

Abstract:Acquisition and processing of point clouds (PCs) is a crucial enabler for many emerging applications reliant on 3D spatial data, such as robot navigation, autonomous vehicles, and augmented reality. In most scenarios, PCs acquired by remote sensors must be transmitted to an edge server for fusion, segmentation, or inference. Wireless transmission of PCs not only puts an increased burden on the already congested wireless spectrum, but also confronts a unique set of challenges arising from the irregular and unstructured nature of PCs. In this paper, we meticulously delineate these challenges and offer a comprehensive examination of existing solutions while candidly acknowledging their inherent limitations. In response to these intricacies, we proffer four pragmatic solution frameworks, spanning advanced techniques, hybrid schemes, and distributed data aggregation approaches. In doing so, our goal is to chart a path toward efficient, reliable, and low-latency wireless PC transmission.

A Hybrid Joint Source-Channel Coding Scheme for Mobile Multi-hop Networks

Nov 13, 2023

Abstract:We propose a novel hybrid joint source-channel coding (JSCC) scheme for robust image transmission over multi-hop networks. In the considered scenario, a mobile user wants to deliver an image to its destination over a mobile cellular network. We assume a practical setting, where the links between the nodes belonging to the mobile core network are stable and of high quality, while the link between the mobile user and the first node (e.g., the access point) is potentially time-varying with poorer quality. In recent years, neural network-based JSCC schemes (called DeepJSCC) have emerged as promising solutions to overcome the limitations of separation-based fully digital schemes. However, relying on analog transmission, DeepJSCC suffers from noise accumulation over multi-hop networks. Moreover, most of the hops within the mobile core network may be high-capacity wireless connections, calling for digital approaches. To this end, we propose a hybrid solution, where DeepJSCC is adopted for the first hop, while the received signal at the first relay is digitally compressed and forwarded through the mobile core network. We show through numerical simulations that the proposed scheme is able to outperform both the fully analog and fully digital schemes. Thanks to DeepJSCC, it can avoid the cliff effect over the first hop, while also avoiding noise forwarding over the mobile core network thanks to digital transmission. We believe this work paves the way for the practical deployment of DeepJSCC solutions in 6G and future wireless networks.
