Changhee Han

All-in-one platform for AI R&D in medical imaging, encompassing data collection, selection, annotation, and pre-processing

Mar 10, 2024

Abstract:Deep Learning is advancing medical imaging Research and Development (R&D), leading to the frequent clinical use of Artificial Intelligence/Machine Learning (AI/ML)-based medical devices. However, to advance AI R&D, two challenges arise: 1) significant data imbalance, with most data from Europe/America and under 10% from Asia, despite its 60% global population share; and 2) hefty time and investment needed to curate proprietary datasets for commercial use. In response, we established the first commercial medical imaging platform, encompassing steps like: 1) data collection, 2) data selection, 3) annotation, and 4) pre-processing. Moreover, we focus on harnessing under-represented data from Japan and broader Asia, including Computed Tomography, Magnetic Resonance Imaging, and Whole Slide Imaging scans. Using the collected data, we are preparing/providing ready-to-use datasets for medical AI R&D by 1) offering these datasets to AI firms, biopharma, and medical device makers and 2) using them as training/test data to develop tailored AI solutions for such entities. We also aim to merge Blockchain for data security and plan to synthesize rare disease data via generative AI. DataHub Website: https://medical-datahub.ai/

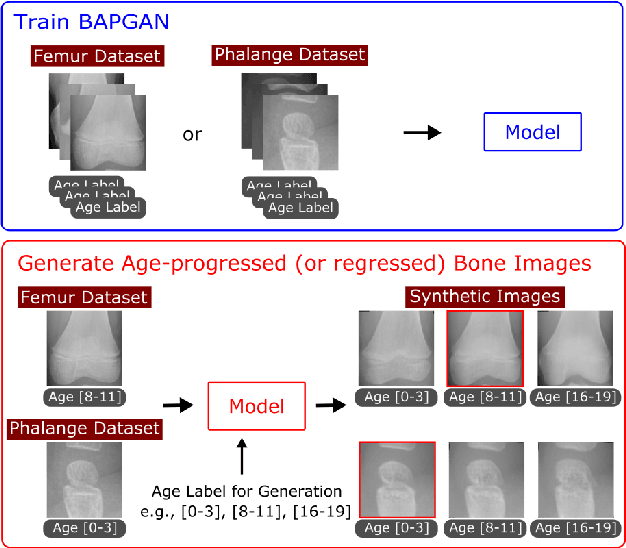

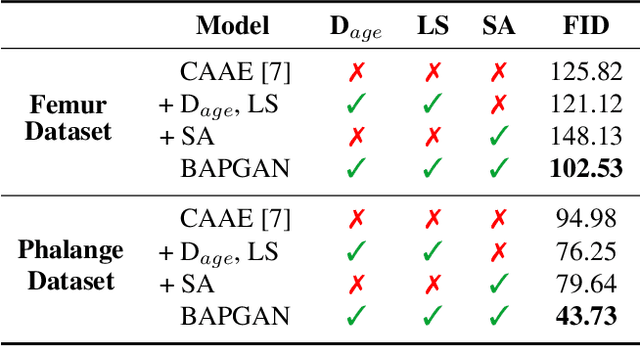

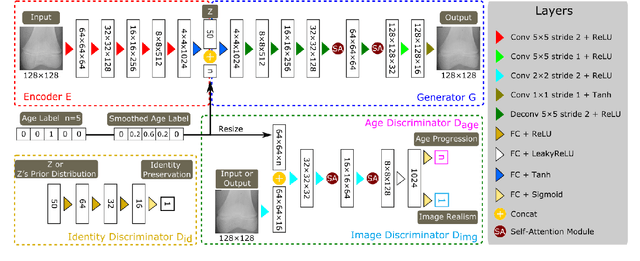

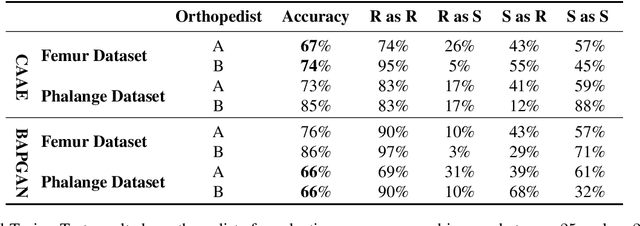

BAPGAN: GAN-based Bone Age Progression of Femur and Phalange X-ray Images

Oct 16, 2021

Abstract:Convolutional Neural Networks play a key role in bone age assessment for investigating endocrinology, genetic, and growth disorders under various modalities and body regions. However, no researcher has tackled bone age progression/regression despite its valuable potential applications: bone-related disease diagnosis, clinical knowledge acquisition, and museum education. Therefore, we propose Bone Age Progression Generative Adversarial Network (BAPGAN) to progress/regress both femur/phalange X-ray images while preserving identity and realism. We exhaustively confirm the BAPGAN's clinical potential via Frechet Inception Distance, Visual Turing Test by two expert orthopedists, and t-Distributed Stochastic Neighbor Embedding.
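For readers unfamiliar with the first metric named above, the Fréchet Inception Distance is commonly defined as the Fréchet distance between two Gaussians fitted to Inception-network features of real and generated images. The symbols below are the usual ones from that definition and are not taken from the abstract: $\mu_r, \Sigma_r$ are the feature mean and covariance of the real images, and $\mu_g, \Sigma_g$ those of the generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

A lower value indicates that the generated feature distribution is closer to the real one.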

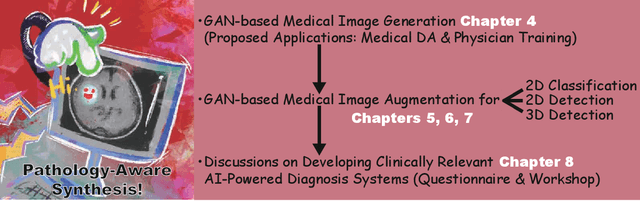

Pathology-Aware Generative Adversarial Networks for Medical Image Augmentation

Jun 03, 2021

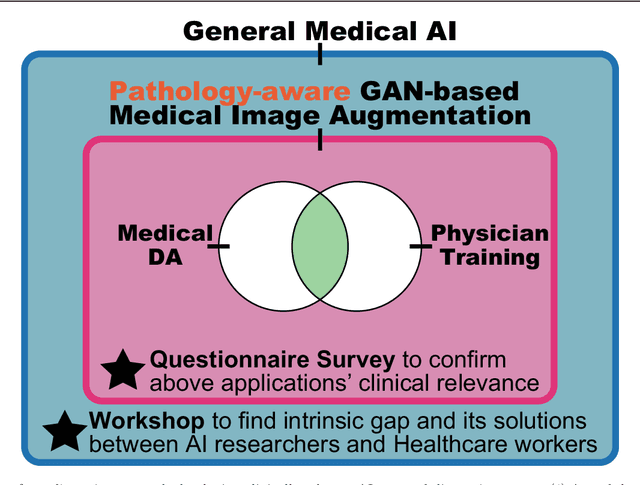

Abstract:Convolutional Neural Networks (CNNs) can play a key role in Medical Image Analysis under large-scale annotated datasets. However, preparing such massive datasets is demanding. In this context, Generative Adversarial Networks (GANs) can generate realistic but novel samples, and thus effectively cover the real image distribution. In terms of interpolation, the GAN-based medical image augmentation is reliable because medical modalities can display the human body's strong anatomical consistency at a fixed position while clearly reflecting inter-subject variability; thus, we propose to use noise-to-image GANs (e.g., random noise samples to diverse pathological images) for (i) medical Data Augmentation (DA) and (ii) physician training. Regarding the DA, the GAN-generated images can improve Computer-Aided Diagnosis based on supervised learning. For the physician training, the GANs can display novel desired pathological images and help train medical trainees despite infrastructural/legal constraints. This thesis contains four GAN projects aiming to present such novel applications' clinical relevance in collaboration with physicians. Whereas the methods are more generally applicable, this thesis only explores a few oncological applications.

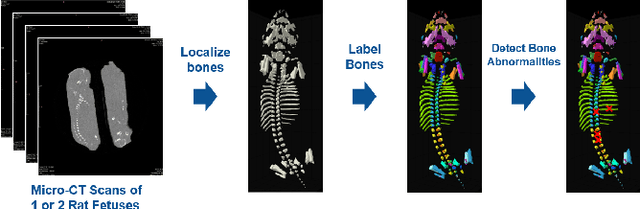

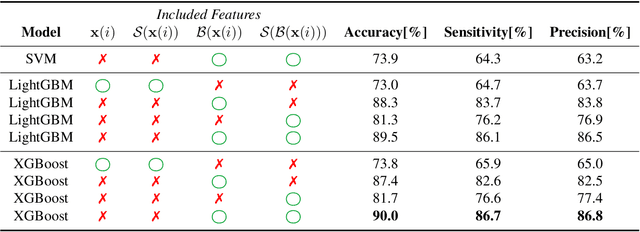

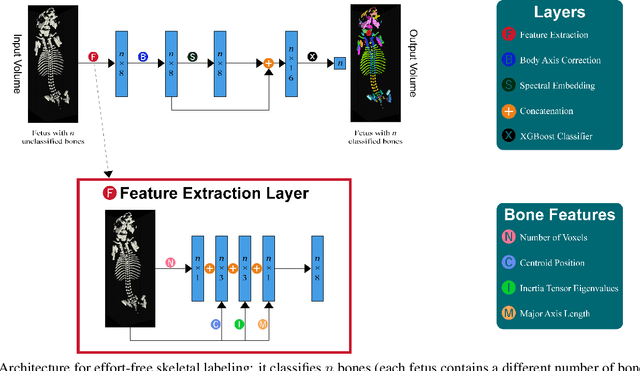

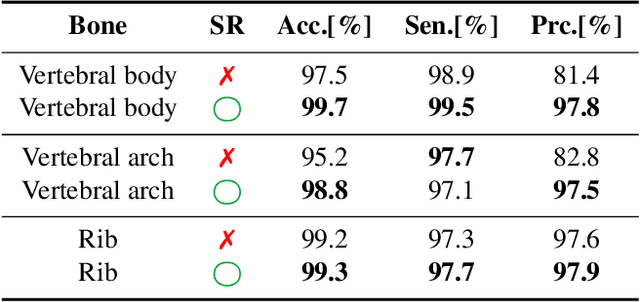

Effort-free Automated Skeletal Abnormality Detection of Rat Fetuses on Whole-body Micro-CT Scans

Jun 03, 2021

Abstract:Machine Learning-based fast and quantitative automated screening plays a key role in analyzing human bones on Computed Tomography (CT) scans. However, despite the requirement in drug safety assessment, such research is rare on animal fetus micro-CT scans due to its laborious data collection and annotation. Therefore, we propose various bone feature engineering techniques to thoroughly automate the skeletal localization/labeling/abnormality detection of rat fetuses on whole-body micro-CT scans with minimum effort. Despite limited training data of 49 fetuses, in skeletal labeling and abnormality detection, we achieve accuracy of 0.900 and 0.810, respectively.

Tips and Tricks to Improve CNN-based Chest X-ray Diagnosis: A Survey

Jun 02, 2021

Abstract:Convolutional Neural Networks (CNNs) intrinsically require large-scale data whereas Chest X-Ray (CXR) images tend to be data/annotation-scarce, leading to over-fitting. Therefore, based on our development experience and related work, this paper thoroughly introduces tricks to improve generalization in the CXR diagnosis: how to (i) leverage additional data, (ii) augment/distill data, (iii) regularize training, and (iv) conduct efficient segmentation. As a development example based on such optimization techniques, we also feature LPIXEL's CNN-based CXR solution, EIRL Chest Nodule, which improved radiologists/non-radiologists' nodule detection sensitivity by 0.100/0.131, respectively, while maintaining specificity.

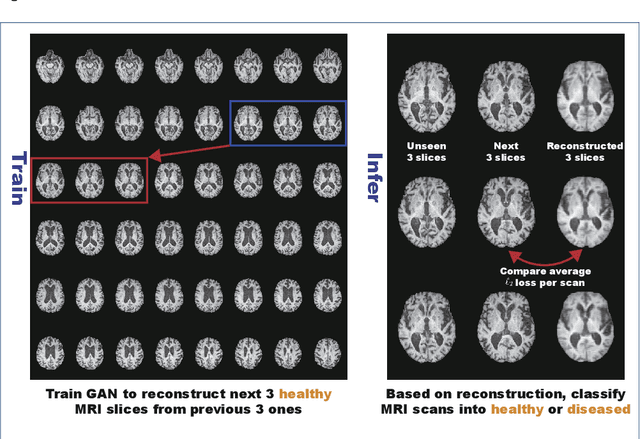

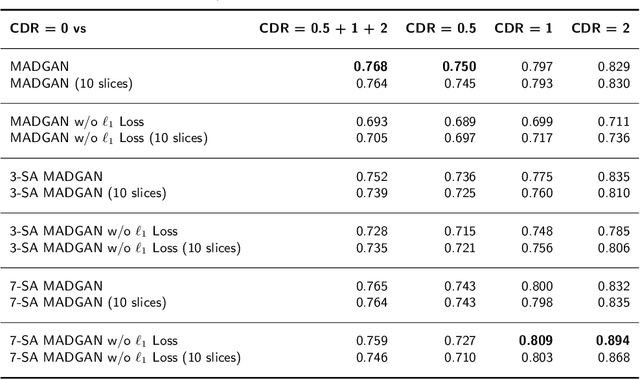

MADGAN: unsupervised Medical Anomaly Detection GAN using multiple adjacent brain MRI slice reconstruction

Jul 24, 2020

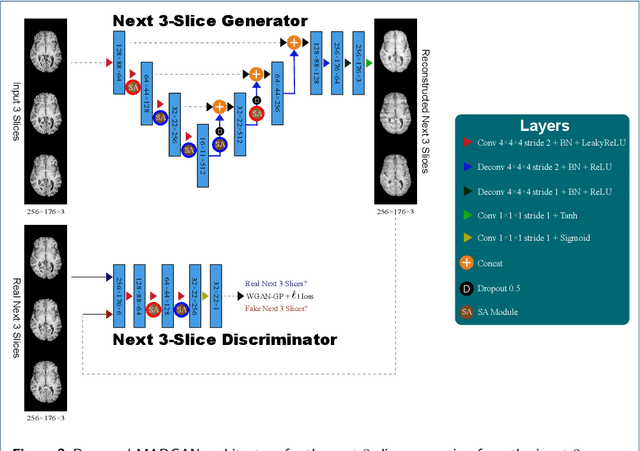

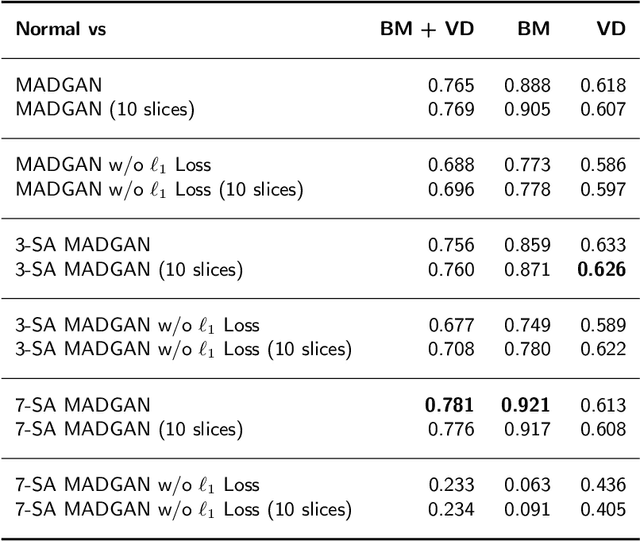

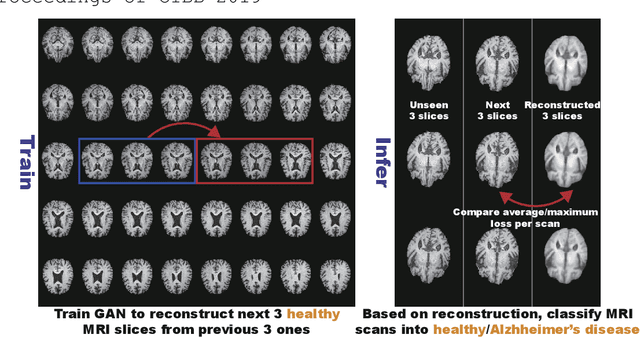

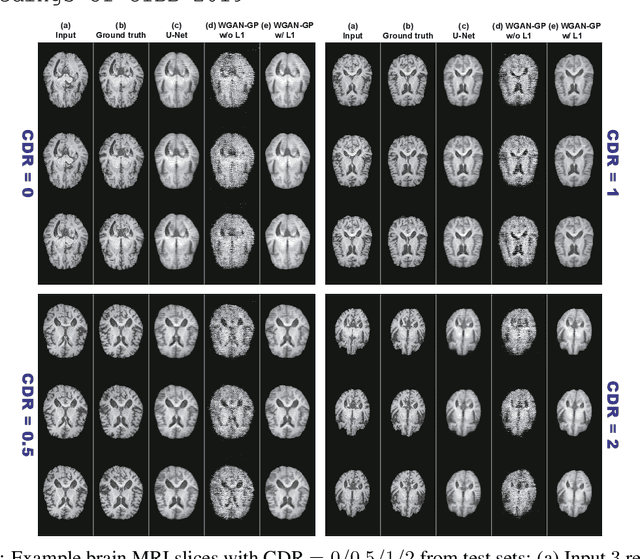

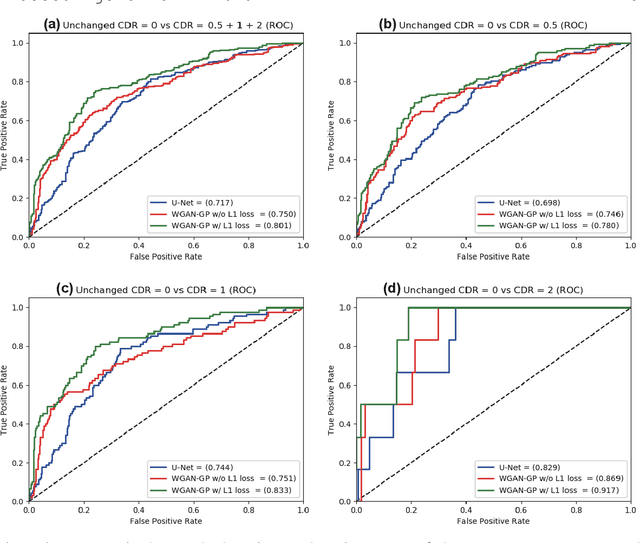

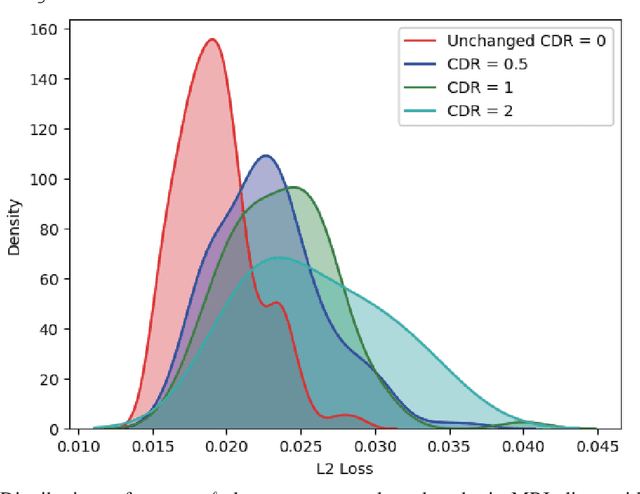

Abstract:Unsupervised learning can discover various unseen diseases, relying on large-scale unannotated medical images of healthy subjects. Towards this, unsupervised methods reconstruct a 2D/3D single medical image to detect outliers either in the learned feature space or from high reconstruction loss. However, without considering continuity between multiple adjacent slices, they cannot directly discriminate diseases composed of the accumulation of subtle anatomical anomalies, such as Alzheimer's Disease (AD). Moreover, no study has shown how unsupervised anomaly detection is associated with either disease stages, various (i.e., more than two types of) diseases, or multi-sequence Magnetic Resonance Imaging (MRI) scans. Therefore, we propose unsupervised Medical Anomaly Detection Generative Adversarial Network (MADGAN), a novel two-step method using GAN-based multiple adjacent brain MRI slice reconstruction to detect various diseases at different stages on multi-sequence structural MRI: (Reconstruction) Wasserstein loss with Gradient Penalty + 100 L1 loss, trained on 3 healthy brain axial MRI slices to reconstruct the next 3 ones, reconstructs unseen healthy/abnormal scans; (Diagnosis) Average L2 loss per scan discriminates them, comparing the ground truth/reconstructed slices. For training, we use 1,133 healthy T1-weighted (T1) and 135 healthy contrast-enhanced T1 (T1c) brain MRI scans. Our Self-Attention MADGAN can detect AD on T1 scans at a very early stage, Mild Cognitive Impairment (MCI), with Area Under the Curve (AUC) 0.727, and AD at a late stage with AUC 0.894, while detecting brain metastases on T1c scans with AUC 0.921.
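The Diagnosis step described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `scan_anomaly_score` and `auc` are hypothetical, "L2 loss" is assumed here to mean the mean squared pixel error, and the AUC is computed with the standard pairwise (Mann-Whitney) formulation.

```python
import numpy as np

def scan_anomaly_score(true_slices, recon_slices):
    """Average L2 loss per scan (assumed here: mean squared pixel error).

    Both inputs have shape (num_slices, height, width).
    """
    true_slices = np.asarray(true_slices, dtype=np.float64)
    recon_slices = np.asarray(recon_slices, dtype=np.float64)
    per_slice = ((true_slices - recon_slices) ** 2).mean(axis=(1, 2))
    return float(per_slice.mean())

def auc(scores, labels):
    """AUC as the fraction of (abnormal, healthy) pairs in which the
    abnormal scan has the higher score; ties count as half."""
    scores = np.asarray(scores, dtype=np.float64)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / (pos.size * neg.size))
```

Each scan gets one score from `scan_anomaly_score`, and `auc` then measures how well those scores separate healthy scans (label 0) from abnormal ones (label 1).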

Bridging the gap between AI and Healthcare sides: towards developing clinically relevant AI-powered diagnosis systems

Jan 12, 2020

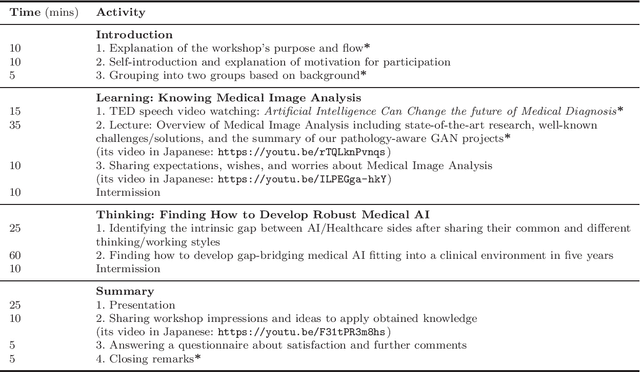

Abstract:This work aims to identify/bridge the gap between Artificial Intelligence (AI) and Healthcare sides in Japan towards developing medical AI fitting into a clinical environment in five years. Moreover, we attempt to confirm the clinical relevance for diagnosis of our research-proven pathology-aware Generative Adversarial Network (GAN)-based medical image augmentation: a data wrangling and information conversion technique to address data paucity. We hold a clinically valuable AI-envisioning workshop among 2 Medical Imaging experts, 2 physicians, and 3 Healthcare/Informatics generalists. A qualitative/quantitative questionnaire survey for 3 project-related physicians and 6 project non-related radiologists evaluates the GAN projects in terms of Data Augmentation (DA) and physician training. The workshop reveals the intrinsic gap between AI/Healthcare sides and its preliminary solutions on Why (i.e., clinical significance/interpretation) and How (i.e., data acquisition, commercial deployment, and safety/feeling safe). The survey confirms our pathology-aware GANs' clinical relevance as a clinical decision support system and non-expert physician training tool. Radiologists generally have high expectations for AI-based diagnosis as a reliable second opinion and abnormal candidate detection, instead of replacing them. Our findings would play a key role in connecting inter-disciplinary research and clinical applications, not limited to the Japanese medical context and pathology-aware GANs. We find that better DA and expert physician training would require atypical image generation via further GAN-based extrapolation.

GAN-based Multiple Adjacent Brain MRI Slice Reconstruction for Unsupervised Alzheimer's Disease Diagnosis

Jul 08, 2019

Abstract:Unsupervised learning can discover various unseen diseases, relying on large-scale unannotated medical images of healthy subjects. Towards this, unsupervised methods reconstruct a single medical image to detect outliers either in the learned feature space or from high reconstruction loss. However, without considering continuity between multiple adjacent images, they cannot directly discriminate diseases composed of the accumulation of subtle anatomical anomalies, such as Alzheimer's Disease (AD). Moreover, no study shows how unsupervised anomaly detection is associated with disease stages. Therefore, we propose a two-step method using Generative Adversarial Network-based multiple adjacent brain MRI slice reconstruction to detect AD at various stages: (Reconstruction) Wasserstein loss with Gradient Penalty + L1 loss---trained on 3 healthy slices to reconstruct the next 3 ones---reconstructs unseen healthy/AD cases; (Diagnosis) Average/Maximum loss (e.g., L2 loss) per scan discriminates them, comparing the reconstructed/ground truth images. The results show that we can reliably detect AD at a very early stage with Area Under the Curve (AUC) 0.780 while also detecting AD at a late stage much more accurately with AUC 0.917; since our method is unsupervised, it should also discover and alert any anomalies including rare disease.
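The "3 slices in, next 3 slices out" setup and the Average/Maximum loss per scan can be sketched as follows. This is an illustration under assumptions, not the paper's code: the window is assumed to slide one slice at a time, L2 loss is taken as mean squared error, and `reconstruct` is a hypothetical stand-in for the trained generator.

```python
import numpy as np

def adjacent_slice_pairs(scan, n=3):
    """Yield (input, target) pairs: n consecutive slices and the next n.

    `scan` has shape (num_slices, height, width); the stride of one
    slice between windows is an assumption.
    """
    scan = np.asarray(scan)
    for start in range(scan.shape[0] - 2 * n + 1):
        yield scan[start:start + n], scan[start + n:start + 2 * n]

def scan_scores(scan, reconstruct, n=3):
    """Return (average, maximum) L2 loss over all windows of one scan.

    `reconstruct` maps n input slices to n predicted slices.
    The scan must contain at least 2 * n slices.
    """
    losses = [
        float(np.mean((np.asarray(reconstruct(x), dtype=np.float64) - y) ** 2))
        for x, y in adjacent_slice_pairs(scan, n)
    ]
    return float(np.mean(losses)), float(np.max(losses))
```

With a real generator in place of `reconstruct`, a scan whose anatomy deviates from the healthy training data would be expected to yield higher scores.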

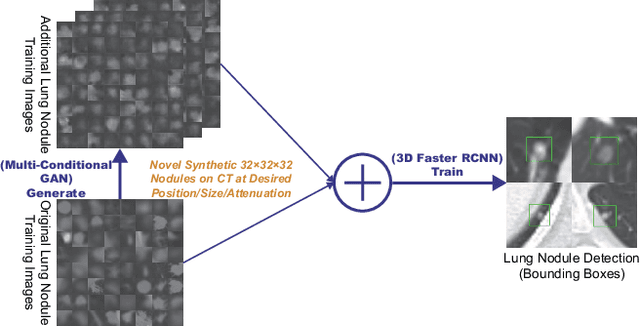

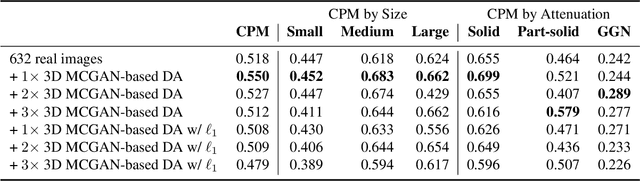

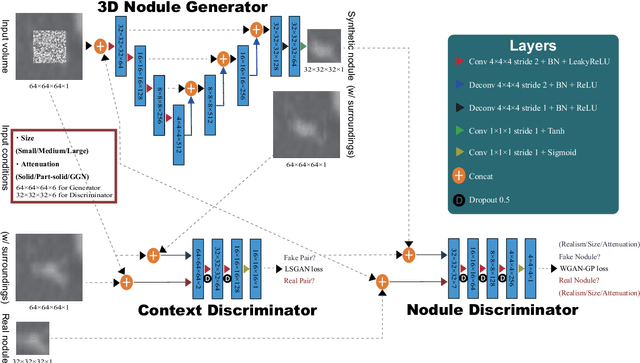

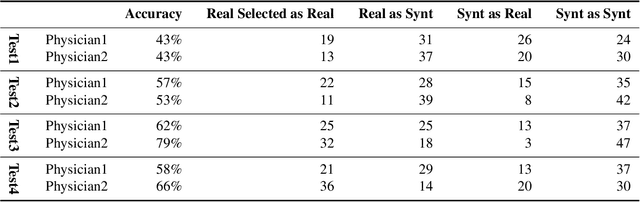

Synthesizing Diverse Lung Nodules Wherever Massively: 3D Multi-Conditional GAN-based CT Image Augmentation for Object Detection

Jun 12, 2019

Abstract:Accurate computer-assisted diagnosis, relying on large-scale annotated pathological images, can alleviate the risk of overlooking the diagnosis. Unfortunately, in medical imaging, most available datasets are small/fragmented. To tackle this, as a Data Augmentation (DA) method, 3D conditional Generative Adversarial Networks (GANs) can synthesize desired realistic/diverse 3D images as additional training data. However, no 3D conditional GAN-based DA approach exists for general bounding box-based 3D object detection, while it can locate disease areas with physicians' minimum annotation cost, unlike rigorous 3D segmentation. Moreover, since lesions vary in position/size/attenuation, further GAN-based DA performance requires multiple conditions. Therefore, we propose 3D Multi-Conditional GAN (MCGAN) to generate realistic/diverse 32 x 32 x 32 nodules placed naturally on lung Computed Tomography images to boost sensitivity in 3D object detection. Our MCGAN adopts two discriminators for conditioning: the context discriminator learns to classify real vs synthetic nodule/surrounding pairs with noise box-centered surroundings; the nodule discriminator attempts to classify real vs synthetic nodules with size/attenuation conditions. The results show that 3D Convolutional Neural Network-based detection can achieve higher sensitivity under any nodule size/attenuation at fixed False Positive rates and overcome the medical data paucity with the MCGAN-generated realistic nodules---even expert physicians fail to distinguish them from the real ones in Visual Turing Test.
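The "noise box-centered surroundings" fed to the context discriminator can be pictured with the sketch below. Only the 32 x 32 x 32 nodule size comes from the abstract; the 64 x 64 x 64 surrounding size, the uniform noise, and the function name `noise_box_surrounding` are assumptions made for illustration.

```python
import numpy as np

def noise_box_surrounding(volume, center, surround=64, box=32, rng=None):
    """Crop a surround^3 cube around `center` (z, y, x) and overwrite its
    central box^3 region with uniform noise.

    The surround size and noise distribution are illustrative assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    half = surround // 2
    z, y, x = center
    # astype returns a copy, so the input volume is left untouched.
    crop = volume[z - half:z + half,
                  y - half:y + half,
                  x - half:x + half].astype(np.float32)
    if crop.shape != (surround,) * 3:
        raise ValueError("surrounding cube falls outside the volume")
    lo = (surround - box) // 2
    crop[lo:lo + box, lo:lo + box, lo:lo + box] = rng.uniform(
        volume.min(), volume.max(), size=(box,) * 3)
    return crop
```

In a conditioning scheme like the one described, a generator would fill the noise box with a nodule, and the context discriminator would judge whether the nodule/surrounding pair looks real.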

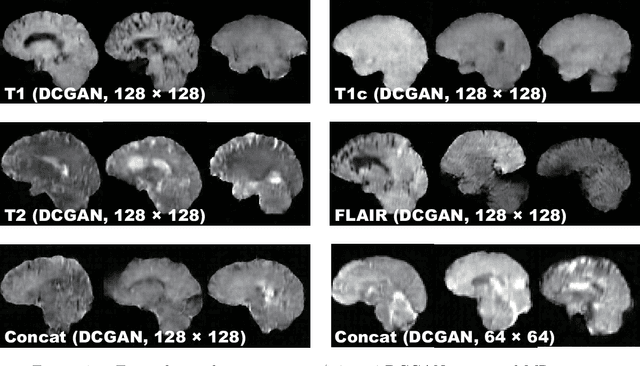

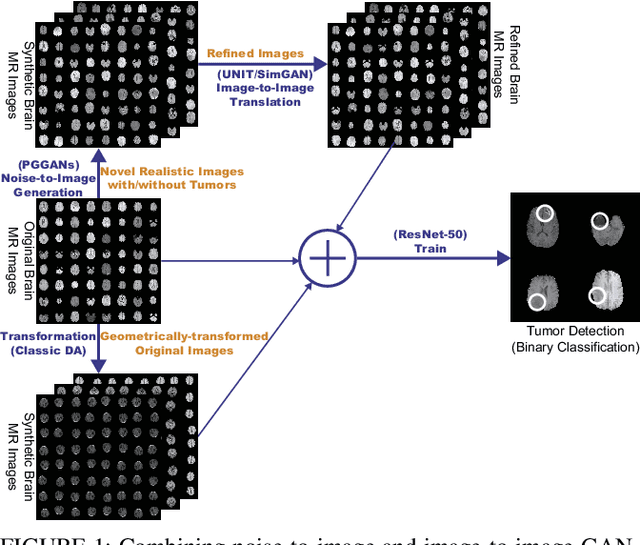

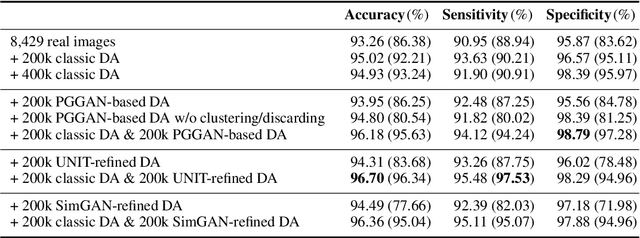

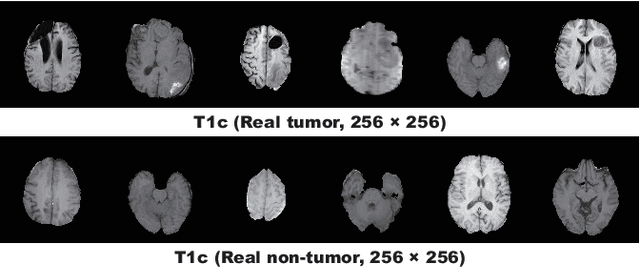

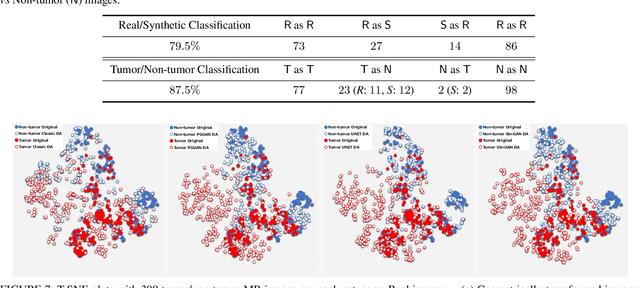

Combining Noise-to-Image and Image-to-Image GANs: Brain MR Image Augmentation for Tumor Detection

May 31, 2019

Abstract:Convolutional Neural Networks (CNNs) can achieve excellent computer-assisted diagnosis performance, relying on sufficient annotated training data. Unfortunately, most medical imaging datasets, often collected from various scanners, are small and fragmented. In this context, as a Data Augmentation (DA) technique, Generative Adversarial Networks (GANs) can synthesize realistic/diverse additional training images to fill gaps in the real image distribution; researchers have improved classification by augmenting images with noise-to-image (e.g., random noise samples to diverse pathological images) or image-to-image GANs (e.g., a benign image to a malignant one). Yet, no research has reported results combining (i) noise-to-image GANs and image-to-image GANs or (ii) GANs and other deep generative models, for further performance boost. Therefore, to maximize the DA effect with the GAN combinations, we propose a two-step GAN-based DA that generates and refines brain MR images with/without tumors separately: (i) Progressive Growing of GANs (PGGANs), multi-stage noise-to-image GAN for high-resolution image generation, first generates realistic/diverse 256 x 256 images (even a physician cannot accurately distinguish them from real ones via Visual Turing Test); (ii) UNsupervised Image-to-image Translation or SimGAN, image-to-image GAN combining GANs/Variational AutoEncoders or using a GAN loss for DA, further refines the texture/shape of the PGGAN-generated images to resemble the real ones. We thoroughly investigate CNN-based tumor classification results, also considering the influence of pre-training on ImageNet and discarding weird-looking GAN-generated images. The results show that, when combined with classic DA, our two-step GAN-based DA can significantly outperform the classic DA alone, in tumor detection (i.e., boosting sensitivity from 93.63% to 97.53%) and also in other tasks.
