Kohei Murao

MADGAN: unsupervised Medical Anomaly Detection GAN using multiple adjacent brain MRI slice reconstruction

Jul 24, 2020

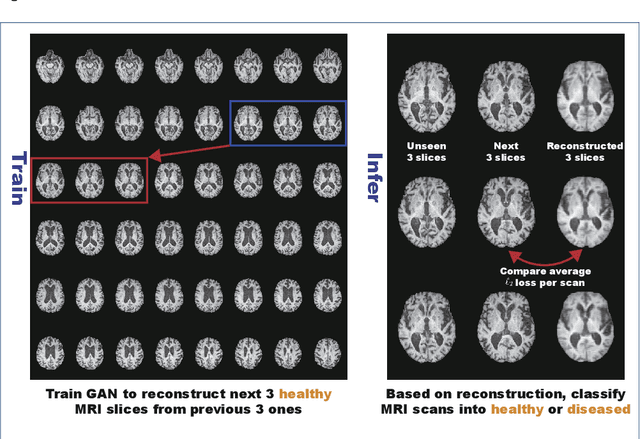

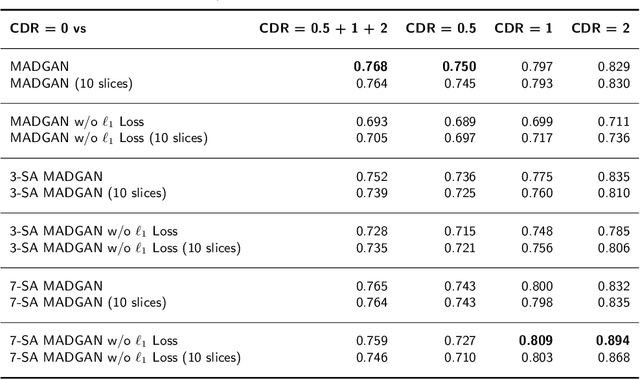

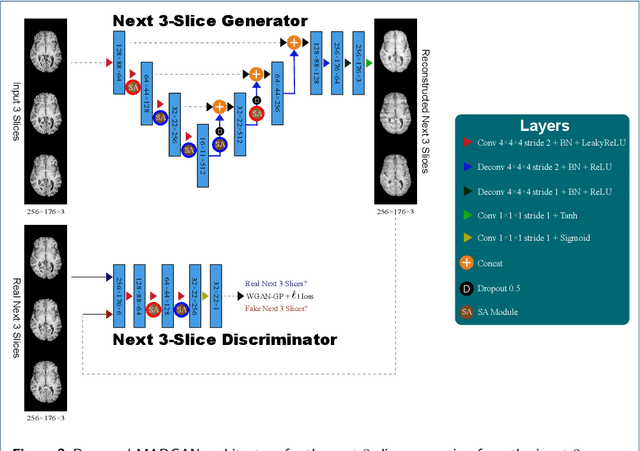

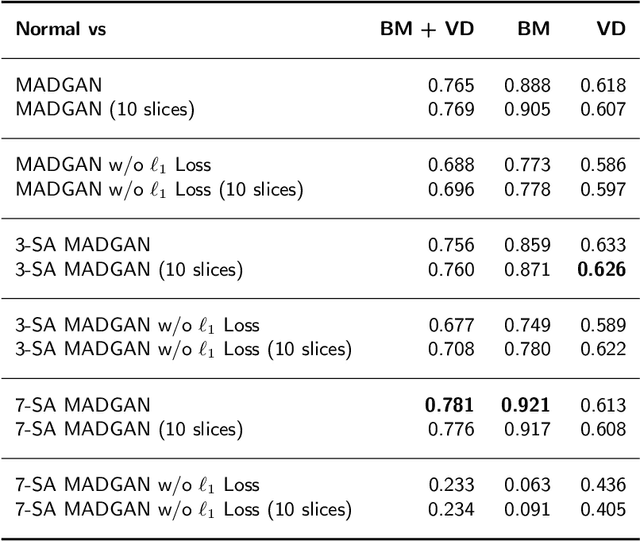

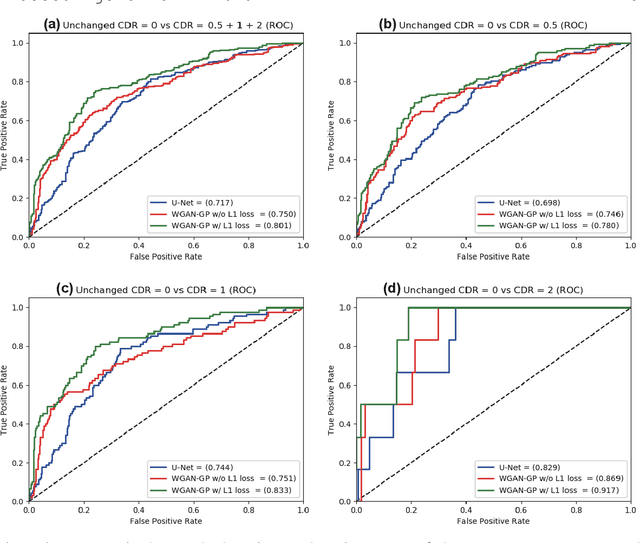

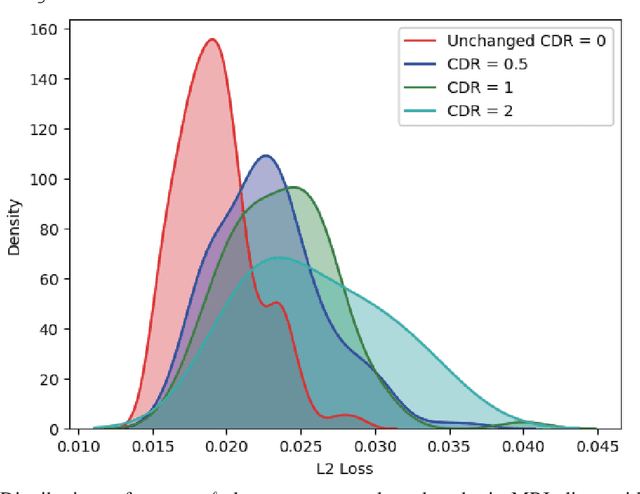

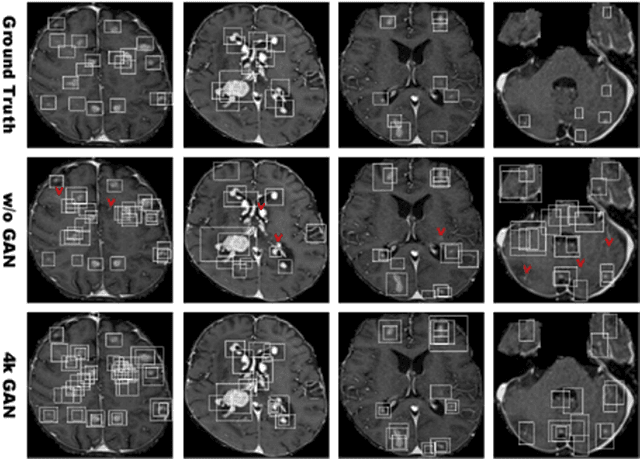

Abstract: Unsupervised learning can discover various unseen diseases, relying on large-scale unannotated medical images of healthy subjects. Towards this, unsupervised methods reconstruct a single 2D/3D medical image to detect outliers either in the learned feature space or from high reconstruction loss. However, without considering continuity between multiple adjacent slices, they cannot directly discriminate diseases composed of the accumulation of subtle anatomical anomalies, such as Alzheimer's Disease (AD). Moreover, no study has shown how unsupervised anomaly detection is associated with disease stages, with various (i.e., more than two types of) diseases, or with multi-sequence Magnetic Resonance Imaging (MRI) scans. Therefore, we propose unsupervised Medical Anomaly Detection Generative Adversarial Network (MADGAN), a novel two-step method using GAN-based multiple adjacent brain MRI slice reconstruction to detect various diseases at different stages on multi-sequence structural MRI: (Reconstruction) Wasserstein loss with Gradient Penalty + 100 L1 loss (trained on 3 healthy brain axial MRI slices to reconstruct the next 3 ones) reconstructs unseen healthy/abnormal scans; (Diagnosis) average L2 loss per scan discriminates them, comparing the ground truth/reconstructed slices. For training, we use 1,133 healthy T1-weighted (T1) and 135 healthy contrast-enhanced T1 (T1c) brain MRI scans. Our Self-Attention MADGAN can detect AD on T1 scans at a very early stage, Mild Cognitive Impairment (MCI), with Area Under the Curve (AUC) 0.727, and AD at a late stage with AUC 0.894, while detecting brain metastases on T1c scans with AUC 0.921.
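The Diagnosis step described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `scan_anomaly_score` and `auc_from_scores` are hypothetical, "L2 loss" is assumed to mean the mean squared pixel difference, and the AUC is computed with a simple pairwise-ranking formula rather than any particular library routine.

```python
import numpy as np


def scan_anomaly_score(ground_truth, reconstructed):
    """Average L2 loss per scan (assumed here to be mean squared error).

    Both arguments are arrays of shape (num_slices, height, width).
    """
    gt = np.asarray(ground_truth, dtype=np.float64)
    rec = np.asarray(reconstructed, dtype=np.float64)
    if gt.shape != rec.shape:
        raise ValueError("ground truth and reconstruction must share a shape")
    # One loss value per slice, then the mean over all slices of the scan.
    per_slice = ((gt - rec) ** 2).reshape(gt.shape[0], -1).mean(axis=1)
    return float(per_slice.mean())


def auc_from_scores(healthy_scores, abnormal_scores):
    """AUC as the probability that an abnormal scan outscores a healthy one.

    Ties between a healthy and an abnormal score count as 0.5.
    """
    h = np.asarray(healthy_scores, dtype=np.float64)[:, None]
    a = np.asarray(abnormal_scores, dtype=np.float64)[None, :]
    return float((a > h).mean() + 0.5 * (a == h).mean())
```

A higher score means the reconstruction deviates more from the real slices, which the method treats as evidence of an anomaly; the AUC then summarizes how well that single score separates healthy from abnormal scans.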

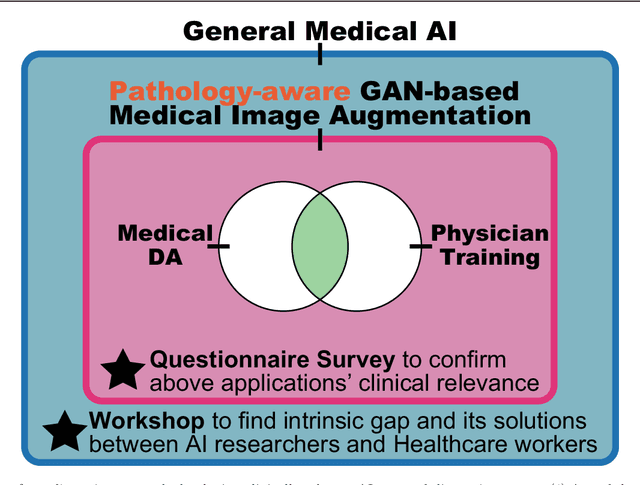

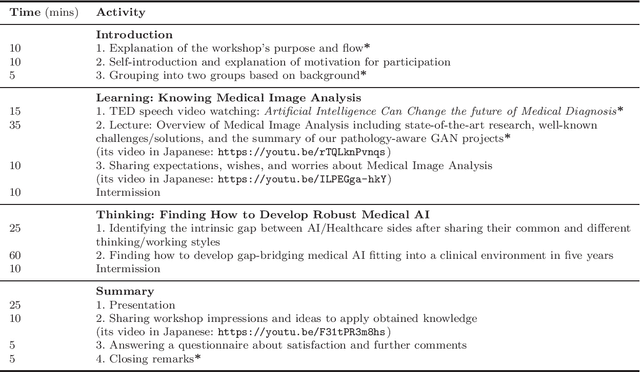

Bridging the gap between AI and Healthcare sides: towards developing clinically relevant AI-powered diagnosis systems

Jan 12, 2020

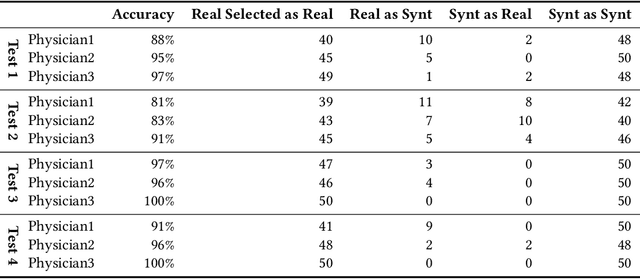

Abstract: This work aims to identify and bridge the gap between the Artificial Intelligence (AI) and Healthcare sides in Japan, towards developing medical AI that fits into a clinical environment within five years. Moreover, we attempt to confirm the clinical relevance for diagnosis of our research-proven pathology-aware Generative Adversarial Network (GAN)-based medical image augmentation: a data wrangling and information conversion technique to address data paucity. We hold a clinically valuable AI-envisioning workshop among 2 Medical Imaging experts, 2 physicians, and 3 Healthcare/Informatics generalists. A qualitative/quantitative questionnaire survey of 3 project-related physicians and 6 project non-related radiologists evaluates the GAN projects in terms of Data Augmentation (DA) and physician training. The workshop reveals the intrinsic gap between the AI/Healthcare sides and its preliminary solutions on Why (i.e., clinical significance/interpretation) and How (i.e., data acquisition, commercial deployment, and safety/feeling safe). The survey confirms our pathology-aware GANs' clinical relevance as a clinical decision support system and a non-expert physician training tool. Radiologists generally have high expectations for AI-based diagnosis as a reliable second opinion and for abnormal candidate detection, rather than as a replacement for them. Our findings would play a key role in connecting inter-disciplinary research and clinical applications, not limited to the Japanese medical context and pathology-aware GANs. We find that better DA and expert physician training would require atypical image generation via further GAN-based extrapolation.

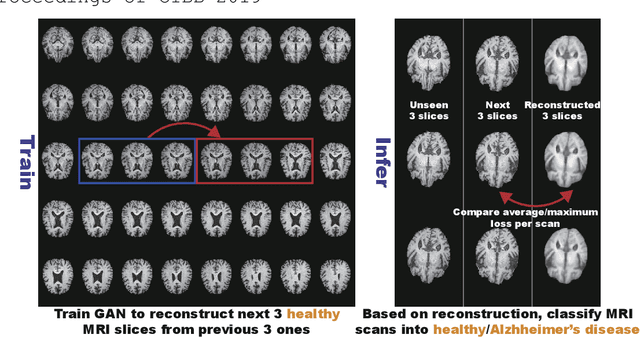

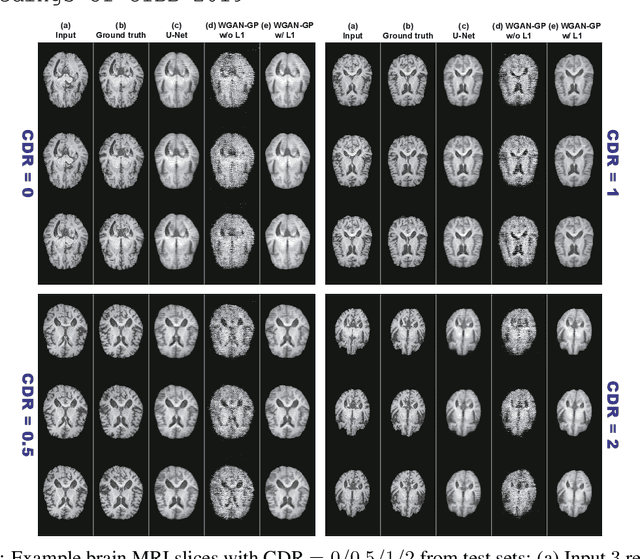

GAN-based Multiple Adjacent Brain MRI Slice Reconstruction for Unsupervised Alzheimer's Disease Diagnosis

Jul 08, 2019

Abstract: Unsupervised learning can discover various unseen diseases, relying on large-scale unannotated medical images of healthy subjects. Towards this, unsupervised methods reconstruct a single medical image to detect outliers either in the learned feature space or from high reconstruction loss. However, without considering continuity between multiple adjacent images, they cannot directly discriminate diseases composed of the accumulation of subtle anatomical anomalies, such as Alzheimer's Disease (AD). Moreover, no study shows how unsupervised anomaly detection is associated with disease stages. Therefore, we propose a two-step method using Generative Adversarial Network-based multiple adjacent brain MRI slice reconstruction to detect AD at various stages: (Reconstruction) Wasserstein loss with Gradient Penalty + L1 loss (trained on 3 healthy slices to reconstruct the next 3 ones) reconstructs unseen healthy/AD cases; (Diagnosis) average/maximum loss (e.g., L2 loss) per scan discriminates them, comparing the reconstructed/ground truth images. The results show that we can reliably detect AD at a very early stage with Area Under the Curve (AUC) 0.780 while also detecting AD at a late stage much more accurately with AUC 0.917; since our method is unsupervised, it should also discover and alert any anomalies including rare diseases.
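The "3 slices in, next 3 slices out" pairing and the average/maximum aggregation can be sketched as follows. This is an illustrative sketch only: the helper names `adjacent_slice_pairs` and `scan_score` are hypothetical, the window is assumed to slide one slice at a time (the abstract does not state the stride), and mean squared error stands in for the L2 loss.

```python
import numpy as np


def adjacent_slice_pairs(volume, n=3):
    """Return (input, target) pairs: n consecutive slices and the n after them.

    `volume` has shape (num_slices, height, width). A stride of one slice
    between windows is an assumption of this sketch.
    """
    vol = np.asarray(volume)
    pairs = []
    for start in range(vol.shape[0] - 2 * n + 1):
        inputs = vol[start:start + n]
        targets = vol[start + n:start + 2 * n]
        pairs.append((inputs, targets))
    return pairs


def scan_score(targets, reconstructions, reduce="average"):
    """Aggregate per-window L2 losses into one score for the whole scan."""
    losses = [
        float(np.mean((np.asarray(t, dtype=np.float64)
                       - np.asarray(r, dtype=np.float64)) ** 2))
        for t, r in zip(targets, reconstructions)
    ]
    if not losses:
        raise ValueError("no windows to score")
    if reduce == "average":
        return sum(losses) / len(losses)
    if reduce == "maximum":
        return max(losses)
    raise ValueError("reduce must be 'average' or 'maximum'")
```

In use, a trained generator would map each `inputs` window to a reconstruction of its `targets` window; averaging reflects the overall deviation across a scan, while the maximum highlights the single worst-reconstructed window.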

Learning More with Less: GAN-based Medical Image Augmentation

May 07, 2019

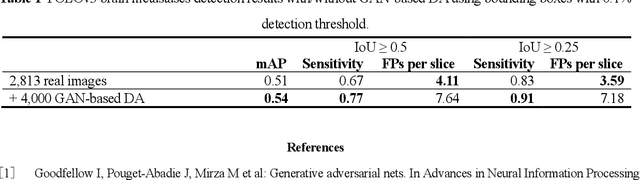

Abstract: Convolutional Neural Network (CNN)-based accurate prediction typically requires large-scale annotated training data. In Medical Imaging, however, both obtaining medical data and having expert physicians annotate them are challenging; to overcome this lack of data, Data Augmentation (DA) using Generative Adversarial Networks (GANs) is essential, since they can synthesize additional annotated training data to handle small and fragmented medical images from various scanners. Those generated images, realistic but completely novel, can further fill the parts of the real image distribution not covered by the original dataset. As a tutorial, this paper introduces GAN-based Medical Image Augmentation, along with tricks to boost classification/object detection/segmentation performance using them, based on our experience and related work. Moreover, we show our first GAN-based DA work using automatic bounding box annotation, for robust CNN-based brain metastases detection on 256 x 256 MR images; GAN-based DA can boost sensitivity in diagnosis by 10% with a clinically acceptable number of additional False Positives, even with highly-rough and inconsistent bounding boxes.

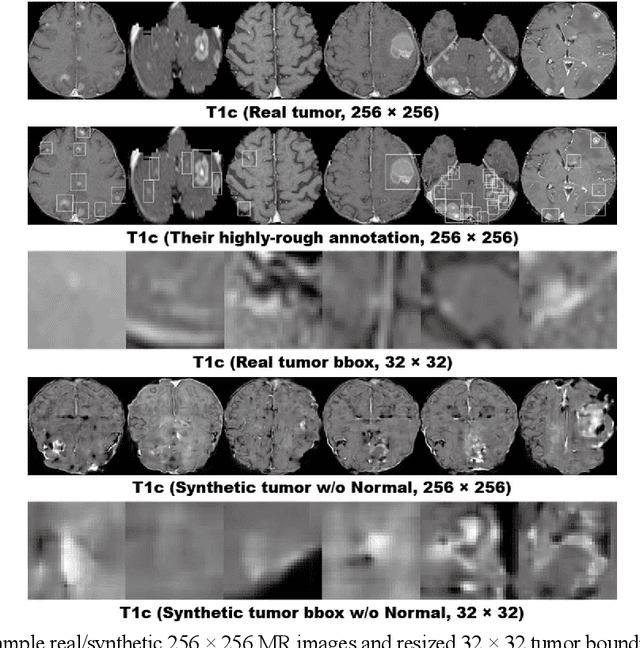

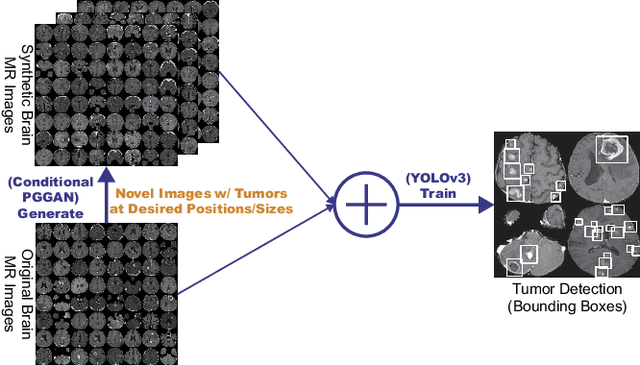

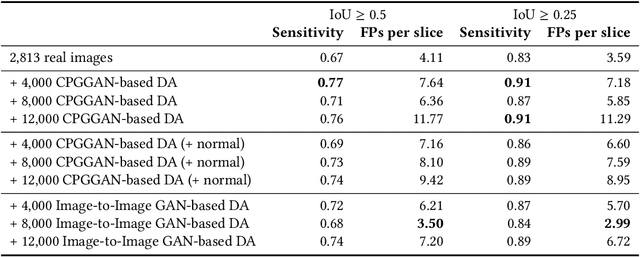

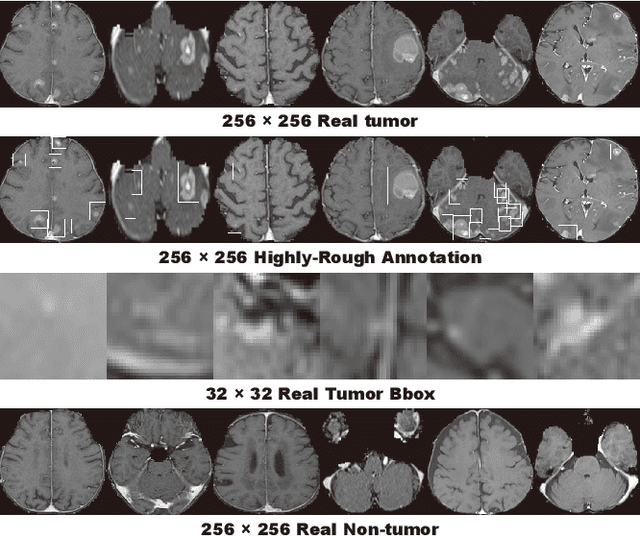

Learning More with Less: Conditional PGGAN-based Data Augmentation for Brain Metastases Detection Using Highly-Rough Annotation on MR Images

Mar 03, 2019

Abstract: Accurate computer-assisted diagnosis can alleviate the risk of overlooking the diagnosis in a clinical environment. Towards this, as a Data Augmentation (DA) technique, Generative Adversarial Networks (GANs) can synthesize additional training data to handle small/fragmented medical images from various scanners; those images are realistic but completely different from the original ones, filling gaps in the real image distribution. However, we cannot easily use them to locate the position of disease areas, since expert physicians' annotation is a time-consuming task. Therefore, this paper proposes Conditional Progressive Growing of GANs (CPGGANs), incorporating bounding box conditions into PGGANs to place brain metastases at the desired position/size on 256 x 256 Magnetic Resonance (MR) images, for Convolutional Neural Network-based tumor detection; this first GAN-based medical DA using automatic bounding box annotation improves the robustness during training. The results show that CPGGAN-based DA can boost sensitivity in diagnosis by 10% with an acceptable number of additional False Positives, even with physicians' highly-rough and inconsistent bounding box annotation. Surprisingly, further realistic tumor appearance, achieved with additional normal brain MR images for CPGGAN training, does not contribute to detection performance, while even three expert physicians cannot accurately distinguish them from the real ones in a Visual Turing Test.
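One way to picture a "bounding box condition" on a 256 x 256 image is as a binary mask that marks where a tumor should appear. The sketch below is only an assumption about a plausible encoding, since the abstract does not say how CPGGANs represent the condition; the function name `bbox_condition_mask` and the `(x_min, y_min, x_max, y_max)` box format are hypothetical.

```python
import numpy as np


def bbox_condition_mask(boxes, size=256):
    """Build a size x size mask with 1.0 inside each bounding box.

    `boxes` is an iterable of (x_min, y_min, x_max, y_max) pixel coordinates,
    with the max coordinates exclusive. Boxes are clipped to the image.
    """
    mask = np.zeros((size, size), dtype=np.float32)
    for x_min, y_min, x_max, y_max in boxes:
        x0, y0 = max(0, x_min), max(0, y_min)
        x1, y1 = min(size, x_max), min(size, y_max)
        if x1 > x0 and y1 > y0:
            # Rows index the y axis, columns index the x axis.
            mask[y0:y1, x0:x1] = 1.0
    return mask
```

Such a mask could be fed to a conditional generator alongside its noise input, and the same boxes could then serve as automatic annotations for the synthesized image when training a detector.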
