Fumiya Uchiyama

Which Programming Language and What Features at Pre-training Stage Affect Downstream Logical Inference Performance?

Oct 09, 2024

Abstract: Recent large language models (LLMs) have demonstrated remarkable generalization abilities in mathematics and logical reasoning tasks. Prior research indicates that LLMs pre-trained with programming language data exhibit high mathematical and reasoning abilities; however, this causal relationship has not been rigorously tested. Our research aims to verify which programming languages and features during pre-training affect logical inference performance. Specifically, we pre-trained decoder-based language models from scratch using datasets from ten programming languages (e.g., Python, C, Java) and three natural language datasets (Wikipedia, Fineweb, C4) under identical conditions. Thereafter, we evaluated the trained models in a few-shot in-context learning setting on logical reasoning tasks: FLD and bAbI, which do not require commonsense or world knowledge. The results demonstrate that nearly all models trained with programming languages consistently outperform those trained with natural languages, indicating that programming languages contain factors that elicit logical inference performance. In addition, we found that models trained with programming languages exhibit a better ability to follow instructions compared to those trained with natural languages. Further analysis reveals that the depth of Abstract Syntax Trees representing parsed results of programs also affects logical reasoning performance. These findings will offer insights into the essential elements of pre-training for acquiring the foundational abilities of LLMs.

Learning More with Less: Conditional PGGAN-based Data Augmentation for Brain Metastases Detection Using Highly-Rough Annotation on MR Images

Mar 03, 2019

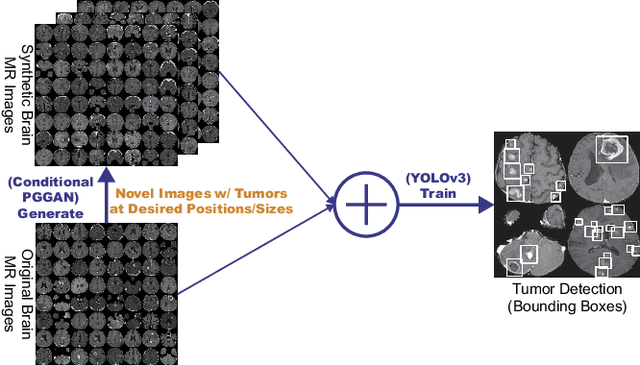

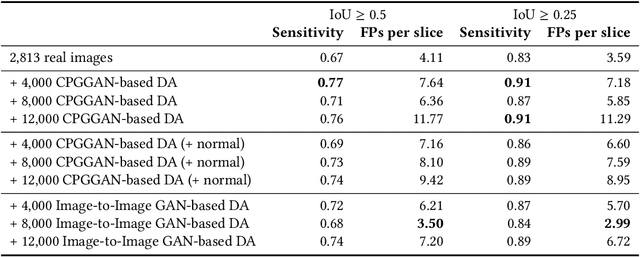

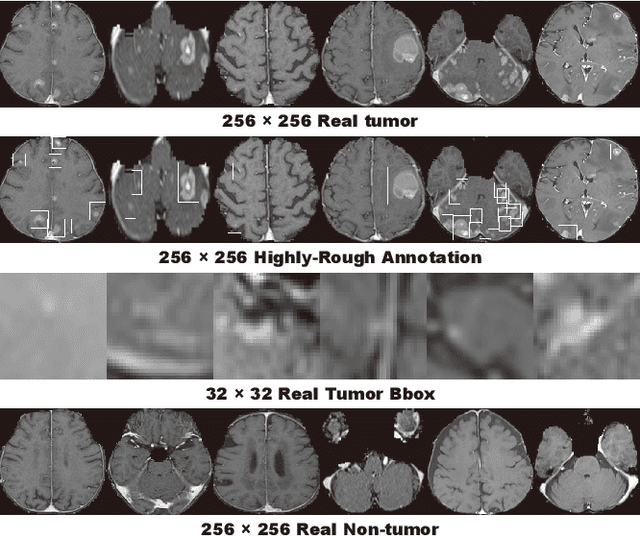

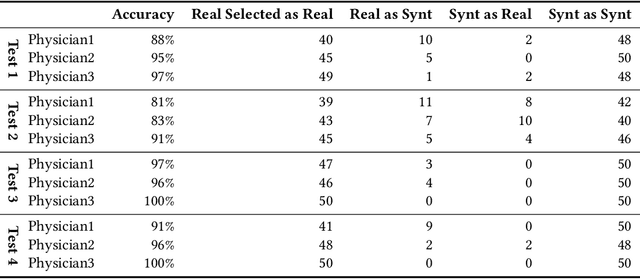

Abstract: Accurate computer-assisted diagnosis can reduce the risk of overlooking disease in a clinical environment. To this end, Generative Adversarial Networks (GANs) can serve as a Data Augmentation (DA) technique, synthesizing additional training data to compensate for small/fragmented medical image datasets from various scanners; the synthesized images are realistic but completely different from the original ones, filling gaps in the real image distribution. However, they cannot easily be used to locate disease areas, because annotation by expert physicians is time-consuming. Therefore, this paper proposes Conditional Progressive Growing of GANs (CPGGANs), incorporating bounding box conditions into PGGANs to place brain metastases at a desired position/size on 256 x 256 Magnetic Resonance (MR) images, for Convolutional Neural Network-based tumor detection; this first GAN-based medical DA using automatic bounding box annotation improves robustness during training. The results show that CPGGAN-based DA can boost sensitivity in diagnosis by 10% with an acceptable number of additional False Positives, even with physicians' highly rough and inconsistent bounding box annotation. Surprisingly, more realistic tumor appearance, achieved with additional normal brain MR images for CPGGAN training, does not contribute to detection performance, although even three expert physicians cannot accurately distinguish the synthesized images from the real ones in a Visual Turing Test.
