Chanchal K. Roy

Human-Aligned Enhancement of Programming Answers with LLMs Guided by User Feedback

Jan 24, 2026Abstract:Large Language Models (LLMs) are widely used to support software developers in tasks such as code generation, optimization, and documentation. However, their ability to improve existing programming answers in a human-like manner remains underexplored. On technical question-and-answer platforms such as Stack Overflow (SO), contributors often revise answers based on user comments that identify errors, inefficiencies, or missing explanations. Yet roughly one-third of this feedback is never addressed due to limited time, expertise, or visibility, leaving many answers incomplete or outdated. This study investigates whether LLMs can enhance programming answers by interpreting and incorporating comment-based feedback. We make four main contributions. First, we introduce ReSOlve, a benchmark consisting of 790 SO answers with associated comment threads, annotated for improvement-related and general feedback. Second, we evaluate four state-of-the-art LLMs on their ability to identify actionable concerns, finding that DeepSeek achieves the best balance between precision and recall. Third, we present AUTOCOMBAT, an LLM-powered tool that improves programming answers by jointly leveraging user comments and question context. Compared to human revised references, AUTOCOMBAT produces near-human quality improvements while preserving the original intent and significantly outperforming the baseline. Finally, a user study with 58 practitioners shows strong practical value, with 84.5 percent indicating they would adopt or recommend the tool. Overall, AUTOCOMBAT demonstrates the potential of scalable, feedback-driven answer refinement to improve the reliability and trustworthiness of technical knowledge platforms.

A Metamorphic Testing Perspective on Knowledge Distillation for Language Models of Code: Does the Student Deeply Mimic the Teacher?

Nov 07, 2025

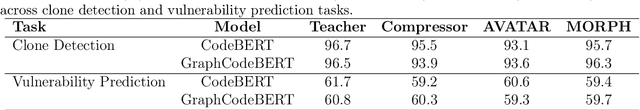

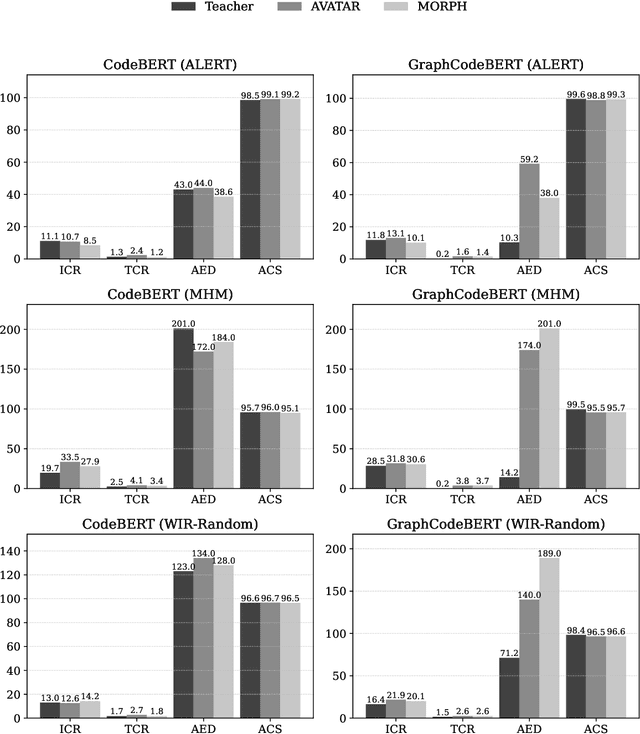

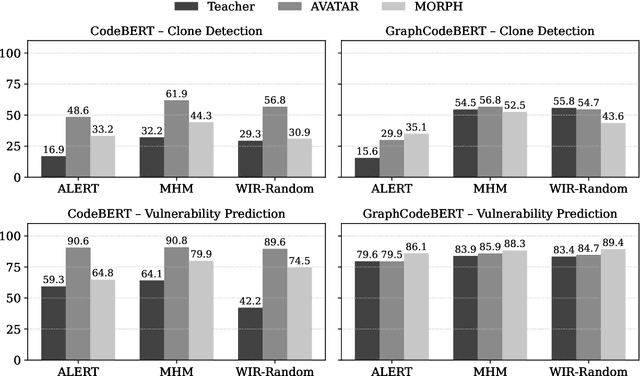

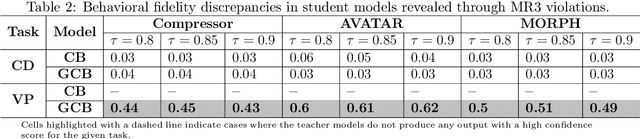

Abstract:Transformer-based language models of code have achieved state-of-the-art performance across a wide range of software analytics tasks, but their practical deployment remains limited due to high computational costs, slow inference speeds, and significant environmental impact. To address these challenges, recent research has increasingly explored knowledge distillation as a method for compressing a large language model of code (the teacher) into a smaller model (the student) while maintaining performance. However, the degree to which a student model deeply mimics the predictive behavior and internal representations of its teacher remains largely unexplored, as current accuracy-based evaluation provides only a surface-level view of model quality and often fails to capture more profound discrepancies in behavioral fidelity between the teacher and student models. To address this gap, we empirically show that the student model often fails to deeply mimic the teacher model, resulting in up to 285% greater performance drop under adversarial attacks, which is not captured by traditional accuracy-based evaluation. Therefore, we propose MetaCompress, a metamorphic testing framework that systematically evaluates behavioral fidelity by comparing the outputs of teacher and student models under a set of behavior-preserving metamorphic relations. We evaluate MetaCompress on two widely studied tasks, using compressed versions of popular language models of code, obtained via three different knowledge distillation techniques: Compressor, AVATAR, and MORPH. The results show that MetaCompress identifies up to 62% behavioral discrepancies in student models, underscoring the need for behavioral fidelity evaluation within the knowledge distillation pipeline and establishing MetaCompress as a practical framework for testing compressed language models of code derived through knowledge distillation.

Comparative Analysis of Quantum and Classical Support Vector Classifiers for Software Bug Prediction: An Exploratory Study

Jan 08, 2025Abstract:Purpose: Quantum computing promises to transform problem-solving across various domains with rapid and practical solutions. Within Software Evolution and Maintenance, Quantum Machine Learning (QML) remains mostly an underexplored domain, particularly in addressing challenges such as detecting buggy software commits from code repositories. Methods: In this study, we investigate the practical application of Quantum Support Vector Classifiers (QSVC) for detecting buggy software commits across 14 open-source software projects with diverse dataset sizes encompassing 30,924 data instances. We compare the QML algorithm PQSVC (Pegasos QSVC) and QSVC against the classical Support Vector Classifier (SVC). Our technique addresses large datasets in QSVC algorithms by dividing them into smaller subsets. We propose and evaluate an aggregation method to combine predictions from these models to detect the entire test dataset. We also introduce an incremental testing methodology to overcome the difficulties of quantum feature mapping during the testing approach. Results: The study shows the effectiveness of QSVC and PQSVC in detecting buggy software commits. The aggregation technique successfully combines predictions from smaller data subsets, enhancing the overall detection accuracy for the entire test dataset. The incremental testing methodology effectively manages the challenges associated with quantum feature mapping during the testing process. Conclusion: We contribute to the advancement of QML algorithms in defect prediction, unveiling the potential for further research in this domain. The specific scenario of the Short-Term Activity Frame (STAF) highlights the early detection of buggy software commits during the initial developmental phases of software systems, particularly when dataset sizes remain insufficient to train machine learning models.

On the Use of Deep Learning Models for Semantic Clone Detection

Dec 19, 2024

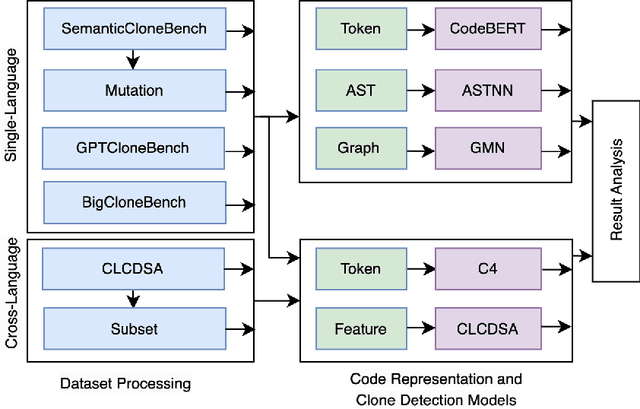

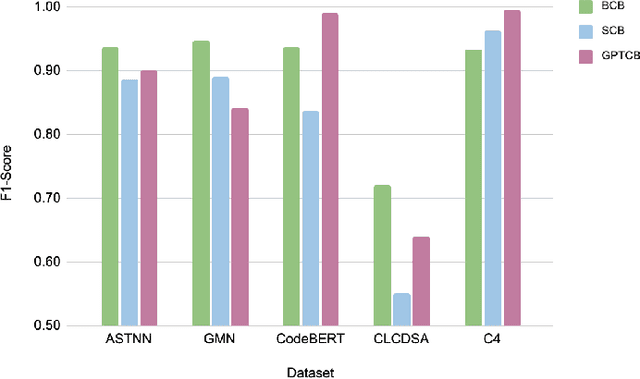

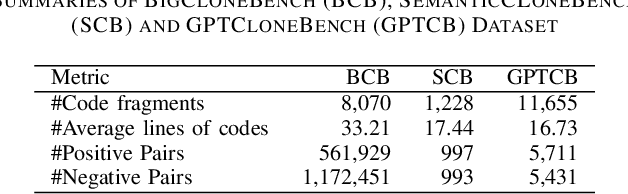

Abstract:Detecting and tracking code clones can ease various software development and maintenance tasks when changes in a code fragment should be propagated over all its copies. Several deep learning-based clone detection models have appeared in the literature for detecting syntactic and semantic clones, widely evaluated with the BigCloneBench dataset. However, class imbalance and the small number of semantic clones make BigCloneBench less ideal for interpreting model performance. Researchers also use other datasets such as GoogleCodeJam, OJClone, and SemanticCloneBench to understand model generalizability. To overcome the limitations of existing datasets, the GPT-assisted semantic and cross-language clone dataset GPTCloneBench has been released. However, how these models compare across datasets remains unclear. In this paper, we propose a multi-step evaluation approach for five state-of-the-art clone detection models leveraging existing benchmark datasets, including GPTCloneBench, and using mutation operators to study model ability. Specifically, we examine three highly-performing single-language models (ASTNN, GMN, CodeBERT) on BigCloneBench, SemanticCloneBench, and GPTCloneBench, testing their robustness with mutation operations. Additionally, we compare them against cross-language models (C4, CLCDSA) known for detecting semantic clones. While single-language models show high F1 scores for BigCloneBench, their performance on SemanticCloneBench varies (up to 20%). Interestingly, the cross-language model (C4) shows superior performance (around 7%) on SemanticCloneBench over other models and performs similarly on BigCloneBench and GPTCloneBench. On mutation-based datasets, C4 has more robust performance (less than 1% difference) compared to single-language models, which show high variability.

Quantum vs. Classical Machine Learning Algorithms for Software Defect Prediction: Challenges and Opportunities

Dec 10, 2024Abstract:Software defect prediction is a critical aspect of software quality assurance, as it enables early identification and mitigation of defects, thereby reducing the cost and impact of software failures. Over the past few years, quantum computing has risen as an exciting technology capable of transforming multiple domains; Quantum Machine Learning (QML) is one of them. QML algorithms harness the power of quantum computing to solve complex problems with better efficiency and effectiveness than their classical counterparts. However, research into its application in software engineering to predict software defects still needs to be explored. In this study, we worked to fill the research gap by comparing the performance of three QML and five classical machine learning (CML) algorithms on the 20 software defect datasets. Our investigation reports the comparative scenarios of QML vs. CML algorithms and identifies the better-performing and consistent algorithms to predict software defects. We also highlight the challenges and future directions of employing QML algorithms in real software defect datasets based on the experience we faced while performing this investigation. The findings of this study can help practitioners and researchers further progress in this research domain by making software systems reliable and bug-free.

Evaluating the Performance of a D-Wave Quantum Annealing System for Feature Subset Selection in Software Defect Prediction

Oct 21, 2024

Abstract:Predicting software defects early in the development process not only enhances the quality and reliability of the software but also decreases the cost of development. A wide range of machine learning techniques can be employed to create software defect prediction models, but the effectiveness and accuracy of these models are often influenced by the choice of appropriate feature subset. Since finding the optimal feature subset is computationally intensive, heuristic and metaheuristic approaches are commonly employed to identify near-optimal solutions within a reasonable time frame. Recently, the quantum computing paradigm quantum annealing (QA) has been deployed to find solutions to complex optimization problems. This opens up the possibility of addressing the feature subset selection problem with a QA machine. Although several strategies have been proposed for feature subset selection using a QA machine, little exploration has been done regarding the viability of a QA machine for feature subset selection in software defect prediction. This study investigates the potential of D-Wave QA system for this task, where we formulate a mutual information (MI)-based filter approach as an optimization problem and utilize a D-Wave Quantum Processing Unit (QPU) solver as a QA solver for feature subset selection. We evaluate the performance of this approach using multiple software defect datasets from the AEEM, JIRA, and NASA projects. We also utilize a D-Wave classical solver for comparative analysis. Our experimental results demonstrate that QA-based feature subset selection can enhance software defect prediction. Although the D-Wave QPU solver exhibits competitive prediction performance with the classical solver in software defect prediction, it significantly reduces the time required to identify the best feature subset compared to its classical counterpart.

Unveiling the potential of large language models in generating semantic and cross-language clones

Sep 12, 2023

Abstract:Semantic and Cross-language code clone generation may be useful for code reuse, code comprehension, refactoring and benchmarking. OpenAI's GPT model has potential in such clone generation as GPT is used for text generation. When developers copy/paste codes from Stack Overflow (SO) or within a system, there might be inconsistent changes leading to unexpected behaviours. Similarly, if someone possesses a code snippet in a particular programming language but seeks equivalent functionality in a different language, a semantic cross-language code clone generation approach could provide valuable assistance. In this study, using SemanticCloneBench as a vehicle, we evaluated how well the GPT-3 model could help generate semantic and cross-language clone variants for a given fragment.We have comprised a diverse set of code fragments and assessed GPT-3s performance in generating code variants.Through extensive experimentation and analysis, where 9 judges spent 158 hours to validate, we investigate the model's ability to produce accurate and semantically correct variants. Our findings shed light on GPT-3's strengths in code generation, offering insights into the potential applications and challenges of using advanced language models in software development. Our quantitative analysis yields compelling results. In the realm of semantic clones, GPT-3 attains an impressive accuracy of 62.14% and 0.55 BLEU score, achieved through few-shot prompt engineering. Furthermore, the model shines in transcending linguistic confines, boasting an exceptional 91.25% accuracy in generating cross-language clones

Leveraging Structural Properties of Source Code Graphs for Just-In-Time Bug Prediction

Jan 25, 2022

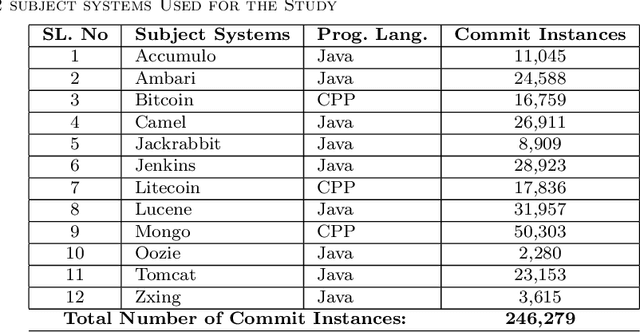

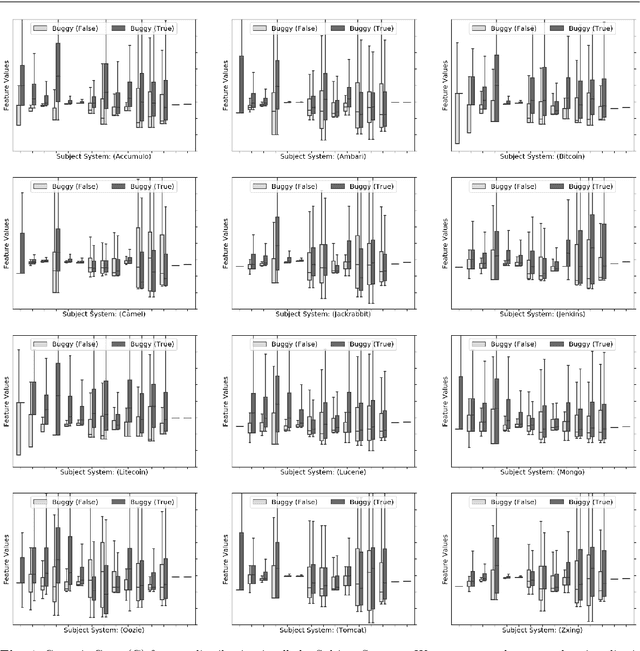

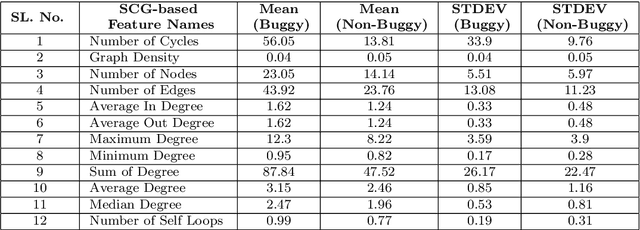

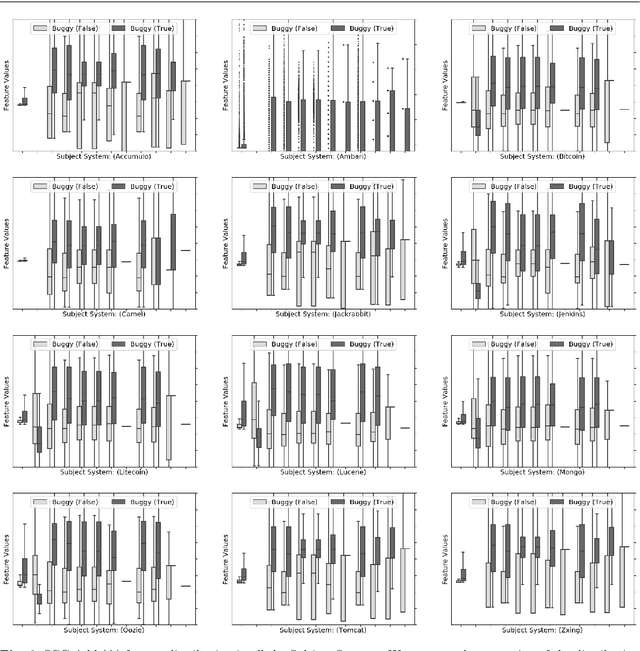

Abstract:The most common use of data visualization is to minimize the complexity for proper understanding. A graph is one of the most commonly used representations for understanding relational data. It produces a simplified representation of data that is challenging to comprehend if kept in a textual format. In this study, we propose a methodology to utilize the relational properties of source code in the form of a graph to identify Just-in-Time (JIT) bug prediction in software systems during different revisions of software evolution and maintenance. We presented a method to convert the source codes of commit patches to equivalent graph representations and named it Source Code Graph (SCG). To understand and compare multiple source code graphs, we extracted several structural properties of these graphs, such as the density, number of cycles, nodes, edges, etc. We then utilized the attribute values of those SCGs to visualize and detect buggy software commits. We process more than 246K software commits from 12 subject systems in this investigation. Our investigation on these 12 open-source software projects written in C++ and Java programming languages shows that if we combine the features from SCG with conventional features used in similar studies, we will get the increased performance of Machine Learning (ML) based buggy commit detection models. We also find the increase of F1~Scores in predicting buggy and non-buggy commits statistically significant using the Wilcoxon Signed Rank Test. Since SCG-based feature values represent the style or structural properties of source code updates or changes in the software system, it suggests the importance of careful maintenance of source code style or structure for keeping a software system bug-free.

A Systematic Literature Review of Automated Query Reformulations in Source Code Search

Aug 22, 2021

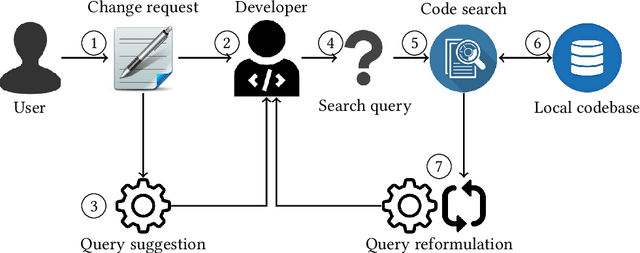

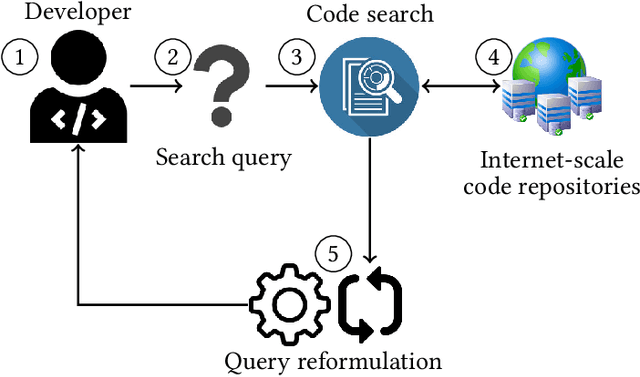

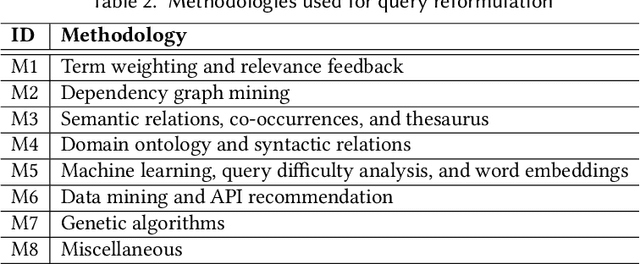

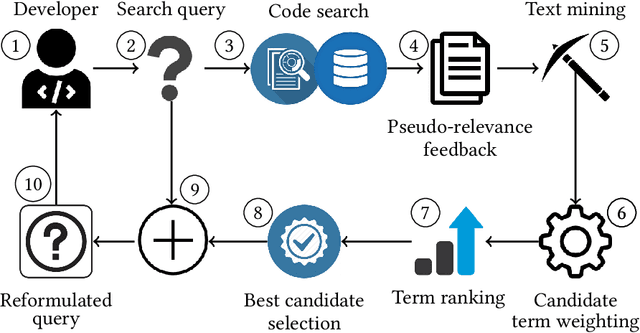

Abstract:Software developers often fix critical bugs to ensure the reliability of their software. They might also need to add new features to their software at a regular interval to stay competitive in the market. These bugs and features are reported as change requests (i.e., technical documents written by software users). Developers consult these documents to implement the required changes in the software code. As a part of change implementation, they often choose a few important keywords from a change request as an ad hoc query. Then they execute the query with a code search engine (e.g., Lucene) and attempt to find out the exact locations within the software code that need to be changed. Unfortunately, even experienced developers often fail to choose the right queries. As a consequence, the developers often experience difficulties in detecting the appropriate locations within the code and spend the majority of their time in numerous trials and errors. There have been many studies that attempt to support developers in constructing queries by automatically reformulating their ad hoc queries. In this systematic literature review, we carefully select 70 primary studies on query reformulations from 2,970 candidate studies, perform an in-depth qualitative analysis using the Grounded Theory approach, and then answer six important research questions. Our investigation has reported several major findings. First, to date, eight major methodologies (e.g., term weighting, query-term co-occurrence analysis, thesaurus lookup) have been adopted in query reformulation. Second, the existing studies suffer from several major limitations (e.g., lack of generalizability, vocabulary mismatch problem, weak evaluation, the extra burden on the developers) that might prevent their wide adoption. Finally, we discuss several open issues in search query reformulations and suggest multiple future research opportunities.

The Forgotten Role of Search Queries in IR-based Bug Localization: An Empirical Study

Aug 11, 2021

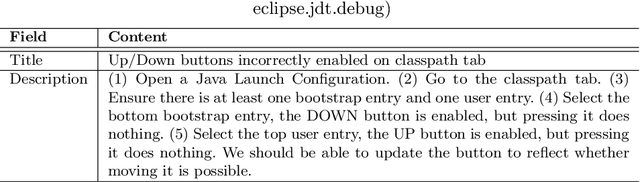

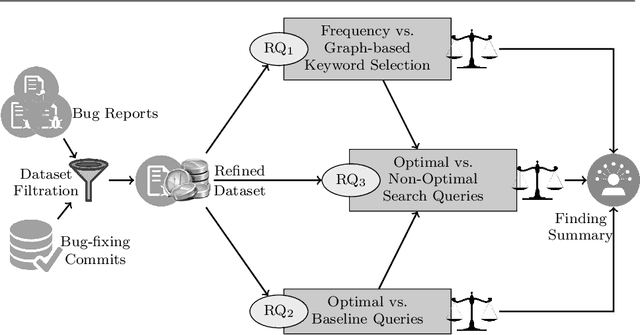

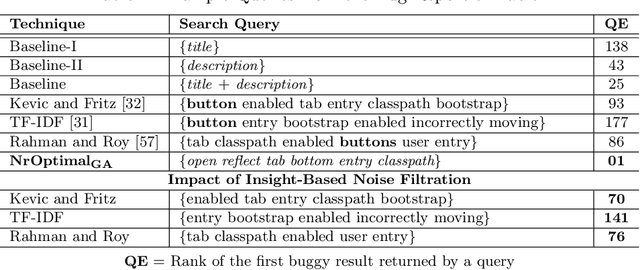

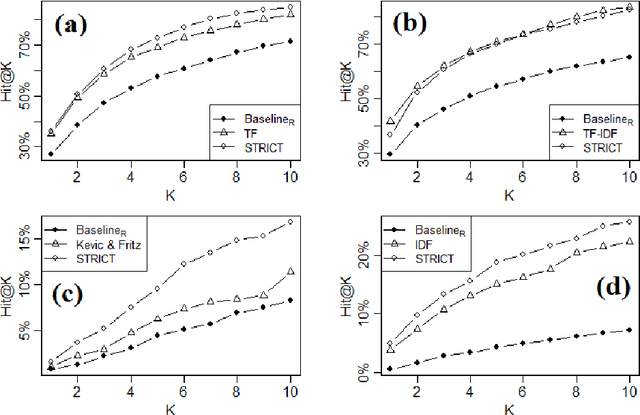

Abstract:Being light-weight and cost-effective, IR-based approaches for bug localization have shown promise in finding software bugs. However, the accuracy of these approaches heavily depends on their used bug reports. A significant number of bug reports contain only plain natural language texts. According to existing studies, IR-based approaches cannot perform well when they use these bug reports as search queries. On the other hand, there is a piece of recent evidence that suggests that even these natural language-only reports contain enough good keywords that could help localize the bugs successfully. On one hand, these findings suggest that natural language-only bug reports might be a sufficient source for good query keywords. On the other hand, they cast serious doubt on the query selection practices in the IR-based bug localization. In this article, we attempted to clear the sky on this aspect by conducting an in-depth empirical study that critically examines the state-of-the-art query selection practices in IR-based bug localization. In particular, we use a dataset of 2,320 bug reports, employ ten existing approaches from the literature, exploit the Genetic Algorithm-based approach to construct optimal, near-optimal search queries from these bug reports, and then answer three research questions. We confirmed that the state-of-the-art query construction approaches are indeed not sufficient for constructing appropriate queries (for bug localization) from certain natural language-only bug reports although they contain such queries. We also demonstrate that optimal queries and non-optimal queries chosen from bug report texts are significantly different in terms of several keyword characteristics, which has led us to actionable insights. Furthermore, we demonstrate 27%--34% improvement in the performance of non-optimal queries through the application of our actionable insights to them.

* 57 pages

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge