Carola Winzen

Black-Box Complexity: Breaking the $O(n \log n)$ Barrier of LeadingOnes

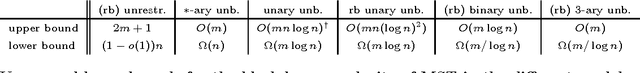

Oct 24, 2012

Abstract: We show that the unrestricted black-box complexity of the $n$-dimensional XOR- and permutation-invariant LeadingOnes function class is $O(n \log (n) / \log \log n)$. This shows that the recent natural-looking $O(n\log n)$ bound is not tight. The black-box optimization algorithm leading to this bound can be implemented in a way that only 3-ary unbiased variation operators are used. Hence our bound is also valid for the unbiased black-box complexity recently introduced by Lehre and Witt (GECCO 2010). The bound also remains valid if we impose the additional restriction that the black-box algorithm does not have access to the objective values but only to their relative order (ranking-based black-box complexity).
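To make the function class concrete, here is a minimal Python sketch of one common way to define the XOR- and permutation-invariant LeadingOnes class: each member $f_{z,\sigma}$ counts how many positions of $x$ agree with a hidden target $z$ when read in the hidden order $\sigma$, stopping at the first disagreement. The names `make_leading_ones` and `random_instance` are illustrative and do not come from the paper.

```python
import random


def make_leading_ones(z, sigma):
    """Return f_{z,sigma} for a hidden target z and a hidden position order sigma.

    f(x) is the number of positions, visited in the order sigma, on which x
    agrees with z before the first disagreement.
    """
    def f(x):
        count = 0
        for pos in sigma:
            if x[pos] != z[pos]:
                break
            count += 1
        return count
    return f


def random_instance(n, rng):
    """Draw a random target bit string and a random permutation of positions."""
    z = [rng.randint(0, 1) for _ in range(n)]
    sigma = list(range(n))
    rng.shuffle(sigma)
    return z, sigma


if __name__ == "__main__":
    z, sigma = random_instance(8, random.Random(0))
    f = make_leading_ones(z, sigma)
    print(f(z))  # the hidden target itself attains the maximum value n
```

A black-box algorithm only sees the values `f(x)` for the points it queries, never `z` or `sigma`; in the ranking-based model it would only see how these values compare to each other.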

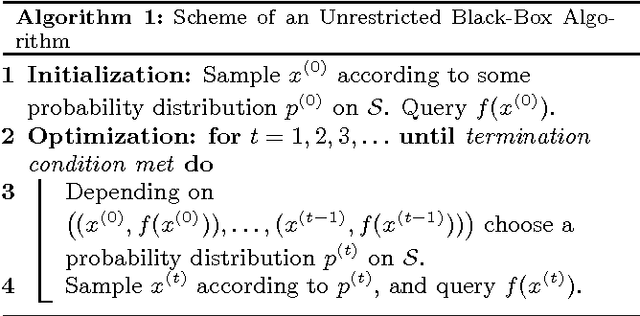

Ranking-Based Black-Box Complexity

Sep 03, 2012

Abstract: Randomized search heuristics such as evolutionary algorithms, simulated annealing, and ant colony optimization are a broadly used class of general-purpose algorithms. Analyzing them via classical methods of theoretical computer science is a growing field. While several strong runtime analysis results have appeared in the last 20 years, a powerful complexity theory for such algorithms is yet to be developed. We enrich the existing notions of black-box complexity by the additional restriction that not the actual objective values, but only the relative quality of the previously evaluated solutions may be taken into account by the black-box algorithm. Many randomized search heuristics belong to this class of algorithms. We show that the new ranking-based model gives more realistic complexity estimates for some problems. For example, the class of all binary-value functions has a black-box complexity of $O(\log n)$ in the previous black-box models, but has a ranking-based complexity of $\Theta(n)$. For the class of all OneMax functions, we present a ranking-based black-box algorithm that has a runtime of $\Theta(n / \log n)$, which shows that the OneMax problem does not become harder with the additional ranking-basedness restriction.

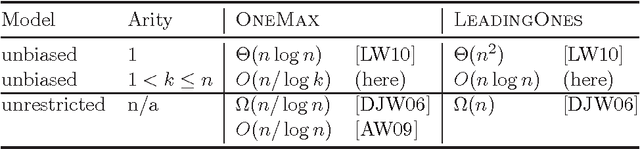

Reducing the Arity in Unbiased Black-Box Complexity

Mar 19, 2012

Abstract: We show that for all $1<k \leq \log n$ the $k$-ary unbiased black-box complexity of the $n$-dimensional OneMax function class is $O(n/k)$. This indicates that the power of higher arity operators is much stronger than what the previous $O(n/\log k)$ bound by Doerr et al. (Faster black-box algorithms through higher arity operators, Proc. of FOGA 2011, pp. 163-172, ACM, 2011) suggests. The key to this result is an encoding strategy, which might be of independent interest. We show that, using $k$-ary unbiased variation operators only, we may simulate an unrestricted memory of size $O(2^k)$ bits.

Playing Mastermind With Constant-Size Memory

Oct 17, 2011

Abstract: We analyze the classic board game of Mastermind with $n$ holes and a constant number of colors. A result of Chvátal (Combinatorica 3 (1983), 325-329) states that the codebreaker can find the secret code with $\Theta(n / \log n)$ questions. We show that this bound remains valid if the codebreaker may only store a constant number of guesses and answers. In addition to an intrinsic interest in this question, our result also disproves a conjecture of Droste, Jansen, and Wegener (Theory of Computing Systems 39 (2006), 525-544) on the memory-restricted black-box complexity of the OneMax function class.

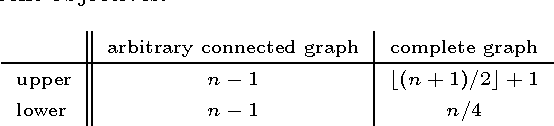

Black-Box Complexities of Combinatorial Problems

Aug 01, 2011

Abstract: Black-box complexity is a complexity-theoretic measure of how difficult a problem is to optimize with a general-purpose optimization algorithm. It is thus one of the few means of understanding which problems are tractable for genetic algorithms and other randomized search heuristics. Most previous work on black-box complexity is on artificial test functions. In this paper, we move a step forward and give a detailed analysis of two combinatorial problems: minimum spanning tree and single-source shortest paths. Besides giving interesting bounds for their black-box complexities, our work reveals that the choice of how to model the optimization problem is non-trivial here. This is particularly true where the search space does not consist of bit strings and where a reasonable definition of unbiasedness has to be agreed on.

Multiplicative Drift Analysis

Jan 04, 2011

Abstract: In this work, we introduce multiplicative drift analysis as a suitable way to analyze the runtime of randomized search heuristics such as evolutionary algorithms. We give a multiplicative version of the classical drift theorem. This allows easier analyses in those settings where the optimization progress is roughly proportional to the current distance to the optimum. To display the strength of this tool, we regard the classical problem of how the (1+1) Evolutionary Algorithm optimizes an arbitrary linear pseudo-Boolean function. Here, we first give a relatively simple proof for the fact that any linear function is optimized in expected time $O(n \log n)$, where $n$ is the length of the bit string. Afterwards, we show that in fact any such function is optimized in expected time at most $(1+o(1)) \, 1.39 \, e n \ln(n)$, again using multiplicative drift analysis. We also prove a corresponding lower bound of $(1-o(1)) \, e n \ln(n)$, which actually holds for all functions with a unique global optimum. We further demonstrate how our drift theorem immediately gives natural proofs (with better constants) for the best known runtime bounds for the (1+1) Evolutionary Algorithm on combinatorial problems like finding minimum spanning trees, shortest paths, or Euler tours.
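For orientation, the multiplicative drift theorem is commonly stated as follows; the notation ($X_t$, $s_{\min}$, $\delta$, $T$) is chosen here for illustration and may differ from the paper's. Let $(X_t)_{t \ge 0}$ be random variables taking values in $\{0\} \cup [s_{\min}, \infty)$ with $s_{\min} > 0$, and let $T$ be the first time $t$ with $X_t = 0$. If there is a $\delta > 0$ such that the expected progress is at least a $\delta$ fraction of the current distance, then the expected hitting time is logarithmic in the initial distance:

$$
E[X_t - X_{t+1} \mid X_t = s] \ge \delta s \ \text{ for all } s
\quad \Longrightarrow \quad
E[T \mid X_0 = s_0] \le \frac{1 + \ln(s_0 / s_{\min})}{\delta}.
$$

As a worked instance under standard assumptions, take the (1+1) Evolutionary Algorithm with mutation probability $1/n$ on OneMax and let $X_t$ be the number of zero bits. Each of the $s$ zero bits is flipped alone with probability $\frac{1}{n}\left(1 - \frac{1}{n}\right)^{n-1} \ge \frac{1}{en}$, so the drift is at least $\frac{s}{en}$. With $\delta = \frac{1}{en}$, $s_{\min} = 1$, and $s_0 \le n$, the theorem gives $E[T] \le en(1 + \ln n)$.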

* Contains results from our GECCO 2010 and CEC 2010 conference papers

Faster Black-Box Algorithms Through Higher Arity Operators

Dec 04, 2010

Abstract: We extend the work of Lehre and Witt (GECCO 2010) on the unbiased black-box model by considering higher arity variation operators. In particular, we show that already for binary operators the black-box complexity of LeadingOnes drops from $\Theta(n^2)$ for unary operators to $O(n \log n)$. For OneMax, the $\Omega(n \log n)$ unary black-box complexity drops to $O(n)$ in the binary case. For $k$-ary operators, $k \leq n$, the OneMax complexity further decreases to $O(n/\log k)$.

Non-Existence of Linear Universal Drift Functions

Nov 15, 2010

Abstract: Drift analysis has become a powerful tool to prove bounds on the runtime of randomized search heuristics. It allows, for example, fairly simple proofs for the classical problem of how the (1+1) Evolutionary Algorithm (EA) optimizes an arbitrary pseudo-Boolean linear function. The key idea of drift analysis is to measure the progress via another pseudo-Boolean function (called drift function) and use deeper results from probability theory to derive from this a good bound for the runtime of the EA. Surprisingly, all these results manage to use the same drift function for all linear objective functions. In this work, we show that such universal drift functions only exist if the mutation probability is close to the standard value of $1/n$.

Optimizing Monotone Functions Can Be Difficult

Oct 14, 2010

Abstract: Extending previous analyses on function classes like linear functions, we analyze how the simple (1+1) evolutionary algorithm optimizes pseudo-Boolean functions that are strictly monotone. Contrary to what one would expect, not all of these functions are easy to optimize. The choice of the constant $c$ in the mutation probability $p(n) = c/n$ can make a decisive difference. We show that if $c < 1$, then the (1+1) evolutionary algorithm finds the optimum of every such function in $\Theta(n \log n)$ iterations. For $c=1$, we can still prove an upper bound of $O(n^{3/2})$. However, for $c > 33$, we present a strictly monotone function such that the (1+1) evolutionary algorithm with overwhelming probability does not find the optimum within $2^{\Omega(n)}$ iterations. This is the first time that we observe that a constant factor change of the mutation probability changes the run-time by more than constant factors.
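The role of the constant $c$ is easiest to see in code. The following is a minimal sketch of the textbook (1+1) evolutionary algorithm with mutation probability $c/n$, maximizing a pseudo-Boolean function `f`; the function name `one_plus_one_ea`, the fixed iteration budget, and the acceptance of equally good offspring are assumptions made for illustration, not details taken from the paper.

```python
import random


def one_plus_one_ea(f, n, c=1.0, max_iters=100_000, rng=None):
    """Run a (1+1) EA that maximizes f over bit strings of length n.

    Each bit of the parent is flipped independently with probability c / n.
    The offspring replaces the parent if it is at least as good.
    Returns the final search point and its fitness.
    """
    rng = rng or random.Random()
    p = c / n
    x = [rng.randint(0, 1) for _ in range(n)]
    fx = f(x)
    for _ in range(max_iters):
        # Standard bit mutation: flip each bit independently with probability p.
        y = [1 - b if rng.random() < p else b for b in x]
        fy = f(y)
        if fy >= fx:
            x, fx = y, fy
    return x, fx


if __name__ == "__main__":
    # OneMax (the number of one bits) is a strictly monotone function.
    best, value = one_plus_one_ea(sum, n=50, c=1.0, rng=random.Random(42))
    print(value)
```

Changing `c` only rescales the per-bit flip probability, which is why the abstract's result is notable: on suitably constructed strictly monotone functions, this constant alone separates polynomial from exponential runtime.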
