Bryce Goodman

xView3-SAR: Detecting Dark Fishing Activity Using Synthetic Aperture Imagery

Jun 02, 2022

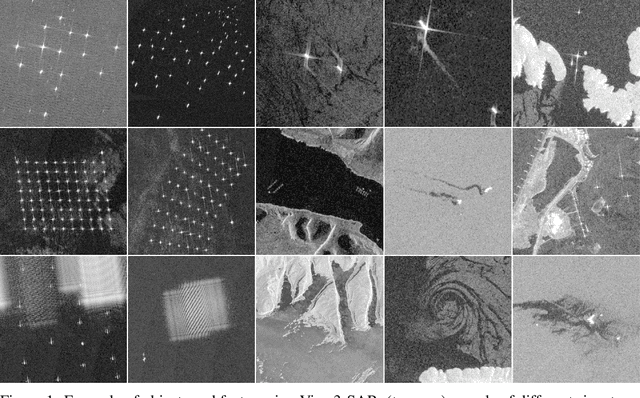

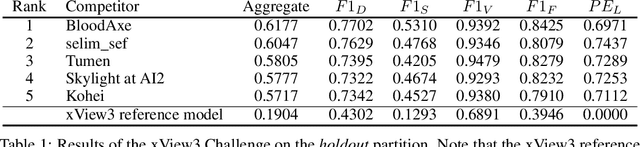

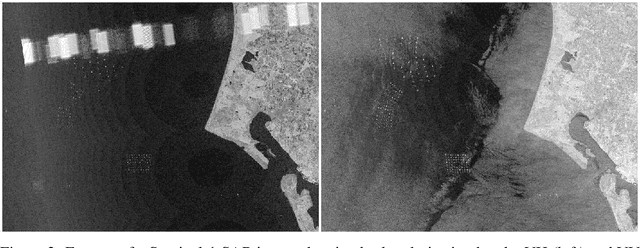

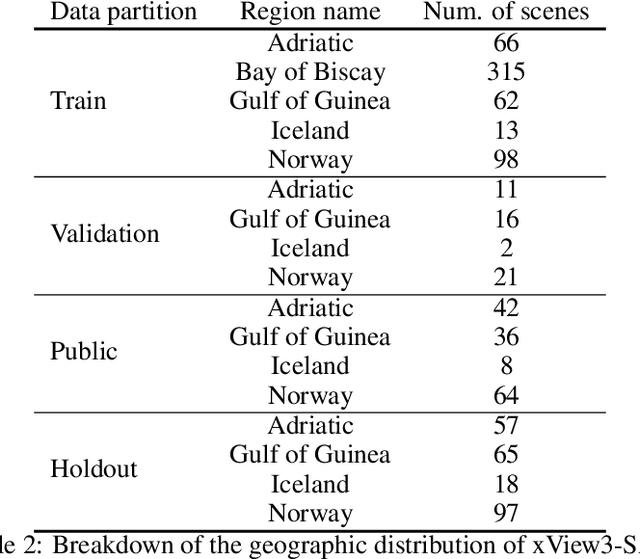

Abstract: Unsustainable fishing practices worldwide pose a major threat to marine resources and ecosystems. Identifying vessels that evade monitoring systems -- known as "dark vessels" -- is key to managing and securing the health of marine environments. With the rise of satellite-based synthetic aperture radar (SAR) imaging and modern machine learning (ML), it is now possible to automate detection of dark vessels day or night, under all-weather conditions. SAR images, however, require domain-specific treatment and are not widely accessible to the ML community. Moreover, the objects (vessels) are small and sparse, challenging traditional computer vision approaches. We present the largest labeled dataset for training ML models to detect and characterize vessels from SAR. xView3-SAR consists of nearly 1,000 analysis-ready SAR images from the Sentinel-1 mission that are, on average, 29,400-by-24,400 pixels each. The images are annotated using a combination of automated and manual analysis. Co-located bathymetry and wind state rasters accompany every SAR image. We provide an overview of the results from the xView3 Computer Vision Challenge, an international competition using xView3-SAR for ship detection and characterization at large scale. We release the data (https://iuu.xview.us/) and code (https://github.com/DIUx-xView) to support ongoing development and evaluation of ML approaches for this important application.

Hard Choices and Hard Limits for Artificial Intelligence

May 04, 2021

Abstract: Artificial intelligence (AI) is supposed to help us make better choices. Some of these choices are small, like what route to take to work, or what music to listen to. Others are big, like what treatment to administer for a disease or how long to sentence someone for a crime. If AI can assist with these big decisions, we might think it can also help with hard choices, cases where alternatives are neither better, worse nor equal but on a par. The aim of this paper, however, is to show that this view is mistaken: the fact of parity shows that there are hard limits on AI in decision making and choices that AI cannot, and should not, resolve.

xBD: A Dataset for Assessing Building Damage from Satellite Imagery

Nov 21, 2019

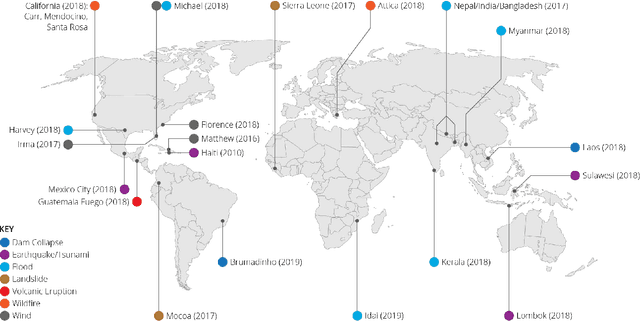

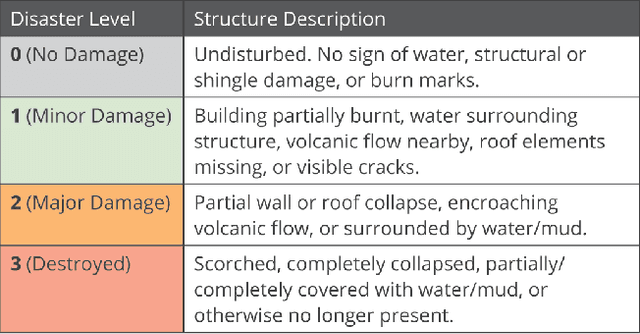

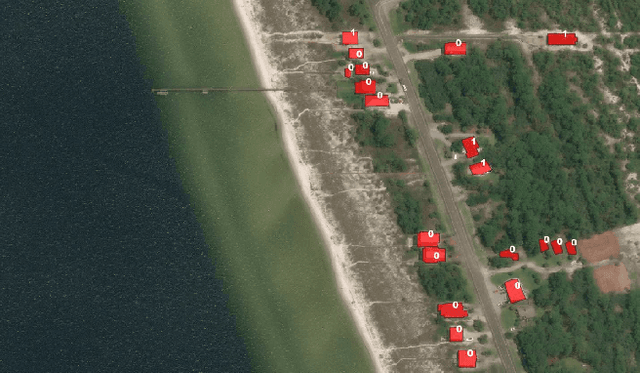

Abstract: We present xBD, a new, large-scale dataset for the advancement of change detection and building damage assessment for humanitarian assistance and disaster recovery research. Natural disaster response requires an accurate understanding of damaged buildings in an affected region. Current response strategies require in-person damage assessments within 24-48 hours of a disaster. Massive potential exists for using aerial imagery combined with computer vision algorithms to assess damage and reduce the potential danger to human life. In collaboration with multiple disaster response agencies, xBD provides pre- and post-event satellite imagery across a variety of disaster events with building polygons, ordinal labels of damage level, and corresponding satellite metadata. Furthermore, the dataset contains bounding boxes and labels for environmental factors such as fire, water, and smoke. xBD is the largest building damage assessment dataset to date, containing 850,736 building annotations across 45,362 km² of imagery.

European Union regulations on algorithmic decision-making and a "right to explanation"

Aug 31, 2016

Abstract: We summarize the potential impact that the European Union's new General Data Protection Regulation will have on the routine use of machine learning algorithms. Slated to take effect as law across the EU in 2018, it will restrict automated individual decision-making (that is, algorithms that make decisions based on user-level predictors) which "significantly affect" users. The law will also effectively create a "right to explanation," whereby a user can ask for an explanation of an algorithmic decision that was made about them. We argue that while this law will pose large challenges for industry, it highlights opportunities for computer scientists to take the lead in designing algorithms and evaluation frameworks which avoid discrimination and enable explanation.

* presented at 2016 ICML Workshop on Human Interpretability in Machine Learning (WHI 2016), New York, NY
