Boyan Zhang

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract:In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.
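
To make the idea of sparse, modality-agnostic expert routing concrete, here is a minimal sketch of generic top-k MoE routing. It is not taken from the ERNIE 5.0 report: the function name `top_k_route`, the logits, and the choice of softmax followed by renormalisation are all assumptions made for illustration.

```python
import math

def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Hypothetical helper: a generic top-k router, not ERNIE 5.0's actual one.
    """
    # Softmax over all experts (subtract the max for numerical stability).
    m = max(router_logits)
    exps = [math.exp(x - m) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep the k most probable experts and renormalise their weights.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

# The same router is applied to every token, whatever its modality.
text_token = top_k_route([2.0, 0.1, -1.0, 0.5], k=2)    # made-up logits
image_token = top_k_route([-0.3, 1.7, 1.9, 0.0], k=2)   # made-up logits
```

In a sketch like this, lowering `k` is one plausible way to trade quality for latency; whether the elastic sub-models vary routing sparsity in exactly this way is not stated in the abstract.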

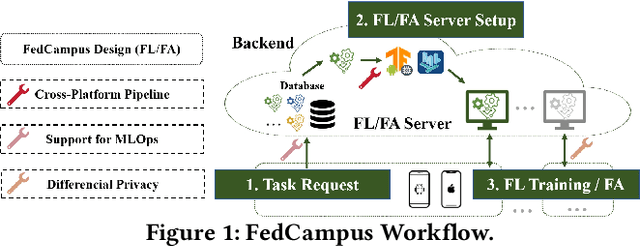

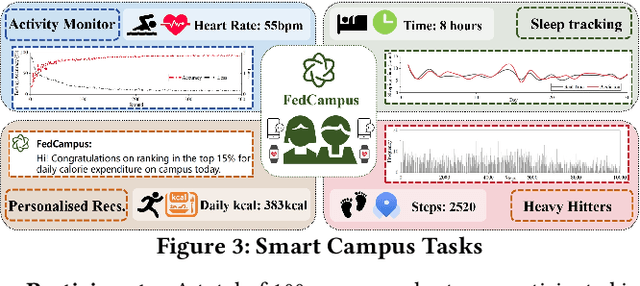

Demo: FedCampus: A Real-world Privacy-preserving Mobile Application for Smart Campus via Federated Learning & Analytics

Aug 31, 2024

Abstract:In this demo, we introduce FedCampus, a privacy-preserving mobile application for smart campus with federated learning (FL) and federated analytics (FA). FedCampus enables cross-platform on-device FL/FA for both iOS and Android, supporting continuous deployment of models and algorithms (MLOps). Our app integrates smartwatch data that has been processed with differential privacy (DP), and the processed parameters are used for FL/FA through the FedCampus backend platform. We distributed 100 smartwatches to volunteers at Duke Kunshan University and have successfully completed a series of smart campus tasks featuring capabilities such as sleep tracking, physical activity monitoring, personalized recommendations, and heavy hitters. Our project is open-sourced at https://github.com/FedCampus/FedCampus_Flutter. See the FedCampus video at https://youtu.be/k5iu46IjA38.
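
As an illustration of how a smartwatch reading could be made differentially private before it leaves the device, the sketch below applies the standard Laplace mechanism to a clipped value. The abstract does not say which DP mechanism FedCampus uses, so the mechanism, the function names, the clipping bounds, and the epsilon value are all assumptions.

```python
import random

def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def privatize(value, lower, upper, epsilon, rng):
    """Clip a reading to [lower, upper] and add Laplace noise (epsilon-DP).

    Hypothetical helper, not part of the FedCampus code base.
    """
    clipped = min(max(value, lower), upper)
    sensitivity = upper - lower          # worst-case change of one clipped value
    return clipped + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)
# Example: hours of sleep, assumed to lie in [0, 12], with epsilon = 1.0.
noisy_sleep_hours = privatize(7.5, 0.0, 12.0, epsilon=1.0, rng=rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy; aggregates over many devices remain useful because the noise has zero mean.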

FedKit: Enabling Cross-Platform Federated Learning for Android and iOS

Feb 16, 2024

Abstract:We present FedKit, a federated learning (FL) system tailored for cross-platform FL research on Android and iOS devices. FedKit pipelines cross-platform FL development by enabling model conversion, hardware-accelerated training, and cross-platform model aggregation. Our FL workflow supports flexible machine learning operations (MLOps) in production, facilitating continuous model delivery and training. We have deployed FedKit in a real-world use case for health data analysis on university campuses, demonstrating its effectiveness. FedKit is open-source at https://github.com/FedCampus/FedKit.
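
Cross-platform model aggregation can be pictured as a server averaging weight vectors that arrive from Android and iOS clients in a common format. The sketch below uses sample-weighted federated averaging (FedAvg); the abstract does not name FedKit's aggregation rule, so FedAvg, the function name, and the toy numbers are assumptions.

```python
def federated_average(client_updates):
    """Sample-weighted mean of client weight vectors (FedAvg-style).

    client_updates: list of (weights, num_samples), where weights is a flat
    list of floats. Hypothetical helper, not FedKit's API.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for weights, n in client_updates:
        for j, w in enumerate(weights):
            avg[j] += w * n / total
    return avg

# Toy updates: one Android client with 30 samples, one iOS client with 10.
android = ([1.0, 2.0], 30)
ios = ([3.0, 6.0], 10)
global_weights = federated_average([android, ios])
```

Because the server only sees flat weight vectors and sample counts, it does not need to know which platform produced an update, which is what makes a shared model format useful.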

IntersectGAN: Learning Domain Intersection for Generating Images with Multiple Attributes

Oct 03, 2019

Abstract:Generative adversarial networks (GANs) have demonstrated great success in generating various visual content. However, images generated by existing GANs often carry attributes (e.g., smiling expression) learned from a single image domain. As a result, generating images with multiple attributes requires many real samples possessing all of those attributes, which are very expensive to collect. In this paper, we propose a novel GAN, namely IntersectGAN, to learn multiple attributes from different image domains through an intersecting architecture. For example, given two image domains $X_1$ and $X_2$ with certain attributes, the intersection $X_1 \cap X_2$ denotes a new domain where images possess the attributes from both $X_1$ and $X_2$ domains. The proposed IntersectGAN consists of two discriminators $D_1$ and $D_2$ to distinguish between generated and real samples of different domains, and three generators where the intersection generator is trained against both discriminators. An overall adversarial loss function is defined over the three generators. As a result, our proposed IntersectGAN can be trained on multiple domains, each of which presents one specific attribute, and eventually eliminates the need for real sample images simultaneously possessing multiple attributes. Using the CelebFaces Attributes dataset, our proposed IntersectGAN is able to produce high-quality face images possessing multiple attributes (e.g., a face with black hair and a smiling expression). Both qualitative and quantitative evaluations are conducted to compare our proposed IntersectGAN with other baseline methods. In addition, several different applications of IntersectGAN have been explored with promising results.
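
The statement that the intersection generator is trained against both discriminators can be sketched with the common non-saturating generator loss. The abstract does not give the paper's exact loss, so the loss form, the plain sum used as the overall objective, and the discriminator scores below are all assumptions.

```python
import math

def generator_loss(d_scores):
    """Non-saturating generator loss: sum of -log D(G(z)) over the
    discriminators this generator has to fool (scores must lie in (0, 1))."""
    return sum(-math.log(s) for s in d_scores)

# Made-up discriminator outputs for one sample from each generator.
d1_on_g1 = 0.8                            # D_1 scoring the X_1 generator
d2_on_g2 = 0.5                            # D_2 scoring the X_2 generator
d1_on_gx, d2_on_gx = 0.6, 0.4             # both scoring the intersection generator

loss_g1 = generator_loss([d1_on_g1])
loss_g2 = generator_loss([d2_on_g2])
loss_intersect = generator_loss([d1_on_gx, d2_on_gx])

# Assumed overall objective: an unweighted sum over the three generators.
overall_generator_loss = loss_g1 + loss_g2 + loss_intersect
```

The intersection generator's loss is low only when both discriminators accept its sample, which is what pushes it toward images that carry both attributes.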

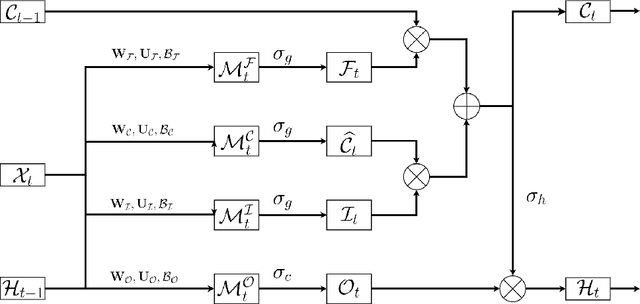

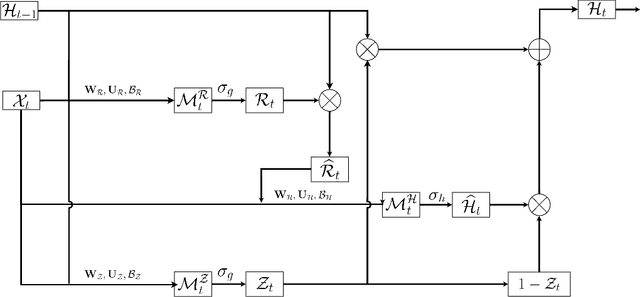

Tensorial Recurrent Neural Networks for Longitudinal Data Analysis

Aug 01, 2017

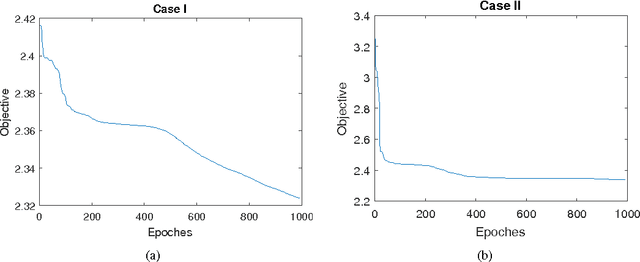

Abstract:Traditional Recurrent Neural Networks assume vectorized data as inputs. However, many data from modern science and technology come in structured forms, such as tensorial time series. To apply recurrent neural networks to this type of data, a vectorisation process is necessary, but such vectorisation loses the precise information of the spatial or longitudinal dimensions. In addition, such vectorized data is not an optimal input for learning the representation of longitudinal data. In this paper, we propose a new variant of tensorial neural networks which directly takes tensorial time series data as inputs. We call this new variant the Tensorial Recurrent Neural Network (TRNN). The proposed TRNN is based on tensor Tucker decomposition.
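
To show what taking tensorial inputs directly can look like, the sketch below runs a recurrent step on matrix-valued (2-way tensor) inputs using Tucker-style mode products, so the input is never flattened. The update rule, the names, and the sizes are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def trnn_step(x_t, h_prev, W1, W2, U1, U2, B):
    """One recurrent step on a matrix input.

    W1 @ x_t @ W2.T is the mode-1 and mode-2 product of x_t with the factor
    matrices W1 and W2. Hypothetical update rule, not the paper's exact TRNN.
    """
    return np.tanh(W1 @ x_t @ W2.T + U1 @ h_prev @ U2.T + B)

rng = np.random.default_rng(0)
I1, I2, J1, J2 = 4, 5, 2, 3               # input is I1 x I2, hidden state is J1 x J2
W1, W2 = rng.normal(size=(J1, I1)), rng.normal(size=(J2, I2))
U1, U2 = rng.normal(size=(J1, J1)), rng.normal(size=(J2, J2))
B = np.zeros((J1, J2))

h = np.zeros((J1, J2))
for x_t in rng.normal(size=(6, I1, I2)):  # six random time steps
    h = trnn_step(x_t, h, W1, W2, U1, U2, B)
```

With these sizes the input-to-hidden map needs 2·4 + 3·5 = 23 parameters, whereas a dense map on the flattened 20-dimensional input to a 6-dimensional hidden vector would need 120, and the row and column structure of each input is preserved.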
