Borjan Geshkovski

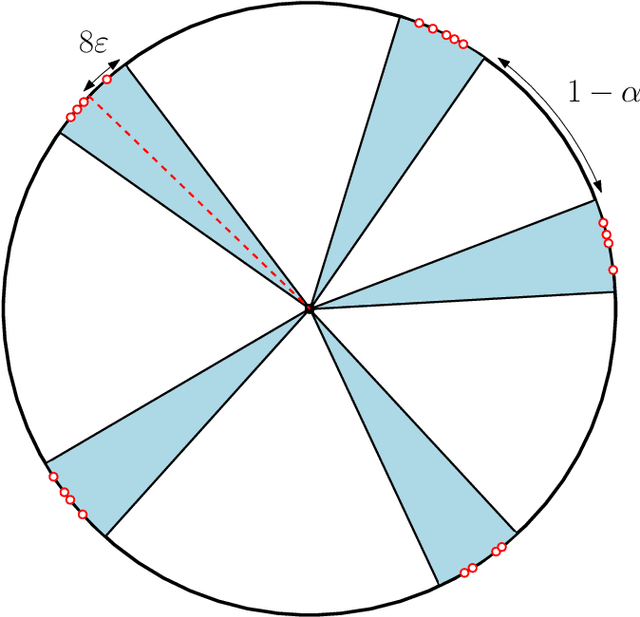

Perceptrons and localization of attention's mean-field landscape

Jan 29, 2026

Abstract:The forward pass of a Transformer can be seen as an interacting particle system on the unit sphere: time plays the role of layers, particles that of token embeddings, and the unit sphere idealizes layer normalization. In some weight settings the system can even be seen as a gradient flow for an explicit energy, and one can make sense of the infinite context length (mean-field) limit thanks to Wasserstein gradient flows. In this paper we study the effect of the perceptron block in this setting, and show that critical points are generically atomic and localized on subsets of the sphere.

Constructive approximate transport maps with normalizing flows

Dec 26, 2024

Abstract:We study an approximate controllability problem for the continuity equation and its application to constructing transport maps with normalizing flows. Specifically, we construct time-dependent controls $\theta=(w, a, b)$ in the vector field $w(a^\top x + b)_+$ to approximately transport a known base density $\rho_{\mathrm{B}}$ to a target density $\rho_*$. The approximation error is measured in relative entropy, and $\theta$ are constructed piecewise constant, with bounds on the number of switches being provided. Our main result relies on an assumption on the relative tail decay of $\rho_*$ and $\rho_{\mathrm{B}}$, and provides hints on characterizing the reachable space of the continuity equation in relative entropy.

On the number of modes of Gaussian kernel density estimators

Dec 12, 2024

Abstract:We consider the Gaussian kernel density estimator with bandwidth $\beta^{-\frac12}$ of $n$ iid Gaussian samples. Using the Kac-Rice formula and an Edgeworth expansion, we prove that the expected number of modes on the real line scales as $\Theta(\sqrt{\beta\log\beta})$ as $\beta,n\to\infty$ provided $n^c\lesssim \beta\lesssim n^{2-c}$ for some constant $c>0$. An impetus behind this investigation is to determine the number of clusters to which Transformers are drawn in a metastable state.
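As a rough numerical illustration of the quantity studied in this abstract, the sketch below counts the modes of a Gaussian kernel density estimator with bandwidth $\beta^{-\frac12}$ by locating local maxima on a uniform grid. It is only a sketch under stated assumptions and is not the paper's method, which relies on the Kac-Rice formula: the function name `count_kde_modes` and the parameters `grid_size` and `pad` are hypothetical, and modes narrower than the grid spacing would be missed.

```python
import numpy as np


def count_kde_modes(samples, beta, grid_size=4001, pad=1.0):
    """Count local maxima of a Gaussian KDE with bandwidth beta**-0.5.

    Hypothetical helper: the density is evaluated on a uniform grid and
    interior grid points that dominate both neighbours are counted.
    """
    samples = np.asarray(samples, dtype=float)
    grid = np.linspace(samples.min() - pad, samples.max() + pad, grid_size)
    # Unnormalized KDE: the constant factor does not change where the modes are.
    diffs = grid[:, None] - samples[None, :]
    density = np.exp(-0.5 * beta * diffs**2).sum(axis=1)
    interior = density[1:-1]
    # Strict on the left, non-strict on the right, so that a two-point
    # plateau at a peak is counted once and flat zero regions are ignored.
    is_peak = (interior > density[:-2]) & (interior >= density[2:])
    return int(is_peak.sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    data = rng.standard_normal(200)  # n iid Gaussian samples
    for beta in (1.0, 10.0, 100.0):
        print(beta, count_kde_modes(data, beta))
```

Averaging the count over many independent draws would give an empirical estimate of the expected number of modes, which could then be compared against the scaling stated in the abstract.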

Measure-to-measure interpolation using Transformers

Nov 07, 2024

Abstract:Transformers are deep neural network architectures that underpin the recent successes of large language models. Unlike more classical architectures that can be viewed as point-to-point maps, a Transformer acts as a measure-to-measure map implemented as a specific interacting particle system on the unit sphere: the input is the empirical measure of tokens in a prompt and its evolution is governed by the continuity equation. In fact, Transformers are not limited to empirical measures and can in principle process any input measure. As the nature of data processed by Transformers is expanding rapidly, it is important to investigate their expressive power as maps from an arbitrary measure to another arbitrary measure. To that end, we provide an explicit choice of parameters that allows a single Transformer to match $N$ arbitrary input measures to $N$ arbitrary target measures, under the minimal assumption that every pair of input-target measures can be matched by some transport map.

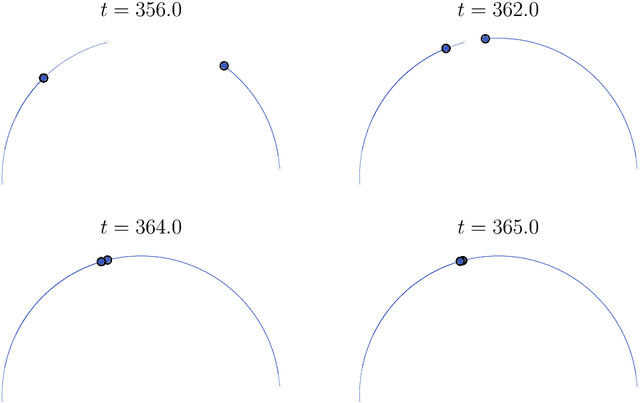

Dynamic metastability in the self-attention model

Oct 09, 2024

Abstract:We consider the self-attention model - an interacting particle system on the unit sphere, which serves as a toy model for Transformers, the deep neural network architecture behind the recent successes of large language models. We prove the appearance of dynamic metastability conjectured in [GLPR23] - although particles collapse to a single cluster in infinite time, they remain trapped near a configuration of several clusters for an exponentially long period of time. By leveraging a gradient flow interpretation of the system, we also connect our result to an overarching framework of slow motion of gradient flows proposed by Otto and Reznikoff [OR07] in the context of coarsening and the Allen-Cahn equation. We finally probe the dynamics beyond the exponentially long period of metastability, and illustrate that, under an appropriate time-rescaling, the energy reaches its global maximum in finite time and has a staircase profile, with trajectories manifesting saddle-to-saddle-like behavior, reminiscent of recent works in the analysis of training dynamics via gradient descent for two-layer neural networks.

A mathematical perspective on Transformers

Dec 22, 2023

Abstract:Transformers play a central role in the inner workings of large language models. We develop a mathematical framework for analyzing Transformers based on their interpretation as interacting particle systems, which reveals that clusters emerge in long time. Our study explores the underlying theory and offers new perspectives for mathematicians as well as computer scientists.
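To make the particle-system interpretation concrete, here is a minimal simulation sketch. It assumes a simplified self-attention model on the unit sphere with identity query, key and value matrices, $\dot{x}_i = P_{x_i}\big(\sum_j \mathrm{softmax}_j(\beta\langle x_i, x_j\rangle)\, x_j\big)$ with $P_x y = y - \langle x, y\rangle x$, discretized by explicit Euler steps followed by renormalization. The function name `simulate_self_attention` and the parameters `dt` and `steps` are hypothetical choices made for this illustration and are not taken from the abstract.

```python
import numpy as np


def simulate_self_attention(x0, beta=1.0, dt=0.05, steps=4000):
    """Euler scheme for a simplified self-attention flow on the unit sphere.

    Assumption: identity query/key/value matrices; rows of x0 are tokens.
    """
    x = x0 / np.linalg.norm(x0, axis=1, keepdims=True)
    for _ in range(steps):
        logits = beta * (x @ x.T)
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        weights = np.exp(logits)
        weights /= weights.sum(axis=1, keepdims=True)  # row-wise softmax
        drift = weights @ x
        # Project the drift onto the tangent space at each particle.
        drift -= np.sum(drift * x, axis=1, keepdims=True) * x
        x = x + dt * drift
        x /= np.linalg.norm(x, axis=1, keepdims=True)  # stay on the sphere
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Tokens started in the positive orthant, hence in a common hemisphere.
    tokens = np.abs(rng.standard_normal((8, 3)))
    final = simulate_self_attention(tokens)
    print("smallest pairwise inner product:", (final @ final.T).min())
```

In this run the smallest pairwise inner product should approach 1, meaning the tokens have merged into a single cluster. Larger values of `beta` can be expected to slow the merging considerably.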

The emergence of clusters in self-attention dynamics

May 17, 2023

Abstract:Viewing Transformers as interacting particle systems, we describe the geometry of learned representations when the weights are not time dependent. We show that particles, representing tokens, tend to cluster toward particular limiting objects as time tends to infinity. Cluster locations are determined by the initial tokens, confirming context-awareness of representations learned by Transformers. Using techniques from dynamical systems and partial differential equations, we show that the type of limiting object that emerges depends on the spectrum of the value matrix. Additionally, in the one-dimensional case we prove that the self-attention matrix converges to a low-rank Boolean matrix. The combination of these results mathematically confirms the empirical observation made by Vaswani et al. [VSP'17] that leaders appear in a sequence of tokens when processed by Transformers.

Turnpike in optimal control of PDEs, ResNets, and beyond

Feb 08, 2022

Abstract:The turnpike property in contemporary macroeconomics asserts that if an economic planner seeks to move an economy from one level of capital to another, then the most efficient path, as long as the planner has enough time, is to rapidly move stock to a level close to the optimal stationary or constant path, then allow for capital to develop along that path until the desired term is nearly reached, at which point the stock ought to be moved to the final target. Motivated in part by its nature as a resource allocation strategy, over the past decade, the turnpike property has also been shown to hold for several classes of partial differential equations arising in mechanics. When formalized mathematically, the turnpike theory corroborates the insights from economics: for an optimal control problem set in a finite-time horizon, optimal controls and corresponding states are close (often exponentially), during most of the time, except near the initial and final time, to the optimal control and corresponding state for the associated stationary optimal control problem. In particular, the former are mostly constant over time. This fact provides a rigorous meaning to the asymptotic simplification that some optimal control problems appear to enjoy over long time intervals, allowing the consideration of the corresponding stationary problem for computing and applications. We review a slice of the theory developed over the past decade (the controllability of the underlying system is an important ingredient, and can even be used to devise simple turnpike-like strategies which are nearly optimal), and present several novel applications, including, among many others, the characterization of Hamilton-Jacobi-Bellman asymptotics, and stability estimates in deep learning via residual neural networks.

Sparse approximation in learning via neural ODEs

Feb 26, 2021

Abstract:We consider the continuous-time, neural ordinary differential equation (neural ODE) perspective of deep supervised learning, and study the impact of the final time horizon $T$ in training. We focus on a cost consisting of an integral of the empirical risk over the time interval, and $L^1$-parameter regularization. Under homogeneity assumptions on the dynamics (typical for ReLU activations), we prove that any global minimizer is sparse, in the sense that there exists a positive stopping time $T^*$ beyond which the optimal parameters vanish. Moreover, under appropriate interpolation assumptions on the neural ODE, we provide quantitative estimates of the stopping time $T^\ast$, and of the training error of the trajectories at the stopping time. The latter stipulates a quantitative approximation property of neural ODE flows with sparse parameters. In practical terms, a shorter time-horizon in the training problem can be interpreted as considering a shallower residual neural network (ResNet), and since the optimal parameters are concentrated over a shorter time horizon, such a consideration may lower the computational cost of training without discarding relevant information.

Large-time asymptotics in deep learning

Aug 06, 2020

Abstract:It is by now well-known that practical deep supervised learning may roughly be cast as an optimal control problem for a specific discrete-time, nonlinear dynamical system called an artificial neural network. In this work, we consider the continuous-time formulation of the deep supervised learning problem, and study the latter's behavior when the final time horizon increases, a fact that can be interpreted as increasing the number of layers in the neural network setting. When considering the classical regularized empirical risk minimization problem, we show that, in long time, the optimal states converge to zero training error, namely approach the zero training error regime, whilst the optimal control parameters approach, on an appropriate scale, minimal norm parameters with corresponding states precisely in the zero training error regime. This result provides an alternative theoretical underpinning to the notion that neural networks learn best in the overparametrized regime, when seen from the large layer perspective. We also propose a learning problem consisting of minimizing a cost with a state tracking term, and establish the well-known turnpike property, which indicates that the solutions of the learning problem in long time intervals consist of three pieces, the first and the last of which being transient short-time arcs, and the middle piece being a long-time arc staying exponentially close to the optimal solution of an associated static learning problem. This property in fact stipulates a quantitative estimate for the number of layers required to reach the zero training error regime. Both of the aforementioned asymptotic regimes are addressed in the context of continuous-time and continuous space-time neural networks, the latter taking the form of nonlinear, integro-differential equations, hence covering residual neural networks with both fixed and possibly variable depths.
