Bolun Xu

Conformal Uncertainty Quantification of Electricity Price Predictions for Risk-Averse Storage Arbitrage

Dec 10, 2024

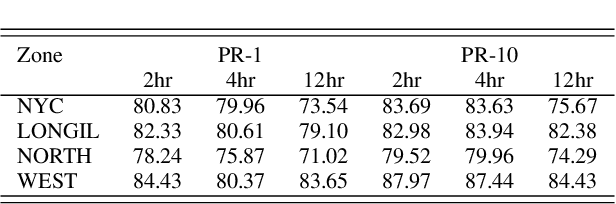

Abstract: This paper proposes a risk-averse approach to energy storage price arbitrage, leveraging conformal uncertainty quantification for electricity price predictions. The method addresses the challenges posed by the inherent volatility and uncertainty of real-time electricity prices, which create substantial risks of financial loss for energy storage participants that rely on future price forecasts to plan their operations. The framework comprises a two-layer prediction model that constructs confidence intervals for real-time prices with high coverage. The framework is distribution-free and can work with any underlying point prediction model. We evaluate the effectiveness of the quantification through a storage price arbitrage application, managing the risk of participating in the real-time market. We design a risk-averse policy for profit maximization of energy storage arbitrage that finds the safest storage schedule with minimal losses. Using historical data from New York State and synthetic price predictions, our evaluations demonstrate that this framework can achieve good profit margins with less than 35% purchases.
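As a rough illustration of the distribution-free idea, the sketch below applies standard split conformal prediction to the residuals of an arbitrary point forecaster. It is not the paper's two-layer model; the function names and the calibration numbers are hypothetical and chosen only for the example.

```python
import math

def conformal_quantile(residuals, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of absolute calibration residuals."""
    n = len(residuals)
    if n == 0:
        raise ValueError("need at least one calibration residual")
    scores = sorted(abs(r) for r in residuals)
    # Rank ceil((n + 1) * (1 - alpha)) is the usual split-conformal correction.
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        return math.inf  # too few calibration points for this coverage level
    return scores[rank - 1]

def conformal_interval(point_forecast, q):
    """Symmetric interval around a point forecast with half-width q."""
    return point_forecast - q, point_forecast + q

# Hypothetical calibration set in $/MWh: realized prices and point forecasts.
actual = [22.0, 35.0, 18.0, 60.0, 27.0, 31.0, 25.0, 40.0, 29.0]
forecast = [20.0, 30.0, 21.0, 45.0, 28.0, 30.0, 27.0, 36.0, 30.0]
residuals = [a - f for a, f in zip(actual, forecast)]

q = conformal_quantile(residuals, alpha=0.2)   # target coverage of 80%
low, high = conformal_interval(33.0, q)        # interval for a new forecast of 33 $/MWh
print(q, low, high)
```

Because the interval is built only from forecast residuals, the point forecaster can be swapped without changing this step. A risk-averse scheduler could then, for instance, plan purchases against the upper bound and sales against the lower bound.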

Energy Storage Arbitrage in Two-settlement Markets: A Transformer-Based Approach

Apr 26, 2024

Abstract: This paper presents an integrated model for bidding energy storage in day-ahead and real-time markets to maximize profits. We show that in integrated two-stage bidding, the real-time bids are independent of day-ahead settlements, while the day-ahead bids should be based on predicted real-time prices. We use a transformer-based model for real-time price prediction, which captures the complex dynamical patterns of real-time prices, and use its output to design day-ahead bids. For real-time bidding, we use a hybrid model that combines long short-term memory with dynamic programming. We train and test our model with historical data from New York State. Our results show that the integrated system achieves almost a 20% increase in profit compared to bidding only in real-time markets, while also reducing risk as measured by the number of days with negative profits.

Equitable Time-Varying Pricing Tariff Design: A Joint Learning and Optimization Approach

Jul 26, 2023

Abstract: Time-varying pricing tariffs incentivize consumers to shift their electricity demand and reduce costs, but may increase the energy burden for consumers with limited response capability. The utility must thus balance affordability and response incentives when designing these tariffs by considering consumers' response expectations. This paper proposes a joint learning-based identification and optimization method to design equitable time-varying tariffs. Our proposed method encodes historical prices and demand response data into a recurrent neural network (RNN) to capture high-dimensional and non-linear consumer price response behaviors. We then embed the RNN into the tariff design optimization, formulating a non-linear optimization problem with a quadratic objective. We propose a gradient-based solution method that achieves fast and scalable computation. Simulation using real-world consumer data shows that our equitable tariffs protect low-income consumers from price surges while effectively motivating consumers to reduce peak demand. The method also ensures revenue recovery for the utility company and achieves robust performance against demand response uncertainties and prediction errors.

Transferable Energy Storage Bidder

Jan 02, 2023

Abstract: Energy storage resources must consider both price uncertainties and their physical operating characteristics when participating in wholesale electricity markets. This is a challenging problem because electricity prices are highly volatile and energy storage has efficiency losses as well as power and energy constraints. This paper presents a novel, versatile, and transferable approach that combines model-based optimization with a convolutional long short-term memory (ConvLSTM) network, allowing energy storage to respond to or bid into wholesale electricity markets. We apply transfer learning to the ConvLSTM network to quickly adapt the trained bidding model to new market environments. We test our proposed approach using historical prices from New York State, showing that it achieves state-of-the-art results, with a profit ratio between 70% and nearly 90% of the perfect-foresight case, in both price response and wholesale market bidding settings and across various energy storage durations. We also test a transfer learning approach by pre-training the bidding model on New York data and applying it to arbitrage in Queensland, Australia. The results show that transfer learning achieves exceptional arbitrage profitability with as little as three days of local training data, demonstrating a significant advantage over training from scratch when very little data is available.

Energy Storage Price Arbitrage via Opportunity Value Function Prediction

Nov 20, 2022

Abstract: This paper proposes a novel energy storage price arbitrage algorithm combining supervised learning with dynamic programming. The proposed approach uses a neural network to directly predict the opportunity cost at different energy storage state-of-charge levels, and then inputs the predicted opportunity cost into a model-based arbitrage control algorithm to make optimal decisions. We generate the historical optimal opportunity value function using price data and a dynamic programming algorithm, then use it as the ground truth, with historical prices as predictors, to train the opportunity value function prediction model. Our method achieves 65% to 90% of the perfect-foresight profit in case studies using different energy storage models and price data from New York State, which significantly outperforms existing model-based and learning-based methods. While maintaining high profitability, the algorithm is also lightweight and can be trained and implemented at minimal computational cost. Our results also show that the learned prediction model has excellent transferability: a prediction model trained on price data from one region also provides good arbitrage results when tested on other regions.
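To make the "historical optimal opportunity value function" concrete, here is a minimal backward dynamic programming sketch over a discretized state of charge. It is a simplified illustration, not the authors' algorithm: the discretization, the one-level-per-hour power limit, and the names `arbitrage_value_function`, `levels`, `step_mwh`, and `efficiency` are assumptions made for the example.

```python
def arbitrage_value_function(prices, levels, step_mwh, efficiency):
    """Backward DP: V[t][s] is the best profit obtainable from hour t onward
    when the storage starts hour t at discrete state-of-charge level s."""
    horizon = len(prices)
    V = [[0.0] * levels for _ in range(horizon + 1)]  # terminal value is zero
    policy = [[0] * levels for _ in range(horizon)]   # +1 charge, -1 discharge, 0 hold
    for t in range(horizon - 1, -1, -1):
        for s in range(levels):
            best_value, best_move = V[t + 1][s], 0    # hold
            if s + 1 < levels:
                # Charging one level buys step_mwh / efficiency from the grid.
                value = -prices[t] * step_mwh / efficiency + V[t + 1][s + 1]
                if value > best_value:
                    best_value, best_move = value, 1
            if s - 1 >= 0:
                # Discharging one level sells step_mwh * efficiency to the grid.
                value = prices[t] * step_mwh * efficiency + V[t + 1][s - 1]
                if value > best_value:
                    best_value, best_move = value, -1
            V[t][s], policy[t][s] = best_value, best_move
    return V, policy

# Hypothetical two-hour example: a cheap hour followed by an expensive one.
V, policy = arbitrage_value_function([20.0, 50.0], levels=2, step_mwh=1.0, efficiency=1.0)
# Marginal (opportunity) value of one stored MWh at the start of hour 0.
opportunity_value = (V[0][1] - V[0][0]) / 1.0
print(V[0], policy[0], opportunity_value)
```

In a supervised setup like the one the abstract describes, differences such as `V[t][s + 1] - V[t][s]` computed on historical prices would serve as training targets, with past prices as the input features.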

End-to-End Demand Response Model Identification and Baseline Estimation with Deep Learning

Sep 02, 2021

Abstract: This paper proposes a novel end-to-end deep learning framework that simultaneously identifies demand baselines and the incentive-based agent demand response model from net demand measurements and incentive signals. The learning framework consists of two modules: 1) the decision-making process of a demand response participant is represented as a differentiable optimization layer, which takes the incentive signal as input and predicts the user's response; 2) the baseline demand forecast is represented as a standard neural network model, which takes relevant features and predicts the user's baseline demand. These two intermediate predictions are combined to form the net demand forecast. We then propose a gradient-descent approach that backpropagates the net demand forecast errors to jointly update the weights of the agent model and of the baseline demand forecast. We demonstrate the effectiveness of our approach through computational experiments with synthetic demand response traces and a large-scale real-world demand response dataset. Our results show that the approach accurately identifies the demand response model, even without any prior knowledge of the baseline demand.

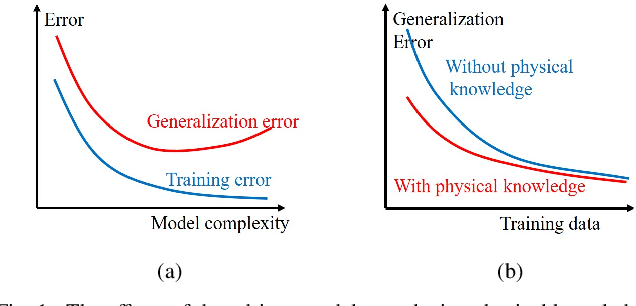

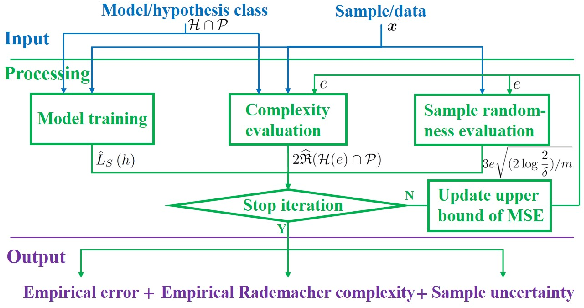

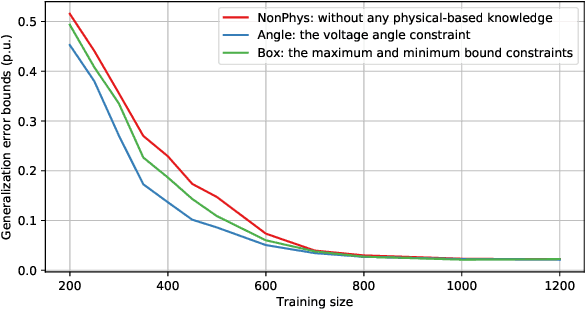

Bounding Data-driven Model Errors in Power Grid Analysis

Oct 30, 2019

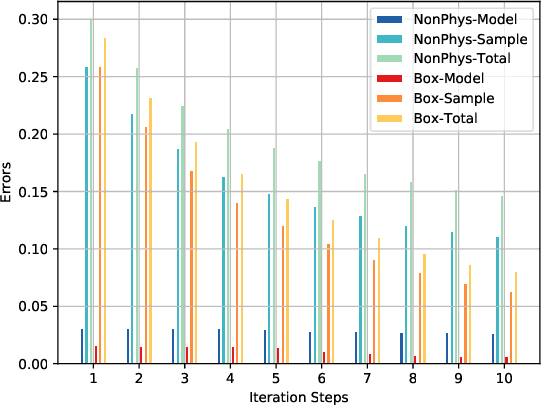

Abstract: Data-driven models analyze power grids under incomplete physical information, and their accuracy has mostly been validated empirically using particular training and testing datasets. This paper explores error bounds for data-driven models under all possible training and testing scenarios, and proposes an evaluation implementation based on Rademacher complexity theory. We answer key questions for data-driven models: how much training data is required to guarantee a certain error bound, and how partial physical knowledge can be used to reduce the required amount of data. Our results are crucial for the evaluation and application of data-driven models in power grid analysis. We demonstrate the proposed method by finding generalization error bounds for two applications, namely branch flow linearization and external network equivalent, under different degrees of physical knowledge. The results show how the bounds decrease with additional power grid physical knowledge or more training data.
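For orientation, the textbook form of a Rademacher-complexity generalization bound is given below. It is the standard result for a loss bounded in $[0, 1]$, not necessarily the exact bound derived in the paper; the symbols $\mathcal{F}$ (model class), $\ell$ (loss), $n$ (number of training samples), and $\delta$ (failure probability) are notation assumed here. With probability at least $1 - \delta$ over the training sample, for every $f \in \mathcal{F}$:

$$
R(f) \;\le\; \hat{R}_n(f) \;+\; 2\,\mathfrak{R}_n(\ell \circ \mathcal{F}) \;+\; \sqrt{\frac{\ln(1/\delta)}{2n}}
$$

Here $R(f)$ is the expected loss, $\hat{R}_n(f)$ is the empirical loss on the $n$ training samples, and $\mathfrak{R}_n(\ell \circ \mathcal{F})$ is the Rademacher complexity of the loss class. Both effects named in the abstract are visible in this form: more data increases $n$, and physical knowledge that restricts $\mathcal{F}$ can reduce the complexity term.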
