Bingheng Wang

Learning Agile Gate Traversal via Analytical Optimal Policy Gradient

Aug 29, 2025

Abstract:Traversing narrow gates presents a significant challenge and has become a standard benchmark for evaluating agile and precise quadrotor flight. Traditional modularized autonomous flight stacks require extensive design and parameter tuning, while end-to-end reinforcement learning (RL) methods often suffer from low sample efficiency and limited interpretability. In this work, we present a novel hybrid framework that adaptively fine-tunes model predictive control (MPC) parameters online using outputs from a neural network (NN) trained offline. The NN jointly predicts a reference pose and cost-function weights, conditioned on the coordinates of the gate corners and the current drone state. To achieve efficient training, we derive analytical policy gradients not only for the MPC module but also for an optimization-based gate traversal detection module. Furthermore, we introduce a new formulation of the attitude tracking error that admits a simplified representation, facilitating effective learning with bounded gradients. Hardware experiments demonstrate that our method enables fast and accurate quadrotor traversal through narrow gates in confined environments. It achieves several orders of magnitude improvement in sample efficiency compared to naive end-to-end RL approaches.

NeRC: Neural Ranging Correction through Differentiable Moving Horizon Location Estimation

Aug 20, 2025

Abstract:GNSS localization using everyday mobile devices is challenging in urban environments, as ranging errors caused by the complex propagation of satellite signals and low-quality onboard GNSS hardware are blamed for undermining positioning accuracy. Researchers have pinned their hopes on data-driven methods to regress such ranging errors from raw measurements. However, the grueling annotation of ranging errors impedes their pace. This paper presents a robust end-to-end Neural Ranging Correction (NeRC) framework, where localization-related metrics serve as the task objective for training the neural modules. Instead of seeking impractical ranging error labels, we train the neural network using ground-truth locations that are relatively easy to obtain. This functionality is supported by differentiable moving horizon location estimation (MHE) that handles a horizon of measurements for positioning and backpropagates the gradients for training. Even better, as a blessing of end-to-end learning, we propose a new training paradigm using Euclidean Distance Field (EDF) cost maps, which alleviates the demands on labeled locations. We evaluate the proposed NeRC on public benchmarks and our collected datasets, demonstrating its distinguished improvement in positioning accuracy. We also deploy NeRC on the edge to verify its real-time performance for mobile devices.

Auto-Multilift: Distributed Learning and Control for Cooperative Load Transportation With Quadrotors

Jun 07, 2024

Abstract:Designing motion control and planning algorithms for multilift systems remains challenging due to the complexities of dynamics, collision avoidance, actuator limits, and scalability. Existing methods that use optimization and distributed techniques effectively address these constraints and scalability issues. However, they often require substantial manual tuning, leading to suboptimal performance. This paper proposes Auto-Multilift, a novel framework that automates the tuning of model predictive controllers (MPCs) for multilift systems. We model the MPC cost functions with deep neural networks (DNNs), enabling fast online adaptation to various scenarios. We develop a distributed policy gradient algorithm to train these DNNs efficiently in a closed-loop manner. Central to our algorithm is distributed sensitivity propagation, which parallelizes gradient computation across quadrotors, focusing on actual system state sensitivities relative to key MPC parameters. We also provide theoretical guarantees for the convergence of this algorithm. Extensive simulations show rapid convergence and favorable scalability to a large number of quadrotors. Our method outperforms a state-of-the-art open-loop MPC tuning approach by effectively learning adaptive MPCs from trajectory tracking errors and handling the unique dynamics couplings within the multilift system. Additionally, our framework can learn an adaptive reference for reconfiguring the system when traversing through multiple narrow slots.

Trust-Region Neural Moving Horizon Estimation for Robots

Sep 19, 2023

Abstract:Accurate disturbance estimation is essential for safe robot operations. The recently proposed neural moving horizon estimation (NeuroMHE), which uses a portable neural network to model the MHE's weightings, has shown promise in further pushing the accuracy and efficiency boundary. Currently, NeuroMHE is trained through gradient descent, with its gradient computed recursively using a Kalman filter. This paper proposes a trust-region policy optimization method for training NeuroMHE. We achieve this by providing the second-order derivatives of MHE, referred to as the MHE Hessian. Remarkably, we show that much of the computation already used to obtain the gradient, especially the Kalman filter, can be efficiently reused to compute the MHE Hessian. This offers linear computational complexity relative to the MHE horizon. As a case study, we evaluate the proposed trust-region NeuroMHE on real quadrotor flight data for disturbance estimation. Our approach demonstrates highly efficient training in under 5 min using only 100 data points. It outperforms a state-of-the-art neural estimator by up to 68.1% in force estimation accuracy, utilizing only 1.4% of its network parameters. Furthermore, our method showcases enhanced robustness to network initialization compared to the gradient descent counterpart.

Learning Agile Flight Maneuvers: Deep SE(3) Motion Planning and Control for Quadrotors

Sep 22, 2022

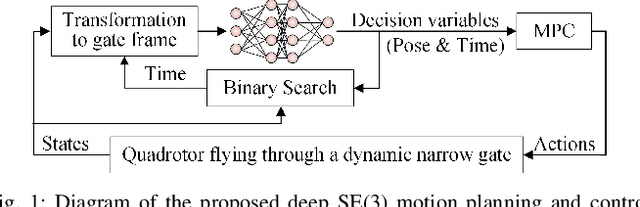

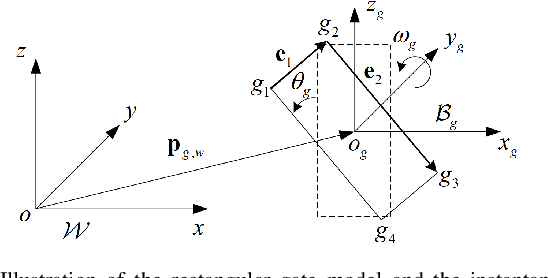

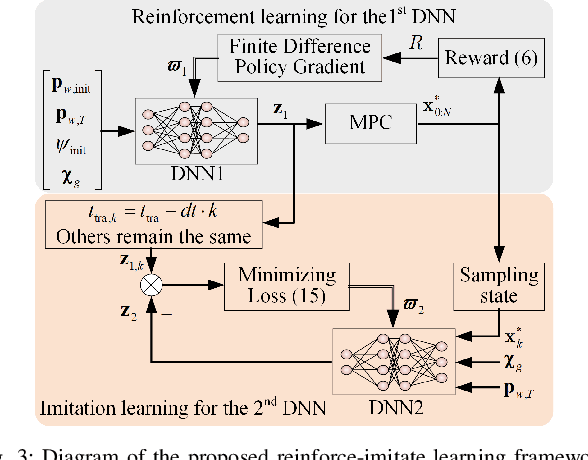

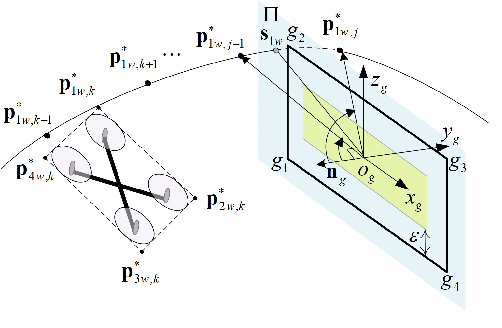

Abstract:Agile flights of autonomous quadrotors in cluttered environments require constrained motion planning and control subject to translational and rotational dynamics. Traditional model-based methods typically demand complicated design and heavy computation. In this paper, we develop a novel deep reinforcement learning-based method that tackles the challenging task of flying through a dynamic narrow gate. We design a model predictive controller with its adaptive tracking references parameterized by a deep neural network (DNN). These references include the traversal time and the quadrotor SE(3) traversal pose that encourage the robot to fly through the gate with maximum safety margins from various initial conditions. To cope with the difficulty of training in highly dynamic environments, we develop a reinforce-imitate learning framework that trains the DNN efficiently and generalizes well to diverse settings. Furthermore, we propose a binary search algorithm that allows online adaptation of the SE(3) references to dynamic gates in real time. Finally, through extensive high-fidelity simulations, we show that our approach is robust to the gate's velocity uncertainties and adaptive to different gate trajectories and orientations.

Neural Moving Horizon Estimation for Robust Flight Control

Jul 07, 2022

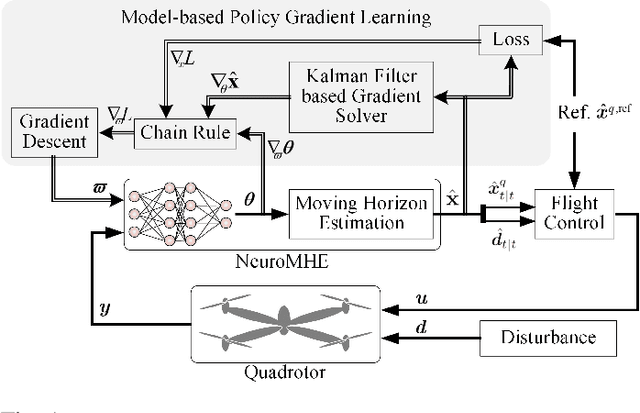

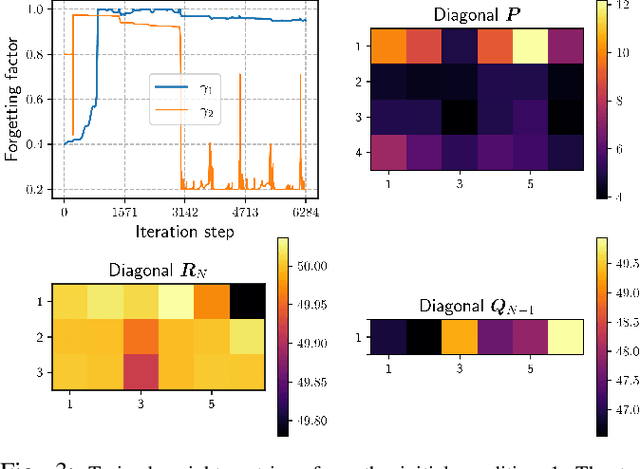

Abstract:Estimating and reacting to external disturbances is crucial for robust flight control of quadrotors. Existing estimators typically require significant tuning for a specific flight scenario or training with extensive ground-truth disturbance data to achieve satisfactory performance. In this paper, we propose a neural moving horizon estimator (NeuroMHE) that can automatically tune the MHE parameters modeled by a neural network and adapt to different flight scenarios. We achieve this by deriving the analytical gradients of the MHE estimates with respect to the tuning parameters, which enable a seamless embedding of an MHE as a learnable layer into the neural network for highly effective learning. Most interestingly, we show that the gradients can be obtained efficiently from a Kalman filter in a recursive form. Moreover, we develop a model-based policy gradient algorithm to train NeuroMHE directly from the trajectory tracking error without the need for the ground-truth disturbance data. The effectiveness of NeuroMHE is verified extensively via both simulations and physical experiments on a quadrotor in various challenging flights. Notably, NeuroMHE outperforms the state-of-the-art estimator with force estimation error reductions of up to 49.4% while using only 2.5% of the neural network parameters. The proposed method is general and can be applied to robust adaptive control for other robotic systems.

Differentiable Moving Horizon Estimation for Robust Flight Control

Aug 29, 2021

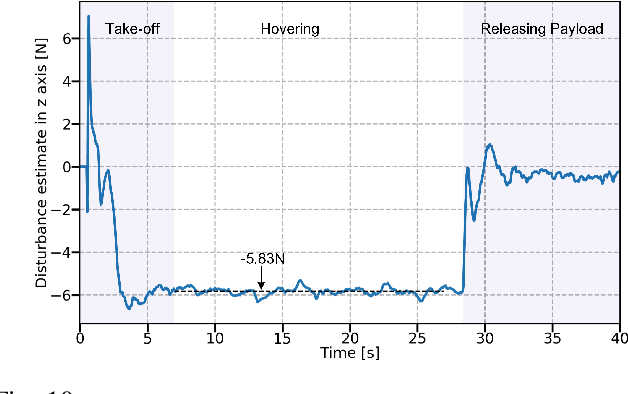

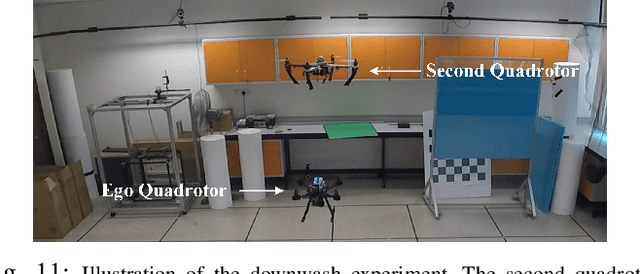

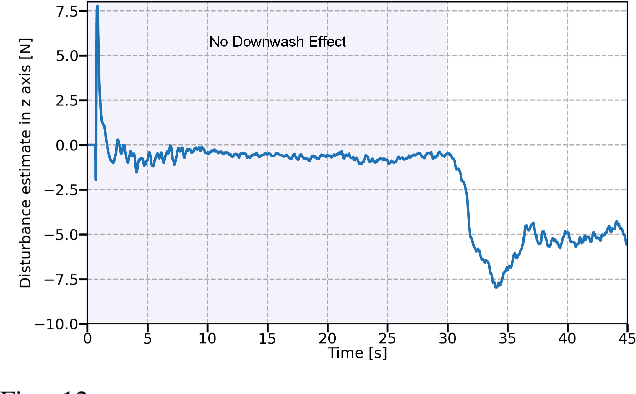

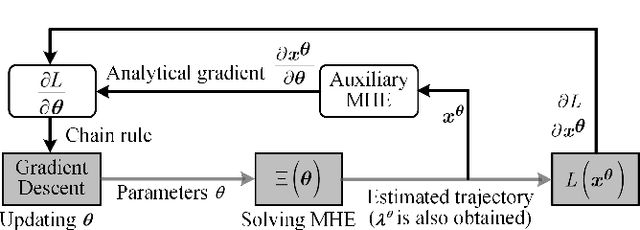

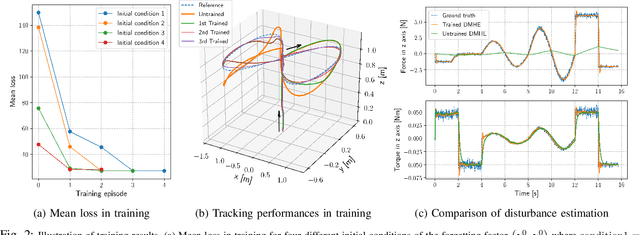

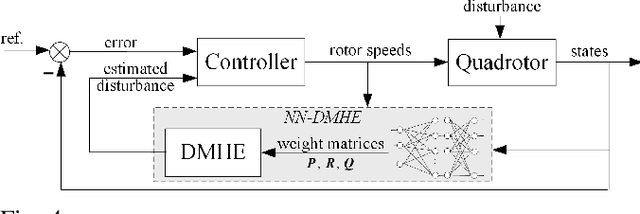

Abstract:Estimating and reacting to external disturbances is of fundamental importance for robust control of quadrotors. Existing estimators typically require significant tuning or training with a large amount of data, including the ground truth, to achieve satisfactory performance. This paper proposes a data-efficient differentiable moving horizon estimation (DMHE) algorithm that can automatically tune the MHE parameters online and also adapt to different scenarios. We achieve this by deriving the analytical gradient of the estimated trajectory from MHE with respect to the tuning parameters, enabling end-to-end learning for auto-tuning. Most interestingly, we show that the gradient can be calculated efficiently from a Kalman filter in a recursive form. Moreover, we develop a model-based policy gradient algorithm to learn the parameters directly from the trajectory tracking errors without the need for the ground truth. The proposed DMHE can be further embedded as a layer with other neural networks for joint optimization. Finally, we demonstrate the effectiveness of the proposed method via both simulation and experiments on quadrotors, where challenging scenarios such as sudden payload change and flying in downwash are examined.

Underactuated Motion Planning and Control for Jumping with Wheeled-Bipedal Robots

Dec 11, 2020

Abstract:This paper studies jumping for wheeled-bipedal robots, a motion that takes full advantage of the benefits from the hybrid wheeled and legged design features. A comprehensive hierarchical scheme for motion planning and control of jumping with wheeled-bipedal robots is developed. Underactuation of the wheeled-bipedal dynamics is the main difficulty to be addressed, especially in the planning problem. To tackle this issue, a novel wheeled-spring-loaded inverted pendulum (W-SLIP) model is proposed to characterize the essential dynamics of wheeled-bipedal robots during jumping. Relying on a differential-flatness-like property of the W-SLIP model, a tractable quadratic programming based solution is devised for planning jumping motions for wheeled-bipedal robots. Combined with a kinematic planning scheme accounting for the flight phase motion, a complete planning scheme for the W-SLIP model is developed. To enable accurate tracking of the planned trajectories, a linear quadratic regulator based wheel controller and a task-space whole-body controller for the other joints are blended through disturbance observers. The overall planning and control scheme is validated using V-REP simulations of a prototype wheeled-bipedal robot.
