Shupeng Lai

Neural Moving Horizon Estimation for Robust Flight Control

Jul 07, 2022

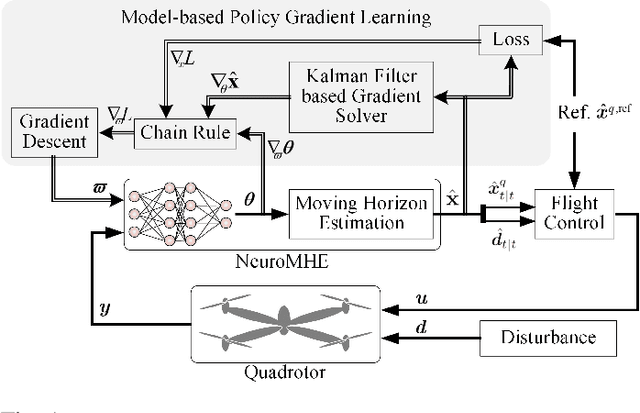

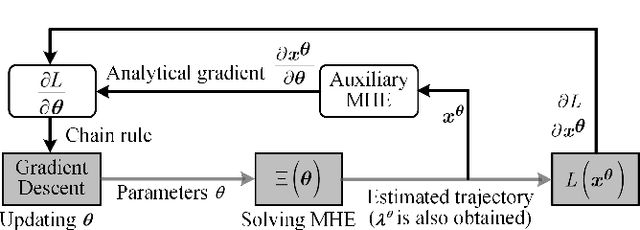

Abstract: Estimating and reacting to external disturbances is crucial for robust flight control of quadrotors. Existing estimators typically require significant tuning for a specific flight scenario or training with extensive ground-truth disturbance data to achieve satisfactory performance. In this paper, we propose a neural moving horizon estimator (NeuroMHE) that can automatically tune the MHE parameters modeled by a neural network and adapt to different flight scenarios. We achieve this by deriving the analytical gradients of the MHE estimates with respect to the tuning parameters, which enable a seamless embedding of an MHE as a learnable layer into the neural network for highly effective learning. Most interestingly, we show that the gradients can be obtained efficiently from a Kalman filter in a recursive form. Moreover, we develop a model-based policy gradient algorithm to train NeuroMHE directly from the trajectory tracking error without the need for ground-truth disturbance data. The effectiveness of NeuroMHE is verified extensively via both simulations and physical experiments on a quadrotor in various challenging flights. Notably, NeuroMHE outperforms the state-of-the-art estimator with force estimation error reductions of up to 49.4% while using only 2.5% of the neural network parameters. The proposed method is general and can be applied to robust adaptive control for other robotic systems.

Differentiable Moving Horizon Estimation for Robust Flight Control

Aug 29, 2021

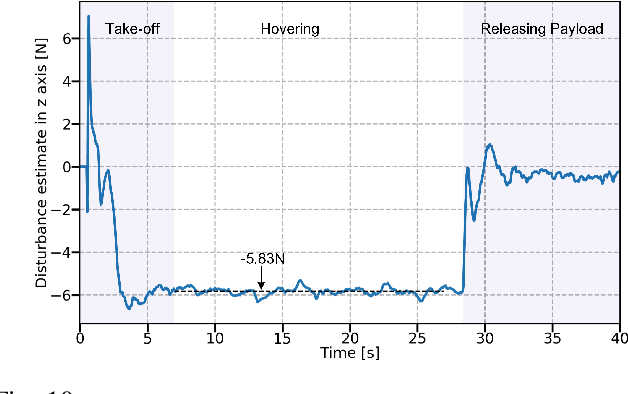

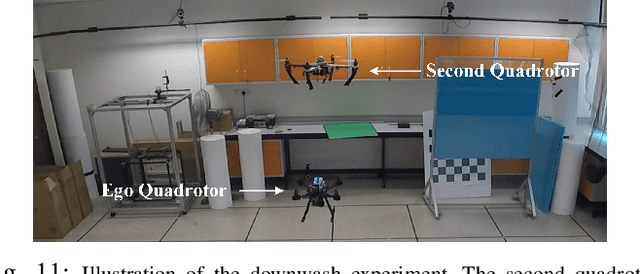

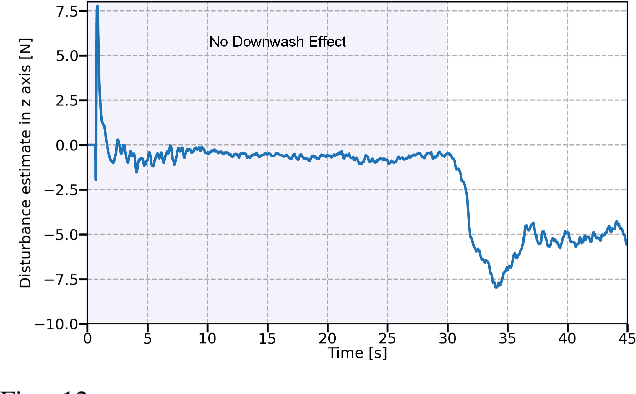

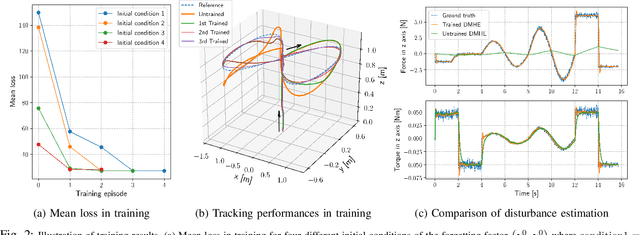

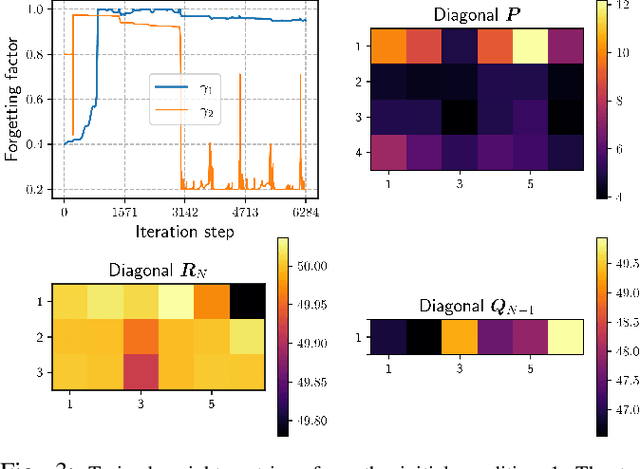

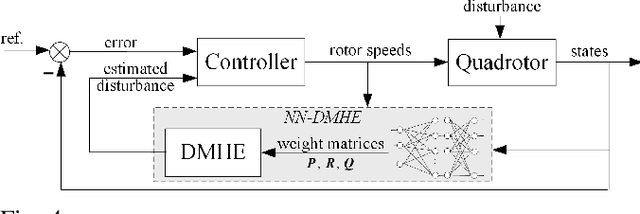

Abstract: Estimating and reacting to external disturbances is of fundamental importance for robust control of quadrotors. Existing estimators typically require significant tuning or training with a large amount of data, including the ground truth, to achieve satisfactory performance. This paper proposes a data-efficient differentiable moving horizon estimation (DMHE) algorithm that can automatically tune the MHE parameters online and also adapt to different scenarios. We achieve this by deriving the analytical gradient of the estimated trajectory from MHE with respect to the tuning parameters, enabling end-to-end learning for auto-tuning. Most interestingly, we show that the gradient can be calculated efficiently from a Kalman filter in a recursive form. Moreover, we develop a model-based policy gradient algorithm to learn the parameters directly from the trajectory tracking errors without the need for the ground truth. The proposed DMHE can be further embedded as a layer with other neural networks for joint optimization. Finally, we demonstrate the effectiveness of the proposed method via both simulation and experiments on quadrotors, where challenging scenarios such as sudden payload changes and flight in downwash are examined.
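To illustrate what "the analytical gradient of the estimated trajectory with respect to the tuning parameters" can mean, here is a minimal sketch on a toy problem. It is not the authors' implementation: it assumes a scalar linear model x_{k+1} = a x_k + w_k with measurement y_k = x_k + v_k, three scalar weights theta = (p, r, q) for the arrival, measurement, and process-noise terms, and it differentiates the weighted least-squares normal equations with a direct linear solve instead of the recursive Kalman-filter form described in the abstract. The function names `build_problem`, `mhe_estimate`, and `mhe_jacobian` are hypothetical.

```python
import numpy as np

def build_problem(y, x_bar, a):
    """Stack the MHE cost terms as residual rows b - A x (toy scalar model)."""
    n = len(y)
    A = np.zeros((2 * n, n))
    b = np.zeros(2 * n)
    A[0, 0], b[0] = 1.0, x_bar           # arrival cost: x_0 - x_bar
    for k in range(n):                   # measurement terms: y_k - x_k
        A[1 + k, k] = 1.0
        b[1 + k] = y[k]
    for k in range(n - 1):               # process terms: x_{k+1} - a x_k
        A[1 + n + k, k] = -a
        A[1 + n + k, k + 1] = 1.0
    return A, b

def weights(theta, n):
    """Diagonal of the weight matrix W for theta = (p, r, q)."""
    p, r, q = theta
    return np.concatenate(([p], np.full(n, r), np.full(n - 1, q)))

def mhe_estimate(theta, A, b, n):
    """Minimise sum_i w_i (b - A x)_i^2 via the normal equations H x = A^T W b."""
    w = weights(theta, n)
    H = A.T @ (w[:, None] * A)
    x = np.linalg.solve(H, A.T @ (w * b))
    return x, H

def mhe_jacobian(theta, A, b, n):
    """dx*/dtheta from implicit differentiation of H x = A^T W b:
    dx = H^{-1} A^T (dW/dtheta_i) (b - A x)."""
    x, H = mhe_estimate(theta, A, b, n)
    resid = b - A @ x
    # W is linear in theta, so dW/dtheta_i is W evaluated at a unit vector.
    masks = [weights(e, n) for e in np.eye(3)]
    cols = [np.linalg.solve(H, A.T @ (m * resid)) for m in masks]
    return np.stack(cols, axis=1)        # shape (n, 3)

# Usage on synthetic measurements (all values are illustrative).
rng = np.random.default_rng(0)
y = rng.normal(size=6)
A, b = build_problem(y, x_bar=0.3, a=0.9)
theta = np.array([2.0, 5.0, 1.5])
J = mhe_jacobian(theta, A, b, n=6)
```

With such a Jacobian in hand, a tracking-error loss could in principle be back-propagated through the estimator to the weights by the chain rule, which is the role the gradient plays in the auto-tuning scheme the abstract describes.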
