Shenning Zhang

Learning Agile Flight Maneuvers: Deep SE(3) Motion Planning and Control for Quadrotors

Sep 22, 2022

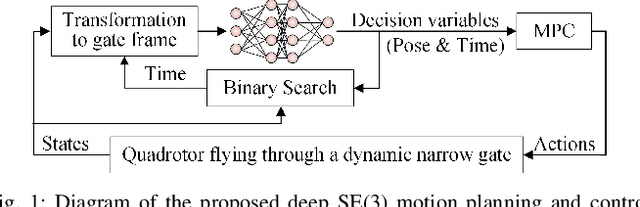

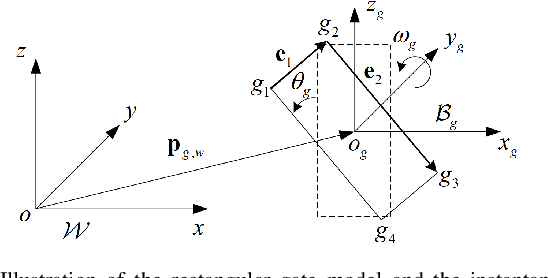

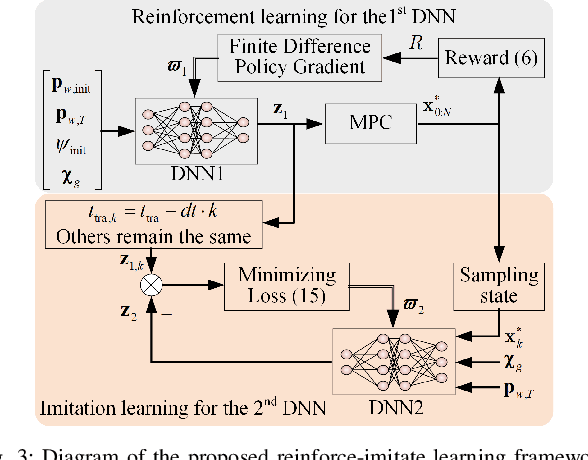

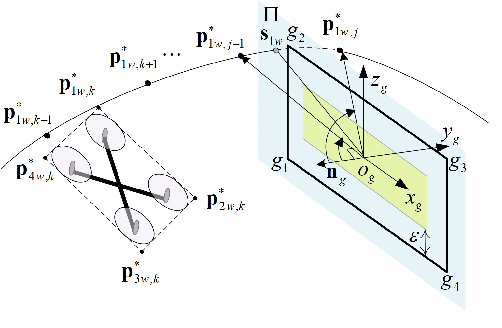

Abstract: Agile flight of autonomous quadrotors in cluttered environments requires constrained motion planning and control subject to translational and rotational dynamics. Traditional model-based methods typically demand complicated design and heavy computation. In this paper, we develop a novel deep reinforcement learning-based method that tackles the challenging task of flying through a dynamic narrow gate. We design a model predictive controller whose adaptive tracking references are parameterized by a deep neural network (DNN). These references include the traversal time and the quadrotor SE(3) traversal pose, which encourage the robot to fly through the gate with maximum safety margins from various initial conditions. To cope with the difficulty of training in highly dynamic environments, we develop a reinforce-imitate learning framework that efficiently trains a DNN that generalizes well to diverse settings. Furthermore, we propose a binary search algorithm that allows online adaptation of the SE(3) references to dynamic gates in real time. Finally, through extensive high-fidelity simulations, we show that our approach is robust to the gate's velocity uncertainties and adaptive to different gate trajectories and orientations.
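The abstract does not describe how the binary search works. As a rough illustration only, one plausible reading is that the traversal time must be consistent with the gate state at that time: the gate keeps moving while the quadrotor approaches, so the reference predicted for the gate's state at time t should itself be t. The sketch below bisects on that consistency residual. The function `bisect_traversal_time`, the stand-in `toy_predict_time`, and all numeric values are hypothetical and are not taken from the paper.

```python
from typing import Callable


def bisect_traversal_time(
    predict_time: Callable[[float], float],
    t_lo: float,
    t_hi: float,
    tol: float = 1e-3,
    max_iter: int = 50,
) -> float:
    """Find t in [t_lo, t_hi] such that predict_time(t) is close to t.

    predict_time(t) is a hypothetical stand-in for "the traversal time the
    network would output if the gate were in its predicted state at time t".
    Assumes the residual g(t) = predict_time(t) - t changes sign on the interval.
    """
    g_lo = predict_time(t_lo) - t_lo
    g_hi = predict_time(t_hi) - t_hi
    if g_lo * g_hi > 0:
        raise ValueError("residual does not change sign on [t_lo, t_hi]")

    for _ in range(max_iter):
        t_mid = 0.5 * (t_lo + t_hi)
        g_mid = predict_time(t_mid) - t_mid
        if abs(g_mid) < tol or (t_hi - t_lo) < tol:
            return t_mid
        if g_lo * g_mid <= 0:
            # Sign change lies in the lower half.
            t_hi = t_mid
        else:
            # Sign change lies in the upper half.
            t_lo, g_lo = t_mid, g_mid
    return 0.5 * (t_lo + t_hi)


def toy_predict_time(t: float) -> float:
    """Toy stand-in for the DNN (assumption, not the paper's network).

    The gate angle grows linearly with time, and the 'network' asks for a
    longer traversal time when the gate is tilted more.
    """
    gate_angle = 0.2 * t            # hypothetical gate rotation model [rad]
    return 1.0 + 0.5 * gate_angle   # hypothetical predicted traversal time [s]


t_star = bisect_traversal_time(toy_predict_time, t_lo=0.0, t_hi=3.0)
print(f"consistent traversal time: {t_star:.3f} s")
```

In this toy setup the residual is monotone, so bisection halves the bracket each step and reaches the tolerance in about a dozen evaluations, which is the property that makes such a search attractive for online use.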
