Bingchan Zhao

MPO: Boosting LLM Agents with Meta Plan Optimization

Mar 04, 2025

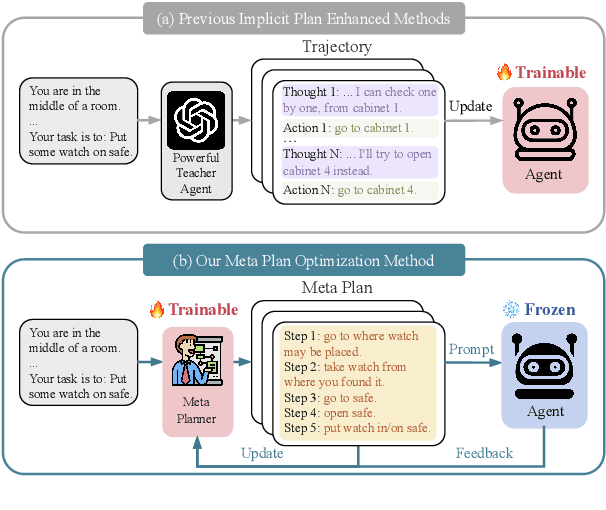

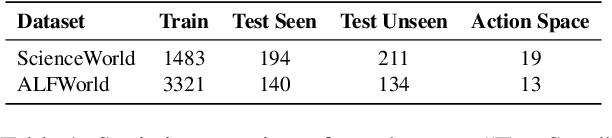

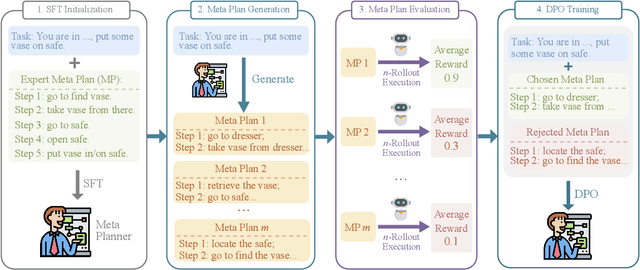

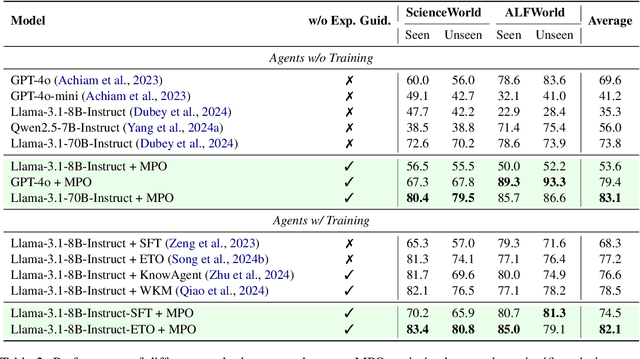

Abstract: Recent advancements in large language models (LLMs) have enabled LLM-based agents to successfully tackle interactive planning tasks. However, despite their successes, existing approaches often suffer from planning hallucinations and require retraining for each new agent. To address these challenges, we propose the Meta Plan Optimization (MPO) framework, which enhances agent planning capabilities by directly incorporating explicit guidance. Unlike previous methods that rely on complex knowledge, which either requires significant human effort or lacks quality assurance, MPO leverages high-level general guidance through meta plans to assist agent planning and enables continuous optimization of the meta plans based on feedback from the agent's task execution. Our experiments conducted on two representative tasks demonstrate that MPO significantly outperforms existing baselines. Moreover, our analysis indicates that MPO provides a plug-and-play solution that enhances both task completion efficiency and generalization capabilities in previously unseen scenarios.

DLGAN: Disentangling Label-Specific Fine-Grained Features for Image Manipulation

Nov 22, 2019

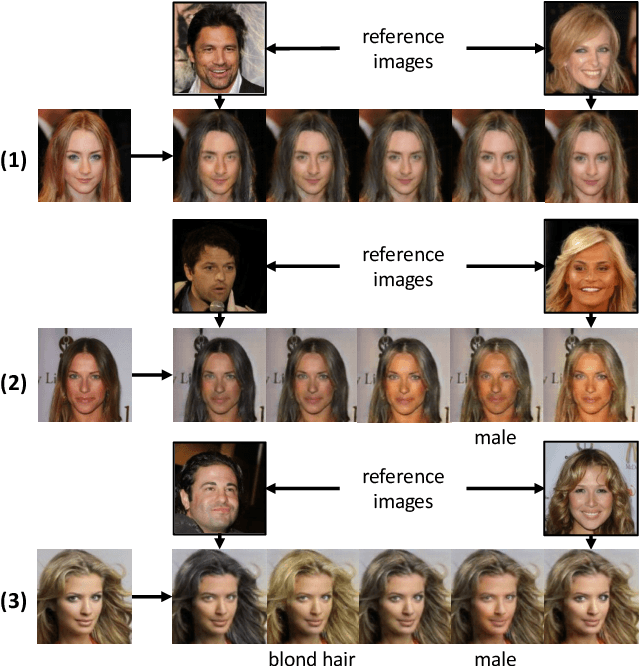

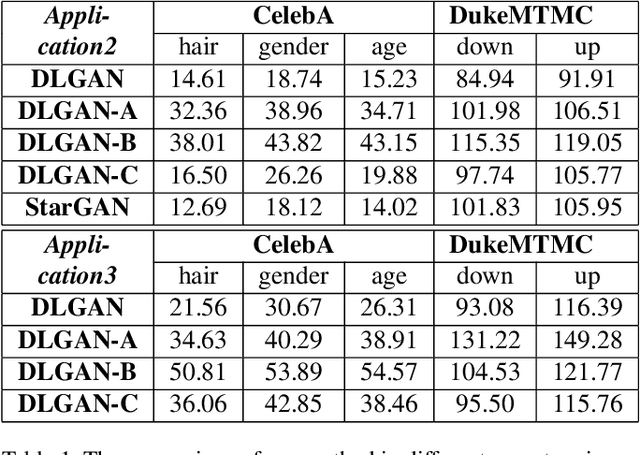

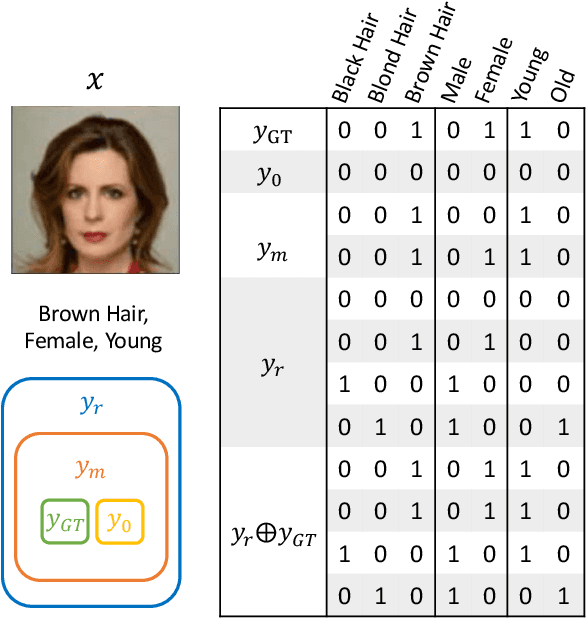

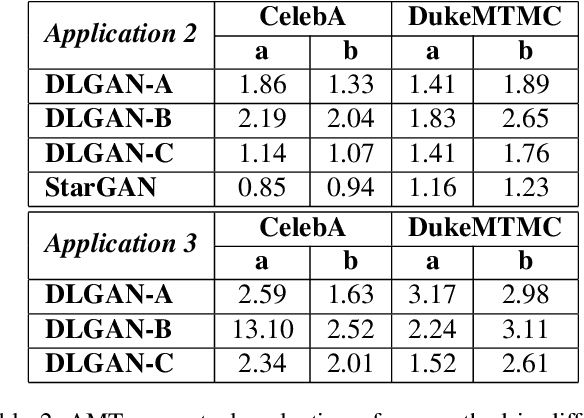

Abstract: Several recent studies have shown how disentangling images into content and feature spaces can provide controllable image translation/manipulation. In this paper, we propose a framework that uses discrete multi-labels to control which features are disentangled, i.e., disentangling label-specific fine-grained features for image manipulation (dubbed DLGAN). By mapping the discrete label-specific attribute features into a continuous prior distribution, we enable leveraging the advantages of both discrete labels and reference images to achieve image manipulation in a hybrid fashion. For example, given a face image dataset (e.g., CelebA) with multiple discrete fine-grained labels, we can learn to smoothly interpolate a face image between black hair and blond hair through reference images while immediately controlling the gender and age through discrete input labels. To the best of our knowledge, this is the first work to realize such a hybrid manipulation within a single model. Qualitative and quantitative experiments demonstrate the effectiveness of the proposed method.
