Bhalaji Nagarajan

Conjuring Positive Pairs for Efficient Unification of Representation Learning and Image Synthesis

Mar 20, 2025

Abstract:While representation learning and generative modeling seek to understand visual data, unifying both domains remains unexplored. Recent Unified Self-Supervised Learning (SSL) methods have started to bridge the gap between both paradigms. However, they rely solely on semantic token reconstruction, which requires an external tokenizer during training -- introducing a significant overhead. In this work, we introduce Sorcen, a novel unified SSL framework, incorporating a synergic Contrastive-Reconstruction objective. Our Contrastive objective, "Echo Contrast", leverages the generative capabilities of Sorcen, eliminating the need for additional image crops or augmentations during training. Sorcen "generates" an echo sample in the semantic token space, forming the contrastive positive pair. Sorcen operates exclusively on precomputed tokens, eliminating the need for an online token transformation during training, thereby significantly reducing computational overhead. Extensive experiments on ImageNet-1k demonstrate that Sorcen outperforms the previous Unified SSL SoTA by 0.4%, 1.48 FID, 1.76%, and 1.53% on linear probing, unconditional image generation, few-shot learning, and transfer learning, respectively, while being 60.8% more efficient. Additionally, Sorcen surpasses previous single-crop MIM SoTA in linear probing and achieves SoTA performance in unconditional image generation, highlighting significant improvements and breakthroughs in Unified SSL models.

Precision at Scale: Domain-Specific Datasets On-Demand

Jul 03, 2024

Abstract:In the realm of self-supervised learning (SSL), conventional wisdom has gravitated towards the utility of massive, general domain datasets for pretraining robust backbones. In this paper, we challenge this idea by exploring if it is possible to bridge the scale between general-domain datasets and (traditionally smaller) domain-specific datasets to reduce the current performance gap. More specifically, we propose Precision at Scale (PaS), a novel method for the autonomous creation of domain-specific datasets on-demand. The modularity of the PaS pipeline enables leveraging state-of-the-art foundational and generative models to create a collection of images of any given size belonging to any given domain with minimal human intervention. Extensive analysis in two complex domains proves the superiority of PaS datasets over existing traditional domain-specific datasets in terms of diversity, scale, and effectiveness in training visual transformers and convolutional neural networks. Most notably, we prove that automatically generated domain-specific datasets lead to better pretraining than large-scale supervised datasets such as ImageNet-1k and ImageNet-21k. Concretely, models trained on domain-specific datasets constructed by the PaS pipeline beat ImageNet-1k pretrained backbones by at least 12% in all the considered domains and classification tasks, and lead to better food domain performance than supervised ImageNet-21k pretraining while being 12 times smaller. Code repository: https://github.com/jesusmolrdv/Precision-at-Scale/

All4One: Symbiotic Neighbour Contrastive Learning via Self-Attention and Redundancy Reduction

Mar 16, 2023

Abstract:Nearest neighbour based methods have proved to be one of the most successful self-supervised learning (SSL) approaches due to their high generalization capabilities. However, their computational efficiency decreases when more than one neighbour is used. In this paper, we propose a novel contrastive SSL approach, which we call All4One, that reduces the distance between neighbour representations using "centroids" created through a self-attention mechanism. We use a Centroid Contrasting objective along with single Neighbour Contrasting and Feature Contrasting objectives. Centroids help in learning contextual information from multiple neighbours, whereas the neighbour contrast enables learning representations directly from the neighbours and the feature contrast allows learning representations unique to the features. This combination enables All4One to outperform popular instance discrimination approaches by more than 1% on linear classification evaluation for popular benchmark datasets and obtain state-of-the-art (SoTA) results. Finally, we show that All4One is robust towards embedding dimensionalities and augmentations, surpassing NNCLR and Barlow Twins by more than 5% on low dimensionality and weak augmentation settings. The source code will be made available soon.

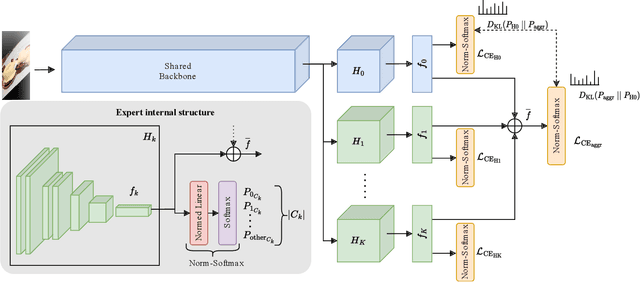

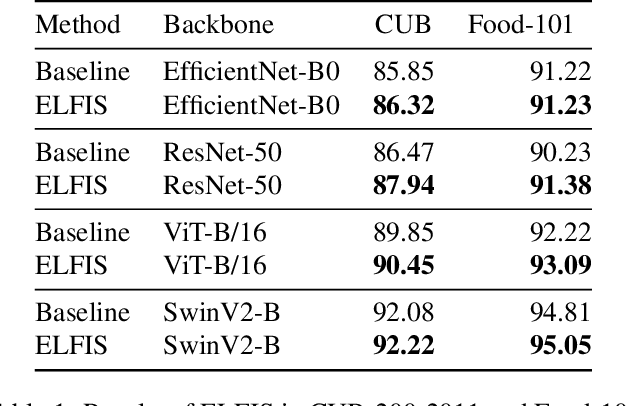

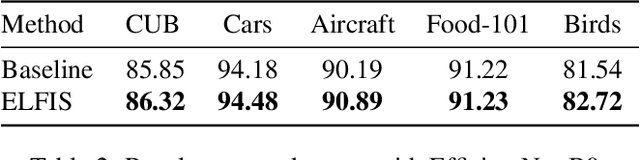

ELFIS: Expert Learning for Fine-grained Image Recognition Using Subsets

Mar 16, 2023

Abstract:Fine-Grained Visual Recognition (FGVR) tackles the problem of distinguishing highly similar categories. One of the main approaches to FGVR, namely subset learning, tries to leverage information from existing class taxonomies to improve the performance of deep neural networks. However, these methods rely on the existence of handcrafted hierarchies that are not necessarily optimal for the models. In this paper, we propose ELFIS, an expert learning framework for FGVR that clusters categories of the dataset into meta-categories using both dataset-inherent lexical and model-specific information. A set of neural network-based experts is trained focusing on the meta-categories and integrated into a multi-task framework. Extensive experimentation shows improvements in the SoTA FGVR benchmarks of up to +1.3% in accuracy using both CNNs and transformer-based networks. Overall, the obtained results show that ELFIS can be applied on top of any classification model, enabling SoTA results to be obtained. The source code will be made public soon.

Hyper-Spectral Imaging for Overlapping Plastic Flakes Segmentation

Mar 23, 2022

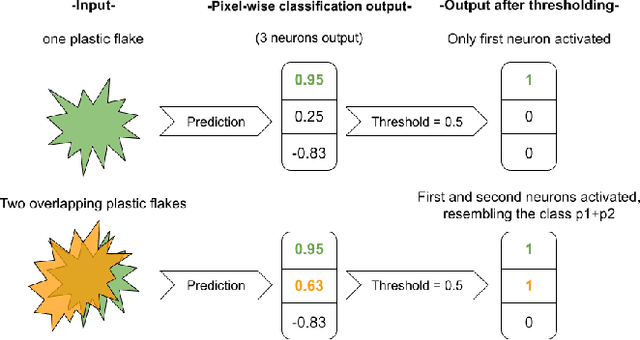

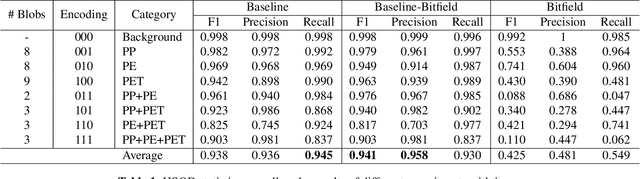

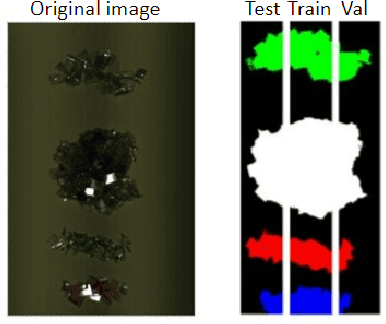

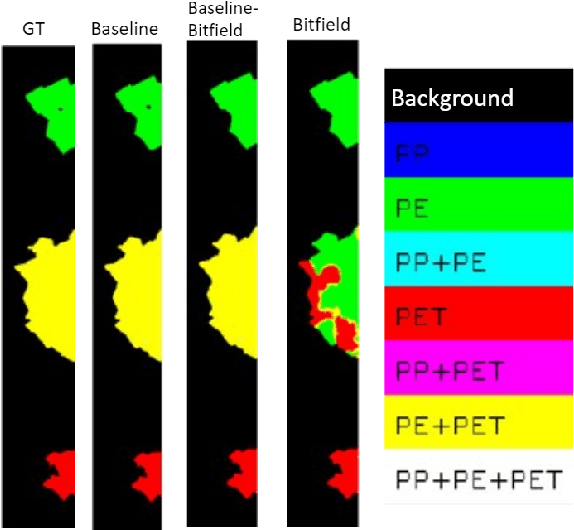

Abstract:Given the unique potential of hyper-spectral imaging for capturing the polymer characteristics of different materials, it is commonly used in sorting procedures. In a practical plastic sorting scenario, multiple plastic flakes may overlap and, depending on their characteristics, the overlap can be reflected in their spectral signature. In this work, we use hyper-spectral imaging for the segmentation of three types of plastic flakes and their possible overlapping combinations. We propose an intuitive and simple multi-label encoding approach, bitfield encoding, to account for the overlapping regions. With our experiments, we show that the bitfield encoding improves over the baseline single-label approach, and we further demonstrate its potential in predicting multiple labels for overlapping classes even when the model is only trained with non-overlapping classes.
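The abstract does not spell out the layout of the bitfield encoding, so the following is only a minimal sketch under stated assumptions: one bit per plastic type, with an overlap represented by several bits set at once. The class names `type_a`, `type_b`, `type_c` and the 0.5 decision threshold are hypothetical placeholders, not values taken from the paper.

```python
# Hypothetical names for the three plastic types; the abstract does not name them.
CLASSES = ("type_a", "type_b", "type_c")


def encode(present):
    """Return one bit per class: 1 if that plastic type is present at the pixel."""
    unknown = set(present) - set(CLASSES)
    if unknown:
        raise ValueError(f"unknown classes: {sorted(unknown)}")
    return [1 if name in present else 0 for name in CLASSES]


def decode(scores, threshold=0.5):
    """Turn per-bit scores into a list of predicted classes.

    Each bit is thresholded independently (the threshold is an assumption),
    so several classes can be predicted for the same pixel.
    """
    return [name for name, score in zip(CLASSES, scores) if score >= threshold]


# A single flake sets one bit; an overlap of two flakes sets two bits.
single = encode({"type_a"})
overlap = encode({"type_a", "type_c"})
predicted = decode([0.9, 0.2, 0.7])
```

Because each bit is decided on its own, a model under this assumed encoding can in principle output a combination such as `type_a` plus `type_c` without a dedicated class for that combination, which is consistent with the abstract's remark about predicting overlaps after training only on non-overlapping classes.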

Deep Net Features for Complex Emotion Recognition

Nov 02, 2018

Abstract:This paper investigates the influence of different acoustic features, audio-event-based features and automatic speech translation-based lexical features in the recognition of complex emotions such as curiosity. Pretrained networks, namely AudioSet Net, VoxCeleb Net and Deep Speech Net, trained extensively for different speech-based applications, are studied for this objective. Information from deep layers of these networks is considered as descriptors and encoded into feature vectors. Experimental results on the EmoReact dataset consisting of 8 complex emotions show the effectiveness, yielding a highest F1 score of 0.85 against the baseline of 0.69 in the literature.

Deep Learning as Feature Encoding for Emotion Recognition

Oct 30, 2018

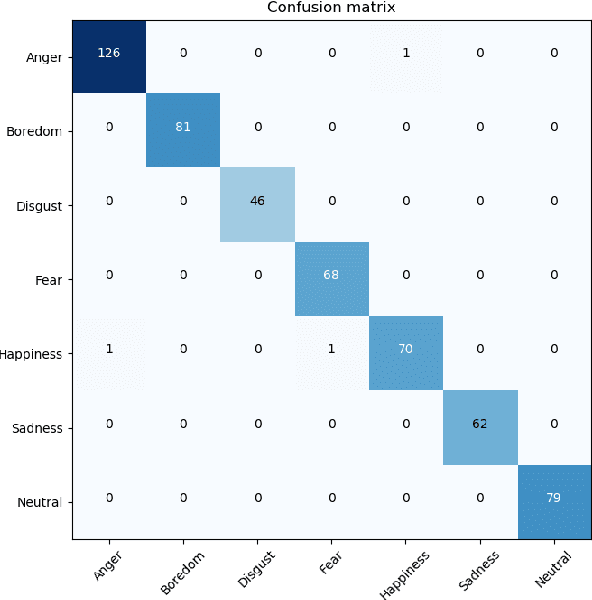

Abstract:Deep learning is popular as an end-to-end framework that both extracts the prominent features and performs the classification. In this paper, we extensively investigate deep networks as an alternative to feature encoding techniques for low-level descriptors for emotion recognition on the benchmark EmoDB dataset. The fusion performance of the encoded features obtained in this way with other available features is also investigated. The highest performance to date in the literature is observed.
