V Ramana Murthy Oruganti

Deep Net Features for Complex Emotion Recognition

Nov 02, 2018

Abstract: This paper investigates the influence of different acoustic features, audio-event-based features, and automatic speech translation based lexical features on the recognition of complex emotions such as curiosity. Pretrained networks, namely AudioSet Net, VoxCeleb Net, and Deep Speech Net, trained extensively for different speech-based applications, are studied for this objective. Information from the deep layers of these networks is treated as descriptors and encoded into feature vectors. Experimental results on the EmoReact dataset, consisting of 8 complex emotions, show the effectiveness of this approach, yielding a highest F1 score of 0.85 against the baseline of 0.69 in the literature.

Deep Learning as Feature Encoding for Emotion Recognition

Oct 30, 2018

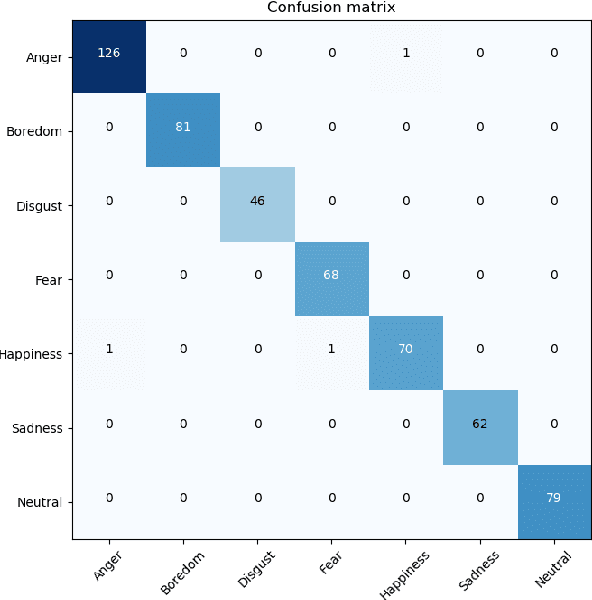

Abstract: Deep learning is popular as an end-to-end framework that both extracts the prominent features and performs the classification. In this paper, we extensively investigate deep networks as an alternative to feature-encoding techniques for low-level descriptors in emotion recognition on the benchmark EmoDB dataset. The fusion performance of the resulting encoded features with other available features is also investigated. The highest performance reported to date in the literature is observed.
