Bernd T. Meyer

Real-time multichannel deep speech enhancement in hearing aids: Comparing monaural and binaural processing in complex acoustic scenarios

May 03, 2024

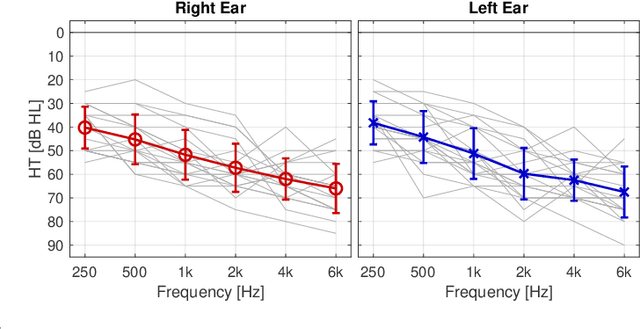

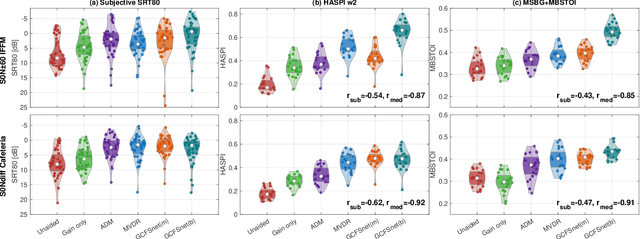

Abstract: Deep learning has the potential to enhance speech signals and increase their intelligibility for users of hearing aids. Deep models suited for real-world application should feature a low computational complexity and a low processing delay of only a few milliseconds. In this paper, we explore deep speech enhancement that matches these requirements and contrast monaural and binaural processing algorithms in two complex acoustic scenes. Both algorithms are evaluated with objective metrics and in experiments with hearing-impaired listeners performing a speech-in-noise test. Results are compared to two traditional enhancement strategies, i.e., adaptive differential microphone processing and binaural beamforming. While all algorithms perform similarly in diffuse noise, the binaural deep learning approach performs best in the presence of spatial interferers. A post-analysis attributes this advantage to improvements at low SNRs and to precise spatial filtering.

Binaural multichannel blind speaker separation with a causal low-latency and low-complexity approach

Dec 08, 2023

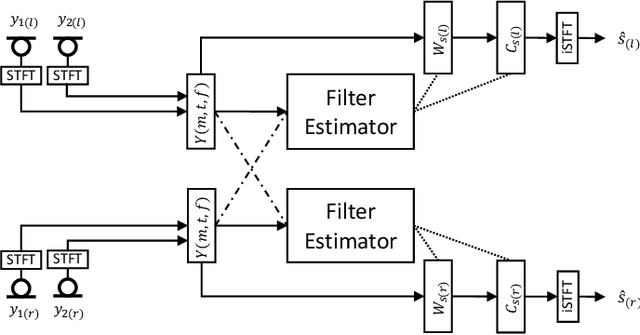

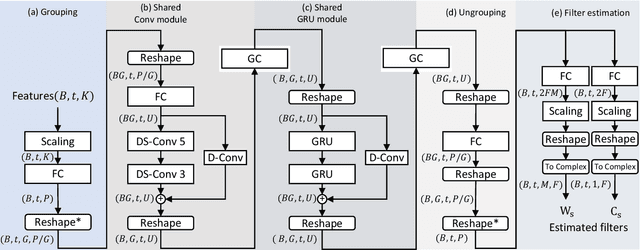

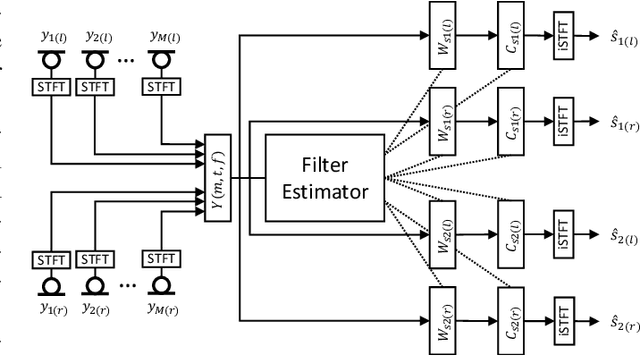

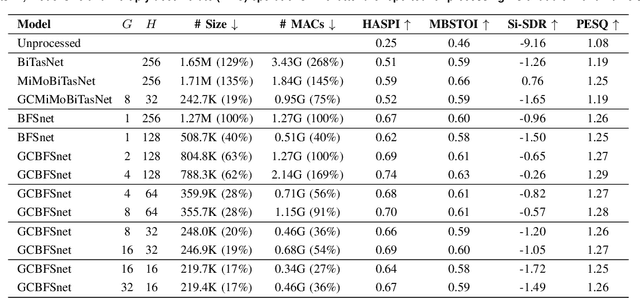

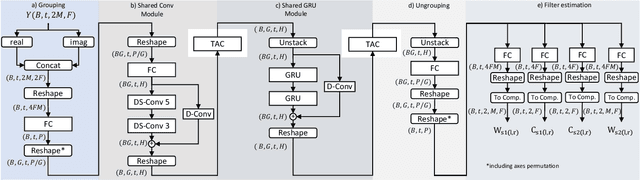

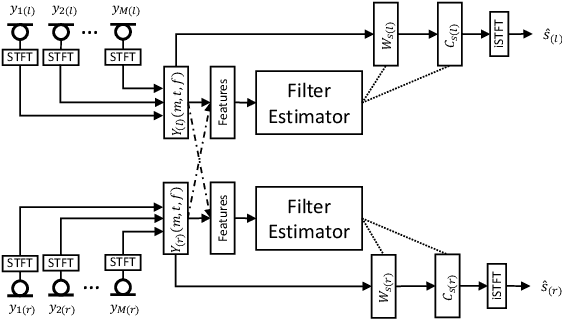

Abstract: In this paper, we introduce a causal low-latency low-complexity approach for binaural multichannel blind speaker separation in noisy reverberant conditions. The model, referred to as Group Communication Binaural Filter and Sum Network (GCBFSnet), predicts complex filters for filter-and-sum beamforming in the time-frequency domain. We apply Group Communication (GC), i.e., latent model variables are split into groups and processed with a shared sequence model, with the aim of reducing the complexity of a simple model containing only one convolutional and one recurrent module. With GC we are able to reduce the size of the model by up to 83 % and the complexity by up to 73 % compared to the model without GC, while mostly retaining performance. Even for the smallest model configuration, GCBFSnet matches the performance of a low-complexity TasNet baseline in most metrics despite the larger size and higher number of required operations of the baseline.
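The weight-sharing idea behind Group Communication can be illustrated with a minimal sketch. It assumes a plain linear layer as a stand-in for the shared sequence model and omits any exchange of information between groups; the function name `shared_group_layer` and all sizes are hypothetical and not taken from the paper.

```python
import numpy as np

def shared_group_layer(x, weight, num_groups):
    """Apply one shared weight matrix to every group of a feature vector.

    x: (features,) latent vector.
    weight: (features // num_groups, features // num_groups) shared matrix.
    """
    groups = x.reshape(num_groups, -1)   # (groups, features_per_group)
    out = groups @ weight.T              # the same weights process each group
    return out.reshape(-1)

# Illustrative sizes only (assumption, not from the paper).
features, num_groups = 64, 4
rng = np.random.default_rng(0)
x = rng.standard_normal(features)
w_shared = rng.standard_normal((features // num_groups, features // num_groups))
y = shared_group_layer(x, w_shared, num_groups)

params_full = features * features   # one dense layer over all features
params_shared = w_shared.size       # one small layer reused for every group
```

With these illustrative sizes, the shared layer holds 256 weights where a dense layer over all 64 features would hold 4096, which is the kind of saving the grouping is meant to provide.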

Low bit rate binaural link for improved ultra low-latency low-complexity multichannel speech enhancement in Hearing Aids

Jul 17, 2023

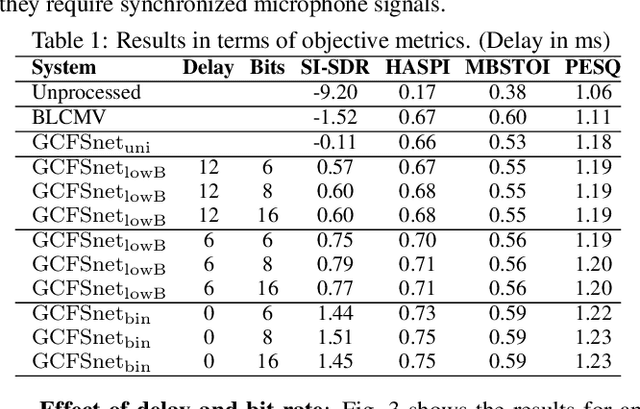

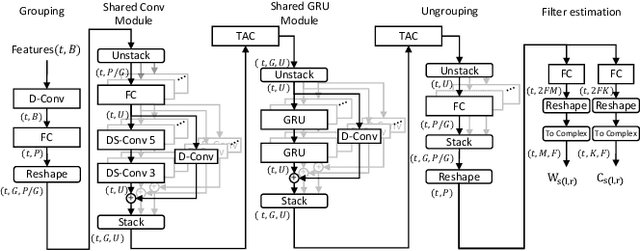

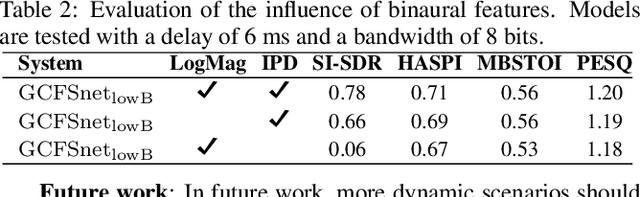

Abstract: Speech enhancement in hearing aids is a challenging task since the hardware limits the number of possible operations and the latency needs to be in the range of only a few milliseconds. We propose a deep-learning model compatible with these limitations, which we refer to as Group-Communication Filter-and-Sum Network (GCFSnet). GCFSnet is a causal multiple-input single-output enhancement model using filter-and-sum processing in the time-frequency domain and a multi-frame deep post filter. All filters are complex-valued and are estimated by a deep-learning model using weight-sharing through Group Communication and quantization-aware training for reducing model size and computational footprint. For a further increase in performance, a low bit rate binaural link for delayed binaural features is proposed to use binaural information while retaining a latency of 2 ms. The performance of an oracle binaural LCMV beamformer in non-low-latency configuration can be matched even by a unilateral configuration of the GCFSnet in terms of objective metrics.
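Filter-and-sum processing in the time-frequency domain can be sketched as follows. This is a simplified single-frame illustration: the filters here are placeholder values rather than network estimates, the multi-frame post filter is left out, and the convention of multiplying without conjugation is an assumption.

```python
import numpy as np

def filter_and_sum(stft, filters):
    """Combine microphone channels with complex time-frequency filters.

    stft, filters: complex arrays of shape (channels, frames, bins).
    Returns a single-channel spectrogram of shape (frames, bins).
    """
    return np.sum(filters * stft, axis=0)

# Placeholder data (assumption): 2 channels, 5 frames, 9 frequency bins.
rng = np.random.default_rng(0)
shape = (2, 5, 9)
stft = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

# Uniform filters reduce filter-and-sum to averaging the channels.
uniform = np.full(shape, 0.5 + 0.0j)
enhanced = filter_and_sum(stft, uniform)
```

In a learned system, a model would supply a different complex filter for every channel, frame, and frequency bin instead of the uniform values used here.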

Multilingual Query-by-Example Keyword Spotting with Metric Learning and Phoneme-to-Embedding Mapping

Apr 19, 2023

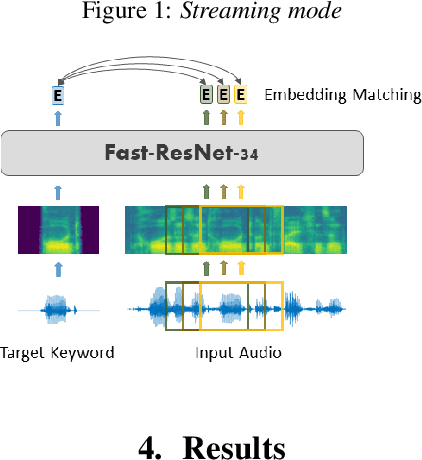

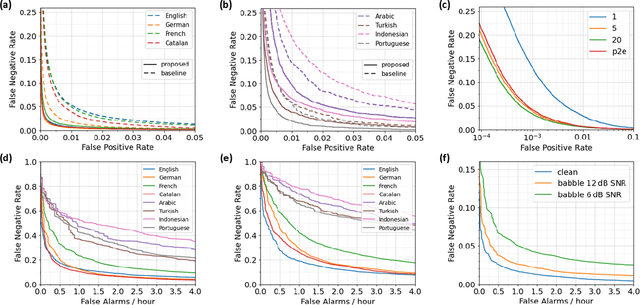

Abstract: In this paper, we propose a multilingual query-by-example keyword spotting (KWS) system based on a residual neural network. The model is trained as a classifier on a multilingual keyword dataset extracted from Common Voice sentences and fine-tuned using circle loss. We demonstrate the generalization ability of the model to new languages and report a mean reduction in EER of 59.2 % for previously seen and 47.9 % for unseen languages compared to a competitive baseline. We show that the word embeddings learned by the KWS model can be accurately predicted from the phoneme sequences using a simple LSTM model. Our system achieves a promising accuracy for streaming keyword spotting and keyword search on Common Voice audio using just 5 examples per keyword. Experiments on the Hey-Snips dataset show good performance with a false negative rate of 5.4 % at only 0.1 false alarms per hour.
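The equal error rate (EER) reported above is the operating point at which false rejections and false acceptances are equally frequent. A minimal sketch of one common way to estimate it from detection scores is given below; the threshold sweep, the function name `equal_error_rate`, and the toy scores are assumptions and do not reproduce the paper's evaluation protocol.

```python
import numpy as np

def equal_error_rate(target_scores, nontarget_scores):
    """Estimate the EER by sweeping a threshold over all observed scores."""
    target_scores = np.asarray(target_scores, dtype=float)
    nontarget_scores = np.asarray(nontarget_scores, dtype=float)
    thresholds = np.sort(np.concatenate([target_scores, nontarget_scores]))
    # False rejection: a target scores below the threshold.
    frr = np.array([(target_scores < t).mean() for t in thresholds])
    # False acceptance: a non-target scores at or above the threshold.
    far = np.array([(nontarget_scores >= t).mean() for t in thresholds])
    idx = np.argmin(np.abs(frr - far))   # closest point to FRR == FAR
    return float((frr[idx] + far[idx]) / 2)

# Toy scores (made up for illustration).
eer_separable = equal_error_rate([0.8, 0.9], [0.1, 0.2])
eer_overlapping = equal_error_rate([0.9, 0.4], [0.6, 0.1])
```

For finely sampled score distributions, an interpolated crossing point is often preferred over the nearest threshold used in this sketch.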

tPLCnet: Real-time Deep Packet Loss Concealment in the Time Domain Using a Short Temporal Context

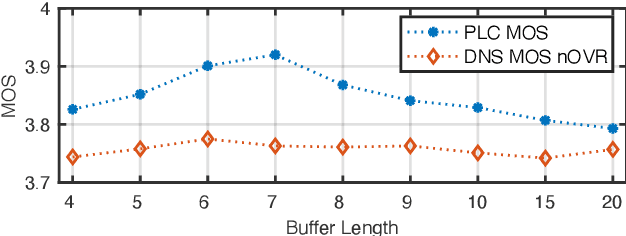

Apr 04, 2022

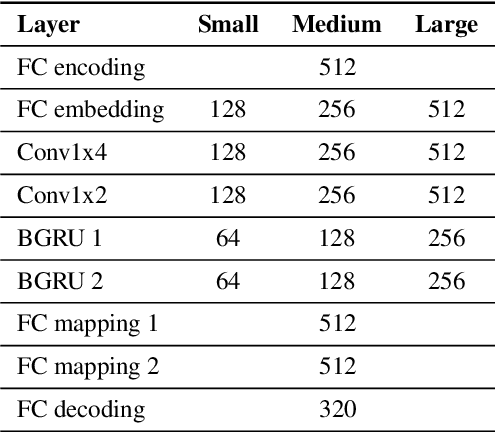

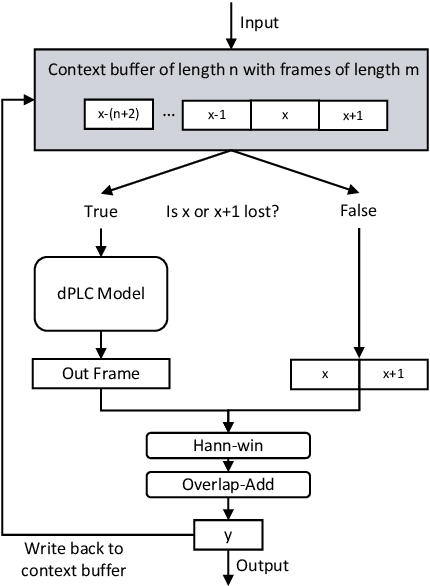

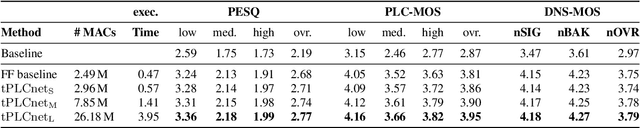

Abstract: This paper introduces a real-time time-domain packet loss concealment (PLC) neural network (tPLCnet). It efficiently predicts lost frames from a short context buffer in a sequence-to-one (seq2one) fashion. Because of its seq2one structure, a continuous inference of the model is not required since it can be triggered when packet loss is actually detected. It is trained on 64 h of open-source speech data and packet-loss traces of real calls provided by the Audio PLC Challenge. The model with the lowest complexity described in this paper reaches a robust PLC performance and consistent improvements over the zero-filling baseline for all metrics. A configuration with higher complexity is submitted to the PLC Challenge and shows a performance increase of 1.07 compared to the zero-filling baseline in terms of PLC-MOS on the blind test set and reaches a competitive 3rd place in the challenge ranking.
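The difference between the zero-filling baseline and on-demand seq2one concealment can be sketched as below. The predictor is a hypothetical stand-in that repeats the last frame; it is not the tPLCnet model, and the frame layout and context length are assumptions.

```python
import numpy as np

def conceal(frames, lost, predictor=None, context=4):
    """Replace lost frames; the predictor runs only when a loss is flagged.

    frames: (n_frames, frame_len) audio frames; lost: boolean (n_frames,).
    predictor: callable mapping a context buffer to one frame, or None
               to use the zero-filling baseline.
    """
    out = frames.astype(float)               # astype returns a copy
    for i in np.flatnonzero(lost):
        if predictor is None:
            out[i] = 0.0                     # zero-filling baseline
        else:
            ctx = out[max(0, i - context):i]  # only past output frames
            out[i] = predictor(ctx)           # one frame from a sequence
    return out

def repeat_last(ctx):
    """Hypothetical placeholder predictor: repeat the most recent frame."""
    return ctx[-1] if len(ctx) else np.zeros(ctx.shape[1])

frames = np.arange(12, dtype=float).reshape(4, 3)
lost = np.array([False, False, True, False])
zero_filled = conceal(frames, lost)
concealed = conceal(frames, lost, predictor=repeat_last)
```

Because the loop visits only the flagged frames, the cost of the predictor scales with the number of lost packets rather than with the length of the stream.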

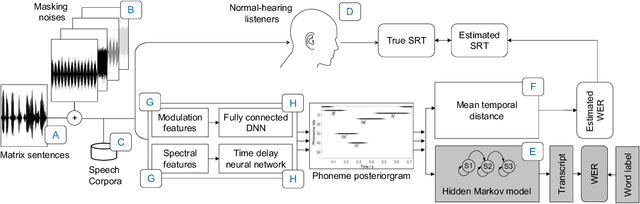

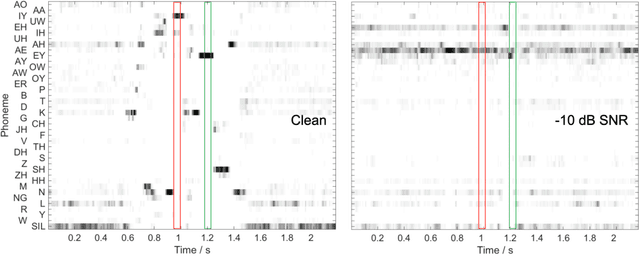

Prediction of speech intelligibility with DNN-based performance measures

Mar 17, 2022

Abstract: This paper presents a speech intelligibility model based on automatic speech recognition (ASR), combining phoneme probabilities from deep neural networks (DNN) and a performance measure that estimates the word error rate from these probabilities. This model requires neither the clean speech reference nor the word labels during testing, as the ASR decoding step, which finds the most likely sequence of words given phoneme posterior probabilities, is omitted. The model is evaluated via the root-mean-squared error between the predicted and observed speech reception thresholds from eight normal-hearing listeners. The recognition task consists of identifying noisy words from a German matrix sentence test. The speech material was mixed with eight noise maskers covering different modulation types, from speech-shaped stationary noise to a single-talker masker. The prediction performance is compared to five established models and an ASR model using word labels. Two combinations of features and networks were tested. Both include temporal information, either at the feature level (amplitude modulation filterbanks and a feed-forward network) or captured by the architecture (mel-spectrograms and a time-delay deep neural network, TDNN). The TDNN model is on par with the DNN while reducing the number of parameters by a factor of 37; this optimization allows parallel streams on dedicated hearing aid hardware, as a forward pass can be computed within the 10 ms of each frame. The proposed model performs almost as well as the label-based model and produces more accurate predictions than the baseline models.
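The evaluation measure named above, the root-mean-squared error between predicted and observed speech reception thresholds (SRTs), can be written out as a short sketch. The SRT values below are made-up placeholders, not results from the paper, and the function name `srt_rmse` is hypothetical.

```python
import numpy as np

def srt_rmse(predicted_db, observed_db):
    """Root-mean-squared error between predicted and observed SRTs in dB."""
    predicted_db = np.asarray(predicted_db, dtype=float)
    observed_db = np.asarray(observed_db, dtype=float)
    return float(np.sqrt(np.mean((predicted_db - observed_db) ** 2)))

# Placeholder SRTs for two maskers (illustrative values only).
error_db = srt_rmse([-7.0, -9.0], [-8.0, -8.0])
```

A lower value means the model's predicted thresholds lie closer to the thresholds measured with listeners, averaged over the noise maskers.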

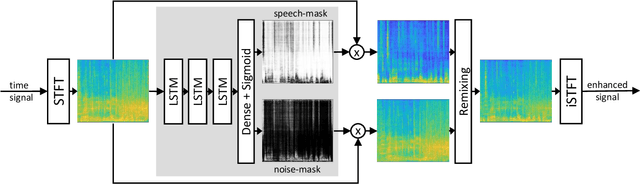

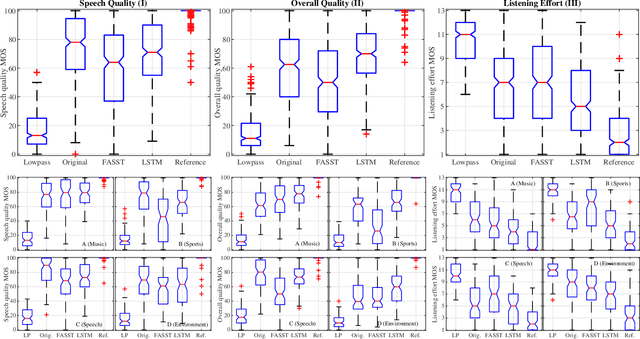

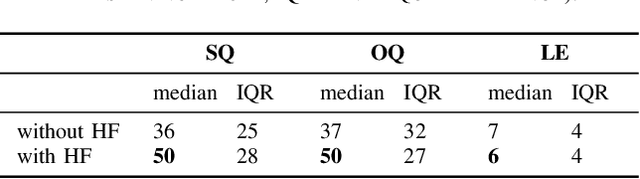

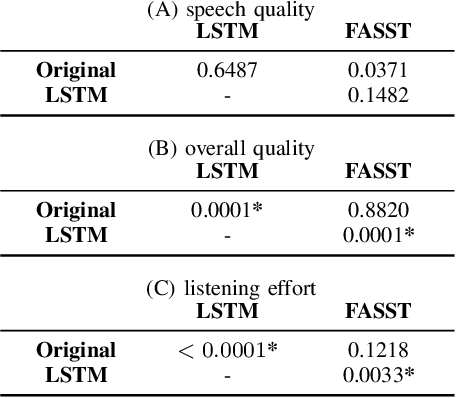

Reduction of Subjective Listening Effort for TV Broadcast Signals with Recurrent Neural Networks

Nov 02, 2021

Abstract: Listening to the audio of TV broadcast signals can be challenging for hearing-impaired as well as normal-hearing listeners, especially when background sounds are prominent or too loud compared to the speech signal. This can result in reduced satisfaction and increased listening effort for the listeners. Since the broadcast sound is usually premixed, we perform a subjective evaluation for quantifying the potential of speech enhancement systems based on audio source separation and recurrent neural networks (RNN). Recently, RNNs have shown promising results in the context of sound source separation and real-time signal processing. In this paper, we separate the speech from the background signals and remix the separated sounds at a higher signal-to-noise ratio. This differs from classic speech enhancement, where usually only the extracted speech signal is exploited. The subjective evaluation with 20 normal-hearing subjects on real TV-broadcast material shows that our proposed enhancement system is able to reduce the listening effort by around 2 points on a 13-point listening effort rating scale and increases the perceived sound quality compared to the original mixture.
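The remixing step can be illustrated with a minimal sketch that scales the background so the remix reaches a chosen signal-to-noise ratio. It assumes the separated speech and background signals are already available and that the background is not silent; the function name `remix_at_snr`, the test signals, and the 10 dB target are hypothetical.

```python
import numpy as np

def remix_at_snr(speech, background, target_snr_db):
    """Remix separated signals so speech-to-background power hits the target.

    Returns the remix and the gain applied to the background.
    Assumes the background has non-zero power.
    """
    speech_power = np.mean(speech ** 2)
    background_power = np.mean(background ** 2)
    # Solve 10*log10(Ps / (g^2 * Pb)) = target_snr_db for the gain g.
    gain = np.sqrt(speech_power / (background_power * 10 ** (target_snr_db / 10)))
    return speech + gain * background, gain

# Synthetic stand-ins for separated speech and background (assumption).
rng = np.random.default_rng(0)
speech = rng.standard_normal(16000)
background = 2.0 * rng.standard_normal(16000)
remix, gain = remix_at_snr(speech, background, target_snr_db=10.0)

achieved_snr_db = 10 * np.log10(np.mean(speech ** 2) / np.mean((gain * background) ** 2))
```

Keeping an attenuated background in the output, rather than discarding it, is what distinguishes this remix from passing on the extracted speech alone.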

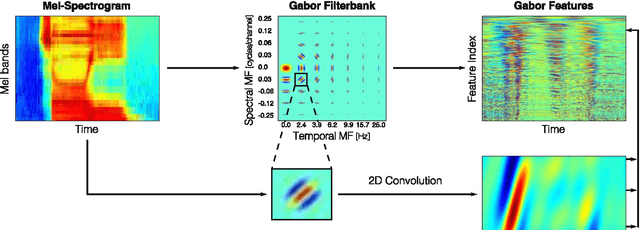

On the Relevance of Auditory-Based Gabor Features for Deep Learning in Automatic Speech Recognition

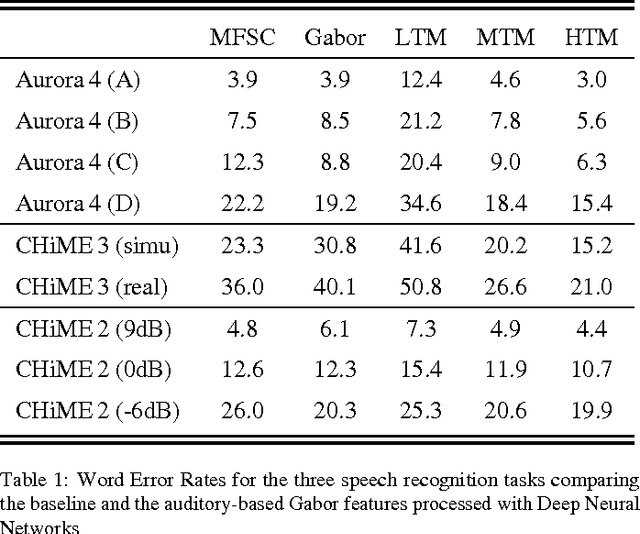

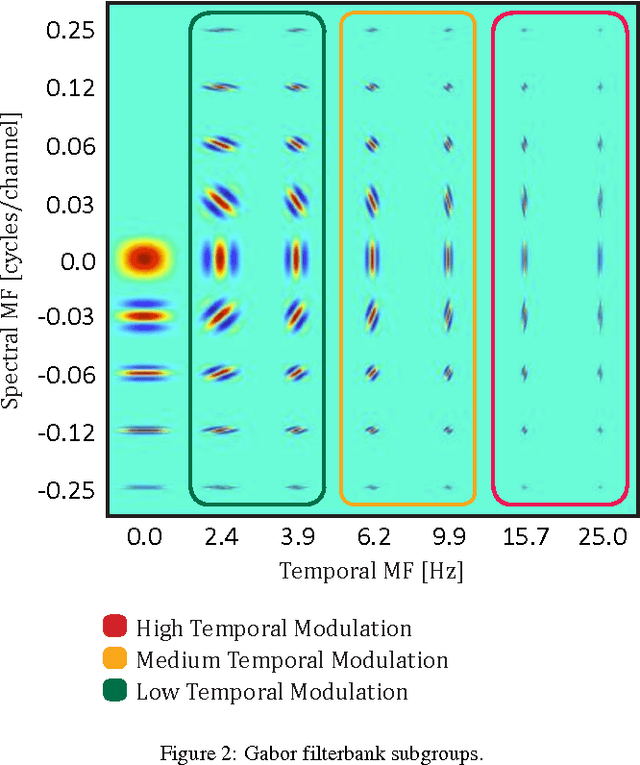

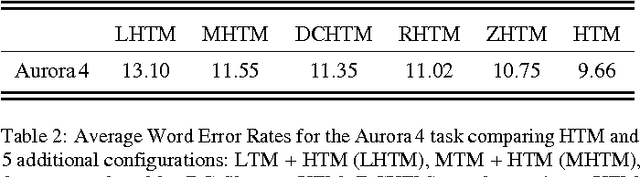

Feb 14, 2017

Abstract: Previous studies support the idea of merging auditory-based Gabor features with deep learning architectures to achieve robust automatic speech recognition; however, the cause behind the gain of such a combination is still unknown. We believe these representations provide the deep learning decoder with more discriminable cues. Our aim with this paper is to validate this hypothesis by performing experiments with three different recognition tasks (Aurora 4, CHiME 2 and CHiME 3) and assessing the discriminability of the information encoded by Gabor filterbank features. Additionally, to identify the contribution of low, medium and high temporal modulation frequencies, subsets of the Gabor filterbank were used as features (dubbed LTM, MTM and HTM, respectively). With temporal modulation frequencies between 16 and 25 Hz, HTM consistently outperformed the remaining ones in every condition, highlighting the robustness of these representations against channel distortions, low signal-to-noise ratios and acoustically challenging real-life scenarios, with relative improvements from 11 to 56 % against a Mel-filterbank-DNN baseline. To explain the results, a measure of similarity between phoneme classes from DNN activations is proposed and linked to their acoustic properties. We find this measure to be consistent with the observed error rates and highlight specific differences on phoneme level to pinpoint the benefit of the proposed features.
