Basem Rizk

From Videos to Indexed Knowledge Graphs -- Framework to Marry Methods for Multimodal Content Analysis and Understanding

Oct 01, 2025

Abstract: Analysis of multi-modal content can be tricky and computationally expensive, and it can require a significant amount of engineering effort. Much work exists on applying pre-trained models to static data, yet fusing these open-source models and methods with complex data such as videos remains relatively challenging. In this paper, we present a framework that enables efficient prototyping of pipelines for multi-modal content analysis. We craft a candidate recipe for a pipeline, marrying a set of pre-trained models, to convert videos into a temporal semi-structured data format. We translate this structure further into a frame-level indexed knowledge graph representation that is queryable and supports continual learning, enabling the dynamic incorporation of new domain-specific knowledge through an interactive medium.

Optimizing SIA Development: A Case Study in User-Centered Design for Estuary, a Multimodal Socially Interactive Agent Framework

Apr 20, 2025

Abstract: This case study presents our user-centered design model for Socially Intelligent Agent (SIA) development frameworks through our experience developing Estuary, an open-source multimodal framework for building low-latency real-time socially interactive agents. We leverage the Rapid Assessment Process (RAP) to collect the thoughts of leading researchers in the field of SIAs regarding the current state of the art for SIA development, as well as their evaluation of how well Estuary may address current research gaps. We achieve this through a series of end-user interviews conducted by a fellow researcher in the community. We hope that the findings of our work will not only assist the continued development of Estuary but also guide the development of other future frameworks and technologies for SIAs.

Beyond Visual Understanding: Introducing PARROT-360V for Vision Language Model Benchmarking

Nov 20, 2024

Abstract: Current benchmarks for evaluating Vision Language Models (VLMs) often fall short in thoroughly assessing model abilities to understand and process complex visual and textual content. They typically focus on simple tasks that do not require deep reasoning or the integration of multiple data modalities to solve an original problem. To address this gap, we introduce the PARROT-360V Benchmark, a novel and comprehensive benchmark featuring 2487 challenging visual puzzles designed to test VLMs on complex visual reasoning tasks. We evaluated leading models (GPT-4o, Claude-3.5-Sonnet, and Gemini-1.5-Pro) using PARROT-360V to assess their capabilities in combining visual clues with language skills to solve tasks in a manner akin to human problem-solving. Our findings reveal a notable performance gap: state-of-the-art models scored between 28 and 56 percent on our benchmark, significantly lower than their performance on popular benchmarks. This underscores the limitations of current VLMs in handling complex, multi-step reasoning tasks and highlights the need for more robust evaluation frameworks to advance the field.

Estuary: A Framework For Building Multimodal Low-Latency Real-Time Socially Interactive Agents

Oct 26, 2024

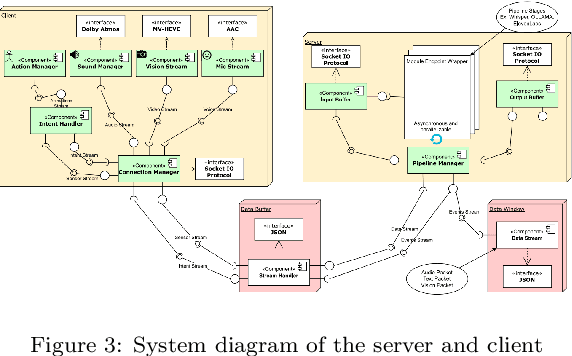

Abstract: The rise in capability and ubiquity of generative artificial intelligence (AI) technologies has enabled their application to the field of Socially Interactive Agents (SIAs). Despite rising interest in modern AI-powered components used for real-time SIA research, substantial friction remains due to the absence of a standardized and universal SIA framework. To target this absence, we developed Estuary: a multimodal (text, audio, and soon video) framework which facilitates the development of low-latency, real-time SIAs. Estuary seeks to reduce repeat work between studies and to provide a flexible platform that can be run entirely off-cloud to maximize configurability, controllability, reproducibility of studies, and speed of agent response times. We do this by constructing a robust multimodal framework which incorporates current and future components seamlessly into a modular and interoperable architecture.

Can Language Model Moderators Improve the Health of Online Discourse?

Nov 16, 2023

Abstract: Human moderation of online conversation is essential to maintaining civility and focus in a dialogue, but is challenging to scale and harmful to moderators. The inclusion of sophisticated natural language generation modules as a force multiplier to aid moderators is a tantalizing prospect, but adequate evaluation approaches have so far been elusive. In this paper, we establish a systematic definition of conversational moderation effectiveness through a multidisciplinary lens that incorporates insights from social science. We then propose a comprehensive evaluation framework that uses this definition to assess models' moderation capabilities independently of human intervention. With our framework, we conduct the first known study of conversational dialogue models as moderators, finding that appropriately prompted models can provide specific and fair feedback on toxic behavior but struggle to influence users to increase their levels of respect and cooperation.
