Antonio Alvarez Valdivia

A Modular Haptic Display with Reconfigurable Signals for Personalized Information Transfer

Jun 06, 2025

Abstract:We present a customizable soft haptic system that integrates modular hardware with an information-theoretic algorithm to personalize feedback for different users and tasks. Our platform features modular, multi-degree-of-freedom pneumatic displays, where different signal types, such as pressure, frequency, and contact area, can be activated or combined using fluidic logic circuits. These circuits simplify control by reducing reliance on specialized electronics and enabling coordinated actuation of multiple haptic elements through a compact set of inputs. Our approach allows rapid reconfiguration of haptic signal rendering through hardware-level logic switching without rewriting code. Personalization of the haptic interface is achieved through the combination of modular hardware and software-driven signal selection. To determine which display configurations will be most effective, we model haptic communication as a signal transmission problem, where an agent must convey latent information to the user. We formulate the optimization problem to identify the haptic hardware setup that maximizes the information transfer between the intended message and the user's interpretation, accounting for individual differences in sensitivity, preferences, and perceptual salience. We evaluate this framework through user studies where participants interact with reconfigurable displays under different signal combinations. Our findings support the role of modularity and personalization in creating multimodal haptic interfaces and advance the development of reconfigurable systems that adapt with users in dynamic human-machine interaction contexts.
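One common way to quantify "information transfer" between an intended message and a user's interpretation is the mutual information of a confusion matrix. The sketch below assumes that reading; it is not taken from the paper. The function names, the configuration labels, and the counts are all hypothetical and serve only to illustrate picking the configuration with the highest estimated transfer.

```python
import math


def mutual_information(confusion):
    """Estimate mutual information (in bits) from a count matrix.

    confusion[i][j] = number of trials where signal i was sent
    and the user reported signal j.
    """
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(col) for col in zip(*confusion)]
    mi = 0.0
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            if count == 0:
                continue  # zero-probability cells contribute nothing
            p_xy = count / total
            # p_xy / (p_x * p_y), written in terms of raw counts
            ratio = count * total / (row_sums[i] * col_sums[j])
            mi += p_xy * math.log2(ratio)
    return mi


def best_configuration(confusions):
    """Return the configuration name with the highest estimated transfer."""
    return max(confusions, key=lambda name: mutual_information(confusions[name]))


# Hypothetical per-user confusion matrices for two display configurations.
confusions = {
    "pressure_only": [[8, 2], [3, 7]],
    "pressure_plus_frequency": [[10, 0], [0, 10]],
}
print(best_configuration(confusions))
```

In a personalization setting, one such matrix would be collected per user and per configuration, so the selected configuration can differ between users.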

Anisotropic Stiffness and Programmable Actuation for Soft Robots Enabled by an Inflated Rotational Joint

Oct 16, 2024

Abstract:Soft robots are known for their ability to perform tasks with great adaptability, enabled by their distributed, non-uniform stiffness and actuation. Bending is the most fundamental motion for soft robot design, but creating a robust and easy-to-fabricate soft bending joint with tunable properties remains an active research problem. In this work, we demonstrate an inflatable actuation module for soft robots with a defined bending plane enabled by forced partial wrinkling. This lowers the structural stiffness in the bending direction, with the final stiffness easily designed by the ratio of wrinkled and unwrinkled regions. We present models and experimental characterization showing the stiffness properties of the actuation module, as well as its ability to maintain the kinematic constraint over a large range of loading conditions. We demonstrate the potential for complex actuation in a soft continuum robot and for decoupling actuation force and efficiency from load capacity. The module provides a novel method for embedding intelligent actuation into soft pneumatic robots.

AeroHaptix: A Wearable Vibrotactile Feedback System for Enhancing Collision Avoidance in UAV Teleoperation

Jul 16, 2024

Abstract:Haptic feedback enhances collision avoidance by providing directional obstacle information to operators in unmanned aerial vehicle (UAV) teleoperation. However, such feedback is often rendered via haptic joysticks, which are unfamiliar to UAV operators and limited to single-directional force feedback. Additionally, the direct coupling of the input device and the feedback method diminishes the operators' control authority and causes oscillatory movements. To overcome these limitations, we propose AeroHaptix, a wearable haptic feedback system that uses high-resolution vibrations to communicate multiple obstacle directions simultaneously. The vibrotactile actuators' layout was optimized based on a perceptual study to eliminate perceptual biases and achieve uniform spatial coverage. A novel rendering algorithm, MultiCBF, was adapted from control barrier functions to support multi-directional feedback. System evaluation showed that AeroHaptix effectively reduced collisions in complex environments, and operators reported significantly lower physical workload, improved situational awareness, and increased control authority.

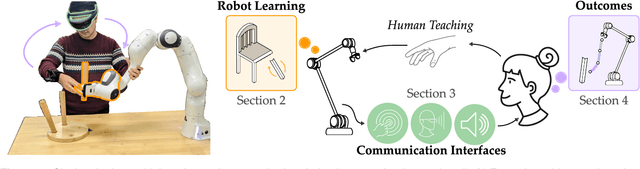

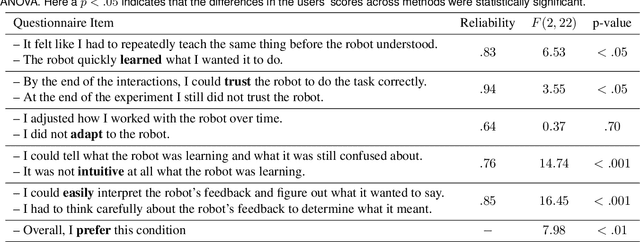

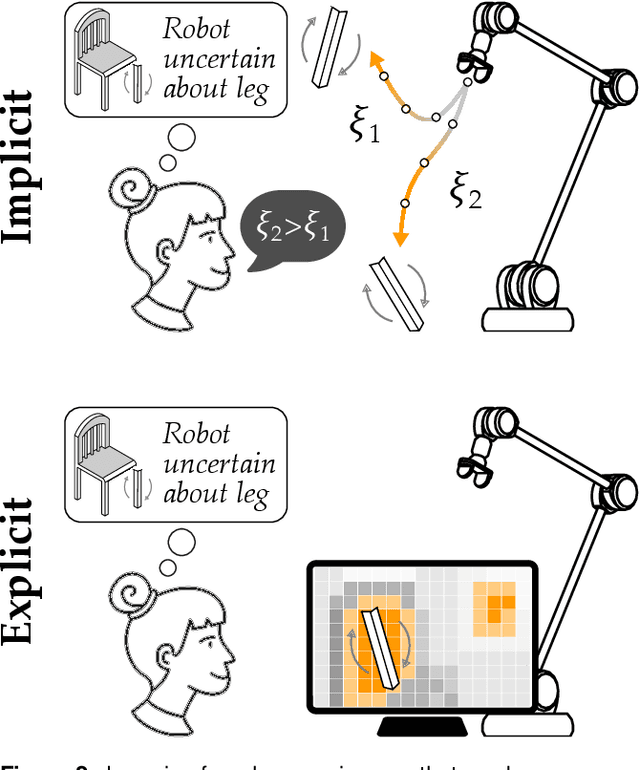

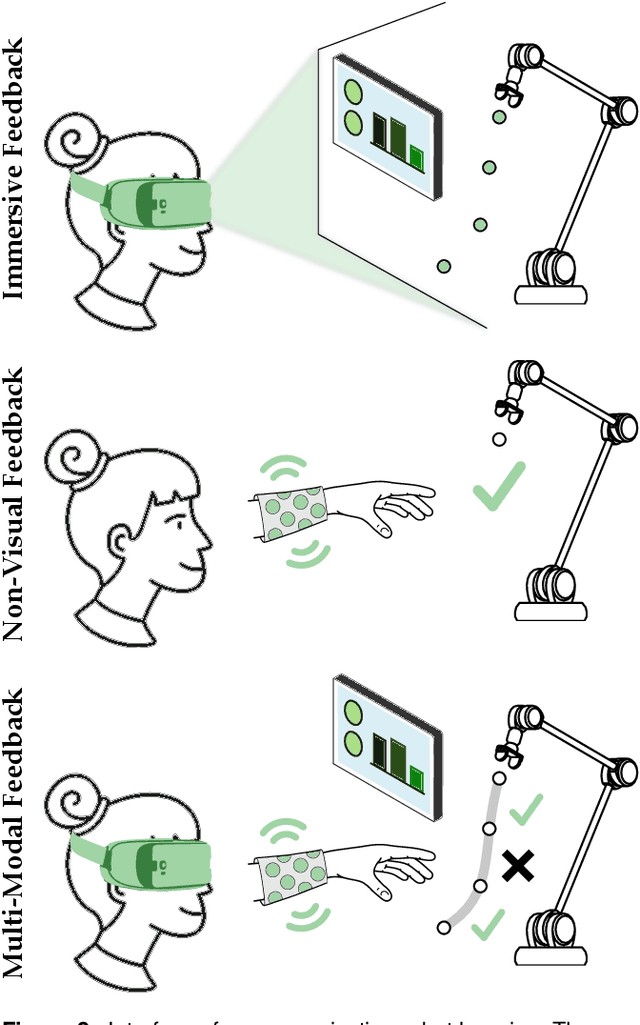

A Review of Communicating Robot Learning during Human-Robot Interaction

Dec 01, 2023

Abstract:For robots to seamlessly interact with humans, we first need to make sure that humans and robots understand one another. Diverse algorithms have been developed to enable robots to learn from humans (i.e., transferring information from humans to robots). In parallel, visual, haptic, and auditory communication interfaces have been designed to convey the robot's internal state to the human (i.e., transferring information from robots to humans). Prior research often separates these two directions of information transfer, and focuses primarily on either learning algorithms or communication interfaces. By contrast, in this review we take an interdisciplinary approach to identify common themes and emerging trends that close the loop between learning and communication. Specifically, we survey state-of-the-art methods and outcomes for communicating a robot's learning back to the human teacher during human-robot interaction. This discussion connects human-in-the-loop learning methods and explainable robot learning with multi-modal feedback systems and measures of human-robot interaction. We find that -- when learning and communication are developed together -- the resulting closed-loop system can lead to improved human teaching, increased human trust, and human-robot co-adaptation. The paper includes a perspective on several of the interdisciplinary research themes and open questions that could advance how future robots communicate their learning to everyday operators. Finally, we implement a selection of the reviewed methods in a case study where participants kinesthetically teach a robot arm. This case study documents and tests an integrated approach for learning in ways that can be communicated, conveying this learning across multi-modal interfaces, and measuring the resulting changes in human and robot behavior. See videos of our case study here: https://youtu.be/EXfQctqFzWs

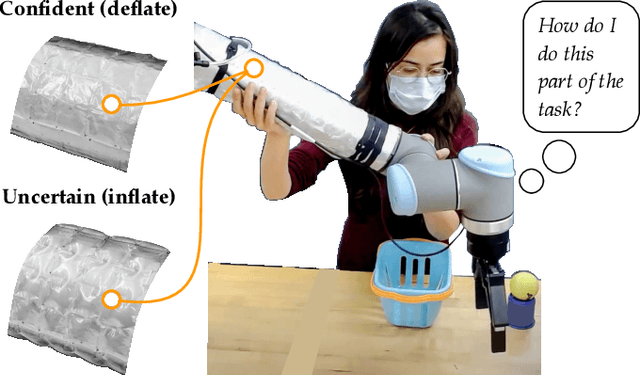

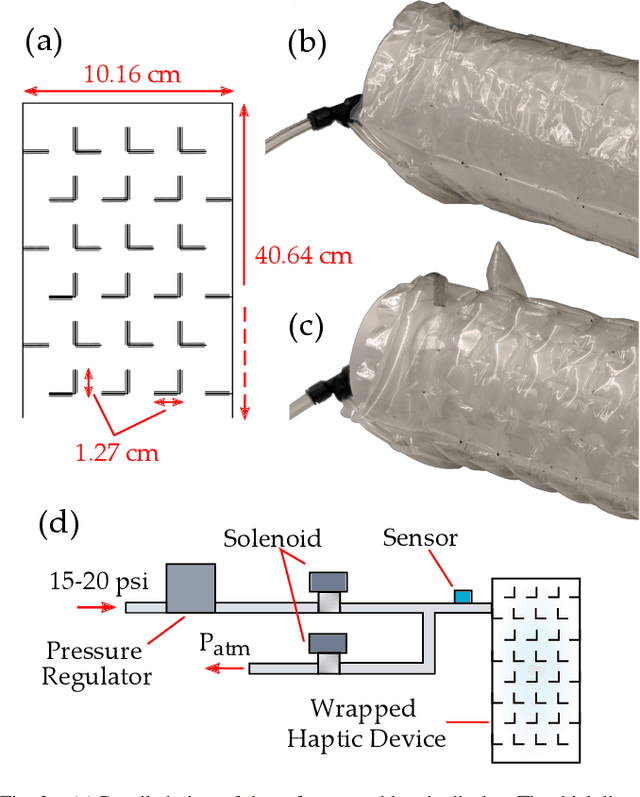

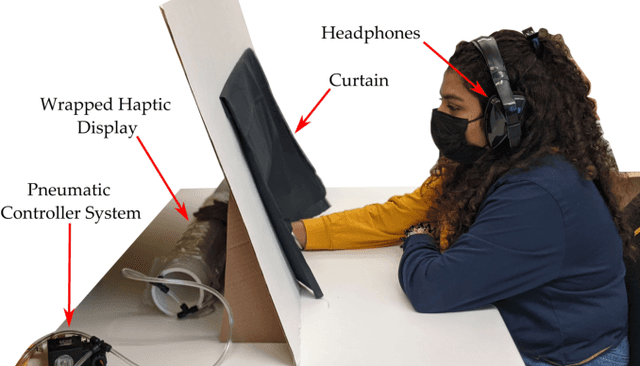

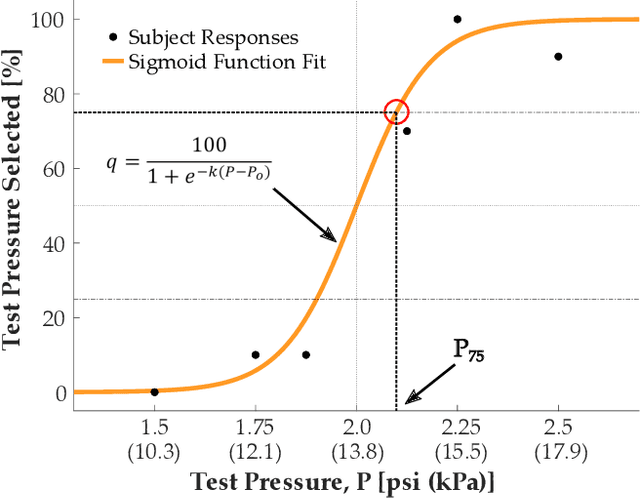

Wrapping Haptic Displays Around Robot Arms to Communicate Learning

Jul 07, 2022

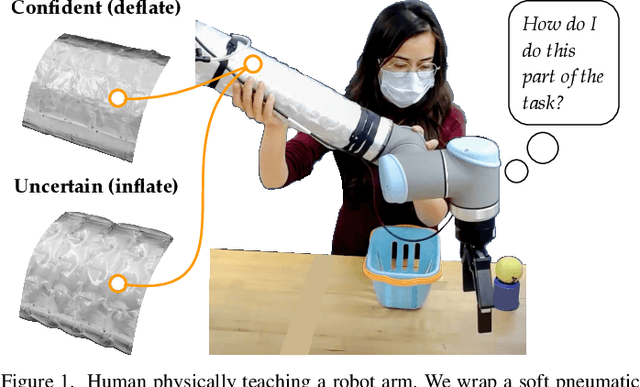

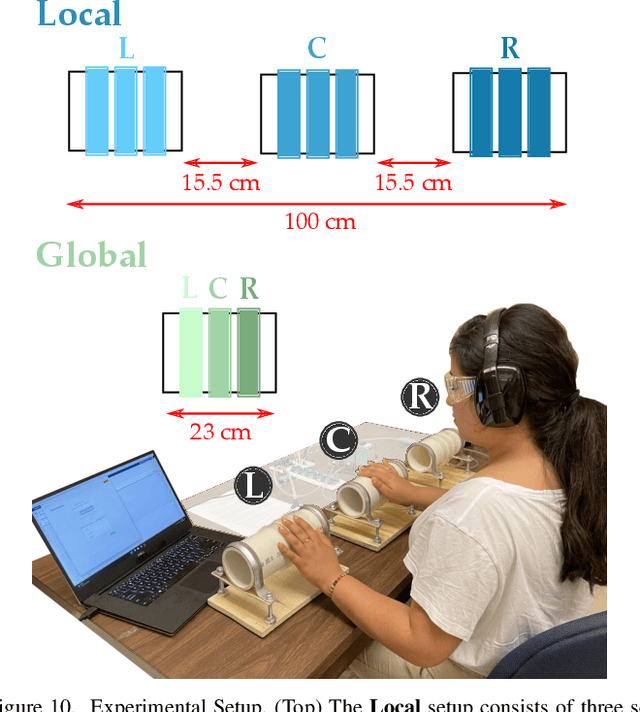

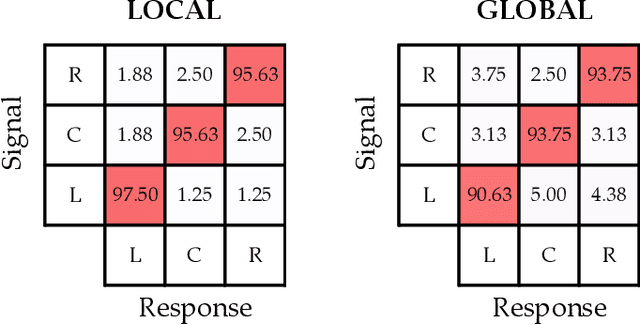

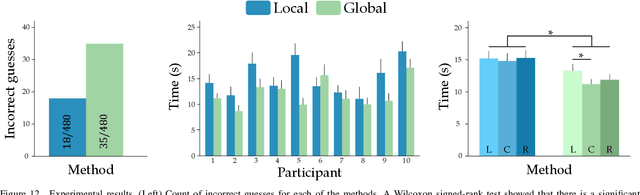

Abstract:Humans can leverage physical interaction to teach robot arms. As the human kinesthetically guides the robot through demonstrations, the robot learns the desired task. While prior works focus on how the robot learns, it is equally important for the human teacher to understand what their robot is learning. Visual displays can communicate this information; however, we hypothesize that visual feedback alone misses out on the physical connection between the human and robot. In this paper we introduce a novel class of soft haptic displays that wrap around the robot arm, adding signals without affecting interaction. We first design a pneumatic actuation array that remains flexible in mounting. We then develop single- and multi-dimensional versions of this wrapped haptic display, and explore human perception of the rendered signals during psychophysical tests and robot learning. We ultimately find that people accurately distinguish single-dimensional feedback with a Weber fraction of 11.4%, and identify multi-dimensional feedback with 94.5% accuracy. When physically teaching robot arms, humans leverage the single- and multi-dimensional feedback to provide better demonstrations than with visual feedback: our wrapped haptic display decreases teaching time while increasing demonstration quality. This improvement depends on the location and distribution of the wrapped haptic display. You can see videos of our device and experiments here: https://youtu.be/yPcMGeqsjdM

Wrapped Haptic Display for Communicating Physical Robot Learning

Nov 08, 2021

Abstract:Physical interaction between humans and robots can help robots learn to perform complex tasks. The robot arm gains information by observing how the human kinesthetically guides it throughout the task. While prior works focus on how the robot learns, it is equally important that this learning is transparent to the human teacher. Visual displays that show the robot's uncertainty can potentially communicate this information; however, we hypothesize that visual feedback mechanisms miss out on the physical connection between the human and robot. In this work we present a soft haptic display that wraps around and conforms to the surface of a robot arm, adding a haptic signal at an existing point of contact without significantly affecting the interaction. We demonstrate how soft actuation creates a salient haptic signal while still allowing flexibility in device mounting. Using a psychophysics experiment, we show that users can accurately distinguish inflation levels of the wrapped display with an average Weber fraction of 11.4%. When we place the wrapped display around the arm of a robotic manipulator, users are able to interpret and leverage the haptic signal in sample robot learning tasks, improving identification of areas where the robot needs more training and enabling the user to provide better demonstrations. See videos of our device and user studies here: https://youtu.be/tX-2Tqeb9Nw
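To make the reported Weber fraction concrete: under Weber's law, the just-noticeable difference (JND) scales with the reference intensity, so a fraction of 11.4% means a change of roughly 11.4% of the reference level is needed for reliable discrimination. The sketch below is a minimal illustration of that arithmetic. The function names and the reference value of 2.0 (in arbitrary pressure units) are hypothetical and do not come from the paper.

```python
def just_noticeable_difference(reference, weber_fraction=0.114):
    """JND predicted by Weber's law: delta = k * reference."""
    return weber_fraction * reference


def is_distinguishable(reference, comparison, weber_fraction=0.114):
    """True if the comparison differs from the reference by at least one JND."""
    jnd = just_noticeable_difference(reference, weber_fraction)
    return abs(comparison - reference) >= jnd


# Hypothetical reference inflation level, in arbitrary pressure units.
reference_level = 2.0
print(just_noticeable_difference(reference_level))
print(is_distinguishable(reference_level, 2.3))
print(is_distinguishable(reference_level, 2.1))
```

With these illustrative numbers, a step from 2.0 to 2.3 exceeds the predicted JND while a step to 2.1 does not, which is the kind of constraint that limits how many distinct inflation levels a display can usefully render.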
