Francesco Fuentes

Physics-Grounded Differentiable Simulation for Soft Growing Robots

Jan 29, 2025

Abstract: Soft-growing robots (i.e., vine robots) are a promising class of soft robots that allow for navigation and growth in tightly confined environments. However, these robots remain challenging to model and control due to the complex interplay of the inflated structure and inextensible materials, which creates obstacles for autonomous operation and design optimization. Although existing simulators for these systems have achieved qualitative and quantitative success in matching high-level behavior, they often fail to capture realistic vine robot shapes because they rely on simplified parameter models, and they struggle with the high-throughput simulation necessary for planning and parameter optimization. We propose a differentiable simulator for these systems, enabling the use of the simulator "in-the-loop" of gradient-based optimization approaches to address the issues listed above. With the more complex parameter fitting made possible by this approach, we experimentally validate and integrate a closed-form nonlinear stiffness model for thin-walled inflated tubes based on a first-principles approach to local material wrinkling. Our simulator also takes advantage of data-parallel operations by leveraging existing differentiable computation frameworks, allowing multiple simultaneous rollouts. We demonstrate the feasibility of using a physics-grounded nonlinear stiffness model within our simulator, and show how it can be an effective tool in sim-to-real transfer. Our implementation is available as open source.
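The idea of running a simulator "in-the-loop" of gradient-based optimization, with several rollouts evaluated in parallel, can be illustrated with a minimal JAX sketch. This is not the authors' simulator or their stiffness model: the damped torsional spring, the constants `DT` and `STEPS`, the damping value, the learning rate, and all function names are hypothetical stand-ins chosen only to show the pattern of differentiating through a rollout to fit a stiffness parameter.

```python
import jax
import jax.numpy as jnp

DT = 0.01     # integration step (hypothetical value)
STEPS = 200   # rollout length (hypothetical value)


def rollout(stiffness, theta0):
    """Simulate a damped torsional spring, a toy stand-in for one bending joint."""
    def step(state, _):
        theta, omega = state
        # Semi-implicit Euler: update velocity from the restoring torque, then the angle.
        omega = omega + DT * (-stiffness * theta - 0.5 * omega)
        theta = theta + DT * omega
        return (theta, omega), theta

    init = (theta0, jnp.zeros_like(theta0))
    _, trajectory = jax.lax.scan(step, init, None, length=STEPS)
    return trajectory


# vmap evaluates many rollouts (one per initial angle) in a single data-parallel call.
batched_rollout = jax.vmap(rollout, in_axes=(None, 0))


def loss(stiffness, theta0_batch, target):
    """Mean squared error between simulated and reference trajectories."""
    return jnp.mean((batched_rollout(stiffness, theta0_batch) - target) ** 2)


@jax.jit
def update(stiffness, theta0_batch, target, lr):
    # The gradient flows through every simulation step of every rollout.
    value, grad = jax.value_and_grad(loss)(stiffness, theta0_batch, target)
    return stiffness - lr * grad, value


theta0_batch = jnp.linspace(0.2, 1.0, 8)
# Synthetic "measurements" generated with a reference stiffness of 4.0.
target = batched_rollout(4.0, theta0_batch)

stiffness = jnp.asarray(1.0)  # deliberately wrong initial guess
initial_loss = loss(stiffness, theta0_batch, target)
for _ in range(300):
    stiffness, value = update(stiffness, theta0_batch, target, 2.0)
```

In this sketch the reference trajectories are synthetic, so the fit only shows that the gradient signal moves the parameter toward the value that generated the data; fitting to real measurements would replace `target` with recorded robot shapes.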

Wrapping Haptic Displays Around Robot Arms to Communicate Learning

Jul 07, 2022

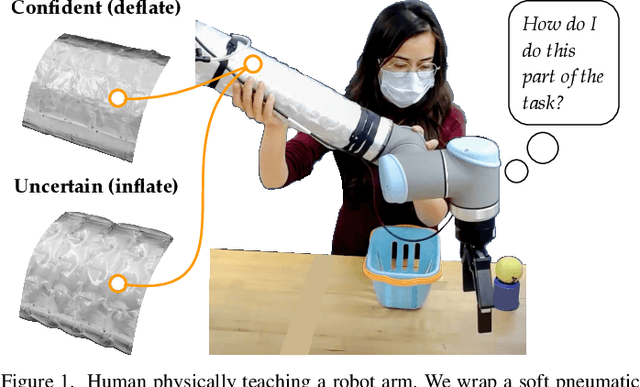

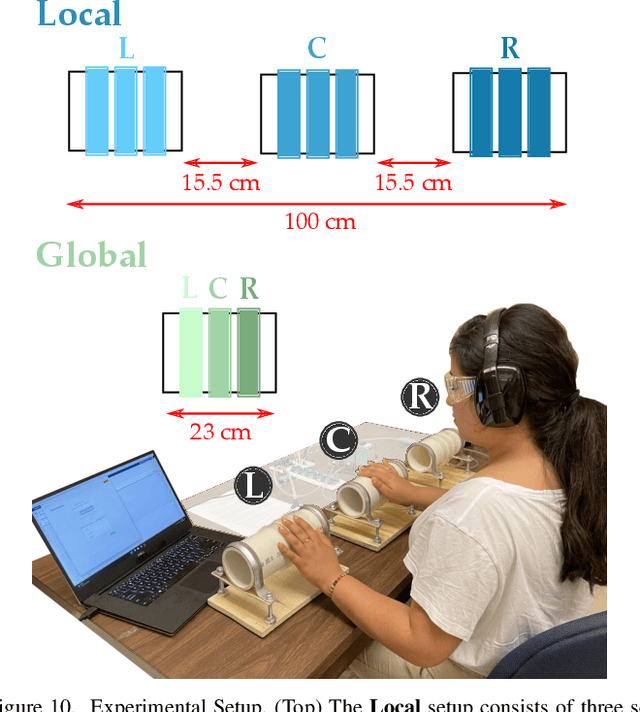

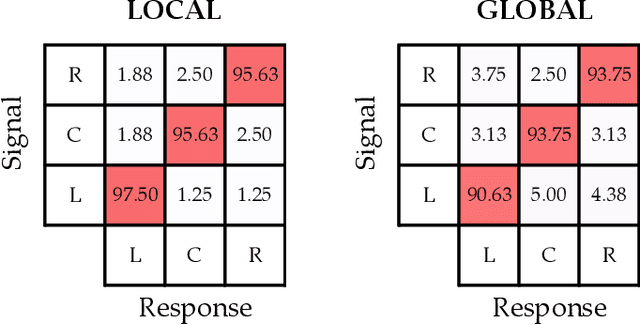

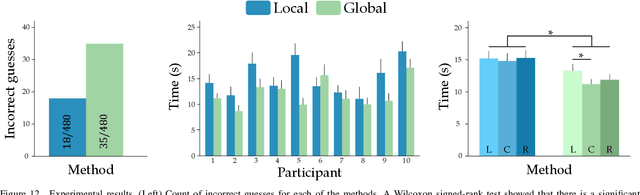

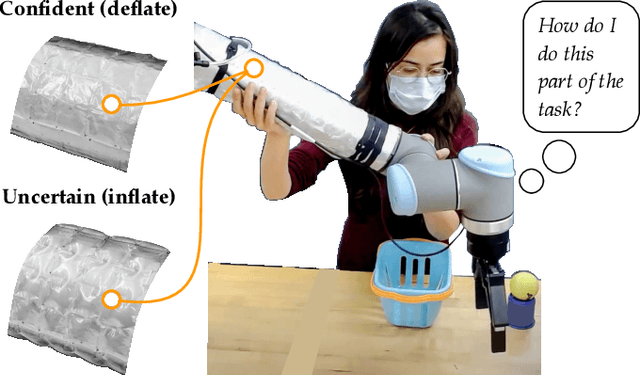

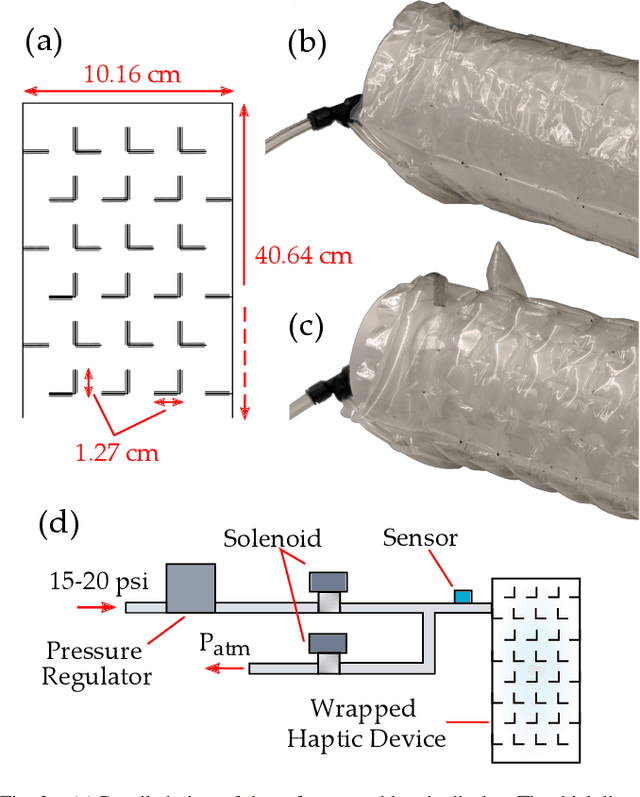

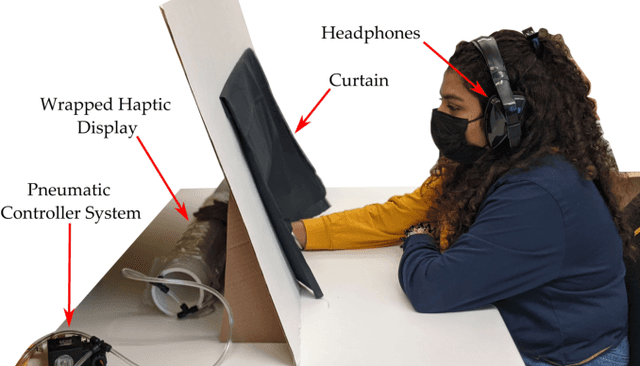

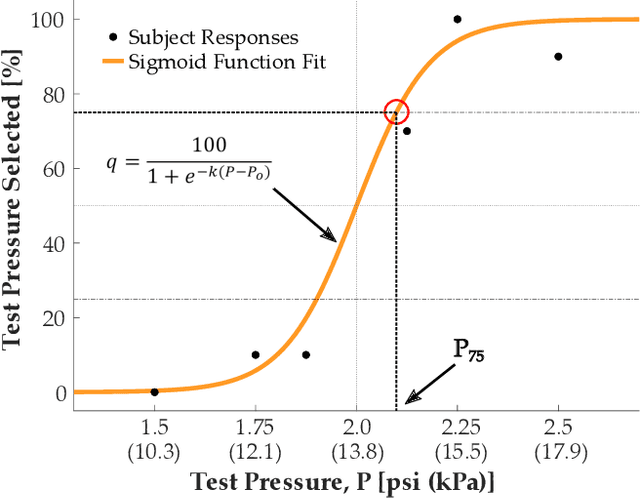

Abstract: Humans can leverage physical interaction to teach robot arms. As the human kinesthetically guides the robot through demonstrations, the robot learns the desired task. While prior works focus on how the robot learns, it is equally important for the human teacher to understand what their robot is learning. Visual displays can communicate this information; however, we hypothesize that visual feedback alone misses out on the physical connection between the human and robot. In this paper we introduce a novel class of soft haptic displays that wrap around the robot arm, adding signals without affecting interaction. We first design a pneumatic actuation array that remains flexible in mounting. We then develop single- and multi-dimensional versions of this wrapped haptic display, and explore human perception of the rendered signals during psychophysics tests and robot learning. We ultimately find that people accurately distinguish single-dimensional feedback with a Weber fraction of 11.4%, and identify multi-dimensional feedback with 94.5% accuracy. When physically teaching robot arms, humans leverage the single- and multi-dimensional feedback to provide better demonstrations than with visual feedback: our wrapped haptic display decreases teaching time while increasing demonstration quality. This improvement depends on the location and distribution of the wrapped haptic display. You can see videos of our device and experiments here: https://youtu.be/yPcMGeqsjdM
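For readers unfamiliar with the term, the Weber fraction is conventionally the just-noticeable difference in a stimulus divided by the reference stimulus intensity. The symbols below are assumptions for illustration; the abstract does not state which physical quantity (for example, inflation pressure) was used as the stimulus.

$$
W = \frac{\Delta I_{\mathrm{JND}}}{I_{\mathrm{ref}}}
$$

Under this definition, a Weber fraction of 11.4% would mean that a change of roughly $0.114\, I_{\mathrm{ref}}$ from the reference level is just detectable.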

Wrapped Haptic Display for Communicating Physical Robot Learning

Nov 08, 2021

Abstract: Physical interaction between humans and robots can help robots learn to perform complex tasks. The robot arm gains information by observing how the human kinesthetically guides it throughout the task. While prior works focus on how the robot learns, it is equally important that this learning is transparent to the human teacher. Visual displays that show the robot's uncertainty can potentially communicate this information; however, we hypothesize that visual feedback mechanisms miss out on the physical connection between the human and robot. In this work we present a soft haptic display that wraps around and conforms to the surface of a robot arm, adding a haptic signal at an existing point of contact without significantly affecting the interaction. We demonstrate how soft actuation creates a salient haptic signal while still allowing flexibility in device mounting. Using a psychophysics experiment, we show that users can accurately distinguish inflation levels of the wrapped display with an average Weber fraction of 11.4%. When we place the wrapped display around the arm of a robotic manipulator, users are able to interpret and leverage the haptic signal in sample robot learning tasks, improving identification of areas where the robot needs more training and enabling the user to provide better demonstrations. See videos of our device and user studies here: https://youtu.be/tX-2Tqeb9Nw
