Anthony C. Constantinou

Decoding the mechanisms of the Hattrick football manager game using Bayesian network structure learning for optimal decision-making

Apr 13, 2025

Abstract:Hattrick is a free web-based probabilistic football manager game with over 200,000 users competing for titles at national and international levels. Launched in Sweden in 1997 as part of an MSc project, the game's slow-paced design has fostered a loyal community, with many users remaining active for decades. Hattrick's game-engine mechanics are partially hidden, and users have attempted to decode them with incremental success over the years. Rule-based, statistical and machine learning models have been developed to aid this effort and are widely used by the community. However, these models or tools have not been formally described or evaluated in the scientific literature. This study is the first to explore Hattrick using structure learning techniques and Bayesian networks, integrating both data and domain knowledge to develop models capable of explaining and simulating the game engine. We present a comprehensive analysis assessing the effectiveness of structure learning algorithms in relation to knowledge-based structures, and show that while structure learning may achieve a higher overall network fit, it does not result in more accurate predictions for selected variables of interest, when compared to knowledge-based networks that produce a lower overall network fit. Additionally, we introduce and publicly share a fully specified Bayesian network model that matches the performance of top models used by the Hattrick community. We further demonstrate how analysis extends beyond prediction by providing a visual representation of conditional dependencies, and using the best performing Bayesian network model for in-game decision-making. To support future research, we make all data, graphical structures, and models publicly available online.

Stable Structure Learning with HC-Stable and Tabu-Stable Algorithms

Apr 02, 2025

Abstract:Many Bayesian Network structure learning algorithms are unstable, with the learned graph sensitive to arbitrary dataset artifacts, such as the ordering of columns (i.e., variable order). PC-Stable attempts to address this issue for the widely-used PC algorithm, prompting researchers to use the "stable" version instead. However, this problem seems to have been overlooked for score-based algorithms. In this study, we show that some widely-used score-based algorithms, as well as hybrid and constraint-based algorithms, including PC-Stable, suffer from the same issue. We propose a novel solution for score-based greedy hill-climbing that eliminates instability by determining a stable node order, leading to consistent results regardless of variable ordering. Two implementations, HC-Stable and Tabu-Stable, are introduced. Tabu-Stable achieves the highest BIC scores across all networks, and the highest accuracy for categorical networks. These results highlight the importance of addressing instability in structure learning and provide a robust and practical approach for future applications. This extends the scope and impact of our previous work presented at Probabilistic Graphical Models 2024 by incorporating continuous variables. The implementation, along with usage instructions, is freely available on GitHub at https://github.com/causal-iq/discovery.

Using GPT-4 to guide causal machine learning

Jul 26, 2024

Abstract:Since its introduction to the public, ChatGPT has had an unprecedented impact. While some experts praised AI advancements and highlighted their potential risks, others have been critical about the accuracy and usefulness of Large Language Models (LLMs). In this paper, we are interested in the ability of LLMs to identify causal relationships. We focus on the well-established GPT-4 (Turbo) and evaluate its performance under the most restrictive conditions, by isolating its ability to infer causal relationships based solely on the variable labels without being given any context, demonstrating the minimum level of effectiveness one can expect when it is provided with label-only information. We show that questionnaire participants judge the GPT-4 graphs as the most accurate in the evaluated categories, closely followed by knowledge graphs constructed by domain experts, with causal Machine Learning (ML) far behind. We use these results to highlight the important limitation of causal ML, which often produces causal graphs that violate common sense, affecting trust in them. However, we show that pairing GPT-4 with causal ML overcomes this limitation, resulting in graphical structures learnt from real data that align more closely with those identified by domain experts, compared to structures learnt by causal ML alone. Overall, our findings suggest that despite GPT-4 not being explicitly designed to reason causally, it can still be a valuable tool for causal representation, as it improves the causal discovery process of causal ML algorithms that are designed to do just that.

Investigating potential causes of Sepsis with Bayesian network structure learning

Jun 13, 2024

Abstract:Sepsis is a life-threatening and serious global health issue. This study combines knowledge with available hospital data to investigate the potential causes of Sepsis that can be affected by policy decisions. We investigate the underlying causal structure of this problem by combining clinical expertise with score-based, constraint-based, and hybrid structure learning algorithms. A novel approach to model averaging and knowledge-based constraints was implemented to arrive at a consensus structure for causal inference. The structure learning process highlighted the importance of exploring data-driven approaches alongside clinical expertise. This includes discovering unexpected, although reasonable, relationships from a clinical perspective. Hypothetical interventions on Chronic Obstructive Pulmonary Disease, Alcohol dependence, and Diabetes suggest that the presence of any of these risk factors in patients increases the likelihood of Sepsis. This finding, alongside measuring the effect of these risk factors on Sepsis, has potential policy implications. Recognising the importance of prediction in improving Sepsis related health outcomes, the model built is also assessed in its ability to predict Sepsis. The predictions generated by the consensus model were assessed for their accuracy, sensitivity, and specificity. These three indicators all had results around 70%, and the AUC was 80%, which means the causal structure of the model is reasonably accurate given that the models were trained on data available for commissioning purposes only.
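For readers unfamiliar with the three indicators named in this abstract, the following minimal sketch shows how accuracy, sensitivity, and specificity are usually derived from a binary confusion matrix. The function name `confusion_metrics` and the label vectors are hypothetical and are not taken from the study.

```python
def confusion_metrics(y_true, y_pred):
    """Return accuracy, sensitivity and specificity for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),  # share of all cases classified correctly
        "sensitivity": tp / (tp + fn),                # share of true positives detected
        "specificity": tn / (tn + fp),                # share of true negatives detected
    }

# Made-up labels: 1 = condition present, 0 = condition absent.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
metrics = confusion_metrics(y_true, y_pred)
print(metrics)
```

Sensitivity and specificity are reported separately because overall accuracy can look high on imbalanced data even when most positive cases are missed.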

Investigating the validity of structure learning algorithms in identifying risk factors for intervention in patients with diabetes

Mar 21, 2024

Abstract:Diabetes, a pervasive and enduring health challenge, imposes significant global implications on health, financial healthcare systems, and societal well-being. This study undertakes a comprehensive exploration of various structural learning algorithms to discern causal pathways amongst potential risk factors influencing diabetes progression. The methodology involves the application of these algorithms to relevant diabetes data, followed by the conversion of their output graphs into Causal Bayesian Networks (CBNs), enabling predictive analysis and the evaluation of discrepancies in the effect of hypothetical interventions within our context-specific case study. This study highlights the substantial impact of algorithm selection on intervention outcomes. To consolidate insights from diverse algorithms, we employ a model-averaging technique that helps us obtain a unique causal model for diabetes derived from a varied set of structural learning algorithms. We also investigate how each of those individual graphs, as well as the average graph, compare to the structures elicited by a domain expert who categorised graph edges into high confidence, moderate, and low confidence types, leading into three individual graphs corresponding to the three levels of confidence. The resulting causal model and data are made available online, and serve as a valuable resource and a guide for informed decision-making by healthcare practitioners, offering a comprehensive understanding of the interactions between relevant risk factors and the effect of hypothetical interventions. Therefore, this research not only contributes to the academic discussion on diabetes, but also provides practical guidance for healthcare professionals in developing efficient intervention and risk management strategies.

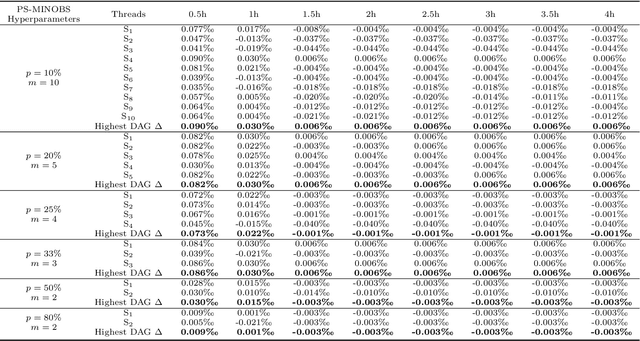

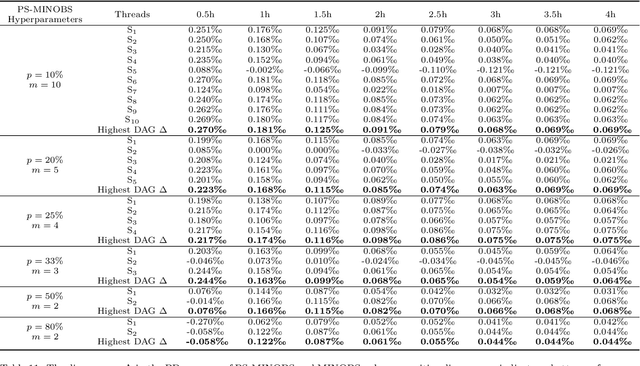

Tuning structure learning algorithms with out-of-sample and resampling strategies

Jun 24, 2023

Abstract:One of the challenges practitioners face when applying structure learning algorithms to their data involves determining a set of hyperparameters; otherwise, a set of hyperparameter defaults is assumed. The optimal hyperparameter configuration often depends on multiple factors, including the size and density of the usually unknown underlying true graph, the sample size of the input data, and the structure learning algorithm. We propose a novel hyperparameter tuning method, called the Out-of-sample Tuning for Structure Learning (OTSL), that employs out-of-sample and resampling strategies to estimate the optimal hyperparameter configuration for structure learning, given the input data set and structure learning algorithm. Synthetic experiments show that employing OTSL as a means to tune the hyperparameters of hybrid and score-based structure learning algorithms leads to improvements in graphical accuracy compared to the state-of-the-art. We also illustrate the applicability of this approach to real datasets from different disciplines.

Open problems in causal structure learning: A case study of COVID-19 in the UK

May 05, 2023

Abstract:Causal machine learning (ML) algorithms recover graphical structures that tell us something about cause-and-effect relationships. The causal representation provided by these algorithms enables transparency and explainability, which is necessary in critical real-world problems. Yet, causal ML has had limited impact in practice compared to associational ML. This paper investigates the challenges of causal ML with application to COVID-19 UK pandemic data. We collate data from various public sources and investigate what the various structure learning algorithms learn from these data. We explore the impact of different data formats on algorithms spanning different classes of learning, and assess the results produced by each algorithm, and groups of algorithms, in terms of graphical structure, model dimensionality, sensitivity analysis, confounding variables, predictive and interventional inference. We use these results to highlight open problems in causal structure learning and directions for future research. To facilitate future work, we make all graphs, models and data sets publicly available online.

Discovery and density estimation of latent confounders in Bayesian networks with evidence lower bound

Jun 16, 2022

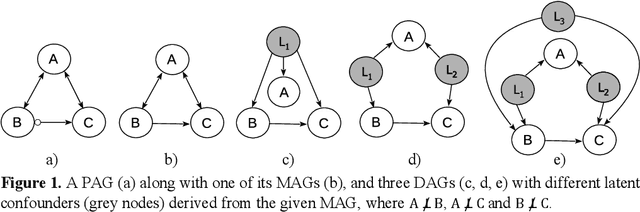

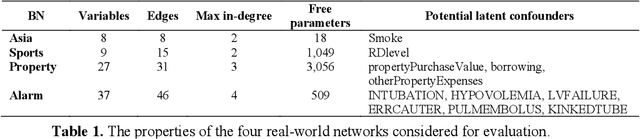

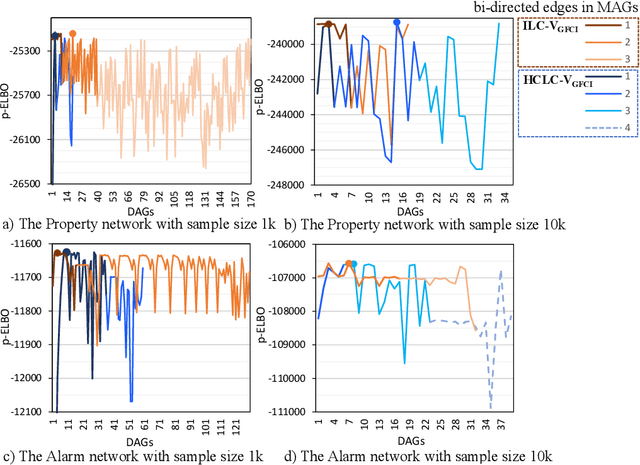

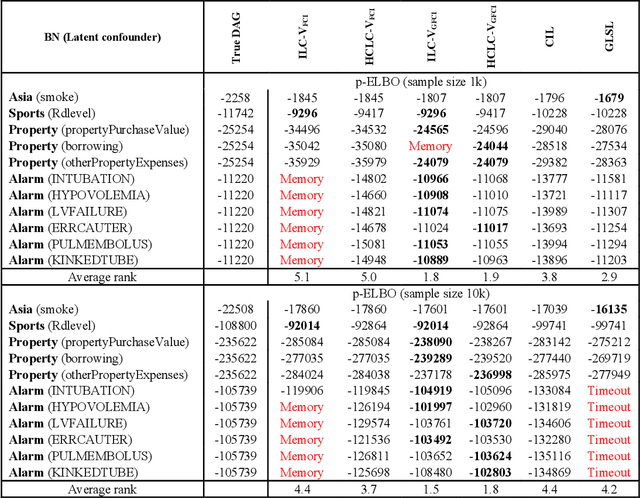

Abstract:Discovering and parameterising latent confounders represent important and challenging problems in causal structure learning and density estimation respectively. In this paper, we focus on both discovering and learning the distribution of latent confounders. This task requires solutions that come from different areas of statistics and machine learning. We combine elements of variational Bayesian methods, expectation-maximisation, hill-climbing search, and structure learning under the assumption of causal insufficiency. We propose two learning strategies; one that maximises model selection accuracy, and another that improves computational efficiency in exchange for minor reductions in accuracy. The former strategy is suitable for small networks and the latter for moderate size networks. Both learning strategies perform well relative to existing solutions.

Parallel Sampling for Efficient High-dimensional Bayesian Network Structure Learning

Feb 19, 2022

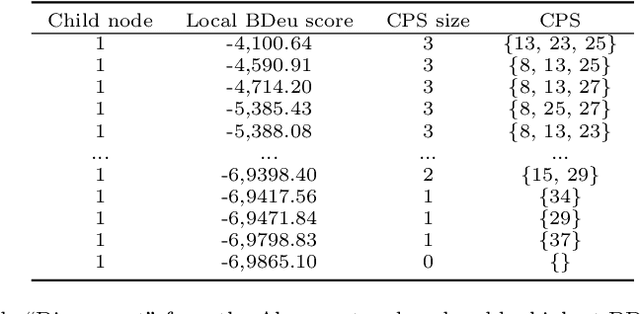

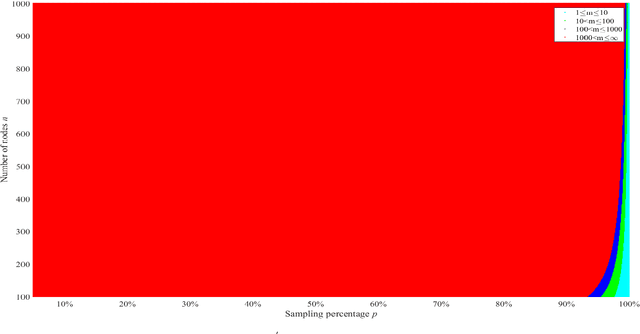

Abstract:Score-based algorithms that learn the structure of Bayesian networks can be used for both exact and approximate solutions. While approximate learning scales better with the number of variables, it can be computationally expensive in the presence of high dimensional data. This paper describes an approximate algorithm that performs parallel sampling on Candidate Parent Sets (CPSs), and can be viewed as an extension of MINOBS which is a state-of-the-art algorithm for structure learning from high dimensional data. The modified algorithm, which we call Parallel Sampling MINOBS (PS-MINOBS), constructs the graph by sampling CPSs for each variable. Sampling is performed in parallel under the assumption the distribution of CPSs is half-normal when ordered by Bayesian score for each variable. Sampling from a half-normal distribution ensures that the CPSs sampled are likely to be those which produce the higher scores. Empirical results show that, in most cases, the proposed algorithm discovers higher score structures than MINOBS when both algorithms are restricted to the same runtime limit.
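The sampling idea in this abstract can be sketched as follows: rank the candidate parent sets of a variable by score, then draw a rank from a half-normal distribution so that top-ranked sets are picked most often. This is a rough illustration only, not the PS-MINOBS implementation; the function `sample_rank`, the `sigma` value, the rejection step for out-of-range draws, and the candidate sets with their scores are all assumptions made for the example.

```python
import random
from collections import Counter

def sample_rank(num_candidates, sigma, rng):
    """Draw a rank in [0, num_candidates) from a discretised half-normal."""
    while True:
        rank = int(abs(rng.gauss(0.0, sigma)))  # |N(0, sigma)| is half-normal
        if rank < num_candidates:               # reject draws past the end of the list
            return rank

# Hypothetical candidate parent sets for one variable, with made-up scores.
candidates = [
    (("A", "B"), -120.5),
    (("A",), -123.0),
    ((), -130.2),
    (("B",), -131.7),
    (("B", "C"), -140.0),
]
# Rank 0 is the highest-scoring candidate parent set.
ranked = sorted(candidates, key=lambda item: item[1], reverse=True)

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = Counter(sample_rank(len(ranked), sigma=2.0, rng=rng) for _ in range(10_000))

for rank, (parent_set, score) in enumerate(ranked):
    print(rank, parent_set, score, counts[rank])
```

Because each variable's draw depends only on its own ranked list, the draws for different variables are independent of one another, which is what makes this kind of sampling straightforward to run in parallel.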

Hybrid Bayesian network discovery with latent variables by scoring multiple interventions

Dec 20, 2021

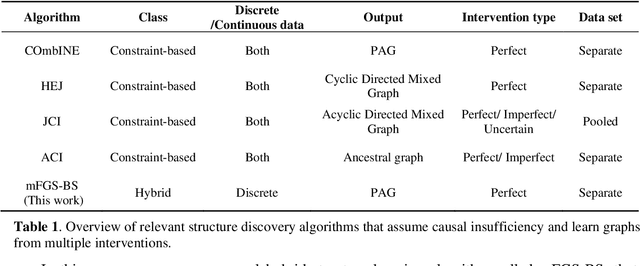

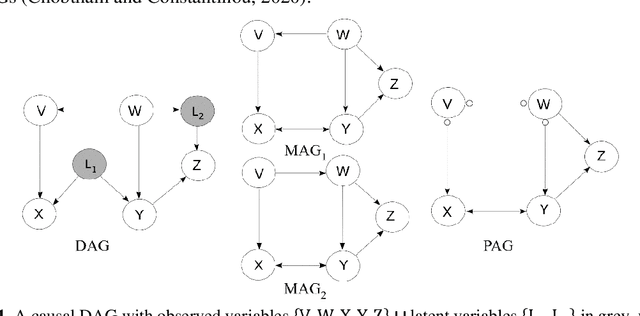

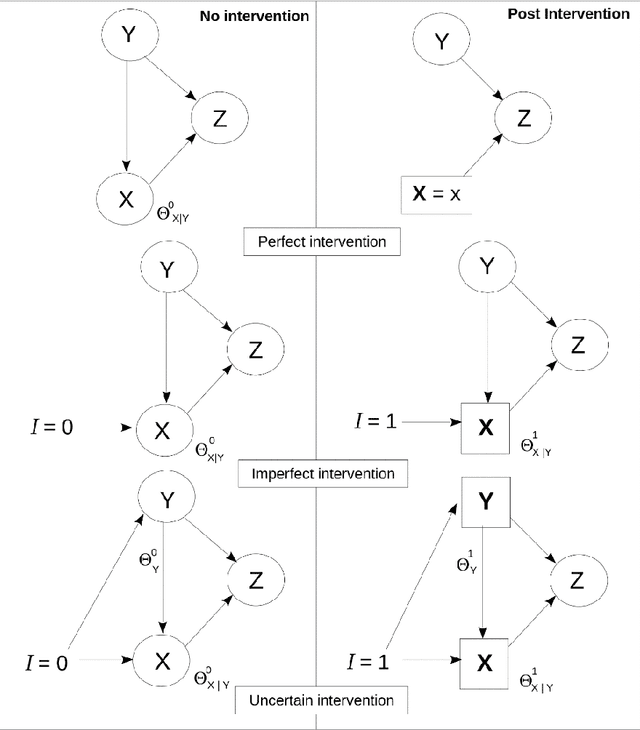

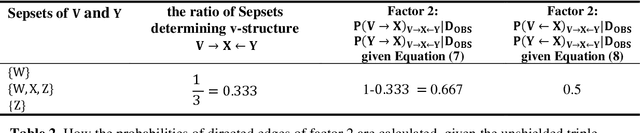

Abstract:In Bayesian Networks (BNs), the direction of edges is crucial for causal reasoning and inference. However, Markov equivalence class considerations mean it is not always possible to establish edge orientations, which is why many BN structure learning algorithms cannot orientate all edges from purely observational data. Moreover, latent confounders can lead to false positive edges. Relatively few methods have been proposed to address these issues. In this work, we present the hybrid mFGS-BS (majority rule and Fast Greedy equivalence Search with Bayesian Scoring) algorithm for structure learning from discrete data that involves an observational data set and one or more interventional data sets. The algorithm assumes causal insufficiency in the presence of latent variables and produces a Partial Ancestral Graph (PAG). Structure learning relies on a hybrid approach and a novel Bayesian scoring paradigm that calculates the posterior probability of each directed edge being added to the learnt graph. Experimental results based on well-known networks of up to 109 variables and 10k sample size show that mFGS-BS improves structure learning accuracy relative to the state-of-the-art and it is computationally efficient.
