Anna Zamansky

Semantic Style Transfer for Enhancing Animal Facial Landmark Detection

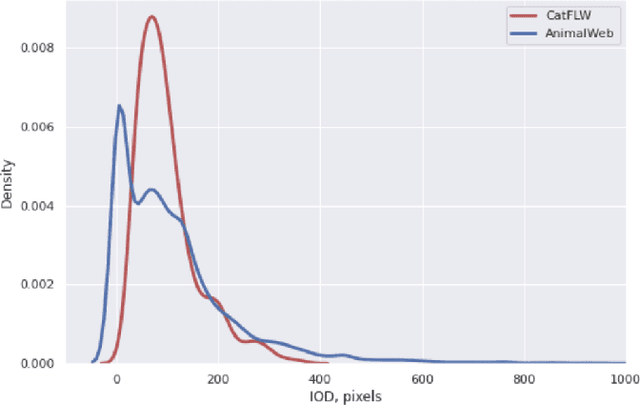

May 08, 2025

Abstract: Neural Style Transfer (NST) is a technique for applying the visual characteristics of one image onto another while preserving structural content. Traditionally used for artistic transformations, NST has recently been adapted to other tasks, such as domain adaptation and data augmentation. This study investigates the use of this technique for enhancing the training of animal facial landmark detectors. As a case study, we use a recently introduced Ensemble Landmark Detector for 48 anatomical cat facial landmarks and the CatFLW dataset it was trained on, making three main contributions. First, we demonstrate that applying style transfer to cropped facial images rather than full-body images enhances structural consistency, improving the quality of generated images. Second, replacing training images with style-transferred versions raised challenges of annotation misalignment, but Supervised Style Transfer (SST), which selects style sources based on landmark accuracy, retained up to 98% of baseline accuracy. Finally, augmenting the dataset with style-transferred images further improved robustness, outperforming traditional augmentation methods. These findings establish semantic style transfer as an effective augmentation strategy for enhancing the performance of facial landmark detection models for animals and beyond. While this study focuses on cat facial landmarks, the proposed method can be generalized to other species and landmark detection models.
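The abstract does not spell out how SST selects style sources, so the following is only a minimal sketch of one plausible reading: keep a style source when a detector's landmarks on the stylized image stay close to the original annotations. The function names, the plain mean Euclidean distance, and the `max_error` threshold are all assumptions made for illustration, not the authors' implementation.

```python
import math

def mean_landmark_error(pred, truth):
    """Average Euclidean distance between matching (x, y) landmarks."""
    if len(pred) != len(truth):
        raise ValueError("landmark lists must have the same length")
    return sum(math.dist(p, t) for p, t in zip(pred, truth)) / len(truth)

def select_style_sources(candidates, truth, max_error):
    """Return the names of style sources whose stylized image keeps landmarks accurate.

    candidates: dict mapping a (hypothetical) style-source name to the landmarks
                a detector predicted on the image stylized with that source.
    truth:      ground-truth landmarks annotated on the original image.
    max_error:  assumed acceptance threshold, in pixels.
    """
    return [
        name
        for name, pred in candidates.items()
        if mean_landmark_error(pred, truth) <= max_error
    ]

# Toy example with two landmarks and two candidate style sources.
truth = [(0.0, 0.0), (10.0, 0.0)]
candidates = {
    "style_a": [(0.0, 1.0), (10.0, 1.0)],   # landmarks shifted by 1 px
    "style_b": [(5.0, 5.0), (15.0, 5.0)],   # landmarks shifted by about 7 px
}
selected = select_style_sources(candidates, truth, max_error=2.0)
```

In this toy case only `style_a` passes the threshold; a real pipeline would presumably normalize the error by face size before thresholding.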

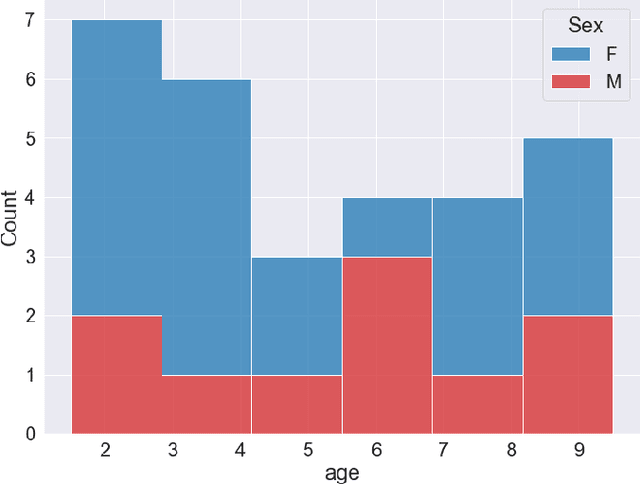

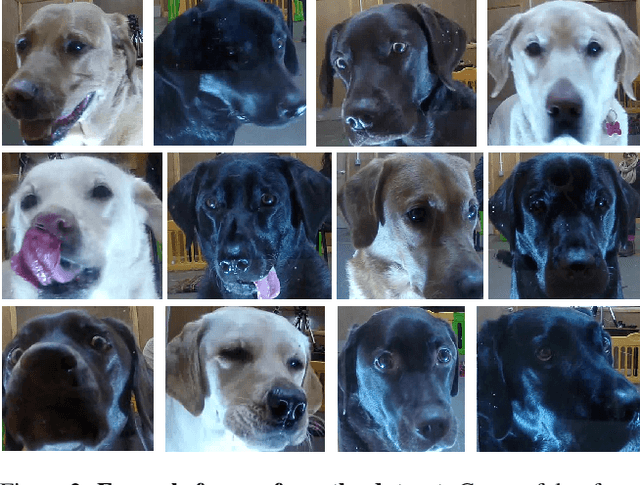

DogFLW: Dog Facial Landmarks in the Wild Dataset

May 19, 2024

Abstract: Affective computing for animals is a rapidly expanding research area that goes deeper than automated movement tracking to address animal internal states, such as pain and emotions. Facial expressions can serve to communicate information about these states in mammals. However, unlike human-related studies, there is a significant shortage of datasets that would enable the automated analysis of animal facial expressions. Inspired by the recently introduced Cat Facial Landmarks in the Wild dataset, which presents cat faces annotated with 48 facial anatomy-based landmarks, in this paper we develop an analogous dataset containing 3,274 annotated images of dogs. Our dataset is based on a scheme of 46 facial anatomy-based landmarks. The DogFLW dataset is available from the corresponding author upon reasonable request.

Automated Detection of Cat Facial Landmarks

Oct 15, 2023

Abstract: The field of animal affective computing is rapidly emerging, and analysis of facial expressions is a crucial aspect. One of the most significant challenges that researchers in the field currently face is the scarcity of high-quality, comprehensive datasets that allow the development of models for facial expression analysis. One possible approach is the use of facial landmarks, which has been demonstrated for both humans and animals. In this paper we present a novel dataset of cat facial images annotated with bounding boxes and 48 facial landmarks grounded in cat facial anatomy. We also introduce a convolutional neural network-based landmark detection model that uses a magnifying ensemble method. Our model shows excellent performance on cat faces and is generalizable to human facial landmark detection.

Machine Learning Approaches to Predict and Detect Early-Onset of Digital Dermatitis in Dairy Cows using Sensor Data

Sep 18, 2023

Abstract: The aim of this study was to employ machine learning algorithms based on sensor behavior data for (1) early-onset detection of digital dermatitis (DD); and (2) DD prediction in dairy cows. The ultimate goal is to set up early warning tools for DD prediction, which would then allow better monitoring and management of DD under commercial settings, resulting in a decrease in DD prevalence and severity while improving animal welfare. A machine learning model capable of predicting and detecting digital dermatitis in cows housed under free-stall conditions based on behavior sensor data has been proposed and tested in this exploratory study. The model for DD detection on day 0 of the appearance of the clinical signs reached an accuracy of 79%, while the model for prediction of DD 2 days prior to the appearance of the first clinical signs reached an accuracy of 64%. The proposed machine learning models could help to develop a real-time automated tool for monitoring and diagnosing DD in lactating dairy cows, based on behavior sensor data under conventional dairy environments. Results showed that alterations in behavioral patterns at the individual level can be used as inputs to an early warning system for herd management in order to detect changes in the health of individual cows.

BovineTalk: Machine Learning for Vocalization Analysis of Dairy Cattle under Negative Affective States

Jul 26, 2023

Abstract: There is a critical need to develop and validate non-invasive animal-based indicators of affective states in livestock species, in order to integrate them into on-farm assessment protocols, potentially via the use of precision livestock farming (PLF) tools. One such promising approach is the use of vocal indicators. The acoustic structure of vocalizations and their functions have been extensively studied in important livestock species, such as pigs, horses, poultry and goats, yet cattle remain understudied in this context to date. Cows have been shown to produce two types of vocalizations: low-frequency calls (LF), produced with the mouth closed or partially closed for close-distance contact, and high-frequency calls (HF), emitted with the mouth open for long-distance communication, with the latter considered to be largely associated with negative affective states. Moreover, cattle vocalizations have been shown to contain information on individuality across a wide range of contexts, both negative and positive. Dairy cows face a series of negative challenges and stressors in a typical production cycle, making vocalizations during negative affective states of special interest for research. One contribution of this study is providing the largest pre-processed (cleaned of noise) dataset to date of vocalizations of lactating adult multiparous dairy cows during negative affective states induced by visual isolation challenges. Here we present two computational frameworks, one deep learning based and one explainable machine learning based, to classify high- and low-frequency cattle calls and to recognize individual cow voices. Our models in these two frameworks reached 87.2% and 89.4% accuracy for LF and HF classification, with 68.9% and 72.5% accuracy rates for the cow individual identification, respectively.

Digitally-Enhanced Dog Behavioral Testing: Getting Help from the Machine

Jul 26, 2023

Abstract: The assessment of behavioral traits in dogs is a well-studied challenge due to its many practical applications, such as selection for breeding, prediction of working aptitude, and estimating chances of being adopted. Most methods for assessing behavioral traits are questionnaire- or observation-based, and require a significant amount of time, effort and expertise. In addition, these methods are susceptible to subjectivity and bias, making them less reliable. In this study, we propose an automated computational approach that may provide a more objective, robust and resource-efficient alternative to current solutions. Using part of a Stranger Test protocol, we tested n=53 dogs for their response to the presence and benign actions of a stranger. Dog coping styles were scored by three experts. Moreover, data were collected from their handlers using the Canine Behavioral Assessment and Research Questionnaire (C-BARQ). An unsupervised clustering of the dogs' trajectories revealed two main clusters showing a significant difference in the stranger-directed fear C-BARQ factor, as well as a good separation between (sufficiently) relaxed dogs and dogs with excessive behaviors towards strangers based on expert scoring. Based on the clustering, we obtained a machine learning classifier for expert scoring of coping styles towards strangers, which reached an accuracy of 78%. We also obtained a regression model predicting C-BARQ factor scores with varying performance, the best being Owner-Directed Aggression (with a mean average error of 0.108) and Excitability (with a mean square error of 0.032). This case study demonstrates a novel paradigm of digitally enhanced canine behavioral testing.
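The two regression metrics quoted above can be illustrated with a short sketch. It assumes that "mean average error" refers to the standard mean absolute error; the abstract does not confirm this, and the numbers below are toy values rather than C-BARQ data.

```python
def mean_absolute_error(y_true, y_pred):
    """Mean of |true - predicted| (assumed meaning of 'mean average error')."""
    if len(y_true) != len(y_pred):
        raise ValueError("inputs must have the same length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mean_squared_error(y_true, y_pred):
    """Mean of (true - predicted)^2; penalizes large errors more heavily."""
    if len(y_true) != len(y_pred):
        raise ValueError("inputs must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Toy factor scores (hypothetical values, for illustration only).
y_true = [1.0, 2.0, 3.0]
y_pred = [1.5, 2.0, 2.0]
mae = mean_absolute_error(y_true, y_pred)
mse = mean_squared_error(y_true, y_pred)
```

Because the two metrics are on different scales (the squared error shrinks values below 1), the 0.108 and 0.032 figures reported for different factors are not directly comparable.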

CatFLW: Cat Facial Landmarks in the Wild Dataset

May 07, 2023

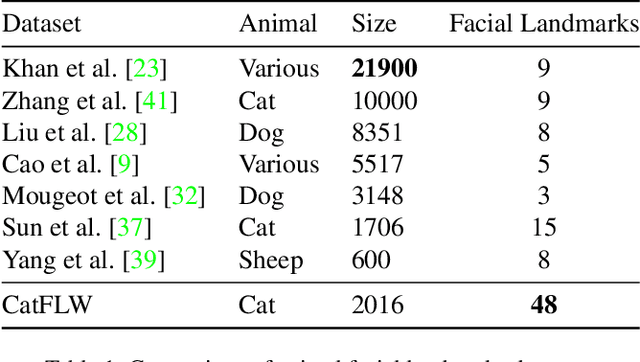

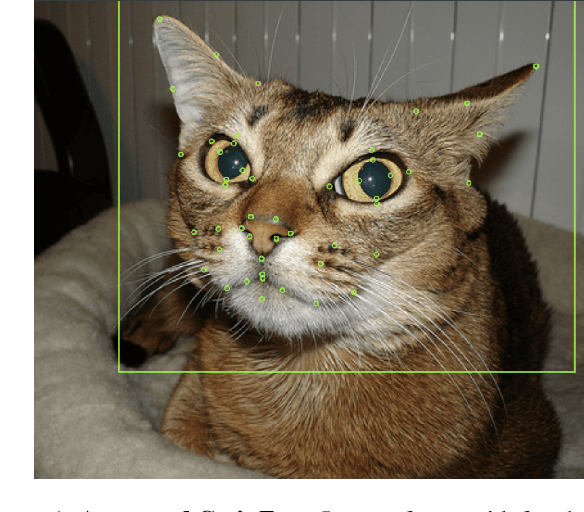

Abstract: Animal affective computing is a quickly growing field of research, in which the first efforts to go beyond animal tracking into recognizing internal states, such as pain and emotions, have emerged only recently. In most mammals, facial expressions are an important channel for communicating information about these states. However, unlike the human domain, there is an acute lack of datasets that make automation of facial analysis of animals feasible. This paper aims to fill this gap by presenting a dataset called Cat Facial Landmarks in the Wild (CatFLW), which contains 2,016 images of cat faces in different environments and conditions, annotated with 48 facial landmarks specifically chosen for their relationship with underlying musculature and relevance to cat-specific facial Action Units (CatFACS). To the best of our knowledge, this dataset has the largest number of cat facial landmarks available. In addition, we describe a semi-supervised (human-in-the-loop) method of annotating images with landmarks, used for creating this dataset, which significantly reduces the annotation time and could be used for creating similar datasets for other animals. The dataset is available on request.

Going Deeper than Tracking: a Survey of Computer-Vision Based Recognition of Animal Pain and Affective States

Jun 16, 2022

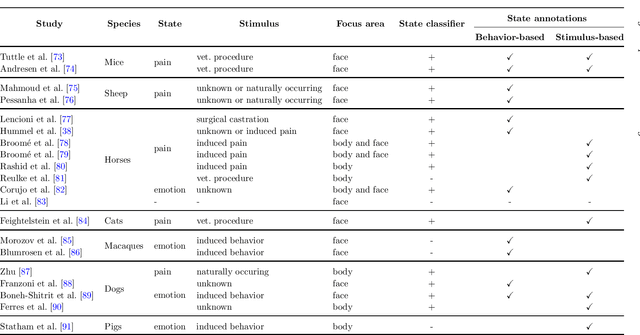

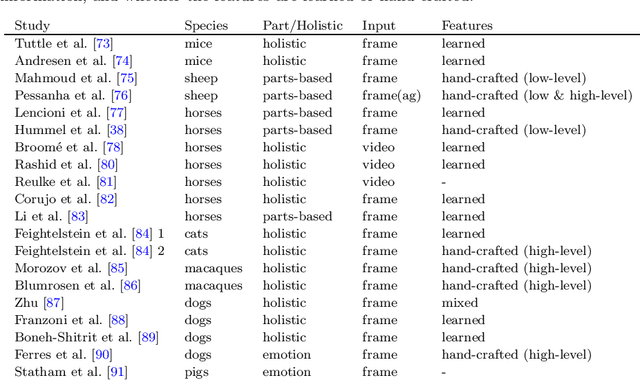

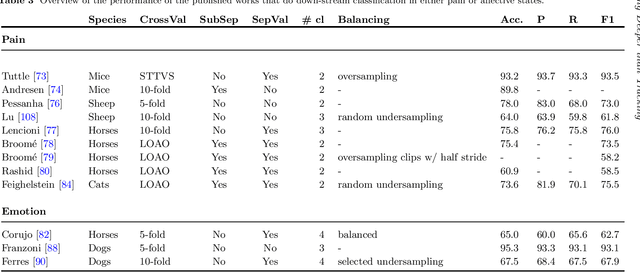

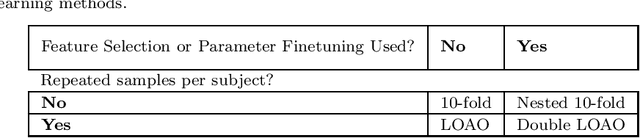

Abstract: Advances in animal motion tracking and pose recognition have been a game changer in the study of animal behavior. Recently, an increasing number of works go 'deeper' than tracking and address automated recognition of animals' internal states, such as emotions and pain, with the aim of improving animal welfare, making this a timely moment for a systematization of the field. This paper provides a comprehensive survey of computer vision-based research on recognition of affective states and pain in animals, addressing both facial and bodily behavior analysis. We summarize the efforts presented so far on this topic, classifying them across different dimensions; highlight challenges and research gaps; and provide best-practice recommendations and future research directions for advancing the field.

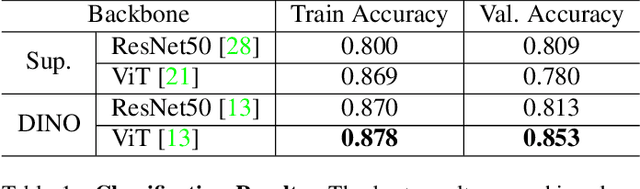

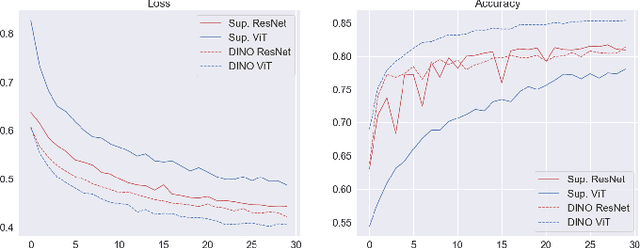

Deep Learning Models for Automated Classification of Dog Emotional States from Facial Expressions

Jun 11, 2022

Abstract: Similarly to humans, facial expressions in animals are closely linked with emotional states. However, in contrast to the human domain, automated recognition of emotional states from facial expressions in animals is underexplored, mainly due to difficulties in data collection and establishment of ground truth concerning emotional states of non-verbal users. We apply recent deep learning techniques to classify (positive) anticipation and (negative) frustration of dogs on a dataset collected in a controlled experimental setting. We explore the suitability of different backbones (e.g. ResNet, ViT) under different supervisions to this task, and find that features of a self-supervised pretrained ViT (DINO-ViT) are superior to the other alternatives. To the best of our knowledge, this work is the first to address the task of automatic classification of canine emotions on data acquired in a controlled experiment.
