Marwa Mahmoud

Cross-Language Bias Examination in Large Language Models

Dec 17, 2025

Abstract:This study introduces a multilingual framework for evaluating bias in Large Language Models (LLMs), combining explicit bias assessment through the BBQ benchmark with implicit bias measurement using a prompt-based Implicit Association Test. By translating the prompts and word list so that the evaluation covers five languages (English, Chinese, Arabic, French, and Spanish), we directly compare different types of bias across languages. The results reveal substantial cross-lingual gaps in LLM bias. For example, Arabic and Spanish consistently show higher levels of stereotype bias, while Chinese and English exhibit lower levels. We also identify contrasting patterns across bias types. Age shows the lowest explicit bias but the highest implicit bias, underscoring the importance of detecting implicit biases that standard benchmarks miss. These findings indicate that LLM bias varies significantly across languages and bias dimensions. This study fills a key research gap by providing a comprehensive methodology for cross-lingual bias analysis. Ultimately, our work lays a foundation for developing equitable multilingual LLMs that are fair and effective across diverse languages and cultures.
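The abstract does not state how the prompt-based Implicit Association Test is scored. A minimal sketch of one common style of scoring, assuming the model is asked to pair group terms (e.g. "young"/"old") with attribute words and that each attribute set is known to be stereotypically linked to one group: the function `iat_bias_score` and the word lists below are hypothetical illustrations, not the study's method.

```python
def iat_bias_score(assignments, group_a, group_b, attrs_a, attrs_b):
    """Hypothetical score in [-1, 1] from (group term, attribute word) pairs produced by a model.

    attrs_a / attrs_b are the attribute words stereotypically linked to
    group_a / group_b. +1: every pairing is stereotype-consistent;
    -1: every pairing is counter-stereotypical; 0: no systematic preference.
    """
    def consistent_share(group, own, other):
        # Share of this group's pairings that use its stereotype-consistent attributes.
        n_own = sum(1 for g, w in assignments if g == group and w in own)
        n_other = sum(1 for g, w in assignments if g == group and w in other)
        if n_own + n_other == 0:
            return 0.5  # no pairings for this group: treat as neutral (assumption)
        return n_own / (n_own + n_other)

    return consistent_share(group_a, attrs_a, attrs_b) + consistent_share(group_b, attrs_b, attrs_a) - 1.0


# Hypothetical age-category word lists, for illustration only.
young_words = {"energetic", "healthy"}
old_words = {"slow", "frail"}

stereotyped = [("young", "energetic"), ("young", "healthy"), ("old", "slow"), ("old", "frail")]
mixed = [("young", "energetic"), ("young", "slow"), ("old", "healthy"), ("old", "frail")]
print(iat_bias_score(stereotyped, "young", "old", young_words, old_words))  # 1.0
print(iat_bias_score(mixed, "young", "old", young_words, old_words))        # 0.0
```

Computing such a score separately for each language's translated word list would give one number per language and bias category, which is the kind of cross-lingual comparison the abstract describes.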

Towards early prediction of neurodevelopmental disorders: Computational model for Face Touch and Self-adaptors in Infants

Jan 15, 2023

Abstract:Infants' neurological development is heavily influenced by their motor skills. Evaluating a baby's movements is key to identifying possible risks of developmental disorders. Previous research in psychology has shown that measuring specific movements or gestures, such as face touches, is essential for analysing how babies understand themselves and their context. This research proposes the first automatic approach that detects face touches from video recordings by tracking infants' movements and gestures. The study uses a multimodal feature fusion approach combining spatial and temporal features, and exploits skeleton tracking information to generate more than 170 aggregated features of the hand, face and body. We propose data-driven machine learning models for the detection and classification of face touches in infants and evaluate them using cross-dataset testing. The models achieved 87.0% accuracy in detecting face touches and 71.4% macro-average accuracy in detecting specific face touch locations, significantly improving over Zero Rule and uniform random chance baselines. Moreover, we show that when we run our model to extract face touch frequencies from a larger dataset, we can predict the development of fine motor skills during the first 5 months after birth.
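Macro-average accuracy matters here because face touch locations are unlikely to be evenly distributed: a Zero Rule baseline (always predicting the majority class) can score well on plain accuracy while learning nothing. A minimal sketch, assuming macro-average accuracy means the mean of per-class recall; the location labels are hypothetical and, for brevity, the same list stands in for both training and test labels.

```python
from collections import Counter, defaultdict


def macro_average_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally regardless of size."""
    correct = defaultdict(int)
    total = Counter(y_true)
    for t, p in zip(y_true, y_pred):
        if t == p:
            correct[t] += 1
    return sum(correct[c] / total[c] for c in total) / len(total)


def zero_rule_predictions(train_labels, n):
    """Zero Rule baseline: always predict the majority class seen in training."""
    majority = Counter(train_labels).most_common(1)[0][0]
    return [majority] * n


# Hypothetical face-touch location labels, for illustration only.
y_true = ["cheek", "cheek", "cheek", "mouth", "eye"]
baseline = zero_rule_predictions(y_true, len(y_true))
plain = sum(t == p for t, p in zip(y_true, baseline)) / len(y_true)
print(plain)                                     # 0.6
print(macro_average_accuracy(y_true, baseline))  # 0.3333333333333333
```

With K classes, both the Zero Rule and the uniform random baseline have an expected macro-average accuracy of 1/K, which gives a fixed reference point for a figure such as 71.4%.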

Going Deeper than Tracking: a Survey of Computer-Vision Based Recognition of Animal Pain and Affective States

Jun 16, 2022

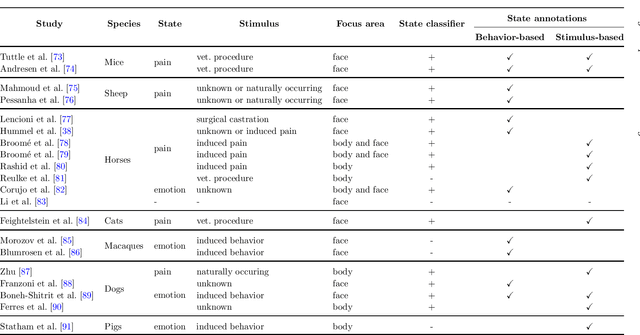

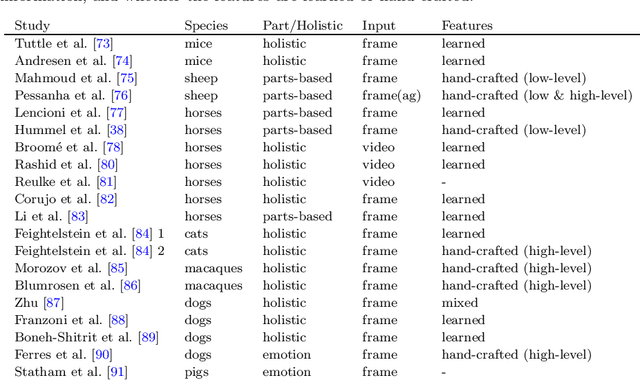

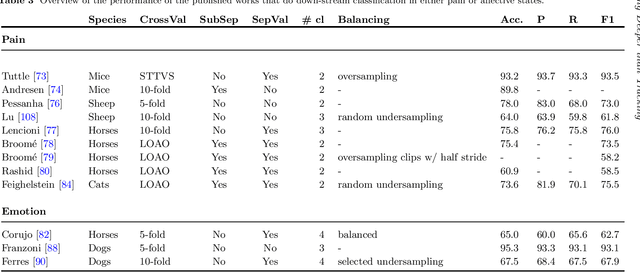

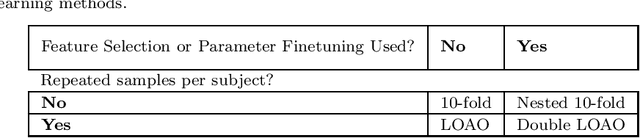

Abstract:Advances in animal motion tracking and pose recognition have been a game changer in the study of animal behavior. Recently, an increasing number of works go 'deeper' than tracking and address automated recognition of animals' internal states, such as emotions and pain, with the aim of improving animal welfare, making this a timely moment to systematize the field. This paper provides a comprehensive survey of computer vision-based research on recognition of affective states and pain in animals, addressing both facial and bodily behavior analysis. We summarize the efforts presented so far on this topic, classifying them across different dimensions; highlight challenges and research gaps; and provide best-practice recommendations for advancing the field, as well as directions for future research.

Looking At The Body: Automatic Analysis of Body Gestures and Self-Adaptors in Psychological Distress

Jul 31, 2020

Abstract:Psychological distress is a significant and growing issue in society. Automatic detection, assessment, and analysis of such distress is an active area of research. Compared to modalities such as face, head, and voice, research investigating the use of the body modality for these tasks is relatively sparse. This is partly due to the limited availability of datasets and the difficulty of automatically extracting useful body features. Recent advances in pose estimation and deep learning have enabled new approaches to this modality and domain. To enable this research, we collected and analyzed a new dataset containing full-body videos of short interviews with self-reported distress labels. We propose a novel method to automatically detect self-adaptors and fidgeting, a subset of self-adaptors that has been shown to be correlated with psychological distress. We analyze statistical body-gesture and fidgeting features to explore how distress levels affect participants' behaviors. We then propose a multi-modal approach that combines different feature representations using Multi-modal Deep Denoising Auto-Encoders and Improved Fisher Vector Encoding. We demonstrate that our proposed model, combining audio-visual features with automatically detected fidgeting behavioral cues, can successfully predict distress levels in a dataset labeled with self-reported anxiety and depression levels.

Considering Race a Problem of Transfer Learning

Dec 12, 2018

Abstract:As biometric applications are fielded to serve large population groups, performance differences between individual sub-groups are becoming increasingly important. In this paper we examine cases where we believe race is one such factor. We look in particular at two forms of problem: facial classification and image synthesis. We take the novel approach of considering race as a boundary for transfer learning in both the task (facial classification) and the domain (synthesis over distinct datasets). We demonstrate a series of techniques to improve transfer learning of facial classification, outperforming similar models trained in the target's own domain. We also conduct a study to evaluate the performance drop of Generative Adversarial Networks trained to perform image synthesis; in this process, we produce a new race annotation for the CelebA dataset. These networks are trained solely on one race and tested on another, demonstrating that the race subsets of CelebA are distinct domains for this task.

Shape-only Features for Plant Leaf Identification

Nov 20, 2018

Abstract:This paper presents a novel feature set for shape-only leaf identification, motivated by real-world mobile deployment. The feature set includes basic shape features, as well as signal features extracted from local area integral invariants (LAIIs), similar to curvature maps, at multiple scales. The proposed methodology is evaluated on a number of publicly available leaf datasets, achieving results comparable to existing methods that use colour and texture features in addition to shape. Over 90% classification accuracy is achieved on most datasets, with top-four accuracy for these datasets reaching over 98%. The rotation and scale invariance of the proposed features is demonstrated, along with an evaluation of how well the approach generalises to generic shape matching.
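An area integral invariant measures, at a boundary point, how much of a disk centred there lies inside the shape: convex corners score low, straight edges about one half, concave regions high, which is why such values behave like a curvature map. A minimal sketch of a generic area integral invariant on a binary mask, assuming a list-of-lists image; the paper's exact LAII definition, contour sampling and signal features are not given in the abstract, and `area_integral_invariant` and `multiscale_signature` are hypothetical names.

```python
def area_integral_invariant(mask, row, col, radius):
    """Fraction of the digital disk of `radius` centred at (row, col) lying inside the shape.

    `mask` is a list of lists of 0/1 values (1 = leaf pixel). Disk pixels that
    fall outside the image are counted as background.
    """
    inside = total = 0
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr * dr + dc * dc > radius * radius:
                continue  # outside the circular neighbourhood
            total += 1
            r, c = row + dr, col + dc
            if 0 <= r < len(mask) and 0 <= c < len(mask[0]) and mask[r][c]:
                inside += 1
    return inside / total


def multiscale_signature(mask, contour, radii):
    """One signal per scale: invariant values sampled along the contour points."""
    return {k: [area_integral_invariant(mask, r, c, k) for r, c in contour] for k in radii}


# Toy "leaf": a filled 20x20 square inside a 40x40 image.
mask = [[1 if 10 <= r < 30 and 10 <= c < 30 else 0 for c in range(40)] for r in range(40)]
corner = area_integral_invariant(mask, 10, 10, 5)  # convex corner
edge = area_integral_invariant(mask, 10, 20, 5)    # straight edge
print(round(corner, 3), round(edge, 3))            # 0.321 0.568
```

The disk neighbourhood makes the value insensitive to rotation (up to pixel discretisation); for scale invariance the radii would presumably need to be set relative to the leaf's size, which is an assumption not stated in the abstract.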