Andrzej Wasowski

Risk-Averse Planning and Plan Assessment for Marine Robots

Oct 01, 2024

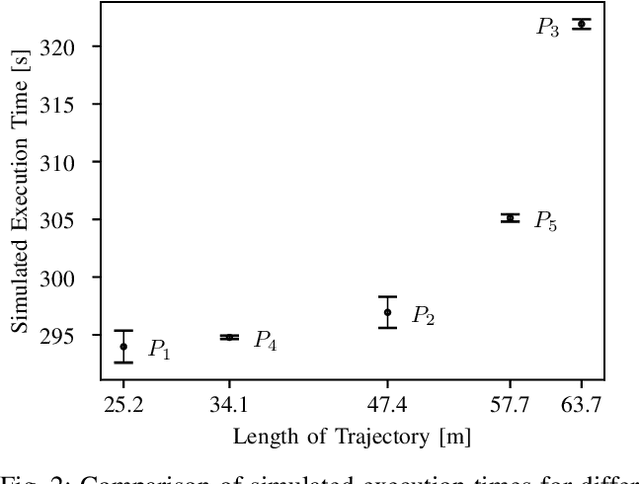

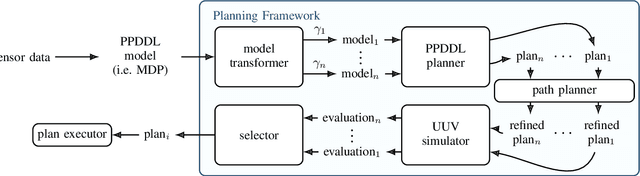

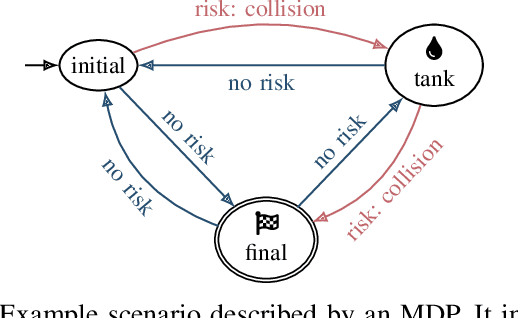

Abstract: Autonomous Underwater Vehicles (AUVs) need to operate for days without human intervention and thus must be able to plan tasks efficiently and reliably. Unfortunately, efficient task planning requires deliberately abstract domain models (for scalability reasons), which in practice leads to plans that might be unreliable or underperforming. An optimal abstract plan may turn out suboptimal or unreliable during physical execution. To overcome this, we introduce a method that first generates a selection of diverse high-level plans and then assesses them in a low-level simulation to select the optimal and most reliable candidate. We evaluate the method in a realistic underwater robot simulation, estimating risk metrics for different scenarios, and demonstrate the feasibility and effectiveness of the approach.
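The second stage of the method (simulate each candidate plan, then pick the most reliable one) can be illustrated with a small sketch. The abstract does not say which risk metric is used, so this example assumes conditional value at risk (CVaR) over per-run mission cost; `select_plan`, `toy_simulate`, and the plan names are hypothetical, and the toy simulator only stands in for a real low-level AUV simulation.

```python
import math
from statistics import mean
from typing import Callable, Dict, List


def cvar(costs: List[float], alpha: float) -> float:
    """Conditional value at risk: average of the worst (1 - alpha) fraction of costs."""
    ordered = sorted(costs, reverse=True)  # highest (worst) costs first
    # round() guards against float noise such as (1 - 0.7) * 10 == 3.0000000000000004
    k = max(1, math.ceil(round((1.0 - alpha) * len(ordered), 9)))
    return sum(ordered[:k]) / k


def select_plan(plans: List[str],
                simulate: Callable[[str, int], float],
                runs: int = 50,
                alpha: float = 0.9) -> str:
    """Simulate each candidate plan `runs` times and return the one with the lowest CVaR."""
    scores: Dict[str, float] = {
        plan: cvar([simulate(plan, run) for run in range(runs)], alpha) for plan in plans
    }
    return min(scores, key=scores.__getitem__)


def toy_simulate(plan: str, run: int) -> float:
    """Hypothetical stand-in for a low-level AUV simulation; returns mission cost."""
    if plan == "shortcut":
        return 20.0 if run % 5 == 4 else 8.0  # usually cheap, costly in 1 run out of 5
    return 11.0  # "detour": slower but consistent


plans = ["shortcut", "detour"]
print(min(plans, key=lambda p: mean(toy_simulate(p, r) for r in range(50))))  # shortcut
print(select_plan(plans, toy_simulate))  # detour
```

A planner that ranks plans by expected cost alone would prefer the shortcut (mean 10.4 versus 11.0). A risk-averse criterion prefers the detour because the shortcut's worst 10% of runs cost 20.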

Uncertainty Driven Active Learning for Image Segmentation in Underwater Inspection

Mar 20, 2024

Abstract: Active learning aims to select the minimum amount of data needed to train a model that performs similarly to a model trained on the entire dataset. We study the potential of active learning for image segmentation in underwater infrastructure inspection tasks, where large amounts of data are typically collected. Pipeline inspection images are usually semantically repetitive but vary greatly in quality. We use mutual information, calculated using Monte Carlo dropout, as the acquisition function. To assess the effectiveness of the framework, DenseNet and HyperSeg are trained on the CamVid dataset using active learning. In addition, HyperSeg is trained on a pipeline inspection dataset of over 50,000 images. On the pipeline dataset, HyperSeg with active learning achieved 67.5% mean IoU using 12.5% of the data, compared with 61.4% using the same amount of randomly selected images. This shows that using active learning for segmentation models in underwater inspection tasks can significantly lower the labeling cost.
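The acquisition function named above, mutual information estimated with Monte Carlo dropout, is the entropy of the averaged prediction minus the average entropy of the individual stochastic passes. The sketch below assumes the T forward passes with dropout enabled have already been run and that their per-pixel softmax outputs are given as nested lists. Scoring an image by averaging per-pixel mutual information and taking the top-k images are assumptions for illustration; the paper's exact aggregation may differ.

```python
import math
from typing import Dict, List, Sequence

Probs = Sequence[float]  # softmax class probabilities for one pixel


def entropy(p: Probs) -> float:
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0.0)


def pixel_mutual_information(samples: Sequence[Probs]) -> float:
    """Mutual information for one pixel: entropy of the mean prediction
    minus the mean entropy of each MC-dropout pass."""
    t = len(samples)
    mean_p = [sum(s[c] for s in samples) / t for c in range(len(samples[0]))]
    return entropy(mean_p) - sum(entropy(s) for s in samples) / t


def image_score(pixels: Sequence[Sequence[Probs]]) -> float:
    """Score an image by averaging per-pixel MI (the aggregation is an assumption)."""
    return sum(pixel_mutual_information(s) for s in pixels) / len(pixels)


def select_batch(pool: Dict[str, Sequence[Sequence[Probs]]], k: int) -> List[str]:
    """Return the ids of the k unlabeled images with the highest score."""
    return sorted(pool, key=lambda i: image_score(pool[i]), reverse=True)[:k]


pool = {
    "calm": [[[0.9, 0.1], [0.9, 0.1]]],      # one pixel, two passes that agree
    "confused": [[[1.0, 0.0], [0.0, 1.0]]],  # one pixel, two passes that disagree
}
print(select_batch(pool, k=1))  # ['confused']
```

Passes that agree give zero mutual information even when each pass is uncertain, so the score targets disagreement caused by model (epistemic) uncertainty rather than inherently ambiguous pixels.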

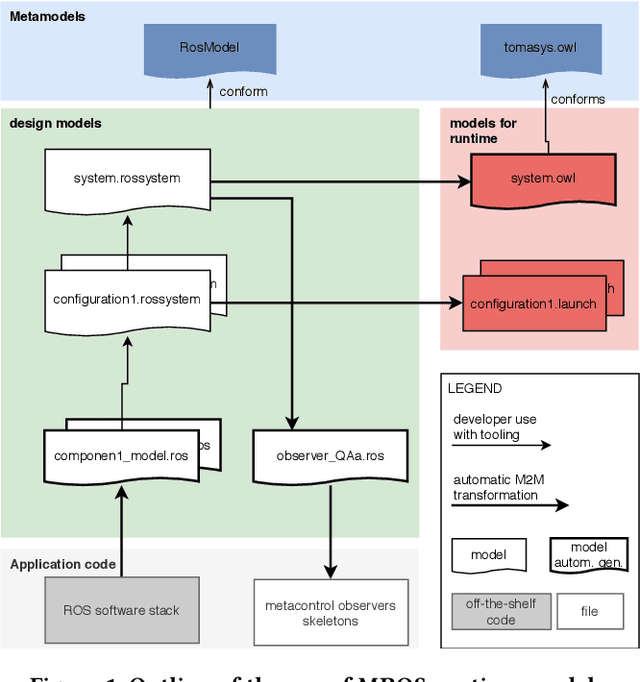

MROS: A framework for robot self-adaptation

Mar 16, 2023

Abstract: Self-adaptation can be used in robotics to increase system robustness and reliability. This work describes the Metacontrol method for self-adaptation in robotics. In particular, it details how the MROS (Metacontrol for ROS Systems) framework implements and packages Metacontrol, and it demonstrates how MROS can be applied in a scenario where a mobile robot navigates on a factory floor. Video: https://www.youtube.com/watch?v=ISe9aMskJuE

Behavior Trees and State Machines in Robotics Applications

Aug 08, 2022

Abstract: Autonomous robots combine a variety of skills to form increasingly complex behaviors called missions. While the skills are often programmed at a relatively low level of abstraction, their coordination is architecturally separated and often expressed in higher-level languages or frameworks. State Machines have been the go-to modeling language for decades, but recently the language of Behavior Trees has gained attention among roboticists. Originally designed for computer games to model autonomous actors, Behavior Trees offer an extensible tree-based representation of missions and are praised for supporting modular design and code reuse. However, even though several implementations of the language are in use, little is known about its usage and scope in the real world. How do concepts offered by behavior trees relate to traditional languages, such as state machines? How are behavior tree and state machine concepts used in applications? We present a study of the key language concepts in Behavior Trees and their use in real-world robotic applications. We identify behavior tree languages and compare their semantics to the most well-known behavior modeling language in robotics: state machines. We mine open-source repositories for robotics applications that use the languages and analyze this usage. We find similarities between the two behavior modeling languages, both in language design and in how open-source projects use them to accommodate the needs of the robotic domain. We contribute a dataset of real-world behavior models, hoping to inspire the community to use and further develop this language, associated tools, and analysis techniques.
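To make the contrast with state machines concrete, here is a minimal sketch of the core Behavior Tree execution model: a tree is "ticked" from the root, and each node returns success, failure, or running. Sequence and Fallback nodes decide which child to tick next from those return values, so there are no explicit transitions as in a state machine. This is a reactive (memoryless) variant written for illustration; real libraries add more node types and often sequences with memory, and the leaf names here are hypothetical.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Node:
    def tick(self) -> Status:
        raise NotImplementedError


class Action(Node):
    """Leaf node wrapping a callable that performs (part of) a skill."""
    def __init__(self, name: str, fn: Callable[[], Status]) -> None:
        self.name, self.fn = name, fn

    def tick(self) -> Status:
        return self.fn()


class Sequence(Node):
    """Ticks children left to right; returns the first status that is not SUCCESS."""
    def __init__(self, children: List[Node]) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback(Node):
    """Ticks children left to right; returns the first status that is not FAILURE."""
    def __init__(self, children: List[Node]) -> None:
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


log: List[str] = []


def leaf(name: str, status: Status) -> Action:
    """Hypothetical leaf that records that it was ticked and returns a fixed status."""
    def fn() -> Status:
        log.append(name)
        return status
    return Action(name, fn)


mission = Fallback([
    Sequence([leaf("battery_ok?", Status.FAILURE), leaf("navigate", Status.RUNNING)]),
    leaf("dock_and_charge", Status.RUNNING),
])
print(mission.tick(), log)  # Status.RUNNING ['battery_ok?', 'dock_and_charge']
```

The failing battery check aborts the navigation sequence, and the fallback switches to docking. In a state machine the same behavior would need an explicit transition from a navigation state to a charging state.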

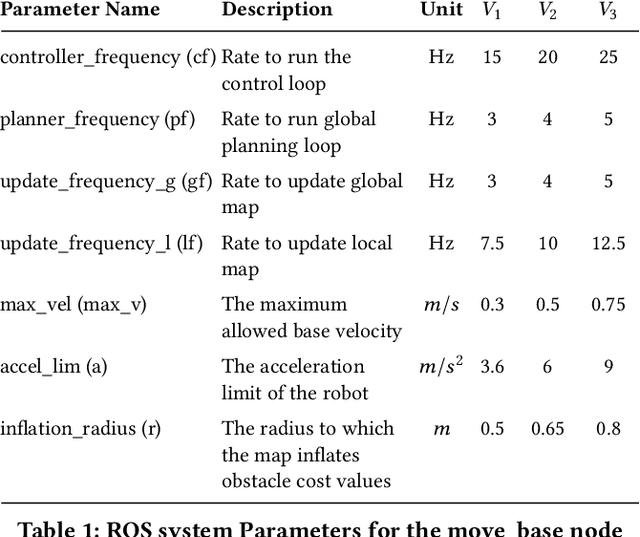

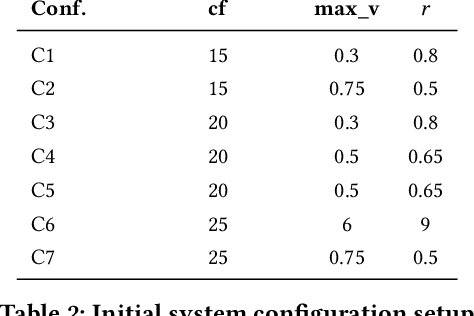

MROS: Runtime Adaptation For Robot Control Architectures

Oct 19, 2020

Abstract: Known attempts to build autonomous robots rely on complex control architectures, often implemented with the Robot Operating System platform (ROS). These architectures need to be dynamically adaptable in order to cope with changing environment conditions, new mission requirements, or component failures. The implementation of adaptable architectures is very often ad hoc and quickly becomes cumbersome and expensive. We present a structured model-based framework for adapting robot control architectures at run time to satisfy given quality requirements. We use a formal meta-model to represent the configuration space of control architectures and the corresponding mission requirements. The meta-model is implemented as an OWL ontology with SWRL rules, enabling the use of an off-the-shelf reasoner for diagnostics and adaptation. The method is discussed and evaluated using two case studies of real, ROS-based systems: (i) an autonomous dual-arm mobile manipulator building a pyramid and (ii) a mobile robot navigating in a factory environment.
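The kind of decision the reasoner makes (choose, from the configuration space, a configuration that avoids failed components and still meets the quality requirements) can be sketched in plain Python. This is only a stand-in for illustration: MROS expresses this with an OWL ontology, SWRL rules, and an off-the-shelf reasoner, not with the code below. The `Configuration` type, the quality attributes as numbers in [0, 1], the configuration names, and the "maximize one preferred attribute" policy are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Configuration:
    name: str
    components: Set[str]         # components the configuration depends on
    qualities: Dict[str, float]  # hypothetical quality-attribute levels in [0, 1]


def select_configuration(configs: List[Configuration],
                         requirements: Dict[str, float],
                         failed: Set[str],
                         prefer: str) -> Optional[Configuration]:
    """Return the admissible configuration with the highest `prefer` quality, or None."""
    admissible = [
        c for c in configs
        if not (c.components & failed)  # diagnosis: skip configurations using failed parts
        and all(c.qualities.get(q, 0.0) >= minimum for q, minimum in requirements.items())
    ]
    if not admissible:
        return None  # nothing satisfies the requirements; the mission layer must react
    return max(admissible, key=lambda c: c.qualities.get(prefer, 0.0))


configs = [
    Configuration("laser_fast", {"laser"}, {"safety": 0.6, "performance": 0.9}),
    Configuration("laser_safe", {"laser"}, {"safety": 0.9, "performance": 0.7}),
    Configuration("camera_nav", {"camera"}, {"safety": 0.7, "performance": 0.5}),
]
requirements = {"safety": 0.65}

print(select_configuration(configs, requirements, set(), "performance").name)      # laser_safe
print(select_configuration(configs, requirements, {"laser"}, "performance").name)  # camera_nav
```

When the laser fails, the robot is reconfigured to camera-based navigation instead of stopping, which is the run-time adaptation the abstract describes, reduced here to a filter-and-rank step.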
