Einar Broch Johnsen

Dept. of Informatics, University of Oslo

Beyond the Control Equations: An Artifact Study of Implementation Quality in Robot Control Software

Feb 04, 2026

Abstract: A controller -- a software module managing hardware behavior -- is a key component of a typical robot system. While control theory gives safety guarantees for standard controller designs, the practical implementation of controllers in software introduces complexities that are often overlooked. Controllers are often designed in continuous space, while the software is executed in discrete space, undermining some of the theoretical guarantees. Despite extensive research on control theory and control modeling, little attention has been paid to the implementations of controllers and how their theoretical guarantees are ensured in real-world software systems. We investigate 184 real-world controller implementations in open-source robot software. We examine their application context, the implementation characteristics, and the testing methods employed to ensure correctness. We find that the implementations often handle discretization in an ad hoc manner, leading to potential issues with real-time reliability. Challenges such as timing inconsistencies, lack of proper error handling, and inadequate consideration of real-time constraints further complicate matters. Testing practices are superficial, and no systematic verification of theoretical guarantees is used, leaving possible inconsistencies between expected and actual behavior. Our findings highlight the need for improved implementation guidelines and rigorous verification techniques to ensure the reliability and safety of robotic controllers in practice.
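To make the discretization concern concrete, the following is a minimal sketch of a textbook PID controller that uses the measured time step instead of an assumed fixed rate. It is not taken from the study; the class name `DiscretePID`, its gains, and the error-handling policy are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DiscretePID:
    """Hypothetical textbook PID, discretized with the measured time step."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        # Reject non-positive steps explicitly rather than dividing by zero.
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        # Integrate with the actual elapsed time (rectangle rule).
        self.integral += error * dt
        # Backward-difference derivative; zero on the first sample.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


pid = DiscretePID(kp=2.0, ki=1.0, kd=0.5)
u1 = pid.update(error=1.0, dt=0.1)   # first sample
u2 = pid.update(error=0.5, dt=0.2)   # a later sample with a different step
```

Passing `dt` on every call keeps the integral and derivative terms consistent when the loop period jitters, which is one simple way to avoid a hard-coded sampling rate.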

Counterfactual Strategies for Markov Decision Processes

May 14, 2025

Abstract: Counterfactuals are widely used in AI to explain how minimal changes to a model's input can lead to a different output. However, established methods for computing counterfactuals typically focus on one-step decision-making, and are not directly applicable to sequential decision-making tasks. This paper fills this gap by introducing counterfactual strategies for Markov Decision Processes (MDPs). During MDP execution, a strategy decides which of the enabled actions (with known probabilistic effects) to execute next. Given an initial strategy that reaches an undesired outcome with a probability above some limit, we identify minimal changes to the initial strategy to reduce that probability below the limit. We encode such counterfactual strategies as solutions to non-linear optimization problems, and further extend our encoding to synthesize diverse counterfactual strategies. We evaluate our approach on four real-world datasets and demonstrate its practical viability in sophisticated sequential decision-making tasks.
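The idea of a counterfactual strategy can be illustrated on a toy example. The sketch below is not the paper's non-linear optimization encoding: it swaps in a brute-force search over memoryless deterministic strategies, and the MDP, the state names, and the distance measure (number of states where the chosen action differs) are all hypothetical.

```python
from itertools import product

# Hypothetical toy MDP: MDP[state][action] = {successor: probability}.
MDP = {
    "s0": {"a": {"s1": 0.5, "bad": 0.5}, "b": {"s1": 0.9, "bad": 0.1}},
    "s1": {"a": {"goal": 0.8, "bad": 0.2}, "b": {"goal": 0.6, "s0": 0.4}},
    "goal": {},
    "bad": {},
}


def reach_probability(mdp, strategy, target, start, iterations=1000):
    """Probability of eventually reaching `target` from `start` under `strategy`."""
    prob = {s: 1.0 if s == target else 0.0 for s in mdp}
    for _ in range(iterations):  # fixed-point iteration on the induced Markov chain
        for s, action in strategy.items():
            prob[s] = sum(p * prob[t] for t, p in mdp[s][action].items())
    return prob[start]


def distance(first, second):
    """Number of states in which the two strategies pick different actions."""
    return sum(1 for s in first if first[s] != second[s])


def closest_counterfactual(mdp, initial, target, start, limit):
    """Brute-force search for the least-changed strategy below the limit."""
    states = list(initial)
    best = None
    for choice in product(*(mdp[s] for s in states)):
        candidate = dict(zip(states, choice))
        if reach_probability(mdp, candidate, target, start) < limit:
            d = distance(initial, candidate)
            if best is None or d < best[0]:
                best = (d, candidate)
    return best


initial = {"s0": "a", "s1": "a"}
result = closest_counterfactual(MDP, initial, target="bad", start="s0", limit=0.3)
```

Here the initial strategy reaches `bad` with probability 0.6, and changing only the action in `s0` lowers it to 0.28. Enumeration is exponential in the number of states, which is why a scalable approach needs an optimization encoding instead.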

BedreFlyt: Improving Patient Flows through Hospital Wards with Digital Twins

May 07, 2025

Abstract: Digital twins are emerging as a valuable tool for short-term decision-making as well as for long-term strategic planning across numerous domains, including process industry, energy, space, transport, and healthcare. This paper reports on our ongoing work on designing a digital twin to enhance resource planning, e.g., for the in-patient ward needs in hospitals. By leveraging executable formal models for system exploration, ontologies for knowledge representation and an SMT solver for constraint satisfiability, our approach aims to explore hypothetical "what-if" scenarios to improve strategic planning processes, as well as to solve concrete, short-term decision-making tasks. Our proposed solution uses the executable formal model to turn a stream of arriving patients who need to be hospitalized into a stream of optimization problems, e.g., capturing daily inpatient ward needs, that can be solved by SMT techniques. The knowledge base, which formalizes domain knowledge, is used to model the needed configuration in the digital twin. This allows the twin to support both short-term decision-making and long-term strategic planning by generating scenarios that span average-case as well as worst-case resource needs, depending on the expected treatment of patients, and that range over variations in available resources, e.g., bed distribution in different rooms. We illustrate our digital twin architecture by considering the problem of bed bay allocation in a hospital ward.

* In Proceedings ASQAP 2025, arXiv:2505.02873

ROSA: A Knowledge-based Solution for Robot Self-Adaptation

Apr 29, 2025

Abstract: Autonomous robots must operate in diverse environments and handle multiple tasks despite uncertainties. This creates challenges in designing software architectures and task decision-making algorithms, as different contexts may require distinct task logic and architectural configurations. To address this, robotic systems can be designed as self-adaptive systems capable of adapting their task execution and software architecture at runtime based on their context. This paper introduces ROSA, a novel knowledge-based framework for RObot Self-Adaptation, which enables task-and-architecture co-adaptation (TACA) in robotic systems. ROSA achieves this by providing a knowledge model that captures all application-specific knowledge required for adaptation and by reasoning over this knowledge at runtime to determine when and how adaptation should occur. In addition to a conceptual framework, this work provides an open-source ROS 2-based reference implementation of ROSA and evaluates its feasibility and performance in an underwater robotics application. Experimental results highlight ROSA's advantages in reusability and development effort for designing self-adaptive robotic systems.

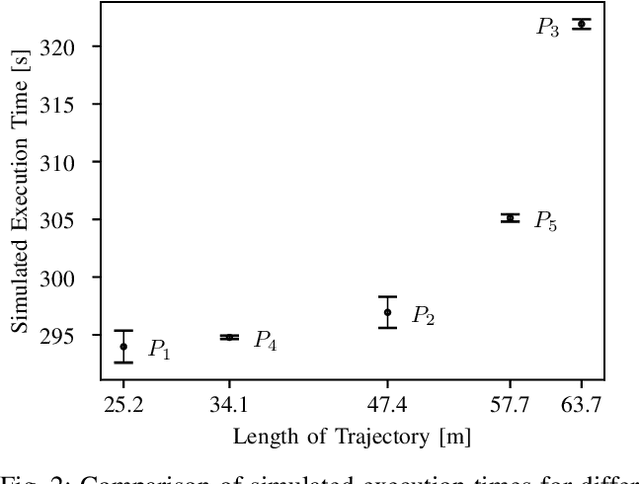

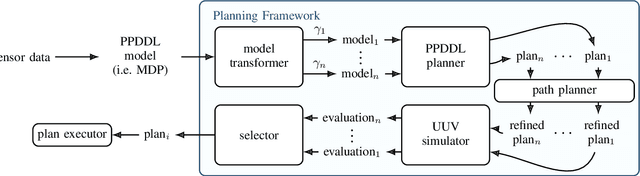

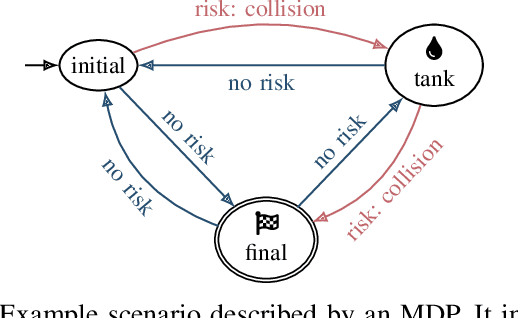

Risk-Averse Planning and Plan Assessment for Marine Robots

Oct 01, 2024

Abstract: Autonomous Underwater Vehicles (AUVs) need to operate for days without human intervention and thus must be able to do efficient and reliable task planning. Unfortunately, efficient task planning requires deliberately abstract domain models (for scalability reasons), which leads to plans that might be unreliable or underperforming in practice. An optimal abstract plan may turn out suboptimal or unreliable during physical execution. To overcome this, we introduce a method that first generates a selection of diverse high-level plans and then assesses them in a low-level simulation to select the optimal and most reliable candidate. We evaluate the method using a realistic underwater robot simulation, estimating the risk metrics for different scenarios and demonstrating the feasibility and effectiveness of the approach.

Symbolic State Partition for Reinforcement Learning

Sep 25, 2024

Abstract: Tabular reinforcement learning methods cannot operate directly on continuous state spaces. One solution for this problem is to partition the state space. A good partitioning enables generalization during learning and more efficient exploitation of prior experiences. Consequently, the learning process becomes faster and produces more reliable policies. However, partitioning introduces approximation, which is particularly harmful in the presence of nonlinear relations between state components. An ideal partition should be as coarse as possible, while capturing the key structure of the state space for the given problem. This work extracts partitions from the environment dynamics by symbolic execution. We show that symbolic partitioning improves state space coverage with respect to environmental behavior and allows reinforcement learning to perform better for sparse rewards. We evaluate symbolic state space partitioning with respect to precision, scalability, learning agent performance and state space coverage for the learnt policies.
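For context, the simplest way to partition a continuous state space is a uniform grid. The sketch below shows that baseline only, not the symbolic partitioning the paper proposes; the function name `grid_cell`, the bounds, and the bin counts are illustrative assumptions.

```python
from collections import defaultdict


def grid_cell(state, lows, highs, bins):
    """Map a continuous state to the index tuple of its uniform grid cell."""
    cell = []
    for x, lo, hi, n in zip(state, lows, highs, bins):
        ratio = (x - lo) / (hi - lo)
        index = int(ratio * n)
        # Clamp so that out-of-range values fall into the border cells.
        cell.append(min(max(index, 0), n - 1))
    return tuple(cell)


# A tabular Q-function keyed by (cell, action); unseen entries default to 0.0.
q_table = defaultdict(float)

# Hypothetical two-dimensional state space: [0, 1] x [-2, 2], four bins per axis.
cell = grid_cell((0.25, -1.0), lows=(0.0, -2.0), highs=(1.0, 2.0), bins=(4, 4))
q_table[(cell, "left")] += 0.1
```

A uniform grid ignores the structure of the dynamics, so cells may group states that behave very differently; that is the kind of approximation error a problem-specific partition aims to reduce.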

Formal Modelling and Analysis of a Self-Adaptive Robotic System

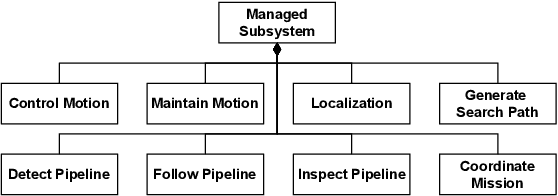

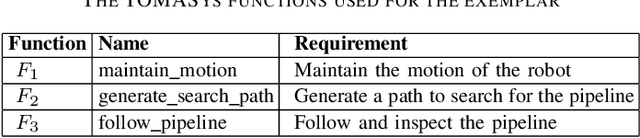

Aug 28, 2023

Abstract: Self-adaptation is a crucial feature of autonomous systems that must cope with uncertainties in, e.g., their environment and their internal state. Self-adaptive systems are often modelled as two-layered systems with a managed subsystem handling the domain concerns and a managing subsystem implementing the adaptation logic. We consider a case study of a self-adaptive robotic system; more concretely, an autonomous underwater vehicle (AUV) used for pipeline inspection. In this paper, we model and analyse it with the feature-aware probabilistic model checker ProFeat. The functionalities of the AUV are modelled in a feature model, capturing the AUV's variability. This allows us to model the managed subsystem of the AUV as a family of systems, where each family member corresponds to a valid feature configuration of the AUV. The managing subsystem of the AUV is modelled as a control layer capable of dynamically switching between such valid feature configurations, depending both on environmental and internal conditions. We use this model to analyse probabilistic reward and safety properties for the AUV.

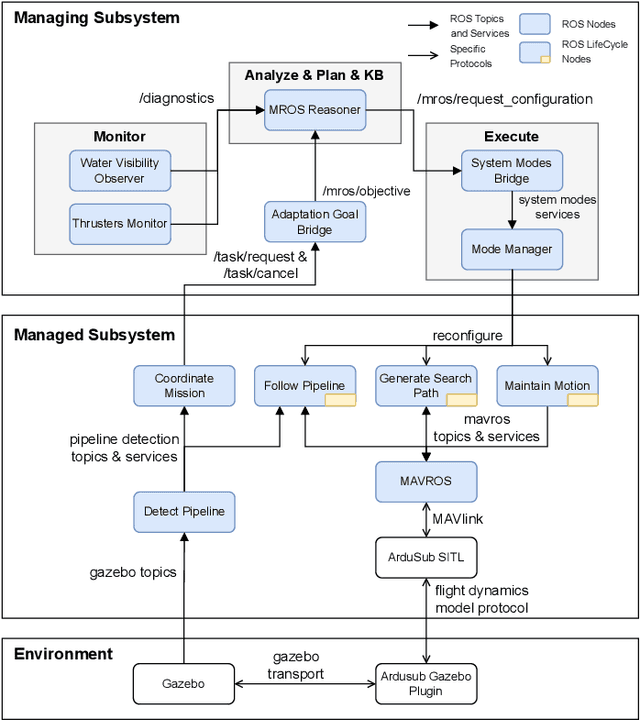

SUAVE: An Exemplar for Self-Adaptive Underwater Vehicles

Mar 16, 2023

Abstract: Once deployed in the real world, autonomous underwater vehicles (AUVs) are out of reach for human supervision yet need to take decisions to adapt to unstable and unpredictable environments. To facilitate research on self-adaptive AUVs, this paper presents SUAVE, an exemplar for two-layered system-level adaptation of AUVs, which clearly separates the application and self-adaptation concerns. The exemplar focuses on a mission for underwater pipeline inspection by a single AUV, implemented as a ROS2-based system. This mission must be completed while simultaneously accounting for uncertainties such as thruster failures and unfavorable environmental conditions. The paper discusses how SUAVE can be used with different self-adaptation frameworks, illustrated by an experiment using the Metacontrol framework to compare AUV behavior with and without self-adaptation. The experiment shows that the use of Metacontrol to adapt the AUV during its mission improves its performance when measured by the overall time taken to complete the mission or the length of the inspected pipeline.

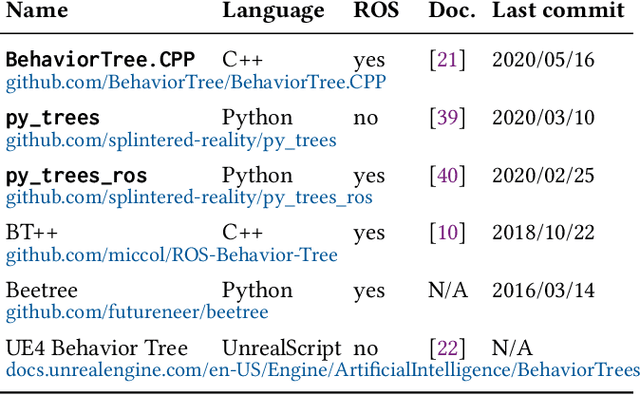

Behavior Trees and State Machines in Robotics Applications

Aug 08, 2022

Abstract: Autonomous robots combine a variety of skills to form increasingly complex behaviors called missions. While the skills are often programmed at a relatively low level of abstraction, their coordination is architecturally separated and often expressed in higher-level languages or frameworks. State Machines have been the go-to modeling language for decades, but recently, the language of Behavior Trees gained attention among roboticists. Originally designed for computer games to model autonomous actors, Behavior Trees offer an extensible tree-based representation of missions and are praised for supporting modular design and reuse of code. However, even though several implementations of the language are in use, little is known about its usage and scope in the real world. How do concepts offered by behavior trees relate to traditional languages, such as state machines? How are behavior tree and state machine concepts used in applications? We present a study of the key language concepts in Behavior Trees and their use in real-world robotic applications. We identify behavior tree languages and compare their semantics to the most well-known behavior modeling language in robotics: state machines. We mine open-source repositories for robotics applications that use the languages and analyze this usage. We find similarities between the two behavior modeling languages in terms of language design and their usage in open-source projects to accommodate the needs of the robotics domain. We contribute a dataset of real-world behavior models, hoping to inspire the community to use and further develop this language, associated tools, and analysis techniques.

Behavior Trees in Action: A Study of Robotics Applications

Oct 13, 2020

Abstract: Autonomous robots combine a variety of skills to form increasingly complex behaviors called missions. While the skills are often programmed at a relatively low level of abstraction, their coordination is architecturally separated and often expressed in higher-level languages or frameworks. Recently, the language of Behavior Trees gained attention among roboticists for this reason. Originally designed for computer games to model autonomous actors, Behavior Trees offer an extensible tree-based representation of missions. However, even though several implementations of the language are in use, little is known about its usage and scope in the real world. How do behavior trees relate to traditional languages for describing behavior? How are behavior tree concepts used in applications? What are the benefits of using them? We present a study of the key language concepts in Behavior Trees and their use in real-world robotic applications. We identify behavior tree languages and compare their semantics to the most well-known behavior modeling languages: state and activity diagrams. We mine open-source repositories for robotics applications that use the language and analyze this usage. We find that Behavior Trees are a pragmatic language, not fully specified, allowing projects to extend it even for just one model. Behavior trees clearly resemble the models-at-runtime paradigm. We contribute a dataset of real-world behavior models, hoping to inspire the community to use and further develop this language, associated tools, and analysis techniques.
