Andrzej Wąsowski

Beyond the Control Equations: An Artifact Study of Implementation Quality in Robot Control Software

Feb 04, 2026Abstract:A controller -- a software module managing hardware behavior -- is a key component of a typical robot system. While control theory gives safety guarantees for standard controller designs, the practical implementation of controllers in software introduces complexities that are often overlooked. Controllers are often designed in continuous space, while the software is executed in discrete space, undermining some of the theoretical guarantees. Despite extensive research on control theory and control modeling, little attention has been paid to the implementations of controllers and how their theoretical guarantees are ensured in real-world software systems. We investigate 184 real-world controller implementations in open-source robot software. We examine their application context, the implementation characteristics, and the testing methods employed to ensure correctness. We find that the implementations often handle discretization in an ad hoc manner, leading to potential issues with real-time reliability. Challenges such as timing inconsistencies, lack of proper error handling, and inadequate consideration of real-time constraints further complicate matters. Testing practices are superficial, no systematic verification of theoretical guarantees is used, leaving possible inconsistencies between expected and actual behavior. Our findings highlight the need for improved implementation guidelines and rigorous verification techniques to ensure the reliability and safety of robotic controllers in practice.

NeRF-To-Real Tester: Neural Radiance Fields as Test Image Generators for Vision of Autonomous Systems

Dec 20, 2024Abstract:Autonomous inspection of infrastructure on land and in water is a quickly growing market, with applications including surveying constructions, monitoring plants, and tracking environmental changes in on- and off-shore wind energy farms. For Autonomous Underwater Vehicles and Unmanned Aerial Vehicles overfitting of controllers to simulation conditions fundamentally leads to poor performance in the operation environment. There is a pressing need for more diverse and realistic test data that accurately represents the challenges faced by these systems. We address the challenge of generating perception test data for autonomous systems by leveraging Neural Radiance Fields to generate realistic and diverse test images, and integrating them into a metamorphic testing framework for vision components such as vSLAM and object detection. Our tool, N2R-Tester, allows training models of custom scenes and rendering test images from perturbed positions. An experimental evaluation of N2R-Tester on eight different vision components in AUVs and UAVs demonstrates the efficacy and versatility of the approach.

Symbolic State Partition for Reinforcement Learning

Sep 25, 2024Abstract:Tabular reinforcement learning methods cannot operate directly on continuous state spaces. One solution for this problem is to partition the state space. A good partitioning enables generalization during learning and more efficient exploitation of prior experiences. Consequently, the learning process becomes faster and produces more reliable policies. However, partitioning introduces approximation, which is particularly harmful in the presence of nonlinear relations between state components. An ideal partition should be as coarse as possible, while capturing the key structure of the state space for the given problem. This work extracts partitions from the environment dynamics by symbolic execution. We show that symbolic partitioning improves state space coverage with respect to environmental behavior and allows reinforcement learning to perform better for sparse rewards. We evaluate symbolic state space partitioning with respect to precision, scalability, learning agent performance and state space coverage for the learnt policies.

ROBUST: 221 Bugs in the Robot Operating System

Apr 04, 2024Abstract:As robotic systems such as autonomous cars and delivery drones assume greater roles and responsibilities within society, the likelihood and impact of catastrophic software failure within those systems is increased.To aid researchers in the development of new methods to measure and assure the safety and quality of robotics software, we systematically curated a dataset of 221 bugs across 7 popular and diverse software systems implemented via the Robot Operating System (ROS). We produce historically accurate recreations of each of the 221 defective software versions in the form of Docker images, and use a grounded theory approach to examine and categorize their corresponding faults, failures, and fixes. Finally, we reflect on the implications of our findings and outline future research directions for the community.

Behavior Trees in Action: A Study of Robotics Applications

Oct 13, 2020

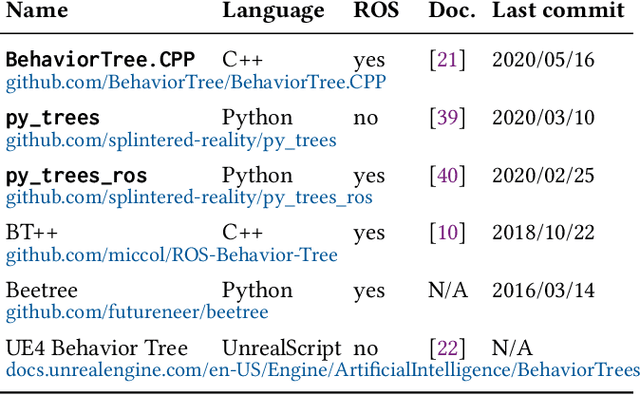

Abstract:Autonomous robots combine a variety of skills to form increasingly complex behaviors called missions. While the skills are often programmed at a relatively low level of abstraction, their coordination is architecturally separated and often expressed in higher-level languages or frameworks. Recently, the language of Behavior Trees gained attention among roboticists for this reason. Originally designed for computer games to model autonomous actors, Behavior Trees offer an extensible tree-based representation of missions. However, even though, several implementations of the language are in use, little is known about its usage and scope in the real world. How do behavior trees relate to traditional languages for describing behavior? How are behavior tree concepts used in applications? What are the benefits of using them? We present a study of the key language concepts in Behavior Trees and their use in real-world robotic applications. We identify behavior tree languages and compare their semantics to the most well-known behavior modeling languages: state and activity diagrams. We mine open source repositories for robotics applications that use the language and analyze this usage. We find that Behavior Trees are a pragmatic language, not fully specified, allowing projects to extend it even for just one model. Behavior trees clearly resemble the models-at-runtime paradigm. We contribute a dataset of real-world behavior models, hoping to inspire the community to use and further develop this language, associated tools, and analysis techniques.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge