Andrew Katumba

WAXAL: A Large-Scale Multilingual African Language Speech Corpus

Feb 02, 2026

Abstract:The advancement of speech technology has predominantly favored high-resource languages, creating a significant digital divide for speakers of most Sub-Saharan African languages. To address this gap, we introduce WAXAL, a large-scale, openly accessible speech dataset for 21 languages representing over 100 million speakers. The collection consists of two main components: an Automated Speech Recognition (ASR) dataset containing approximately 1,250 hours of transcribed, natural speech from a diverse range of speakers, and a Text-to-Speech (TTS) dataset with over 180 hours of high-quality, single-speaker recordings reading phonetically balanced scripts. This paper details our methodology for data collection, annotation, and quality control, which involved partnerships with four African academic and community organizations. We provide a detailed statistical overview of the dataset and discuss its potential limitations and ethical considerations. The WAXAL datasets are released at https://huggingface.co/datasets/google/WaxalNLP under the permissive CC-BY-4.0 license to catalyze research, enable the development of inclusive technologies, and serve as a vital resource for the digital preservation of these languages.

Intelligent Traffic Surveillance for Real-Time Vehicle Detection, License Plate Recognition, and Speed Estimation

Jan 01, 2026

Abstract:Speeding is a major contributor to road fatalities, particularly in developing countries such as Uganda, where road safety infrastructure is limited. This study proposes a real-time intelligent traffic surveillance system tailored to such regions, using computer vision techniques to address vehicle detection, license plate recognition, and speed estimation. To train the models, the study collected a dataset using a speed gun, a Canon camera, and a mobile phone. License plate detection using YOLOv8 achieved a mean average precision (mAP) of 97.9%. For character recognition on the detected license plates, the CNN model achieved a character error rate (CER) of 3.85%, while the transformer model reduced the CER to 1.79%. Speed estimation used source and target regions of interest, yielding a margin of error of 10 km/h. Additionally, a database was established to correlate user information with vehicle detection data, enabling automated ticket issuance by SMS through the Africa's Talking API. This system addresses critical traffic management needs in resource-constrained environments and shows potential to reduce road accidents through automated traffic enforcement in developing countries where such interventions are urgently needed.
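
The character error rate quoted above is conventionally defined as the character-level edit distance between the recognized text and the reference text, divided by the number of reference characters. The following is a minimal sketch of that standard definition, not the study's own evaluation code; the function names and the example plate strings are hypothetical.

```python
from typing import Sequence


def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, substitutions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(refs: Sequence[str], hyps: Sequence[str]) -> float:
    """Total character edits divided by total reference characters."""
    if len(refs) != len(hyps):
        raise ValueError("refs and hyps must have the same length")
    total_chars = sum(len(r) for r in refs)
    if total_chars == 0:
        raise ValueError("reference text is empty")
    total_edits = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return total_edits / total_chars


# Hypothetical plate strings, used only to illustrate the call.
references = ["UAX123B", "UBB456C"]
predictions = ["UAX123B", "UBB455C"]
cer = character_error_rate(references, predictions)
```

Here one substituted character out of 14 reference characters gives a CER of 1/14, roughly 7.1%.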

Amplify Initiative: Building A Localized Data Platform for Globalized AI

Apr 18, 2025

Abstract:Current AI models often fail to account for local context and language, given the predominance of English and Western internet content in their training data. This hinders the global relevance, usefulness, and safety of these models as they gain more users around the globe. Amplify Initiative, a data platform and methodology, leverages expert communities to collect diverse, high-quality data to address the limitations of these models. The platform is designed to enable co-creation of datasets, provide access to high-quality multilingual datasets, and offer recognition to data authors. This paper presents the approach to co-creating datasets with domain experts (e.g., health workers, teachers) through a pilot conducted in Sub-Saharan Africa (Ghana, Kenya, Malawi, Nigeria, and Uganda). In partnership with local researchers situated in these countries, the pilot demonstrated an end-to-end approach to co-creating data with 155 experts in sensitive domains (e.g., physicians, bankers, anthropologists, human and civil rights advocates). This approach, implemented with an Android app, resulted in an annotated dataset of 8,091 adversarial queries in seven languages (e.g., Luganda, Swahili, Chichewa), capturing nuanced and contextual information related to key themes such as misinformation and public interest topics. This dataset in turn can be used to evaluate models for their safety and cultural relevance within the context of these languages.

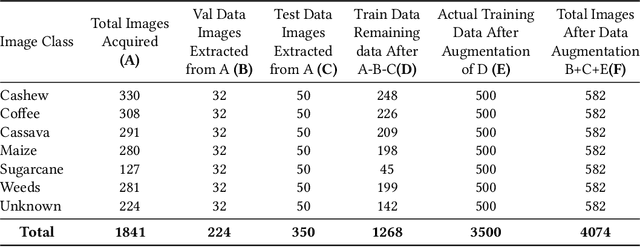

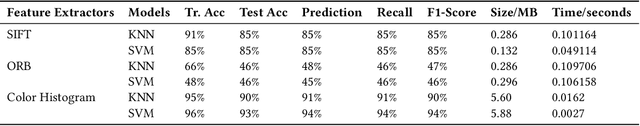

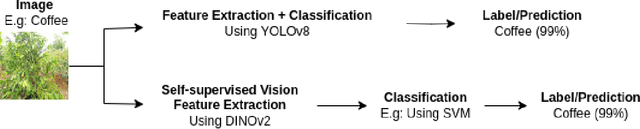

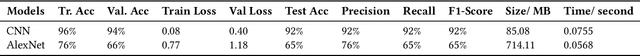

Enhanced Infield Agriculture with Interpretable Machine Learning Approaches for Crop Classification

Aug 22, 2024

Abstract:The increasing popularity of Artificial Intelligence in recent years has led to a surge in interest in image classification, especially in the agricultural sector. With the help of Computer Vision, Machine Learning, and Deep Learning, the sector has undergone a significant transformation, leading to the development of new techniques for crop classification in the field. Despite extensive research on image classification techniques, most approaches have limitations such as low accuracy, limited use of data, and a lack of reporting on model size and prediction time. The most significant limitation of all is the lack of model explainability. This research evaluates four approaches to crop classification: traditional ML with handcrafted feature extraction methods such as SIFT, ORB, and Color Histogram; a custom-designed CNN and an established DL architecture, AlexNet; transfer learning on five models pre-trained on ImageNet (EfficientNetV2, ResNet152V2, Xception, Inception-ResNetV2, and MobileNetV3); and cutting-edge foundation models such as YOLOv8 and DINOv2, a self-supervised Vision Transformer model. All models performed well, but Xception outperformed the others in terms of generalization, achieving 98% accuracy on the test data, with a model size of 80.03 MB and a prediction time of 0.0633 seconds. A key aspect of this research was the application of Explainable AI to provide explainability for all the models. This paper presents the explainability of the Xception model with LIME, SHAP, and GradCAM, ensuring transparency and trustworthiness in the models' predictions. This study highlights the importance of selecting the right model according to task-specific needs. It also underscores the important role of explainability in deploying AI in agriculture, providing insightful information to help enhance AI-driven crop management strategies.

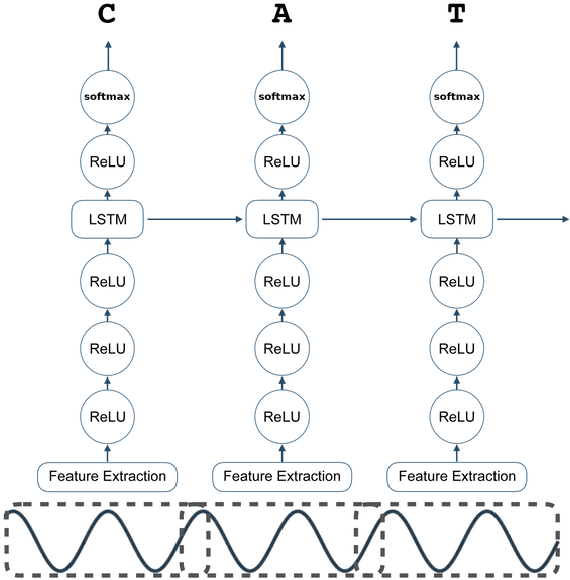

Building a Luganda Text-to-Speech Model From Crowdsourced Data

May 16, 2024

Abstract:Text-to-speech (TTS) development for African languages such as Luganda is still limited, primarily due to the scarcity of high-quality, single-speaker recordings essential for training TTS models. Prior work has focused on utilizing the Luganda Common Voice recordings of multiple speakers aged 20 to 49. Although the generated speech is intelligible, it is still of lower quality than that of a model trained on studio-grade recordings. This is due to the insufficient data preprocessing methods applied to improve the quality of the Common Voice recordings. Furthermore, speech convergence is more difficult to achieve due to varying intonations, as well as background noise. In this paper, we show that the quality of Luganda TTS from Common Voice can improve by training on multiple speakers of close intonation in addition to further preprocessing of the training data. Specifically, we selected six female speakers with close intonation, determined by subjectively listening to and comparing their voice recordings. In addition to trimming out silent portions from the beginning and end of the recordings, we applied a pre-trained speech enhancement model to reduce background noise and enhance audio quality. We also utilized a pre-trained, non-intrusive, self-supervised Mean Opinion Score (MOS) estimation model to retain only recordings with an estimated MOS over 3.5, indicating high perceived quality. Subjective MOS evaluations from nine native Luganda speakers demonstrate that our TTS model achieves a significantly better MOS of 3.55 compared to the reported 2.5 MOS of the existing model. Moreover, for a fair comparison, our model trained on six speakers outperforms models trained on a single speaker (3.13 MOS) or two speakers (3.22 MOS). This showcases the effectiveness of compensating for the lack of data from one speaker with data from multiple speakers of close intonation to improve TTS quality.
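
The MOS-based filtering step described above can be pictured as a simple threshold over per-recording scores. The sketch below assumes each recording already carries a score from some MOS estimation model; the `Clip` type, the file names, and the scores and ratings are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass(frozen=True)
class Clip:
    """A recording plus the score an (assumed) MOS estimator gave it."""
    path: str
    estimated_mos: float


def select_clips(clips: Iterable[Clip], threshold: float = 3.5) -> List[Clip]:
    """Keep only clips whose estimated MOS is strictly above the threshold."""
    return [clip for clip in clips if clip.estimated_mos > threshold]


def mean_opinion_score(ratings: Iterable[int]) -> float:
    """A subjective MOS is the arithmetic mean of listener ratings (1 to 5)."""
    return mean(ratings)


# Hypothetical clips and listener ratings, for illustration only.
clips = [
    Clip("clip_001.wav", 3.2),
    Clip("clip_002.wav", 3.5),
    Clip("clip_003.wav", 4.1),
]
kept = select_clips(clips)
listener_mos = mean_opinion_score([4, 3, 4, 3, 4])
```

With a strict "over 3.5" rule, a clip scored exactly 3.5 is dropped; whether the boundary is inclusive is an assumption the abstract does not settle.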

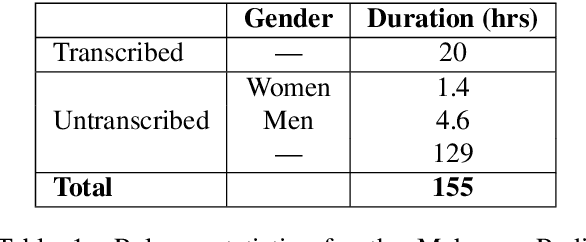

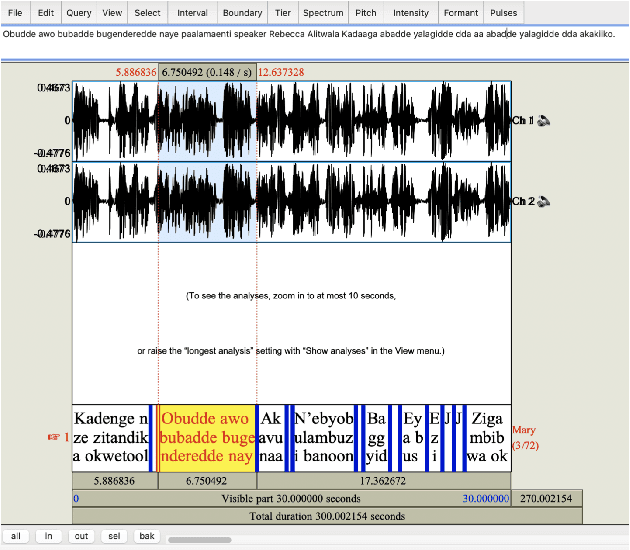

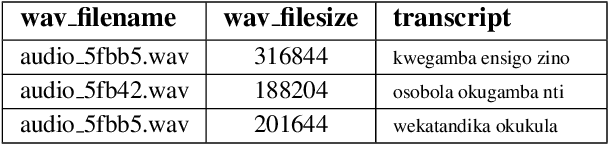

The Makerere Radio Speech Corpus: A Luganda Radio Corpus for Automatic Speech Recognition

Jun 20, 2022

Abstract:Building a usable radio monitoring automatic speech recognition (ASR) system is a challenging task for under-resourced languages, yet it is paramount in societies where radio is the main medium of public communication and discussion. Initial efforts by the United Nations in Uganda have shown how important it is for national planning to understand the perceptions of rural people who are excluded from social media. However, these efforts are being challenged by the absence of transcribed speech datasets. In this paper, the Makerere Artificial Intelligence research lab releases a Luganda radio speech corpus of 155 hours. To our knowledge, this is the first publicly available radio dataset in sub-Saharan Africa. The paper describes the development of the voice corpus and presents baseline Luganda ASR performance results using the Coqui STT toolkit, an open-source speech recognition toolkit.

An Overview of Machine Learning-aided Optical Performance Monitoring Techniques

Jun 20, 2021

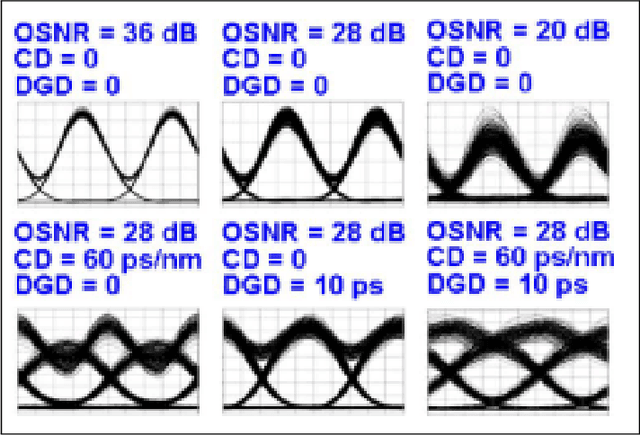

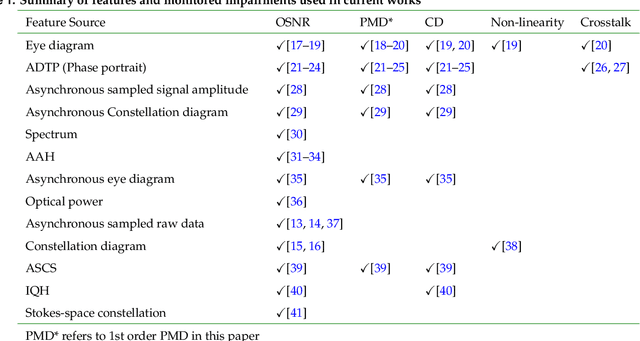

Abstract:Future communication systems are faced with increased demand for high capacity, dynamic bandwidth, reliability and heterogeneous traffic. To meet these requirements, networks have become more complex and thus require new design methods and monitoring techniques, as they evolve towards becoming autonomous. Machine learning has come to the forefront in recent years as a promising technology to aid in this evolution. Optical fiber communications can already provide the high capacity required for most applications; however, there is a need for increased scalability and adaptability to changing user demands and link conditions. Accurate performance monitoring is an integral part of this transformation. In this paper we review optical performance monitoring (OPM) techniques where machine learning algorithms have been applied. Moreover, since a lot of OPM depends on knowledge of the signal type, we also review work on modulation format recognition and bitrate identification. We also briefly introduce a neuromorphic approach to OPM as an emerging technique that has only recently been applied to this domain.

Face Recognition as a Method of Authentication in a Web-Based System

Mar 28, 2021

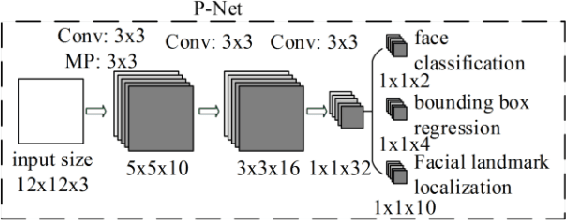

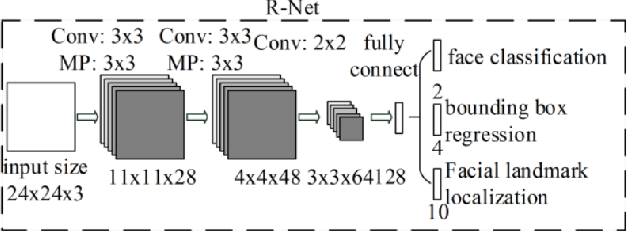

Abstract:Online information systems currently rely heavily on the traditional username and password method for protecting information and controlling access. With the advancement of biometric technology and the popularity of fields like AI and Machine Learning, biometric security is becoming increasingly popular because of its usability advantage. This paper reports how machine learning based face recognition can be integrated into a web-based system as a method of authentication to reap the benefits of improved usability. This paper includes a comparison of combinations of detection and classification algorithms with FaceNet for face recognition. The results show that a combination of MTCNN for detection, FaceNet for generating embeddings, and LinearSVC for classification outperforms other combinations with 95% accuracy. The resulting classifier is integrated into the web-based system and used for authenticating users.

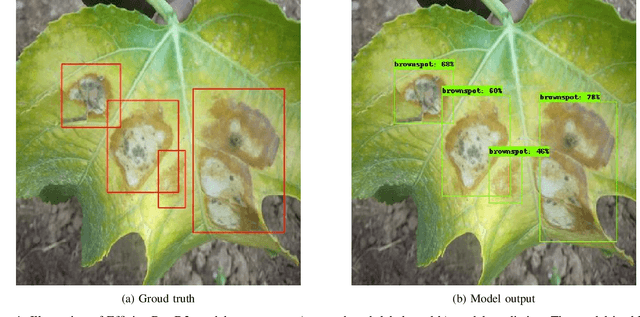

A Deep Learning-based Detector for Brown Spot Disease in Passion Fruit Plant Leaves

Jul 29, 2020

Abstract:Pests and diseases pose a key challenge to passion fruit farmers across Uganda and East Africa in general. They lead to loss of investment as yields reduce and losses increase. As the majority of the farmers in the country, including passion fruit farmers, are smallholder farmers from low-income households, they do not have sufficient information and means to combat these challenges. While passion fruits have the potential to improve the well-being of these farmers, as they have a short maturity period and high market value, without the required knowledge about the health of their crops, farmers cannot intervene promptly to turn the situation around. For this work, we have partnered with the Uganda National Crop Research Institute (NaCRRI) to develop a dataset of expertly labelled passion fruit plant leaves and fruits, both diseased and healthy. We have made use of their extension service to collect images from 5 districts in Uganda. With the dataset in place, we are employing state-of-the-art machine learning techniques, specifically deep learning, at scale for object detection and classification to correctly determine the health status of passion fruit plants and provide an accurate diagnosis for positive detections. This work focuses on two major diseases: woodiness (viral) and brown spot (fungal).

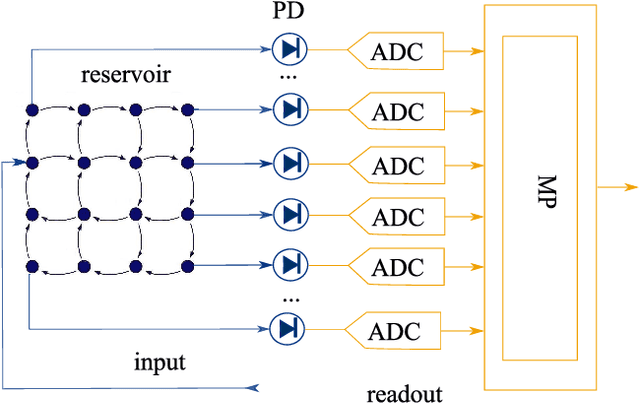

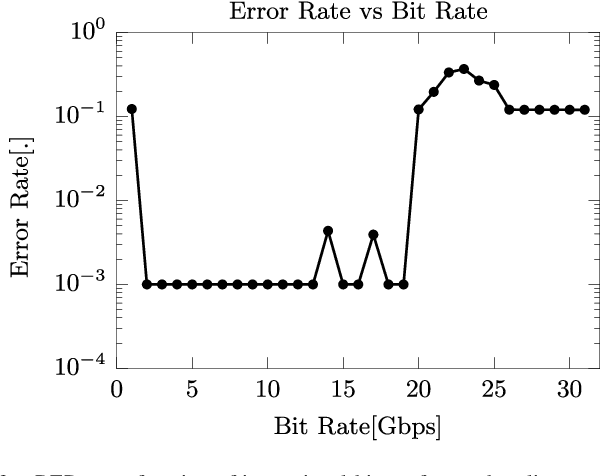

Training Passive Photonic Reservoirs with Integrated Optical Readout

Oct 08, 2018

Abstract:As Moore's law comes to an end, neuromorphic approaches to computing are on the rise. One of these, passive photonic reservoir computing, is a strong candidate for computing at high bitrates (> 10 Gbps) and with low energy consumption. Currently, though, both benefits are limited by the necessity to perform training and readout operations in the electrical domain. Thus, efforts are underway in the photonic community to design an integrated optical readout, which allows all operations to be performed in the optical domain. In addition to the technological challenge of designing such a readout, new algorithms have to be designed in order to train it. Foremost, suitable algorithms need to be able to deal with the fact that the actual on-chip reservoir states are not directly observable. In this work, we investigate several options for such a training algorithm and propose a solution in which the complex states of the reservoir can be observed by appropriately setting the readout weights, while iterating over a predefined input sequence. We perform numerical simulations in order to compare our method with an ideal baseline requiring full observability as well as with an established black-box optimization approach (CMA-ES).
