Rose Nakibuule

Enhanced Infield Agriculture with Interpretable Machine Learning Approaches for Crop Classification

Aug 22, 2024

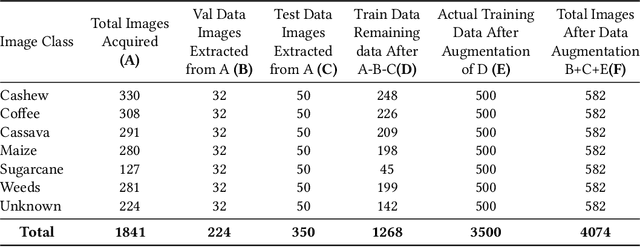

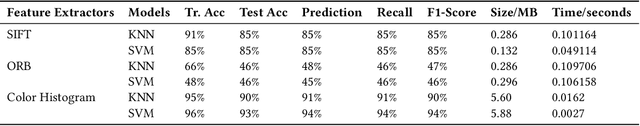

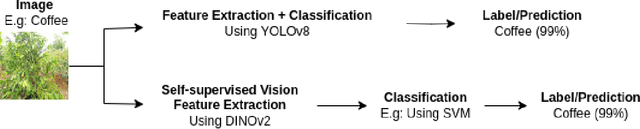

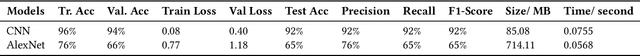

Abstract: The growing popularity of Artificial Intelligence in recent years has driven a surge of interest in image classification, especially in the agricultural sector. Computer Vision, Machine Learning (ML), and Deep Learning (DL) have transformed the sector and produced new techniques for crop classification in the field. Despite extensive research on image classification techniques, most studies have limitations such as low accuracy, limited use of data, and no reporting of model size or prediction time. The most significant limitation is the lack of model explainability. This research evaluates four approaches to crop classification: traditional ML with handcrafted feature extraction methods (SIFT, ORB, and Color Histogram); a custom-designed CNN and an established DL architecture, AlexNet; transfer learning on five models pre-trained on ImageNet (EfficientNetV2, ResNet152V2, Xception, Inception-ResNetV2, and MobileNetV3); and cutting-edge foundation models, namely YOLOv8 and DINOv2, a self-supervised Vision Transformer model. All models performed well, but Xception generalized best, achieving 98% accuracy on the test data with a model size of 80.03 MB and a prediction time of 0.0633 seconds. A key aspect of this research was the application of Explainable AI to explain the predictions of all the models. This paper presents the explainability of the Xception model with LIME, SHAP, and GradCAM, supporting transparency and trust in the models' predictions. The study highlights the importance of selecting a model according to task-specific needs. It also underscores the role of explainability in deploying AI in agriculture, providing information that can help improve AI-driven crop management strategies.
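Of the handcrafted features named in the abstract, the color histogram is the simplest to illustrate. The snippet below is a minimal, dependency-free sketch of the general technique, not the authors' implementation: the function name `color_histogram`, the bin count, and the toy image are all assumptions made for illustration, and a real pipeline would more likely compute this with an image library before passing the vector to a traditional ML classifier.

```python
from typing import Iterable, List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255


def color_histogram(pixels: Iterable[Pixel], bins: int = 8) -> List[float]:
    """Return a normalized per-channel color histogram as one flat vector.

    The vector has 3 * bins entries: R bins, then G bins, then B bins.
    Each channel's bins sum to 1.0, so images of different sizes
    produce comparable feature vectors.
    """
    if not 1 <= bins <= 256:
        raise ValueError("bins must be in 1..256")
    counts = [[0] * bins for _ in range(3)]
    total = 0
    for pixel in pixels:
        for channel, value in enumerate(pixel):
            # Map an intensity in 0..255 to a bin index in 0..bins-1.
            counts[channel][value * bins // 256] += 1
        total += 1
    if total == 0:
        raise ValueError("image has no pixels")
    return [c / total for channel_counts in counts for c in channel_counts]


# Hypothetical 2x2 "image": three pure-green pixels and one black pixel.
toy_image = [(0, 255, 0), (0, 255, 0), (0, 255, 0), (0, 0, 0)]
features = color_histogram(toy_image, bins=4)
print(len(features))   # 12 values: 4 bins for each of R, G, B
print(features[4:8])   # green channel: [0.25, 0.0, 0.0, 0.75]
```

The green channel concentrates in the highest bin for this toy input, which is the kind of signal a color-based feature gives a classifier for leaf-dominated images. Unlike the explanations produced by LIME, SHAP, or GradCAM for a deep model, each entry of this vector is directly interpretable.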
