Andrew Elliott

Individualised Counterfactual Examples Using Conformal Prediction Intervals

May 28, 2025

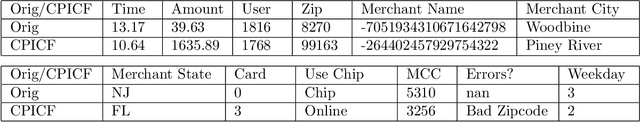

Abstract: Counterfactual explanations for black-box models aim to provide insight into an algorithmic decision to its recipient. For a binary classification problem an individual counterfactual details which features might be changed for the model to infer the opposite class. High-dimensional feature spaces that are typical of machine learning classification models admit many possible counterfactual examples to a decision, and so it is important to identify additional criteria to select the most useful counterfactuals. In this paper, we explore the idea that the counterfactuals should be maximally informative when considering the knowledge of a specific individual about the underlying classifier. To quantify this information gain we explicitly model the knowledge of the individual, and assess the uncertainty of predictions which the individual makes by the width of a conformal prediction interval. Regions of feature space where the prediction interval is wide correspond to areas where the confidence in decision making is low, and an additional counterfactual example might be more informative to an individual. To explore and evaluate our individualised conformal prediction interval counterfactuals (CPICFs), first we present a synthetic data set on a hypercube which allows us to fully visualise the decision boundary, conformal intervals via three different methods, and resultant CPICFs. Second, in this synthetic data set we explore the impact of a single CPICF on the knowledge of an individual locally around the original query. Finally, in both our synthetic data set and a complex real-world data set with a combination of continuous and discrete variables, we measure the utility of these counterfactuals via data augmentation, testing the performance on a held-out set.
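To make the idea of using interval width as an uncertainty measure concrete, the following is a minimal sketch of a generic normalised split-conformal interval. It is not taken from the paper and is not necessarily one of the three methods mentioned in the abstract. The names `conformal_quantile`, `interval_widths`, `residuals_cal`, `spread_cal` and `spread_query` are hypothetical, and the per-point difficulty estimates (`spread_*`) are assumed to come from some auxiliary model.

```python
import numpy as np

def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of calibration scores."""
    scores = np.sort(np.asarray(scores, dtype=float))
    n = scores.size
    rank = int(np.ceil((n + 1) * (1 - alpha)))
    # With too few calibration points the interval is unbounded.
    return np.inf if rank > n else float(scores[rank - 1])

def interval_widths(spread_query, residuals_cal, spread_cal, alpha=0.1):
    """Width of normalised split-conformal intervals at each query point.

    residuals_cal: calibration residuals y - prediction (assumed given).
    spread_cal, spread_query: positive difficulty estimates (assumed given).
    """
    scores = np.abs(residuals_cal) / spread_cal
    q = conformal_quantile(scores, alpha)
    return 2.0 * q * np.asarray(spread_query, dtype=float)

# Toy calibration data (made up for illustration only).
residuals_cal = np.array([0.1, -0.2, 0.3, -0.4, 0.5, -0.6, 0.7, -0.8, 0.9])
spread_cal = np.full(9, 0.1)

# Two candidate regions: one where the model is confident, one where it is not.
widths = interval_widths(np.array([0.05, 0.5]), residuals_cal, spread_cal, alpha=0.25)

# The candidate with the widest interval is where an extra example may help most.
most_uncertain = int(np.argmax(widths))
```

Under this sketch, a counterfactual search would favour candidates with large `widths`, which mirrors the abstract's intuition that wide intervals mark regions where an additional example might be more informative.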

L2G2G: a Scalable Local-to-Global Network Embedding with Graph Autoencoders

Feb 02, 2024

Abstract: For analysing real-world networks, graph representation learning is a popular tool. These methods, such as a graph autoencoder (GAE), typically rely on low-dimensional representations, also called embeddings, which are obtained through minimising a loss function; these embeddings are used with a decoder for downstream tasks such as node classification and edge prediction. While GAEs tend to be fairly accurate, they suffer from scalability issues. For improved speed, a Local2Global approach, which combines graph patch embeddings based on eigenvector synchronisation, was shown to be fast and achieve good accuracy. Here we propose L2G2G, a Local2Global method which improves GAE accuracy without sacrificing scalability. This improvement is achieved by dynamically synchronising the latent node representations, while training the GAEs. It also benefits from the decoder computing only a local patch loss. Hence, aligning the local embeddings in each epoch utilises more information from the graph than a single post-training alignment does, while maintaining scalability. We illustrate on synthetic benchmarks, as well as real-world examples, that L2G2G achieves higher accuracy than the standard Local2Global approach and scales efficiently on the larger data sets. We find that for large and dense networks, it even outperforms the slow, but assumed more accurate, GAEs.

SaGess: Sampling Graph Denoising Diffusion Model for Scalable Graph Generation

Jun 29, 2023

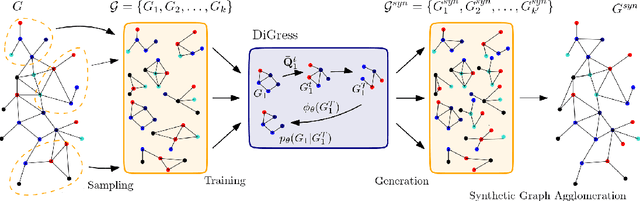

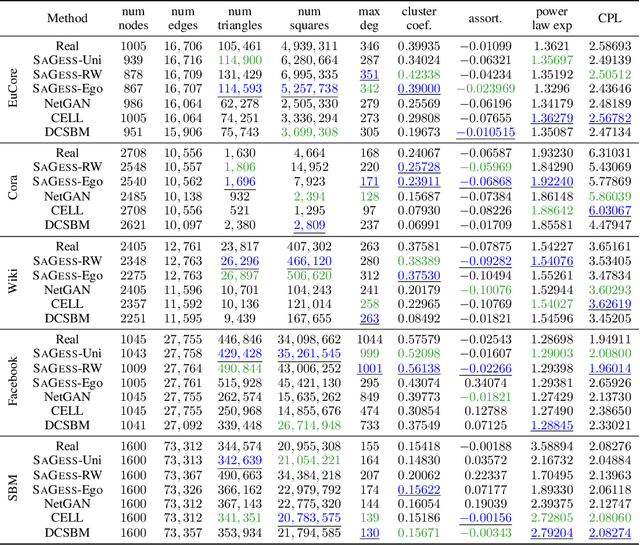

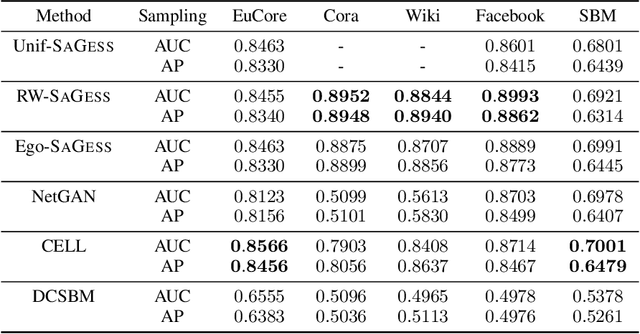

Abstract: Over recent years, denoising diffusion generative models have come to be considered as state-of-the-art methods for synthetic data generation, especially in the case of generating images. These approaches have also proved successful in other applications such as tabular and graph data generation. However, due to computational complexity, to this date, the application of these techniques to graph data has been restricted to small graphs, such as those used in molecular modeling. In this paper, we propose SaGess, a discrete denoising diffusion approach, which is able to generate large real-world networks by augmenting a diffusion model (DiGress) with a generalized divide-and-conquer framework. The algorithm is capable of generating larger graphs by sampling a covering of subgraphs of the initial graph in order to train DiGress. SaGess then constructs a synthetic graph using the subgraphs that have been generated by DiGress. We evaluate the quality of the synthetic data sets against several competitor methods by comparing graph statistics between the original and synthetic samples, as well as evaluating the utility of the synthetic data set produced by using it to train a task-driven model, namely link prediction. In our experiments, SaGess outperforms most of the one-shot state-of-the-art graph generating methods by a significant factor, both on the graph metrics and on the link prediction task.

TAPAS: a Toolbox for Adversarial Privacy Auditing of Synthetic Data

Nov 12, 2022

Abstract: Personal data collected at scale promises to improve decision-making and accelerate innovation. However, sharing and using such data raises serious privacy concerns. A promising solution is to produce synthetic data, artificial records to share instead of real data. Since synthetic records are not linked to real persons, this intuitively prevents classical re-identification attacks. However, this is insufficient to protect privacy. We here present TAPAS, a toolbox of attacks to evaluate synthetic data privacy under a wide range of scenarios. These attacks include generalizations of prior works and novel attacks. We also introduce a general framework for reasoning about privacy threats to synthetic data and showcase TAPAS on several examples.

DAMNETS: A Deep Autoregressive Model for Generating Markovian Network Time Series

Mar 28, 2022

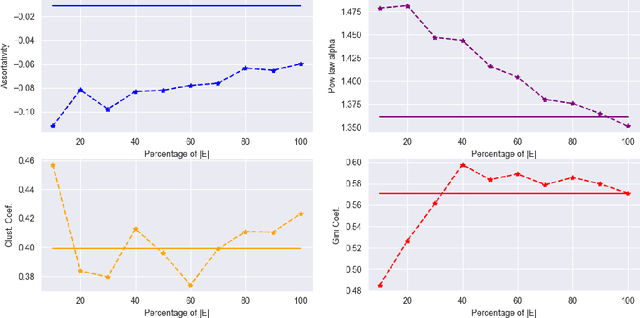

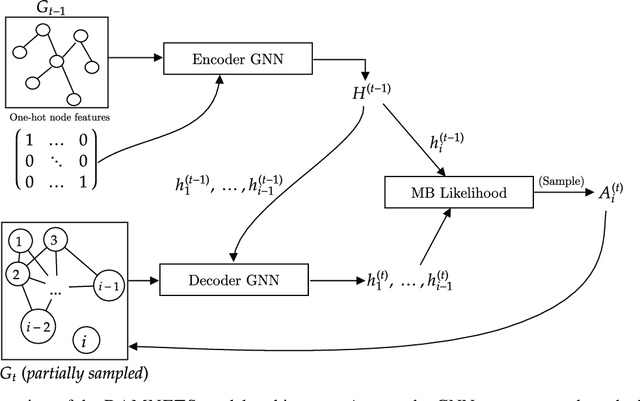

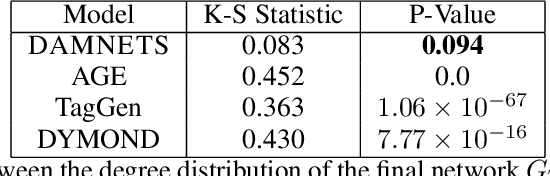

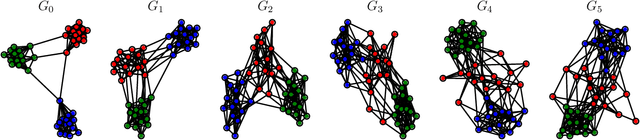

Abstract: In this work, we introduce DAMNETS, a deep generative model for Markovian network time series. Time series of networks are found in many fields such as trade or payment networks in economics, contact networks in epidemiology or social media posts over time. Generative models of such data are useful for Monte-Carlo estimation and data set expansion, which is of interest for both data privacy and model fitting. Using recent ideas from the Graph Neural Network (GNN) literature, we introduce a novel GNN encoder-decoder structure in which an encoder GNN learns a latent representation of the input graph, and a decoder GNN uses this representation to simulate the network dynamics. We show using synthetic data sets that DAMNETS can replicate features of network topology across time observed in the real world, such as changing community structure and preferential attachment. DAMNETS outperforms competing methods on all of our measures of sample quality over several real and synthetic data sets.

Motif-Based Spectral Clustering of Weighted Directed Networks

Apr 02, 2020

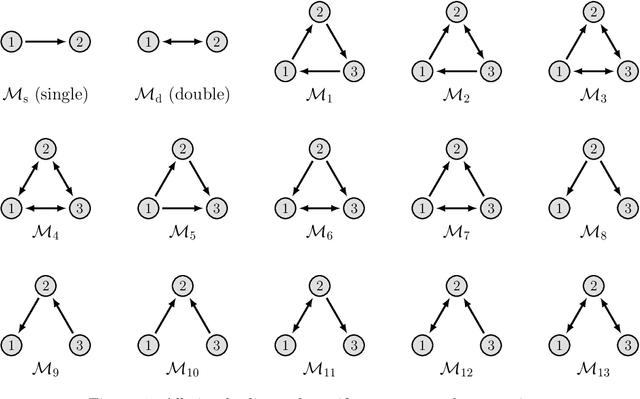

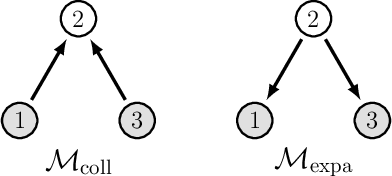

Abstract: Clustering is an essential technique for network analysis, with applications in a diverse range of fields. Although spectral clustering is a popular and effective method, it fails to consider higher-order structure and can perform poorly on directed networks. One approach is to capture and cluster higher-order structures using motif adjacency matrices. However, current formulations fail to take edge weights into account, and thus are somewhat limited when weight is a key component of the network under study. We address these shortcomings by exploring motif-based weighted spectral clustering methods. We present new and computationally useful matrix formulae for motif adjacency matrices on weighted networks, which can be used to construct efficient algorithms for any anchored or non-anchored motif on three nodes. In a very sparse regime, our proposed method can handle graphs with five million nodes and tens of millions of edges in under ten minutes. We further use our framework to construct a motif-based approach for clustering bipartite networks. We provide comprehensive experimental results, demonstrating (i) the scalability of our approach, (ii) advantages of higher-order clustering on synthetic examples, and (iii) the effectiveness of our techniques on a variety of real-world data sets. We conclude that motif-based spectral clustering is a valuable tool for analysis of directed and bipartite weighted networks, which is also scalable and easy to implement.
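As a small illustration of what a motif adjacency matrix is, the sketch below covers only the simplest case: the triangle motif on an unweighted, undirected graph, where entry (i, j) counts the triangles containing both nodes i and j. It does not reproduce the paper's weighted or directed formulae, and the function name `triangle_motif_adjacency` is hypothetical.

```python
import numpy as np

def triangle_motif_adjacency(A):
    """Triangle motif adjacency for an undirected, unweighted graph.

    Assumes A is a symmetric adjacency matrix. Entry (i, j) of the result is
    the number of triangles that contain both node i and node j.
    """
    A = (np.asarray(A) != 0).astype(int)  # binarise; astype returns a copy
    np.fill_diagonal(A, 0)                # ignore self-loops
    # (A @ A)[i, j] counts common neighbours of i and j; the elementwise
    # product with A keeps only pairs that are themselves connected.
    return A * (A @ A)

# Toy graph: a triangle on nodes 0, 1, 2 plus a pendant edge 2-3.
A = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
])
M = triangle_motif_adjacency(A)
```

In this toy graph the pendant edge 2-3 takes part in no triangle, so it receives zero weight in `M`. Motif-based spectral clustering would then apply a standard spectral method to `M` in place of `A`.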

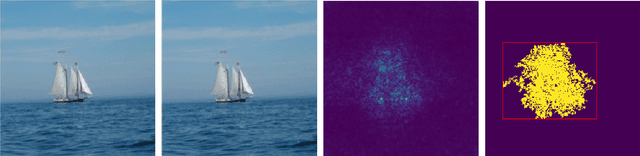

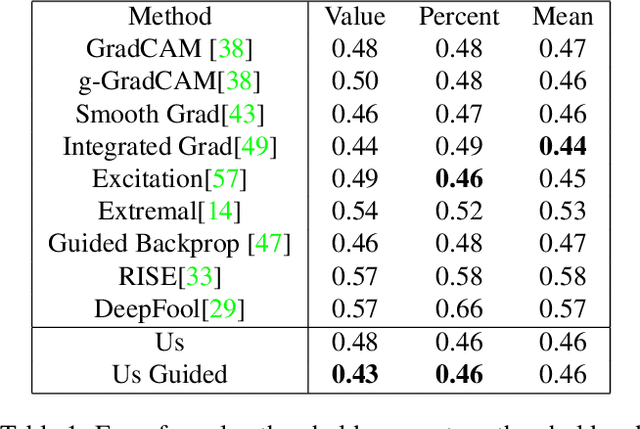

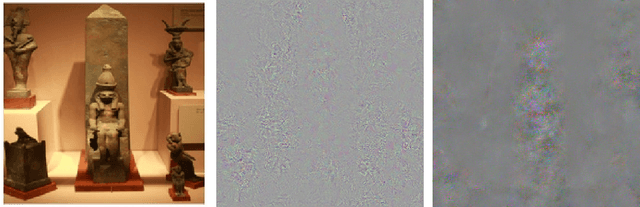

Adversarial Perturbations on the Perceptual Ball

Dec 19, 2019

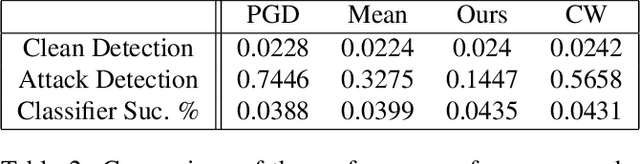

Abstract: We present a simple regularisation of Adversarial Perturbations based upon the perceptual loss. While the resulting perturbations remain imperceptible to the human eye, they differ from existing adversarial perturbations in two important regards: (i) our resulting perturbations are semi-sparse, and typically make alterations to objects and regions of interest leaving the background static; (ii) our perturbations do not alter the distribution of data in the image and are undetectable by state-of-the-art methods. As such this work reinforces the connection between explainable AI and adversarial perturbations. We show the merits of our approach by evaluating on standard explainability benchmarks and by defeating recent tests for detecting adversarial perturbations, substantially decreasing the effectiveness of detecting adversarial perturbations.
