Adversarial Perturbations on the Perceptual Ball

Paper and Code

Dec 19, 2019

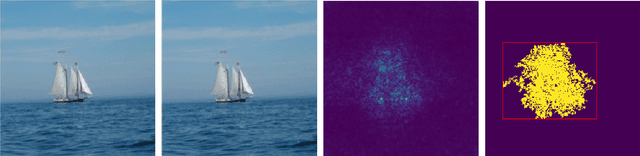

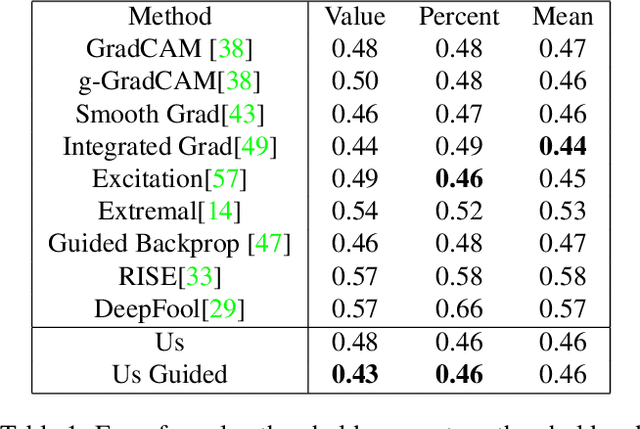

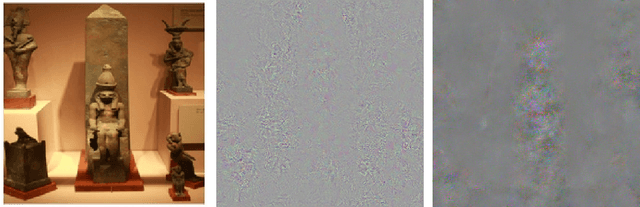

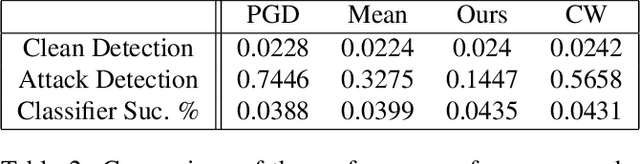

We present a simple regularisation of adversarial perturbations based upon the perceptual loss. While the resulting perturbations remain imperceptible to the human eye, they differ from existing adversarial perturbations in two important regards: (i) our resulting perturbations are semi-sparse, and typically make alterations to objects and regions of interest, leaving the background static; (ii) our perturbations do not alter the distribution of data in the image and are undetectable by state-of-the-art methods. As such, this work reinforces the connection between explainable AI and adversarial perturbations. We show the merits of our approach by evaluating on standard explainability benchmarks and by defeating recent tests for detecting adversarial perturbations, substantially decreasing their effectiveness.
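To make the idea of regularising a perturbation with a perceptual loss more concrete, here is a minimal toy sketch. It is not the authors' implementation: the paper works with activations of a deep network, whereas this example assumes a single `tanh` layer as a stand-in feature extractor and a linear read-out as the classifier. All names (`features`, `objective`, `attack`, `lam`, `lr`) are hypothetical and chosen for illustration only. The perturbation is pushed to lower the classifier's score for the current label while a penalty keeps the perturbed features close to the original ones.

```python
import math
import random

def features(W, x):
    """Toy stand-in for network activations (assumption): h = tanh(W x)."""
    return [math.tanh(sum(w * xj for w, xj in zip(row, x))) for row in W]

def objective(W, v, x, delta, y, lam):
    """Signed classifier score plus lam times squared feature-space distance."""
    h0 = features(W, x)
    h1 = features(W, [a + b for a, b in zip(x, delta)])
    score = y * sum(vi * hi for vi, hi in zip(v, h1))
    percep = sum((a - b) ** 2 for a, b in zip(h1, h0))
    return score + lam * percep

def attack(W, v, x, y, lam=1.0, lr=0.05, steps=200):
    """Plain gradient descent on the objective; returns the best delta seen."""
    h0 = features(W, x)
    delta = [0.0] * len(x)
    best, best_val = list(delta), objective(W, v, x, delta, y, lam)
    for _ in range(steps):
        h1 = features(W, [a + b for a, b in zip(x, delta)])
        # Gradient w.r.t. the features, passed back through tanh
        # using d/dz tanh(z) = 1 - tanh(z)^2.
        g_h = [(1 - h * h) * (y * vi + 2 * lam * (h - h_ref))
               for h, vi, h_ref in zip(h1, v, h0)]
        # Chain rule through the linear map W gives the gradient w.r.t. delta.
        grad = [sum(W[i][j] * g_h[i] for i in range(len(W)))
                for j in range(len(x))]
        delta = [d - lr * g for d, g in zip(delta, grad)]
        val = objective(W, v, x, delta, y, lam)
        if val < best_val:
            best, best_val = list(delta), val
    return best

# Hypothetical random "model" and input, for illustration only.
random.seed(0)
W = [[random.gauss(0, 0.5) for _ in range(8)] for _ in range(6)]
v = [random.gauss(0, 1) for _ in range(6)]
x = [random.gauss(0, 1) for _ in range(8)]
clean_score = sum(vi * hi for vi, hi in zip(v, features(W, x)))
y = 1 if clean_score >= 0 else -1  # treat the current prediction as the label
delta = attack(W, v, x, y)
```

Raising `lam` in this sketch trades attack strength for a smaller change in feature space; how the paper balances or constrains these terms is not stated in the abstract, so the weighting here is purely an assumption.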
