Andrea Iaboni

CARE-PD: A Multi-Site Anonymized Clinical Dataset for Parkinson's Disease Gait Assessment

Oct 05, 2025

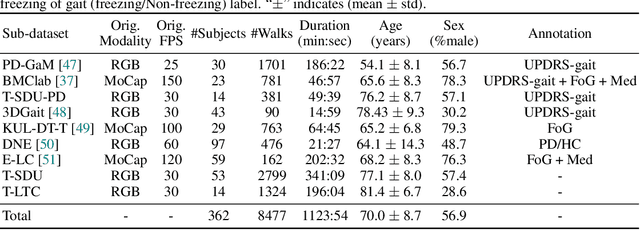

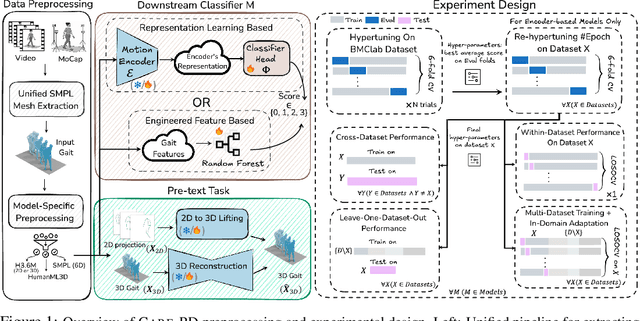

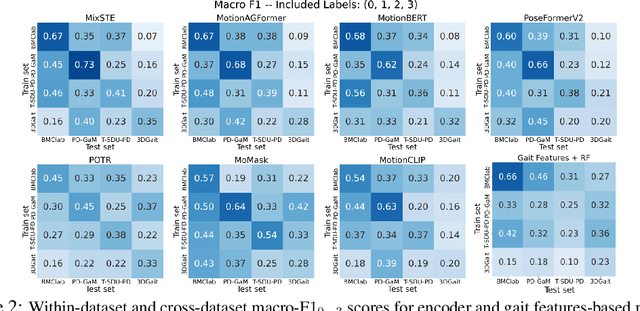

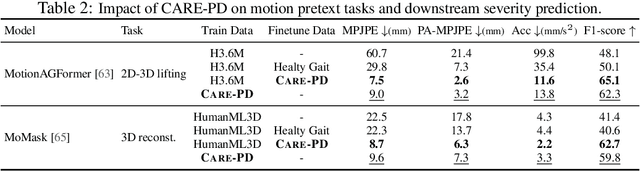

Abstract: Objective gait assessment in Parkinson's Disease (PD) is limited by the absence of large, diverse, and clinically annotated motion datasets. We introduce CARE-PD, the largest publicly available archive of 3D mesh gait data for PD, and the first multi-site collection spanning 9 cohorts from 8 clinical centers. All recordings (RGB video or motion capture) are converted into anonymized SMPL meshes via a harmonized preprocessing pipeline. CARE-PD supports two key benchmarks: supervised clinical score prediction (estimating Unified Parkinson's Disease Rating Scale, UPDRS, gait scores) and unsupervised motion pretext tasks (2D-to-3D keypoint lifting and full-body 3D reconstruction). Clinical prediction is evaluated under four generalization protocols: within-dataset, cross-dataset, leave-one-dataset-out, and multi-dataset in-domain adaptation. To assess clinical relevance, we compare state-of-the-art motion encoders with a traditional gait-feature baseline, finding that encoders consistently outperform handcrafted features. Pretraining on CARE-PD reduces MPJPE (from 60.8 mm to 7.5 mm) and boosts PD severity macro-F1 by 17 percentage points, underscoring the value of clinically curated, diverse training data. CARE-PD and all benchmark code are released for non-commercial research at https://neurips2025.care-pd.ca/.
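For readers unfamiliar with the reconstruction metric quoted above, MPJPE (mean per-joint position error) is the average Euclidean distance between predicted and ground-truth joint positions. The snippet below is a minimal sketch of that definition only; the function name `mpjpe` and the nested-list input layout (frames, then joints, then xyz coordinates) are assumptions made for illustration and are not taken from the CARE-PD benchmark code.

```python
import math


def mpjpe(pred, target):
    """Mean per-joint position error.

    pred, target: lists of frames; each frame is a list of (x, y, z) joints.
    This layout is an assumption for illustration, not the CARE-PD format.
    """
    total = 0.0
    count = 0
    for pred_frame, target_frame in zip(pred, target):
        for p, t in zip(pred_frame, target_frame):
            total += math.dist(p, t)  # Euclidean distance for one joint
            count += 1
    return total / count


# One frame, two joints: the first is exact, the second is off by 5 units.
pred = [[(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]]
target = [[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]
print(mpjpe(pred, target))  # 2.5
```

The result is in whatever unit the joint coordinates use, so coordinates in millimetres give an error in millimetres.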

Depth-Weighted Detection of Behaviours of Risk in People with Dementia using Cameras

Aug 28, 2024

Abstract: The behavioural and psychological symptoms of dementia, such as agitation and aggression, present a significant health and safety risk in residential care settings. Many care facilities have video cameras in place for digital monitoring of public spaces, which can be leveraged to develop an automated behaviours of risk detection system that alerts staff, enabling timely intervention and preventing situations from escalating. However, one of the challenges in our previous study was the presence of false alarms due to obstruction of view by activities happening close to the camera. To address this issue, we proposed a novel depth-weighted loss function to train a customized convolutional autoencoder that assigns equal importance to events happening near to and far from the cameras, thus helping to reduce false alarms and making the method more suitable for real-world deployment. The proposed method was trained using data from nine participants with dementia across three cameras situated in a specialized dementia unit and achieved an area under the receiver operating characteristic curve of 0.852, 0.81 and 0.768 for the three cameras. Ablation analysis was conducted for the individual components of the proposed method, and its performance was investigated for participant-specific and sex-specific behaviours of risk detection. The proposed method performed reasonably well in detecting behaviours of risk in people with dementia, motivating further research toward the development of a behaviours of risk detection system suitable for deployment in video surveillance systems in care facilities.
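The abstract does not give the form of the depth-weighted loss, so the following is only an illustrative sketch of the general idea: scaling each pixel's reconstruction error by its depth so that distant events, which cover fewer pixels, are not drowned out by activity close to the camera. The function name `depth_weighted_mse` and the choice of weight (depth divided by mean depth) are assumptions, not the authors' formulation.

```python
def depth_weighted_mse(frame, recon, depth):
    """Reconstruction error with per-pixel depth weights.

    Assumption (not from the paper): weight = depth / mean depth, so pixels
    far from the camera are up-weighted and near pixels are down-weighted.
    frame, recon, depth: 2D lists of equal shape.
    """
    flat_depth = [d for row in depth for d in row]
    mean_depth = sum(flat_depth) / len(flat_depth)
    total = 0.0
    for f_row, r_row, d_row in zip(frame, recon, depth):
        for f, r, d in zip(f_row, r_row, d_row):
            total += (d / mean_depth) * (f - r) ** 2
    return total / len(flat_depth)


frame = [[1.0, 1.0]]
depth = [[1.0, 3.0]]  # left pixel is near, right pixel is far

near_error = depth_weighted_mse(frame, [[0.0, 1.0]], depth)
far_error = depth_weighted_mse(frame, [[1.0, 0.0]], depth)
print(near_error, far_error)  # 0.25 0.75
```

With this weighting, the same pixel-level mistake costs three times as much on the far pixel as on the near one, whereas an unweighted mean squared error would score both cases at 0.5.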

Benchmarking Skeleton-based Motion Encoder Models for Clinical Applications: Estimating Parkinson's Disease Severity in Walking Sequences

May 28, 2024

Abstract: This study investigates the application of general human motion encoders trained on large-scale human motion datasets for analyzing gait patterns in PD patients. Although these models have learned a wealth of human biomechanical knowledge, their effectiveness in analyzing pathological movements, such as parkinsonian gait, has yet to be fully validated. We propose a comparative framework and evaluate six pre-trained state-of-the-art human motion encoder models on their ability to predict the Movement Disorder Society - Unified Parkinson's Disease Rating Scale (MDS-UPDRS-III) gait scores from motion capture data. We compare these against a traditional gait feature-based predictive model on a recently released large public PD dataset, including PD patients on and off medication. The feature-based model currently shows higher weighted average accuracy, precision, recall, and F1-score. Motion encoder models with closely comparable results demonstrate promise for scalability and efficiency in clinical settings. This potential is underscored by the enhanced performance of the encoder model upon fine-tuning on the PD training set. Four of the six human motion models examined provided prediction scores that were significantly different between on- and off-medication states. This finding reveals the sensitivity of motion encoder models to nuanced clinical changes. It also underscores the necessity for continued customization of these models to better capture disease-specific features, thereby reducing the reliance on labor-intensive feature engineering. Lastly, we establish a benchmark for the analysis of skeleton-based motion encoder models in clinical settings. To the best of our knowledge, this is the first study to provide a benchmark that enables state-of-the-art models to be tested and compete in a clinical context. Code and the benchmark leaderboard are available at code.

Pose2Gait: Extracting Gait Features from Monocular Video of Individuals with Dementia

Aug 22, 2023

Abstract: Video-based ambient monitoring of gait for older adults with dementia has the potential to detect negative changes in health and allow clinicians and caregivers to intervene early to prevent falls or hospitalizations. Computer vision-based pose tracking models can process video data automatically and extract joint locations; however, publicly available models are not optimized for gait analysis on older adults or clinical populations. In this work we train a deep neural network to map from a two-dimensional pose sequence, extracted from a video of an individual walking down a hallway toward a wall-mounted camera, to a set of three-dimensional spatiotemporal gait features averaged over the walking sequence. The data of individuals with dementia used in this work was captured at two sites using a wall-mounted system to collect the video and depth information used to train and evaluate our model. Our Pose2Gait model is able to extract velocity and step length values from the video that are correlated with the features from the depth camera, with Spearman's correlation coefficients of 0.83 and 0.60 respectively, showing that three-dimensional spatiotemporal features can be predicted from monocular video. Future work remains to improve the accuracy of other features, such as step time and step width, and test the utility of the predicted values for detecting meaningful changes in gait during longitudinal ambient monitoring.
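The agreement between video-predicted and depth-derived gait features above is reported as Spearman's correlation, which is the Pearson correlation computed on ranks. The sketch below shows that computation using only the standard library; the helper names and the toy velocity values are invented for illustration and are not Pose2Gait data.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-walk velocities (m/s): depth-camera reference vs. prediction.
reference = [0.42, 0.55, 0.61, 0.70, 0.88]
predicted = [0.40, 0.58, 0.57, 0.75, 0.80]
print(round(spearman(reference, predicted), 3))  # 0.9
```

Because only ranks matter, the coefficient rewards predictions that order walks correctly even if their absolute values are biased.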

Undersampling and Cumulative Class Re-decision Methods to Improve Detection of Agitation in People with Dementia

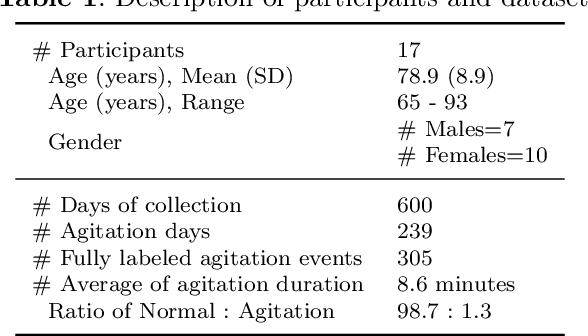

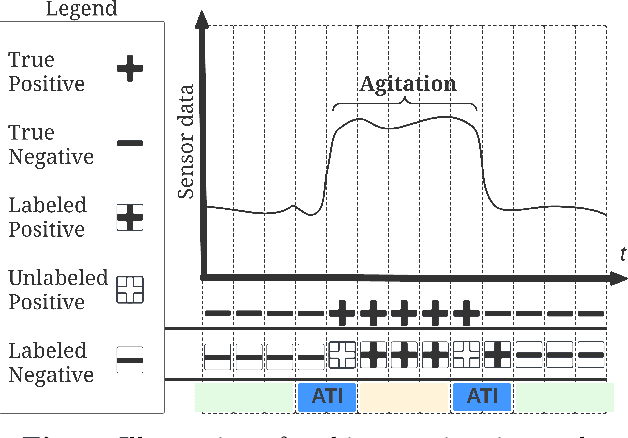

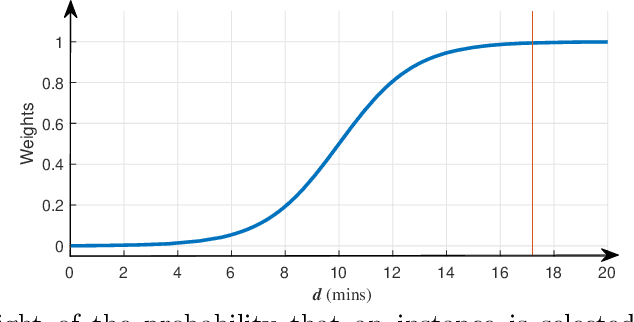

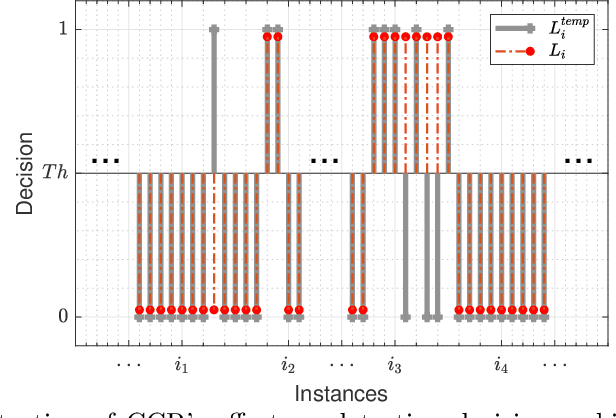

Feb 07, 2023

Abstract: Agitation is one of the most prevalent symptoms in people with dementia (PwD) and can put their own and their caregivers' safety at risk. Developing objective agitation detection approaches is important to support the health and safety of PwD living in a residential setting. In a previous study, we collected multimodal wearable sensor data from 17 participants for 600 days and developed machine learning models for predicting agitation in one-minute windows. However, the dataset has significant limitations, such as class imbalance and potentially imprecise labels, because agitation occurs much more rarely than normal behaviours. In this paper, we first implement different undersampling methods to address the imbalance problem, and conclude that only 20% of the normal behaviour data is sufficient to train a competitive agitation detection model. Then, we design a weighted undersampling method to evaluate the manual labeling mechanism given the ambiguous time interval (ATI) assumption. After that, a postprocessing method, cumulative class re-decision (CCR), is proposed based on the historical sequential information and continuity characteristic of agitation, improving decision-making performance for the potential application of an agitation detection system. The results show that a combination of undersampling and CCR improves the best F1-score by 26.6% and other metrics to varying degrees, with less training time and data used, and suggests a way to find the potential range of an optimal threshold reference for clinical purposes.
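As a rough illustration of the undersampling step, the sketch below randomly keeps a fixed fraction of the majority (normal behaviour) class while retaining every minority (agitation) sample. It shows plain random undersampling only; the weighted undersampling and CCR methods from the paper are not reproduced here, and the function name, label encoding (0 for normal, 1 for agitation) and default values are assumptions.

```python
import random


def undersample_majority(samples, labels, keep_fraction=0.2,
                         majority_label=0, seed=0):
    """Keep a random fraction of majority-class samples and all other samples.

    Hypothetical helper: the label encoding and defaults are illustrative.
    """
    rng = random.Random(seed)
    majority_idx = [i for i, y in enumerate(labels) if y == majority_label]
    minority_idx = [i for i, y in enumerate(labels) if y != majority_label]
    # Keep at least one majority sample when any exist, never more than exist.
    n_keep = min(len(majority_idx),
                 max(1, round(keep_fraction * len(majority_idx))))
    kept = rng.sample(majority_idx, n_keep)
    idx = sorted(kept + minority_idx)  # preserve the original time order
    return [samples[i] for i in idx], [labels[i] for i in idx]


# Toy data: 100 normal windows and 5 agitation windows.
labels = [0] * 100 + [1] * 5
samples = list(range(len(labels)))
sub_samples, sub_labels = undersample_majority(samples, labels)
print(sub_labels.count(0), sub_labels.count(1))  # 20 5
```

Fixing the seed keeps the selected subset reproducible across runs, which matters when comparing models trained on different keep fractions.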

Privacy-Protecting Behaviours of Risk Detection in People with Dementia using Videos

Dec 20, 2022

Abstract: People living with dementia often exhibit behavioural and psychological symptoms of dementia that can put their and others' safety at risk. Existing video surveillance systems in long-term care facilities can be used to monitor such behaviours of risk and alert staff to prevent potential injuries or, in some cases, death. However, these behaviours of risk events are heterogeneous and infrequent in comparison to normal events. Moreover, analyzing raw videos can also raise privacy concerns. In this paper, we present two novel privacy-protecting video-based anomaly detection approaches to detect behaviours of risk in people with dementia. We either extract body pose information as skeletons or use semantic segmentation masks to replace multiple humans in the scene with their semantic boundaries. Our work differs from most existing approaches for video anomaly detection, which focus on appearance-based features that can put a person's privacy at risk and are also susceptible to pixel-based noise, including illumination and viewing direction. We used anonymized videos of normal activities to train customized spatio-temporal convolutional autoencoders and identify behaviours of risk as anomalies. We show our results on a real-world study conducted in a dementia care unit with patients with dementia, containing approximately 21 hours of normal activities data for training and 9 hours of data containing normal and behaviours of risk events for testing. We compared our approaches with the original RGB videos and obtained an equivalent area under the receiver operating characteristic curve performance of 0.807 for the skeleton-based approach and 0.823 for the segmentation mask-based approach. This is one of the first studies to incorporate privacy for the detection of behaviours of risk in people with dementia.
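In autoencoder-based anomaly detection of this kind, each test window typically receives an anomaly score (for example, its reconstruction error), and the area under the ROC curve summarizes how well those scores separate anomalous from normal windows. The sketch below computes that area through its pairwise-ranking interpretation; the function name and the toy scores are assumptions for illustration, not values from the study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen positive (label 1) scores
    higher than a randomly chosen negative (label 0); ties count as half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical reconstruction errors; label 1 marks a behaviour-of-risk window.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 0.75
```

This quadratic-time form is fine for small examples; a sort-based implementation would be preferable for long recordings.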

Estimating Parkinsonism Severity in Natural Gait Videos of Older Adults with Dementia

May 07, 2021

Abstract: Drug-induced parkinsonism affects many older adults with dementia, often causing gait disturbances. New advances in vision-based human pose-estimation have opened possibilities for frequent and unobtrusive analysis of gait in residential settings. This work proposes novel spatial-temporal graph convolutional network (ST-GCN) architectures and training procedures to predict clinical scores of parkinsonism in gait from video of individuals with dementia. We propose a two-stage training approach consisting of a self-supervised pretraining stage that encourages the ST-GCN model to learn about gait patterns before predicting clinical scores in the finetuning stage. The proposed ST-GCN models are evaluated on joint trajectories extracted from video and are compared against traditional (ordinal, linear, random forest) regression models and temporal convolutional network baselines. Three 2D human pose-estimation libraries (OpenPose, Detectron, AlphaPose) and the Microsoft Kinect (2D and 3D) are used to extract joint trajectories of 4787 natural walking bouts from 53 older adults with dementia. A subset of 399 walks from 14 participants is annotated with scores of parkinsonism severity on the gait criteria of the Unified Parkinson's Disease Rating Scale (UPDRS) and the Simpson-Angus Scale (SAS). Our results demonstrate that ST-GCN models operating on 3D joint trajectories extracted from the Kinect consistently outperform all other models and feature sets. Prediction of parkinsonism scores in natural walking bouts of unseen participants remains a challenging task, with the best models achieving macro-averaged F1-scores of 0.53 +/- 0.03 and 0.40 +/- 0.02 for UPDRS-gait and SAS-gait, respectively. A pre-trained model and demo code for this work are available at https://github.com/TaatiTeam/stgcn_parkinsonism_prediction.
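The macro-averaged F1-score reported above gives every severity class equal weight regardless of how many walks fall in it, which matters when higher scores are rare. The following is a minimal sketch of the metric itself; the function name and the toy labels are illustrative and unrelated to the study's data.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


# Toy three-class example (e.g. gait scores 0, 1, 2).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
print(round(macro_f1(y_true, y_pred), 4))  # 0.8222
```

Here the per-class F1 values are 1.0, 0.667 and 0.8, so one misclassified walk in a small class lowers the macro average noticeably.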

Tracking agitation in people living with dementia in a care environment

Apr 26, 2021

Abstract: Agitation is a symptom that communicates distress in people living with dementia (PwD), and that can place them and others at risk. In a long-term care (LTC) environment, care staff track and document these symptoms to detect changes in resident status, to assess risk, and to monitor for response to interventions. However, this documentation can be time-consuming, and due to staffing constraints, episodes of agitation may go unobserved. This brings into question the reliability of these assessments, and presents an opportunity for technology to help track and monitor behavioural symptoms in dementia. In this paper, we present the outcomes of a 2-year real-world study performed in a dementia unit, where a multi-modal wearable device was worn by 20 PwD. In line with a commonly used clinical documentation tool, this large multi-modal time-series dataset was analyzed to track the presence of episodes of agitation in 8-hour nursing shifts. The development of a baseline classification model (AUC=0.717) on this dataset and its subsequent improvement (AUC=0.779) lay the groundwork for automating the process of annotating agitation events in nursing charts.
