Kristine Newman

Depth-Weighted Detection of Behaviours of Risk in People with Dementia using Cameras

Aug 28, 2024

Abstract: The behavioural and psychological symptoms of dementia, such as agitation and aggression, present a significant health and safety risk in residential care settings. Many care facilities have video cameras in place for digital monitoring of public spaces, which can be leveraged to develop an automated behaviours of risk detection system that alerts staff, enabling timely intervention and preventing situations from escalating. However, one of the challenges in our previous study was the presence of false alarms caused by activities close to the camera obstructing the view. To address this issue, we proposed a novel depth-weighted loss function to train a customized convolutional autoencoder so that events happening near and far from the cameras are given equivalent importance, thereby helping to reduce false alarms and making the method more suitable for real-world deployment. The proposed method was trained using data from nine participants with dementia across three cameras situated in a specialized dementia unit and achieved an area under the receiver operating characteristic curve of $0.852$, $0.81$ and $0.768$ for the three cameras. Ablation analysis was conducted for the individual components of the proposed method, and its performance was investigated for participant-specific and sex-specific behaviours of risk detection. The proposed method performed reasonably well in detecting behaviours of risk in people with dementia, motivating further research toward a behaviours of risk detection system suitable for deployment in video surveillance systems in care facilities.
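The idea of a depth-weighted reconstruction loss can be illustrated with a minimal sketch. The abstract does not give the actual formula, so the weighting below is an assumption: each pixel's squared error is scaled by its distance from the camera, so that far-away events, which cover fewer pixels, are not drowned out by near-camera activity. The function name `depth_weighted_mse` and the `depth` input (for example, the output of a depth estimator) are hypothetical.

```python
def depth_weighted_mse(frame, reconstruction, depth, eps=1e-8):
    """Weighted mean squared error over a 2-D frame.

    frame, reconstruction, depth: equally sized lists of rows of floats.
    depth holds a per-pixel distance from the camera (hypothetical input).
    ASSUMPTION: weight = depth; the paper's weighting may differ.
    """
    weighted_error = 0.0
    total_weight = 0.0
    for row_x, row_y, row_d in zip(frame, reconstruction, depth):
        for x, y, d in zip(row_x, row_y, row_d):
            weighted_error += d * (x - y) ** 2  # far pixels count more
            total_weight += d
    # Normalize so the loss stays on the scale of an ordinary MSE.
    return weighted_error / (total_weight + eps)


# Same per-pixel error, placed once at a near pixel and once at a far pixel.
target = [[1.0, 1.0]]
depth = [[1.0, 3.0]]
near_error = depth_weighted_mse(target, [[0.0, 1.0]], depth)
far_error = depth_weighted_mse(target, [[1.0, 0.0]], depth)
```

Under this assumed weighting, the same reconstruction error contributes more to the loss when it occurs far from the camera than when it occurs close to it.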

Undersampling and Cumulative Class Re-decision Methods to Improve Detection of Agitation in People with Dementia

Feb 07, 2023

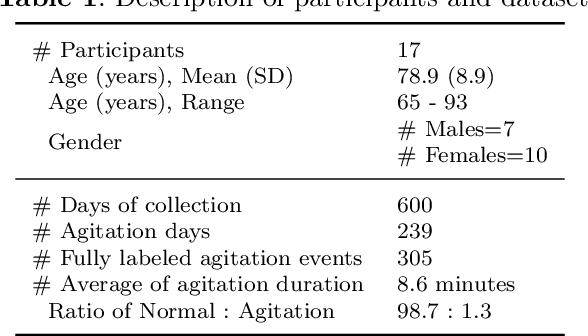

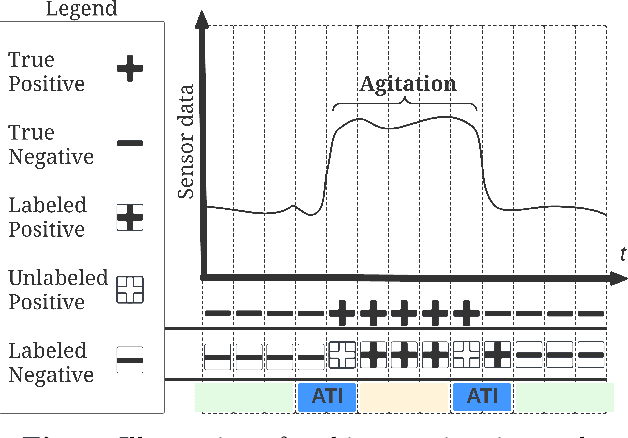

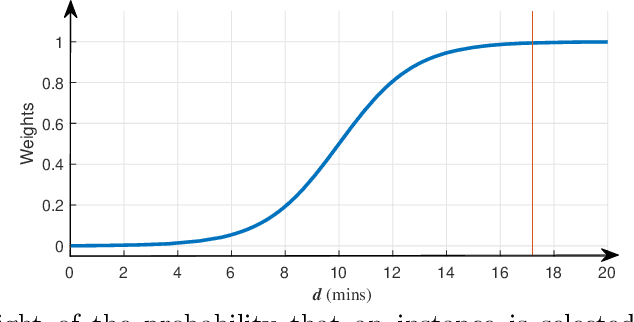

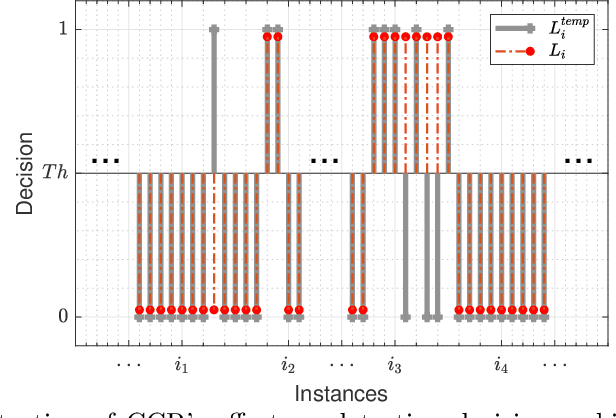

Abstract: Agitation is one of the most prevalent symptoms in people with dementia (PwD) and can put their own and their caregivers' safety at risk. Developing objective agitation detection approaches is important to support the health and safety of PwD living in a residential setting. In a previous study, we collected multimodal wearable sensor data from 17 participants for 600 days and developed machine learning models for predicting agitation in one-minute windows. However, the dataset has significant limitations, such as class imbalance and potentially imprecise labels, because agitation occurs much more rarely than normal behaviours. In this paper, we first implement different undersampling methods to address the imbalance problem and conclude that only 20% of the normal behaviour data is sufficient to train a competitive agitation detection model. Then, we design a weighted undersampling method to evaluate the manual labeling mechanism given the ambiguous time interval (ATI) assumption. After that, we propose a postprocessing method, cumulative class re-decision (CCR), based on the historical sequential information and the continuity characteristic of agitation, which improves decision-making performance for a potential agitation detection system. The results show that a combination of undersampling and CCR improves the best F1-score by 26.6% and other metrics to varying degrees, with less training time and data used, and suggests a way to find the potential range of an optimal threshold reference for clinical purposes.
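The two steps described above can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's algorithm: `undersample_majority` is plain random undersampling that keeps 20% of the normal windows, and `cumulative_redecision` is a hypothetical stand-in for CCR that re-decides each window from the mean score of the most recent windows, reflecting the idea that agitation is continuous in time. Both function names, the `window` size and the `threshold` are assumptions.

```python
import random


def undersample_majority(samples, labels, keep_fraction=0.2, seed=0):
    """Keep every agitation window (label 1) and a random fraction of normal ones (label 0)."""
    rng = random.Random(seed)
    majority = [i for i, y in enumerate(labels) if y == 0]
    minority = [i for i, y in enumerate(labels) if y == 1]
    n_keep = max(1, round(keep_fraction * len(majority))) if majority else 0
    kept = sorted(rng.sample(majority, n_keep) + minority)
    return [samples[i] for i in kept], [labels[i] for i in kept]


def cumulative_redecision(scores, window=3, threshold=0.5):
    """ASSUMED re-decision rule: label a window from the mean of the last `window` scores."""
    decisions = []
    for t in range(len(scores)):
        history = scores[max(0, t - window + 1): t + 1]
        decisions.append(1 if sum(history) / len(history) >= threshold else 0)
    return decisions


# Ten normal windows and two agitation windows.
samples = list(range(12))
labels = [0] * 10 + [1] * 2
balanced_samples, balanced_labels = undersample_majority(samples, labels)

# Per-window agitation scores from some classifier (made-up values).
decisions = cumulative_redecision([0.9, 0.2, 0.9, 0.9, 0.1, 0.1])
```

In this sketch an isolated low score surrounded by high scores is re-decided as agitation, while a sustained run of low scores eventually flips the decision back to normal.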

Privacy-Protecting Behaviours of Risk Detection in People with Dementia using Videos

Dec 20, 2022

Abstract: People living with dementia often exhibit behavioural and psychological symptoms of dementia that can put their own and others' safety at risk. Existing video surveillance systems in long-term care facilities can be used to monitor such behaviours of risk and alert staff, helping to prevent potential injuries or, in some cases, death. However, these behaviours of risk events are heterogeneous and infrequent in comparison to normal events. Moreover, analyzing raw videos can raise privacy concerns. In this paper, we present two novel privacy-protecting video-based anomaly detection approaches to detect behaviours of risk in people with dementia. We either extracted body pose information as skeletons or used semantic segmentation masks to replace multiple humans in the scene with their semantic boundaries. Our work differs from most existing approaches for video anomaly detection, which focus on appearance-based features that can put the privacy of a person at risk and are also susceptible to pixel-based noise, including illumination and viewing direction. We used anonymized videos of normal activities to train customized spatio-temporal convolutional autoencoders and identify behaviours of risk as anomalies. We show our results on a real-world study conducted in a dementia care unit with patients with dementia, containing approximately 21 hours of normal activities data for training and 9 hours of data containing normal and behaviours of risk events for testing. We compared our approaches with the original RGB videos and obtained an equivalent area under the receiver operating characteristic curve of 0.807 for the skeleton-based approach and 0.823 for the segmentation mask-based approach. This is one of the first studies to incorporate privacy for the detection of behaviours of risk in people with dementia.
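For readers unfamiliar with the metric, the area under the receiver operating characteristic curve can be computed directly from anomaly scores as the probability that a randomly chosen anomalous item scores higher than a randomly chosen normal one. The sketch below assumes each test item has a scalar anomaly score (for an autoencoder, typically its reconstruction error) and a binary label; the function name `roc_auc` and the example values are illustrative and not taken from the study.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of (anomalous, normal) pairs ranked correctly; ties count half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up anomaly scores: label 1 marks a behaviours of risk event.
example_auc = roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
```

This pairwise form is quadratic in the number of items, which is fine for illustration; rank-based implementations give the same value more efficiently.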

Tracking agitation in people living with dementia in a care environment

Apr 26, 2021

Abstract: Agitation is a symptom that communicates distress in people living with dementia (PwD) and can place them and others at risk. In a long-term care (LTC) environment, care staff track and document these symptoms to detect changes in resident status, to assess risk, and to monitor response to interventions. However, this documentation can be time-consuming, and due to staffing constraints, episodes of agitation may go unobserved. This brings into question the reliability of these assessments and presents an opportunity for technology to help track and monitor behavioural symptoms in dementia. In this paper, we present the outcomes of a 2-year real-world study performed in a dementia unit, where a multi-modal wearable device was worn by $20$ PwD. In line with a commonly used clinical documentation tool, this large multi-modal time-series dataset was analyzed to track the presence of episodes of agitation in 8-hour nursing shifts. The development of a baseline classification model (AUC=0.717) on this dataset and its subsequent improvement (AUC=0.779) lay the groundwork for automating the process of annotating agitation events in nursing charts.
