Andrea Censi

ETH Zürich

CODEI: Resource-Efficient Task-Driven Co-Design of Perception and Decision Making for Mobile Robots Applied to Autonomous Vehicles

Mar 13, 2025

Abstract:This paper discusses the integration challenges and strategies for designing mobile robots, focusing on the task-driven, optimal selection of hardware and software to balance safety, efficiency, and minimal usage of resources such as cost, energy, computational requirements, and weight. We emphasize the interplay between perception and motion planning in decision-making by introducing the concept of occupancy queries to quantify the perception requirements for sampling-based motion planners. Sensor and algorithm performance are evaluated using False Negative Rates (FNR) and False Positive Rates (FPR) across various factors such as geometric relationships, object properties, sensor resolution, and environmental conditions. By integrating perception requirements with perception performance, an Integer Linear Programming (ILP) approach is proposed for efficient sensor and algorithm selection and placement. This forms the basis for a co-design optimization that includes the robot body, motion planner, perception pipeline, and computing unit. We refer to this framework for solving the co-design problem of mobile robots as CODEI, short for Co-design of Embodied Intelligence. A case study on developing an Autonomous Vehicle (AV) for urban scenarios provides actionable information for designers and shows that complex tasks escalate resource demands, with task performance affecting choices of the autonomy stack. The study demonstrates that resource prioritization influences sensor choice: cameras are preferred for cost-effective and lightweight designs, while lidar sensors are chosen for better energy and computational efficiency.
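To make the two perception metrics concrete, here is a minimal sketch that computes FNR and FPR over a batch of occupancy queries, where each query asks whether a region is occupied. The function name, the boolean encoding and the sample data are assumptions for illustration; the abstract says only that these rates are evaluated across factors such as geometry, object properties, resolution and environment, which this sketch does not model.

```python
from typing import Sequence


def occupancy_error_rates(truth: Sequence[bool], reported: Sequence[bool]) -> tuple[float, float]:
    """Return (FNR, FPR) over a batch of occupancy queries.

    truth[i]    -- ground truth: is query region i actually occupied?
    reported[i] -- does the perception pipeline report region i as occupied?
    """
    if len(truth) != len(reported):
        raise ValueError("truth and reported must have the same length")
    false_neg = sum(t and not r for t, r in zip(truth, reported))  # missed obstacles
    false_pos = sum(r and not t for t, r in zip(truth, reported))  # phantom obstacles
    positives = sum(truth)
    negatives = len(truth) - positives
    fnr = false_neg / positives if positives else 0.0
    fpr = false_pos / negatives if negatives else 0.0
    return fnr, fpr


# Hypothetical batch: 4 occupied and 4 free query regions.
truth = [True, True, True, True, False, False, False, False]
reported = [True, True, True, False, False, False, True, False]
print(occupancy_error_rates(truth, reported))  # (0.25, 0.25)
```

A missed obstacle (false negative) and a phantom obstacle (false positive) affect a motion planner differently, which is why the two rates are kept separate rather than merged into a single accuracy figure.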

A Counterfactual Safety Margin Perspective on the Scoring of Autonomous Vehicles' Riskiness

Aug 18, 2023

Abstract:Autonomous Vehicles (AVs) have the potential to provide numerous societal benefits, such as fewer road accidents and greater overall transportation efficiency. However, quantifying the risk associated with AVs is challenging due to the lack of historical data and the rapidly evolving technology. This paper presents a data-driven framework for comparing the risk of different AVs' behaviors in various operational design domains (ODDs), based on counterfactual simulations of "misbehaving" road users. We introduce the concept of counterfactual safety margin, which represents the minimum deviation from normal behavior that could lead to a collision. This concept helps not only to find the most critical scenarios but also to assess the frequency and severity of the risk posed by AVs. We show that the proposed methodology is applicable even when the AV's behavioral policy is unknown (through worst- and best-case analyses), which makes the method useful also to external third-party risk assessors. Our experimental results demonstrate the correlation between the safety margin, the driving policy quality, and the ODD, shedding light on the relative risk associated with different AV providers. This work contributes to AV safety assessment and helps address legislative and insurance concerns surrounding this emerging technology.
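The idea of a "minimum deviation that could lead to a collision" can be illustrated with a toy example. The sketch below assumes a 1-D car-following scenario in which the counterfactual misbehavior is extra braking by the lead vehicle, and it finds the smallest collision-inducing deviation by bisection. The scenario, the parameter values and both function names are hypothetical; this is not the paper's simulation framework.

```python
import math


def collides(deviation: float, gap: float = 20.0, speed: float = 15.0,
             nominal_decel: float = 1.0, av_decel: float = 6.0,
             reaction_time: float = 1.0, dt: float = 0.01,
             horizon: float = 20.0) -> bool:
    """Toy 1-D car-following rollout (forward Euler), units m, s, m/s^2.

    The lead road user brakes at nominal_decel + deviation (the counterfactual
    "misbehavior"); the AV keeps its speed for reaction_time, then brakes at
    av_decel. Returns True if the gap ever closes.
    """
    x_av, v_av = 0.0, speed
    x_lead, v_lead = gap, speed
    t = 0.0
    while t < horizon:
        v_lead = max(0.0, v_lead - (nominal_decel + deviation) * dt)
        if t >= reaction_time:
            v_av = max(0.0, v_av - av_decel * dt)
        x_lead += v_lead * dt
        x_av += v_av * dt
        if x_lead - x_av <= 0.0:
            return True
        t += dt
    return False


def counterfactual_safety_margin(max_deviation: float = 20.0, tol: float = 1e-3) -> float:
    """Smallest extra deceleration of the lead vehicle that causes a collision.

    Bisection assumes collisions are monotone in the deviation (true for this
    toy model). Returns math.inf if even max_deviation is safe.
    """
    if collides(0.0):
        return 0.0
    if not collides(max_deviation):
        return math.inf
    lo, hi = 0.0, max_deviation
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if collides(mid):
            hi = mid
        else:
            lo = mid
    return hi


print(f"safety margin: {counterfactual_safety_margin():.2f} m/s^2")
```

For these made-up parameters the analytic answer is about 7.2 m/s², the extra braking at which the lead vehicle's shorter stopping distance just closes the 20 m gap. A larger margin means the other road user must misbehave more before a crash occurs.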

Factorization of Multi-Agent Sampling-Based Motion Planning

Apr 01, 2023

Abstract:Modern robotics often involves multiple embodied agents operating within a shared environment. Path planning in these cases is considerably more challenging than in single-agent scenarios. Although standard Sampling-based Algorithms (SBAs) can be used to search for solutions in the robots' joint space, this approach quickly becomes computationally intractable as the number of agents increases. To address this issue, we integrate the concept of factorization into sampling-based algorithms, which requires only minimal modifications to existing methods. During the search for a solution, we can decouple (i.e., factorize) different subsets of agents into independent lower-dimensional search spaces once we certify, using a factorization heuristic, that their future solutions will be independent of each other. Consequently, we progressively construct a lean hypergraph in which certain (hyper-)edges split the agents into independent subgraphs. In the best case, this approach can reduce the growth in dimensionality of the search space from exponential to linear in the number of agents. On average, fewer samples are needed to find high-quality solutions while preserving the optimality, completeness, and anytime properties of SBAs. We present a general implementation of a factorized SBA, derive an analytical gain in terms of sample complexity for PRM*, and showcase empirical results for RRG.
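The abstract does not spell out the factorization heuristic, so the following is only a hypothetical, conservative stand-in. It assumes each planar agent is confined to an axis-aligned corridor around its start and goal: agents whose corridors do not overlap cannot interact, so the connected components of the overlap graph can be planned in separate, lower-dimensional spaces. Unlike the progressive factorization described above, this sketch decides the split once, up front; `Box`, `corridor` and `factorize` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned region an agent is assumed never to leave."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def overlaps(self, other: "Box") -> bool:
        return not (self.xmax < other.xmin or other.xmax < self.xmin
                    or self.ymax < other.ymin or other.ymax < self.ymin)


def corridor(start, goal, margin):
    """Hypothetical corridor: bounding box of start and goal, inflated by margin."""
    (sx, sy), (gx, gy) = start, goal
    return Box(min(sx, gx) - margin, min(sy, gy) - margin,
               max(sx, gx) + margin, max(sy, gy) + margin)


def factorize(boxes):
    """Group agents into independent subsets (connected components of the
    corridor-overlap graph), using union-find with path halving."""
    parent = list(range(len(boxes)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if boxes[i].overlaps(boxes[j]):
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(boxes)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


agents = [((0, 0), (4, 0)), ((2, -1), (2, 3)), ((10, 10), (12, 10))]
boxes = [corridor(s, g, margin=0.5) for s, g in agents]
print(factorize(boxes))  # [[0, 1], [2]]
```

Here the 6-D joint configuration space of three planar point agents splits into a 4-D problem for agents 0 and 1 and a 2-D problem for agent 2.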

How Bad is Selfish Driving? Bounding the Inefficiency of Equilibria in Urban Driving Games

Oct 24, 2022

Abstract:We consider the interaction among agents engaged in a driving task and model it as a general-sum game. This class of games exhibits a plurality of different equilibria, posing the issue of equilibrium selection. While selecting the most efficient equilibrium (in terms of social cost) is often impractical from a computational standpoint, in this work we study the (in)efficiency of any equilibrium players might agree to play. More specifically, we bound the equilibrium inefficiency by modeling driving games as a particular type of congestion game over spatio-temporal resources. We obtain novel guarantees that refine existing bounds on the Price of Anarchy (PoA) as a function of problem-dependent game parameters, such as the relative trade-off between proximity costs and personal objectives like comfort and progress. Although the obtained guarantees concern open-loop trajectories, we observe efficient equilibria even when agents employ closed-loop policies trained via decentralized multi-agent reinforcement learning.
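The Price of Anarchy is the social cost of the worst equilibrium divided by the optimal social cost. As an illustration only, the sketch below computes it by brute force for a tiny two-player game with made-up costs, restricted to pure Nash equilibria. It is not the congestion-game bound derived in the paper, and the "go"/"yield" game is a hypothetical example.

```python
from itertools import product


def pure_nash_equilibria(costs):
    """Enumerate pure Nash equilibria of a two-player game.

    costs maps each action profile (a1, a2) to (cost1, cost2); both players
    minimize their own cost.
    """
    actions1 = sorted({a1 for a1, _ in costs})
    actions2 = sorted({a2 for _, a2 in costs})
    equilibria = []
    for a1, a2 in product(actions1, actions2):
        c1, c2 = costs[(a1, a2)]
        no_better_1 = all(c1 <= costs[(d, a2)][0] for d in actions1)
        no_better_2 = all(c2 <= costs[(a1, d)][1] for d in actions2)
        if no_better_1 and no_better_2:
            equilibria.append((a1, a2))
    return equilibria


def price_of_anarchy(costs):
    """Worst equilibrium social cost divided by the optimal social cost."""
    social = {profile: sum(c) for profile, c in costs.items()}
    equilibria = pure_nash_equilibria(costs)
    if not equilibria:
        raise ValueError("game has no pure Nash equilibrium")
    return max(social[e] for e in equilibria) / min(social.values())


# Made-up intersection game: each car either goes or yields.
costs = {
    ("go", "go"): (10, 10),      # near-collision: large proximity cost for both
    ("go", "yield"): (1, 3),
    ("yield", "go"): (2, 5),
    ("yield", "yield"): (6, 6),  # both wait
}
print(pure_nash_equilibria(costs))  # [('go', 'yield'), ('yield', 'go')]
print(price_of_anarchy(costs))      # 1.75
```

Both one-car-yields outcomes are equilibria, but only one of them is socially optimal (cost 4 versus 7); the ratio of 1.75 measures how much agreeing on the worse equilibrium can cost.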

Categorification of Negative Information using Enrichment

Jul 27, 2022

Abstract:In many applications of category theory it is useful to reason about "negative information". For example, in planning problems, providing an optimal solution is the same as giving a feasible solution (the "positive" information) together with a proof of the fact that there cannot be feasible solutions better than the one given (the "negative" information). We model negative information by introducing the concept of "norphisms", as opposed to the positive information of morphisms. A "nategory" is a category that has "Nom"-sets in addition to hom-sets, and specifies the compatibility rules between norphisms and morphisms. With this setup we can choose to work in "coherent" "subnategories": subcategories that describe a potential instantiation of the world in which all morphisms and norphisms are compatible. We derive the composition rules for norphisms in a coherent subnategory; we show that norphisms do not compose by themselves, but rather need to use morphisms as catalysts. We have two distinct rules of the type $\text{morphism} + \text{norphism} \rightarrow \text{norphism}$. We then show that these complex rules for norphism inference are actually as natural as the ones for morphisms, from the perspective of enriched category theory. Every small category is enriched over $\text{P}= \langle \text{Set}, \times, 1\rangle$. We show that we can derive the machinery of norphisms by considering an enrichment over a certain monoidal category called PN (for "positive"/"negative"). In summary, we show that an alternative to considering negative information using logic on top of the categorical formalization is to "categorify" the negative information, obtaining negative arrows that live at the same level as the positive arrows, and suggest that the new inference rules are born of the same substance from the perspective of enriched category theory.

Task-driven Modular Co-design of Vehicle Control Systems

Mar 30, 2022

Abstract:When designing autonomous systems, we need to consider multiple trade-offs at various abstraction levels, and the choices of individual (hardware and software) components need to be studied jointly. In this work we consider the problem of designing the control algorithm as well as the platform on which it is executed. In particular, we focus on vehicle control systems and formalize state-of-the-art control schemes as monotone feasibility relations. We then show how, leveraging a monotone theory of co-design, we can study the embedding of control synthesis problems into the task-driven co-design problem of a robotic platform. The properties of the proposed approach are illustrated by considering urban driving scenarios. We show how, given a particular task, we can efficiently compute Pareto optimal design solutions.
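The output of such a co-design query is a set of Pareto optimal designs: those for which no other feasible design is at least as good in every resource and strictly better in one. The sketch below shows only that final filtering step by brute force over a hypothetical list of task-feasible designs with (cost, power) resources; it is not the monotone co-design solver, and the design names and numbers are invented.

```python
def dominates(u, v):
    """u dominates v if it is no worse in every resource and strictly better in one."""
    return all(x <= y for x, y in zip(u, v)) and any(x < y for x, y in zip(u, v))


def pareto_front(designs):
    """Names of the non-dominated designs; every resource is to be minimized."""
    return sorted(
        name for name, res in designs.items()
        if not any(dominates(other, res)
                   for other_name, other in designs.items() if other_name != name)
    )


# Hypothetical designs that all satisfy the same task: (cost, power).
designs = {
    "A": (10, 5.0),
    "B": (8, 6.0),
    "C": (12, 6.0),  # dominated by A
    "D": (8, 7.0),   # dominated by B
}
print(pareto_front(designs))  # ['A', 'B']
```

Designs A and B remain because each wins on one resource; choosing between them is left to the designer's priorities.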

Posetal Games: Efficiency, Existence, and Refinement of Equilibria in Games with Prioritized Metrics

Nov 13, 2021

Abstract:Modern applications require robots to comply with multiple, often conflicting rules and to interact with other agents. We present Posetal Games as a class of games in which each player expresses a preference over the outcomes via a partially ordered set of metrics. This allows one to combine the hierarchical priorities of each player with the interactive nature of the environment. By contextualizing standard game-theoretical notions, we provide two sufficient conditions on the players' preferences that guarantee the existence of pure Nash Equilibria in games with finite action sets. Moreover, we define formal operations on the preference structures and link them to a refinement of the game solutions, showing how the set of equilibria can be systematically shrunk. The presented results are showcased in a driving game where autonomous vehicles select from a finite set of trajectories. The resulting equilibria are interpretable in terms of minimum rank violation for each player.
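The simplest partially ordered set of metrics is a chain, where metrics are strictly ranked and outcomes are compared lexicographically. The sketch below enumerates pure Nash equilibria for that special case only; general posets with incomparable metrics, and the paper's exact preference construction, are not covered. The game, its metrics (collision first, then delay) and the function name are hypothetical.

```python
from itertools import product


def pure_nash_lexicographic(outcomes):
    """Pure Nash equilibria of a two-player game with lexicographic preferences.

    outcomes maps (a1, a2) to (m1, m2), where each m is a tuple of metrics
    listed from highest to lowest priority (all minimized). Python compares
    tuples lexicographically, which realizes a strict priority chain.
    """
    actions1 = sorted({a1 for a1, _ in outcomes})
    actions2 = sorted({a2 for _, a2 in outcomes})
    equilibria = []
    for a1, a2 in product(actions1, actions2):
        m1, m2 = outcomes[(a1, a2)]
        # Neither player can reach a lexicographically better outcome unilaterally.
        ok1 = all(not outcomes[(d, a2)][0] < m1 for d in actions1)
        ok2 = all(not outcomes[(a1, d)][1] < m2 for d in actions2)
        if ok1 and ok2:
            equilibria.append((a1, a2))
    return equilibria


# Metrics per player: (collision, delay); avoiding collision has strict priority.
outcomes = {
    ("go", "go"): ((1, 0), (1, 0)),
    ("go", "yield"): ((0, 0), (0, 2)),
    ("yield", "go"): ((0, 2), (0, 0)),
    ("yield", "yield"): ((0, 3), (0, 3)),
}
print(pure_nash_lexicographic(outcomes))  # [('go', 'yield'), ('yield', 'go')]
```

Because collision outranks delay, no amount of saved time makes ("go", "go") attractive, which would not hold for a weighted sum with a small collision weight.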

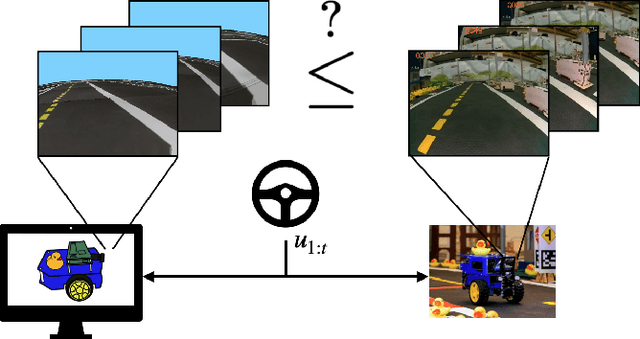

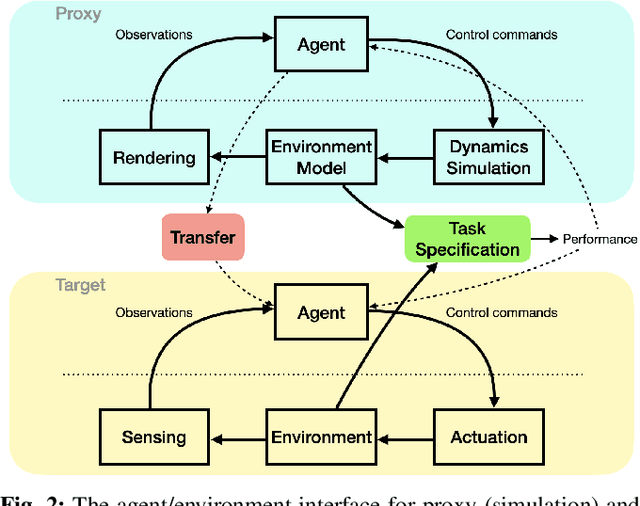

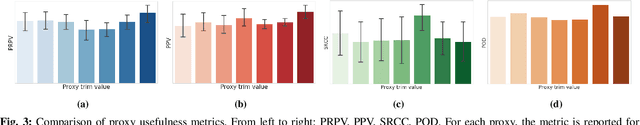

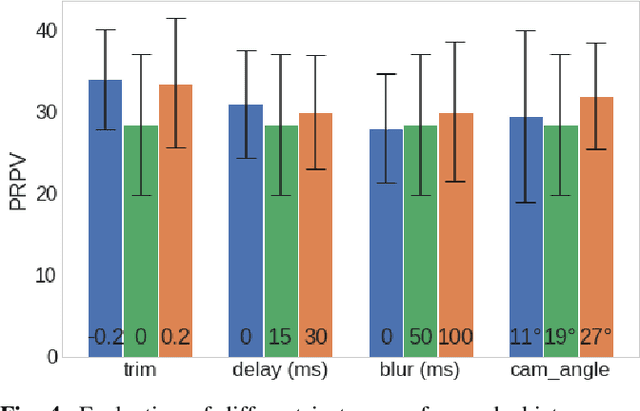

On Assessing the Usefulness of Proxy Domains for Developing and Evaluating Embodied Agents

Oct 07, 2021

Abstract:In many situations it is either impossible or impractical to develop and evaluate agents entirely on the target domain in which they will be deployed. This is particularly true in robotics, where doing experiments on hardware is much more arduous than in simulation, and arguably even more so for learning-based agents. To this end, considerable recent effort has been devoted to developing increasingly realistic and higher-fidelity simulators. However, we lack a principled way to evaluate how good a "proxy domain" is, specifically in terms of how useful it is in helping us achieve our end objective of building an agent that performs well in the target domain. In this work, we investigate methods to address this need. We begin by clearly separating two uses of proxy domains that are often conflated: 1) their ability to be a faithful predictor of agent performance and 2) their ability to be a useful tool for learning. In this paper, we attempt to clarify the role of proxy domains and establish new proxy usefulness (PU) metrics to compare the usefulness of different proxy domains. We propose the relative predictive PU to assess the predictive ability of a proxy domain and the learning PU to quantify the usefulness of a proxy as a tool to generate learning data. Furthermore, we argue that the value of a proxy is conditioned on the task that it is being used to help solve. We demonstrate how these new metrics can be used to optimize parameters of the proxy domain for which obtaining ground truth via system identification is not trivial.

A Compositional Sheaf-Theoretic Framework for Event-Based Systems

Jan 26, 2021

Abstract:A compositional sheaf-theoretic framework for the modeling of complex event-based systems is presented. We show that event-based systems are machines, with inputs and outputs, and that they can be composed with machines of different types, all within a unified, sheaf-theoretic formalism. We take robotic systems as an exemplar of complex systems and rigorously describe actuators, sensors, and algorithms using this framework.

* In Proceedings ACT 2020, arXiv:2101.07888. arXiv admin note: substantial text overlap with arXiv:2005.04715

Co-Design of Autonomous Systems: From Hardware Selection to Control Synthesis

Nov 21, 2020

Abstract:Designing cyber-physical systems is a complex task that requires insights at multiple abstraction levels. The choices of individual components are deeply interconnected and need to be studied jointly. In this work, we consider the problem of co-designing the control algorithm as well as the platform around it. In particular, we leverage a monotone theory of co-design to formalize variations of the LQG control problem as monotone feasibility relations. We then show how this enables the embedding of control co-design problems in the higher-level co-design problem of a robotic platform. We illustrate the properties of our formalization by analyzing the co-design of an autonomous drone performing search-and-rescue tasks and show how, given a set of desired robot behaviors, we can compute Pareto efficient design solutions.
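A monotone feasibility relation captures statements such as "a noisier sensor can only make the achievable estimation error worse". The sketch below illustrates this with the estimation half of LQG for an assumed scalar system: it iterates the discrete-time Riccati recursion for the steady-state Kalman prediction error variance, then picks the cheapest sensor from a hypothetical catalog that meets a variance requirement. The model, catalog, costs and requirement are all invented, and enumeration stands in for the paper's co-design solver.

```python
def steady_state_error_variance(a: float, q: float, r: float,
                                tol: float = 1e-12, max_iter: int = 10_000) -> float:
    """Steady-state one-step-prediction error variance of a scalar Kalman filter.

    Model: x[k+1] = a*x[k] + w,  y[k] = x[k] + v,  w ~ N(0, q),  v ~ N(0, r).
    Iterates the Riccati recursion P <- a^2 * P * r / (P + r) + q.
    """
    p = q
    for _ in range(max_iter):
        p_next = a * a * p * r / (p + r) + q
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    raise RuntimeError("Riccati iteration did not converge")


# Hypothetical sensor catalog: name -> (cost, measurement-noise variance r).
sensors = {"cheap": (1.0, 4.0), "mid": (3.0, 1.0), "premium": (10.0, 0.25)}
max_variance = 2.0  # hypothetical task requirement on the estimation error variance

feasible = {name: cost for name, (cost, r) in sensors.items()
            if steady_state_error_variance(a=1.0, q=1.0, r=r) <= max_variance}
print(min(feasible, key=feasible.get))  # mid
```

With a = q = r = 1 the fixed point is the golden ratio, about 1.618. The variance grows monotonically with r (about 1.21, 1.62 and 2.56 for the three sensors), so the cheap sensor fails the requirement, and the cheapest feasible option is "mid".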