Anant Jain

Secure Generalization through Stochastic Bidirectional Parameter Updates Using Dual-Gradient Mechanism

Apr 03, 2025

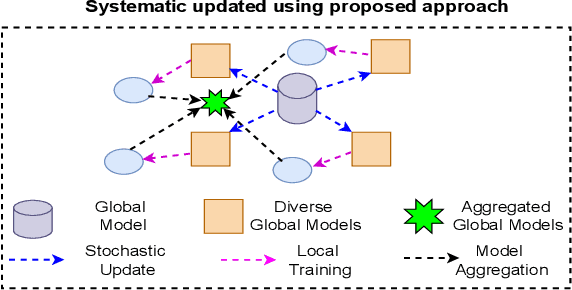

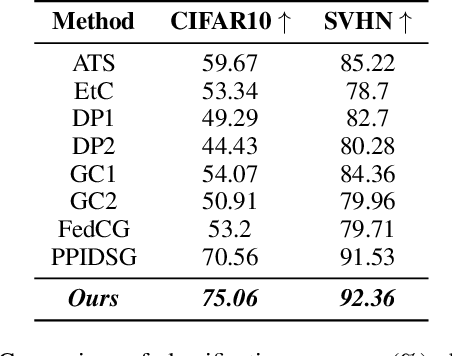

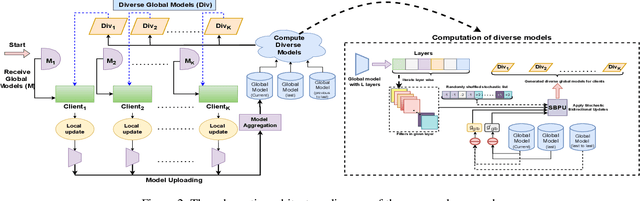

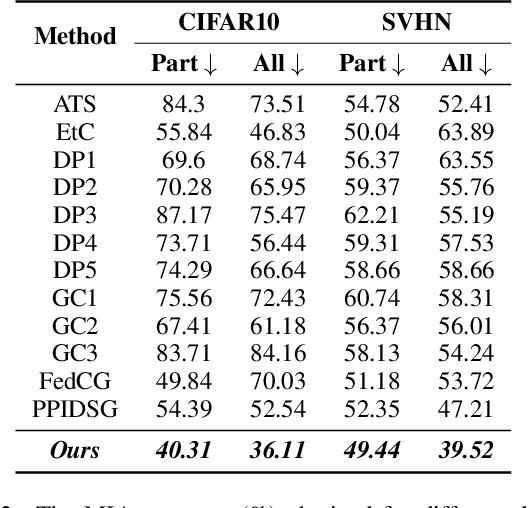

Abstract: Federated learning (FL) has gained increasing attention because it enables privacy-preserving collaborative training on decentralized clients, avoiding the need to upload sensitive data directly to a central server. Nonetheless, recent research has underscored the risk of exposing private data to adversaries, even within FL frameworks. In general, existing methods sacrifice performance to ensure resistance to privacy leakage in FL. We overcome these issues by generating diverse models at a global server through the proposed stochastic bidirectional parameter update mechanism. Using diverse models, we improve generalization and feature representation in the FL setup, which also improves the robustness of the model against privacy leakage without hurting its utility. We use global models from past FL rounds to apply systematic perturbations in parameter space at the server, ensuring model generalization and resistance against privacy attacks. We generate diverse models (in close neighborhoods) for each client by applying systematic perturbations to model parameters at a fine-grained level (i.e., altering each convolutional filter across the layers of the model) to improve both generalization and security. We evaluate the proposed approach on four benchmark datasets, where it surpasses state-of-the-art methods in terms of model utility and robustness to privacy leakage. We demonstrate the effectiveness of our method through several quantitative and qualitative results.

EEG-based 90-Degree Turn Intention Detection for Brain-Computer Interface

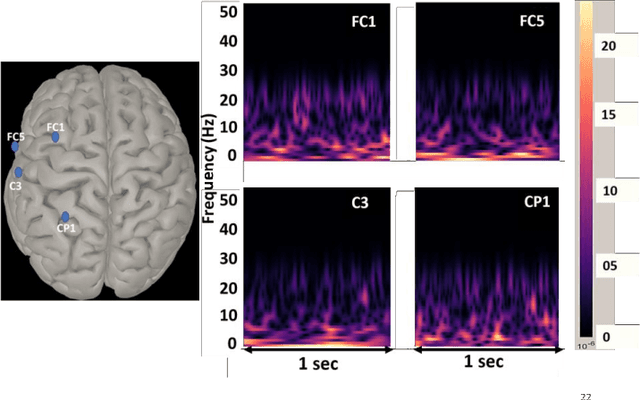

Oct 15, 2024

Abstract: Electroencephalography (EEG)-based turn intention prediction for lower limb movement is important for building an efficient brain-computer interface (BCI) system. This study investigates the feasibility of detecting the intention to turn left, turn right, or walk straight using EEG signals obtained before the event occurs. Synchronous data were collected using 31-channel EEG and IMU-based motion capture systems from nine healthy participants while they performed left-turn, right-turn, and straight-walk movements. The EEG data were preprocessed with Artifact Subspace Reconstruction (ASR), re-referencing, and Independent Component Analysis (ICA) to remove noise. Feature extraction from the preprocessed EEG data involved computing statistical measures (mean, median, standard deviation, skew, and kurtosis) and Hjorth parameters (activity, mobility, and complexity). Feature selection was then performed using the random forest algorithm for dimensionality reduction. The resulting feature set was used for 3-class classification with XGBoost, gradient boosting, and support vector machine (SVM) with RBF kernel classifiers in a five-fold cross-validation scheme. With the proposed intention detection methodology, the SVM classifier using an EEG window of 1.5 s and a time lag of 0 s has the best decoding performance, with mean accuracy, precision, and recall of 81.23%, 85.35%, and 83.92%, respectively, across the nine participants. The decoding analysis shows the feasibility of turn intention prediction for lower limb movement using the EEG signal before the event onset.
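For reference, the Hjorth parameters named in this abstract have standard definitions in terms of the variance of a signal and of its first and second differences. The following is a minimal sketch for a single-channel window, not the study's feature pipeline; the function name `hjorth_parameters` and the use of NumPy are assumptions made for illustration.

```python
import numpy as np

def hjorth_parameters(signal):
    """Return (activity, mobility, complexity) for a 1-D signal.

    Hypothetical helper: derivatives are approximated by first
    differences, so mobility is expressed per sample, not per second.
    A constant signal has zero variance and is not handled here.
    """
    x = np.asarray(signal, dtype=float)
    dx = np.diff(x)    # first difference (approximate first derivative)
    ddx = np.diff(dx)  # second difference (approximate second derivative)

    var_x = np.var(x)
    var_dx = np.var(dx)
    var_ddx = np.var(ddx)

    activity = var_x                                    # signal power
    mobility = np.sqrt(var_dx / var_x)                  # mean-frequency proxy
    complexity = np.sqrt(var_ddx / var_dx) / mobility   # deviation from a pure sine
    return activity, mobility, complexity
```

A pure sinusoid gives a complexity close to 1, which is a convenient sanity check when applying the function to each channel of an EEG window.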

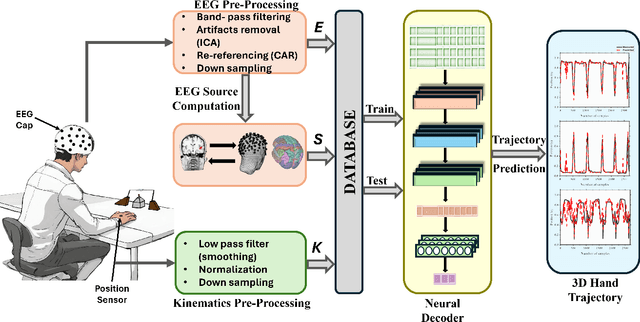

ESI-GAL: EEG Source Imaging-based Kinematics Parameter Estimation for Grasp and Lift Task

Jun 18, 2024

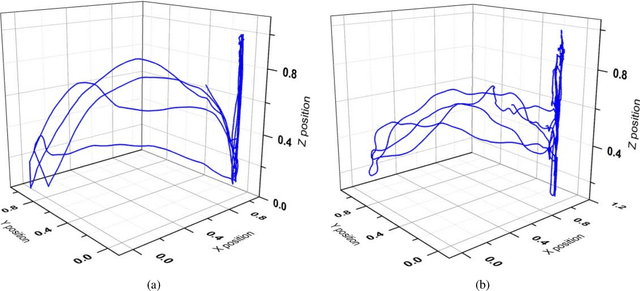

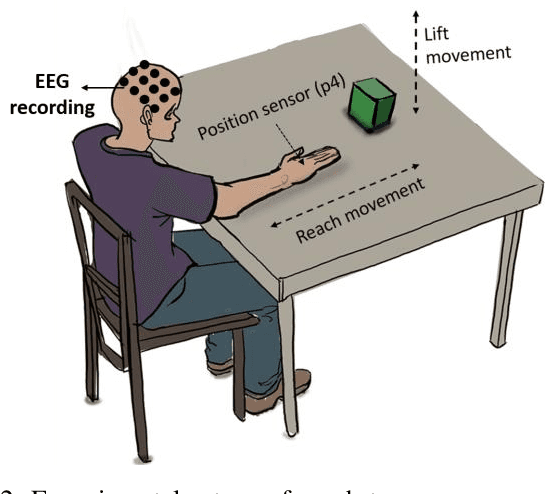

Abstract: Objective: Electroencephalogram (EEG) signal-based motor kinematics prediction (MKP) has been an active area of research for developing brain-computer interface (BCI) systems such as exosuits, prostheses, and rehabilitation devices. However, EEG source imaging (ESI) based kinematics prediction is sparsely explored in the literature. Approach: In this study, pre-movement EEG features are utilized to predict three-dimensional (3D) hand kinematics for the grasp-and-lift motor task. A public dataset, WAY-EEG-GAL, is utilized for MKP analysis. In particular, sensor-domain (EEG data) and source-domain (ESI data) features from the frontoparietal region are explored for MKP. Deep learning-based models are explored to achieve efficient kinematics decoding. Various time lags and window sizes are analyzed for hand kinematics prediction. Subsequently, intra-subject and inter-subject MKP analysis is performed to investigate the subject-specific and subject-independent motor-learning capabilities of the neural decoders. The Pearson correlation coefficient (PCC) is used as the performance metric for kinematics trajectory decoding. Main results: The rEEGNet neural decoder achieved the best performance with sensor-domain and source-domain features with a time lag of 100 ms and a window size of 450 ms. The highest mean PCC values of 0.790, 0.795, and 0.637 are achieved using sensor-domain features, while 0.769, 0.777, and 0.647 are achieved using source-domain features in the x, y, and z directions, respectively. Significance: This study explores the feasibility of trajectory prediction using sensor-domain and source-domain EEG features for the grasp-and-lift task. Furthermore, inter-subject trajectory estimation is performed using the proposed deep learning decoder with EEG source-domain features.
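The per-direction PCC values reported in this abstract compare a measured trajectory with a decoded one, axis by axis. A minimal sketch of that metric follows; the function names and the `(time, 3)` array layout are assumptions for illustration and do not come from the paper.

```python
import numpy as np

def pearson_cc(y_true, y_pred):
    """Pearson correlation coefficient between two 1-D sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    yt = y_true - y_true.mean()  # remove the mean of each sequence
    yp = y_pred - y_pred.mean()
    return float(np.sum(yt * yp) / np.sqrt(np.sum(yt ** 2) * np.sum(yp ** 2)))

def pcc_per_axis(traj_true, traj_pred):
    """PCC for each column (assumed x, y, z) of two (time, 3) trajectories."""
    traj_true = np.asarray(traj_true, dtype=float)
    traj_pred = np.asarray(traj_pred, dtype=float)
    return [pearson_cc(traj_true[:, k], traj_pred[:, k])
            for k in range(traj_true.shape[1])]
```

Because PCC is invariant to scale and offset, a decoder can score well while still mispredicting amplitude, which is one reason several of the abstracts on this page pair it with a visual comparison of the trajectories.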

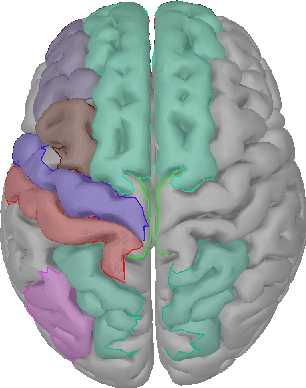

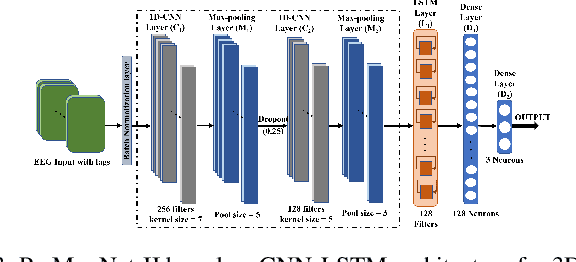

EEG Cortical Source Feature based Hand Kinematics Decoding using Residual CNN-LSTM Neural Network

Apr 13, 2023

Abstract: Motor kinematics decoding (MKD) using brain signals is essential for developing brain-computer interface (BCI) systems for rehabilitation or prosthetic devices. The surface electroencephalogram (EEG) signal has been widely utilized for MKD. However, kinematic decoding from cortical sources is sparsely explored. In this work, the feasibility of hand kinematics decoding using EEG cortical source signals is explored for the grasp-and-lift task. In particular, the pre-movement EEG segment is utilized. A residual convolutional neural network (CNN) - long short-term memory (LSTM) based kinematics decoding model is proposed that utilizes the motor neural information present in pre-movement brain activity. Various EEG windows at 50 ms prior to movement onset are utilized for hand kinematics decoding. The correlation value (CV) between actual and predicted hand kinematics is utilized as the performance metric, and the performance of the proposed deep learning model is compared in the sensor and source domains. The results demonstrate the viability of hand kinematics decoding using pre-movement EEG cortical source data.

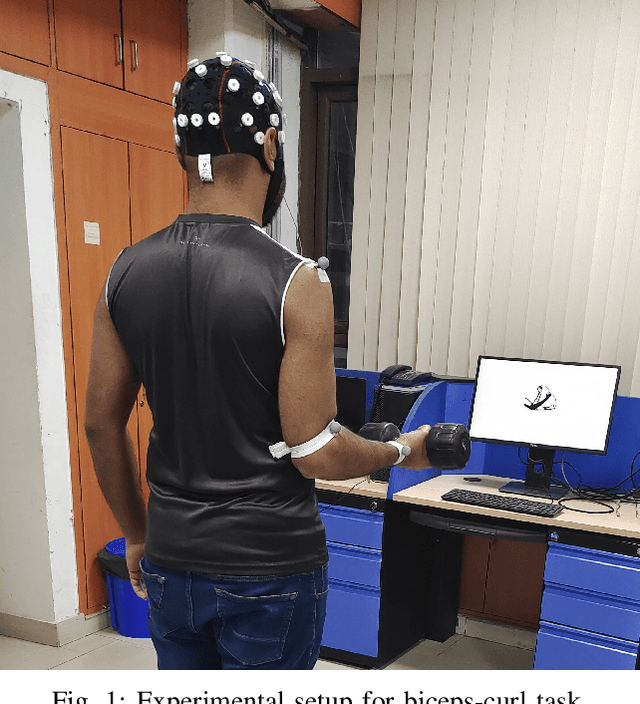

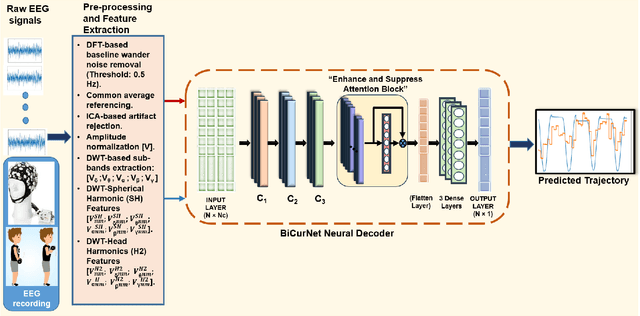

BiCurNet: Pre-Movement EEG based Neural Decoder for Biceps Curl Trajectory Estimation

Jan 10, 2023

Abstract: Kinematic parameter (KP) estimation from early electroencephalogram (EEG) signals is essential for positive augmentation using a wearable robot. However, work related to early estimation of KPs from surface EEG is sparse. In this work, a deep learning-based model, BiCurNet, is presented for early estimation of biceps curl using collected EEG signals. The model utilizes a light-weight architecture with depth-wise separable convolution layers and a customized attention module. The feasibility of early estimation of KPs is demonstrated using brain source imaging. Computationally efficient EEG features in the spherical and head harmonics domains are utilized for the first time for KP prediction. The best Pearson correlation coefficient (PCC) between the estimated and actual trajectory, 0.7, is achieved when combined EEG features (spatial and harmonics domain) in the delta band are utilized. Robustness of the proposed network is demonstrated for subject-dependent and subject-independent training, using EEG signals with artifacts.
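The "light-weight" property of depth-wise separable convolutions comes from a well-known parameter-count argument: one filter per input channel, followed by a 1x1 pointwise convolution, replaces a full filter bank. The sketch below works through that arithmetic; the layer sizes are hypothetical and are not BiCurNet's actual configuration, and bias terms are ignored.

```python
def standard_conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights in a standard convolution (bias terms ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels

def separable_conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights in a depth-wise separable convolution (bias terms ignored)."""
    depthwise = kernel_h * kernel_w * in_channels  # one spatial filter per input channel
    pointwise = in_channels * out_channels         # 1x1 convolution that mixes channels
    return depthwise + pointwise

# Hypothetical layer: 3x3 kernel, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 3, 32, 64)
separable = separable_conv_params(3, 3, 32, 64)
```

For this hypothetical layer the separable form needs roughly an eighth of the weights of the standard form.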

Subject-Independent 3D Hand Kinematics Reconstruction using Pre-Movement EEG Signals for Grasp And Lift Task

Sep 05, 2022

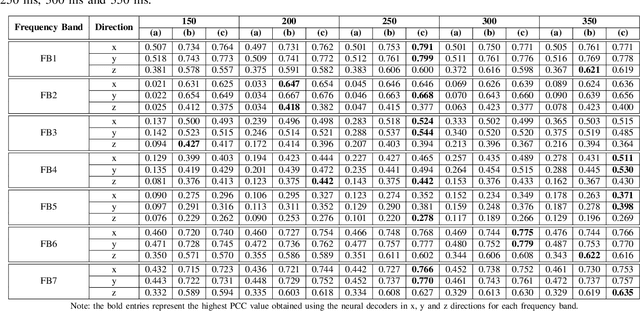

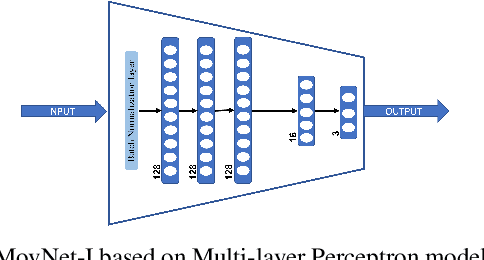

Abstract: Brain-computer interface (BCI) systems can be utilized for kinematics decoding from scalp brain activation to control rehabilitation or power-augmenting devices. In this study, hand kinematics decoding for the grasp-and-lift task is performed in three-dimensional (3D) space using scalp electroencephalogram (EEG) signals. Twelve subjects from the publicly available WAY-EEG-GAL database have been utilized in this study. In particular, multi-layer perceptron (MLP) and convolutional neural network-long short-term memory (CNN-LSTM) based deep learning frameworks are proposed that utilize the motor-neural information encoded in the pre-movement EEG data. Spectral features are analyzed for hand kinematics decoding using EEG data filtered in seven frequency ranges. The spectral features of the best-performing frequency band are considered for further analysis with different EEG window sizes and lag windows. Appropriate lag windows from movement onset make the approach pre-movement in the true sense. Additionally, inter-subject hand trajectory decoding analysis is performed using the leave-one-subject-out (LOSO) approach. The Pearson correlation coefficient and hand trajectory are considered as performance metrics to evaluate the decoding performance of the neural decoders. This study explores the feasibility of inter-subject 3D hand trajectory decoding using only EEG signals during the reach-and-grasp task, probably for the first time. The results may provide viable information for decoding 3D hand kinematics using pre-movement EEG signals for practical BCI applications such as exoskeletons/exosuits and prostheses.
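The leave-one-subject-out (LOSO) scheme mentioned in this abstract holds out every sample from one subject for testing and trains on the rest, repeating once per subject. A minimal sketch of the index bookkeeping follows; the function name `loso_splits` and the per-sample `subject_ids` array are assumptions for illustration.

```python
import numpy as np

def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject.

    `subject_ids` is a hypothetical 1-D array giving the subject label
    of every sample (for example, of every EEG window).
    """
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        test_mask = subject_ids == held_out
        # Train on all other subjects, test on the held-out one.
        yield held_out, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)
```

With twelve subjects this produces twelve folds, and no subject ever contributes samples to both the training and the test set of the same fold.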

PreMovNet: Pre-Movement EEG-based Hand Kinematics Estimation for Grasp and Lift task

May 02, 2022

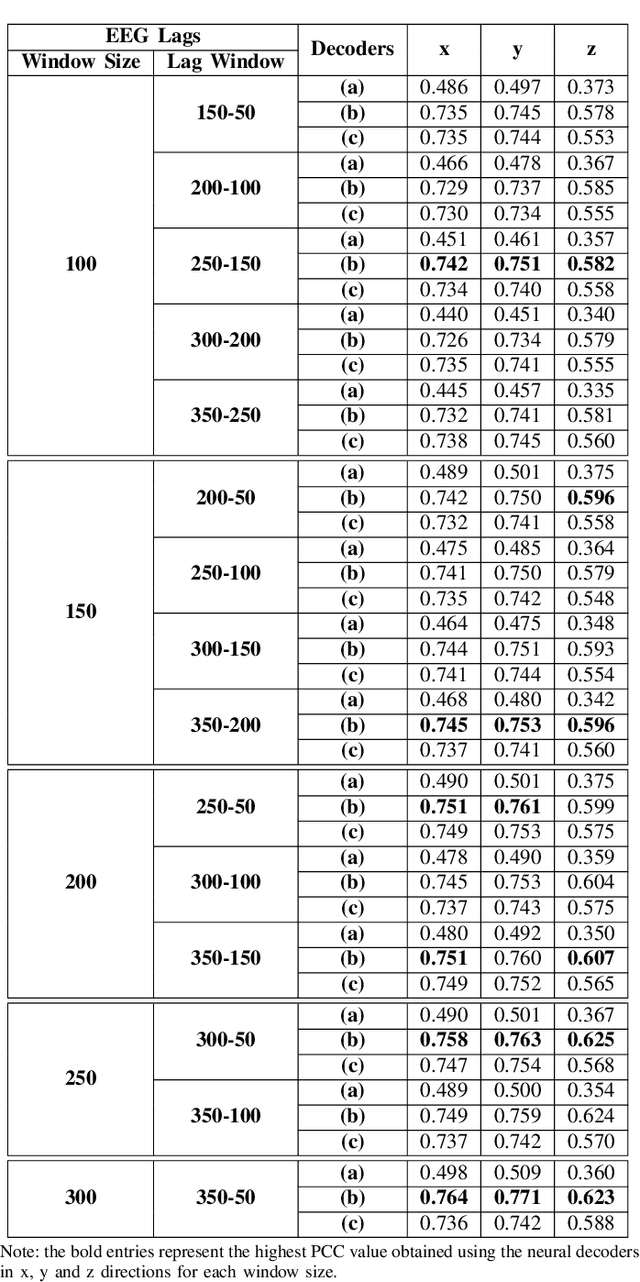

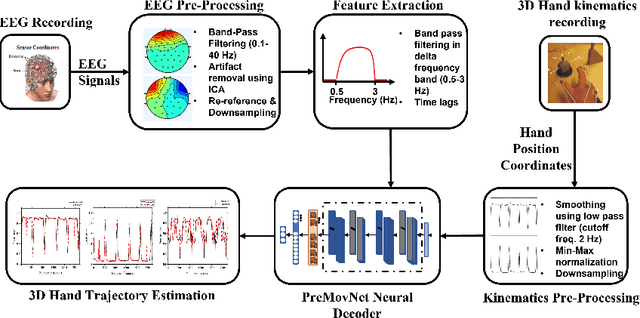

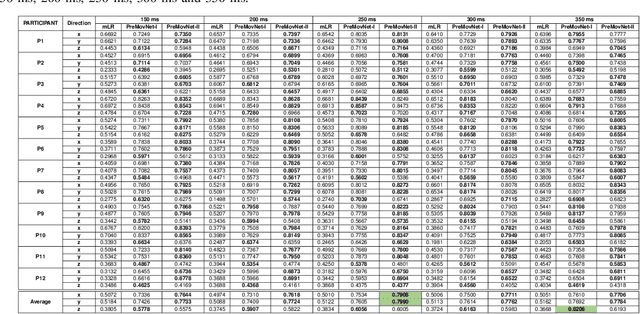

Abstract: Kinematics decoding from brain activity helps in developing rehabilitation or power-augmenting brain-computer interface devices. Low-frequency signals recorded from non-invasive electroencephalography (EEG) are associated with the neural motor correlation utilised for motor trajectory decoding (MTD). In this communication, the ability to decode motor kinematics trajectory from pre-movement delta-band (0.5-3 Hz) EEG is investigated for healthy participants. In particular, two deep learning-based neural decoders, PreMovNet-I and PreMovNet-II, are proposed that make use of the motor-related neural information existing in the pre-movement EEG data. EEG data segments with time lags of 150 ms, 200 ms, 250 ms, 300 ms, and 350 ms before the movement onset are utilised for this purpose. The MTD is presented for the grasp-and-lift task (WAY-EEG-GAL dataset) using EEG with the various lags taken as input to the neural decoders. The performance of the proposed decoders is compared with the state-of-the-art multi-variable linear regression (mLR) model. The Pearson correlation coefficient and hand trajectory are utilised as performance metrics. The results demonstrate the viability of decoding 3D hand kinematics using pre-movement EEG data, enabling better control of BCI-based external devices such as exoskeletons/exosuits.
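Extracting a pre-movement segment at a given time lag amounts to converting milliseconds to samples and slicing the recording so that the window ends before the onset. A minimal sketch follows; the function name, the `(channels, samples)` layout, the window length, and the sampling rate used in any call are assumptions for illustration, since the abstract states only the lags.

```python
import numpy as np

def pre_movement_window(eeg, onset_idx, fs, lag_ms, win_ms):
    """Return the EEG segment that ends `lag_ms` before movement onset.

    eeg       : array of shape (channels, samples) (assumed layout)
    onset_idx : sample index of movement onset
    fs        : sampling rate in Hz
    lag_ms    : gap between the end of the window and the onset
    win_ms    : window length (hypothetical; not given in the abstract)
    """
    eeg = np.asarray(eeg)
    lag = int(round(lag_ms * fs / 1000))  # milliseconds to samples
    win = int(round(win_ms * fs / 1000))
    stop = onset_idx - lag                # exclusive end of the window
    start = stop - win
    if start < 0:
        raise ValueError("window starts before the beginning of the recording")
    return eeg[:, start:stop]
```

Sweeping `lag_ms` over 150, 200, 250, 300, and 350 while keeping `win_ms` fixed reproduces the kind of lag comparison the abstract describes.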

Source Aware Deep Learning Framework for Hand Kinematic Reconstruction using EEG Signal

Apr 05, 2021

Abstract: The ability to reconstruct the kinematic parameters of hand movement using non-invasive electroencephalography (EEG) is essential for strength and endurance augmentation using an exosuit/exoskeleton. For system development, the conventional classification-based brain-computer interface (BCI) controls external devices by providing discrete control signals to the actuator. A continuous kinematic reconstruction from the EEG signal is better suited for practical BCI applications. The state-of-the-art multi-variable linear regression (mLR) method provides a continuous estimate of hand kinematics, achieving a maximum correlation of up to 0.67 between the measured and the estimated hand trajectory. In this work, three novel source-aware deep learning models are proposed for motion trajectory prediction (MTP). In particular, multi-layer perceptron (MLP), convolutional neural network - long short-term memory (CNN-LSTM), and wavelet packet decomposition (WPD) CNN-LSTM models are presented. Additional novelty of the work includes the utilization of brain source localization (using sLORETA) for the reliable decoding of motor intention mapping (channel selection) and accurate EEG time segment selection. The performance of the proposed models is compared with the traditionally utilised mLR technique on the real grasp-and-lift (GAL) dataset. The effectiveness of the proposed framework is established using the Pearson correlation coefficient and trajectory analysis. A significant improvement in the correlation coefficient is observed when compared with the state-of-the-art mLR model. Our work bridges the gap between the control and the actuator block, enabling real-time BCI implementation.
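The mLR baseline referred to in this abstract is, in general form, an ordinary least-squares map from EEG-derived features to the kinematic outputs. The sketch below shows that general form only; the function names and the flat `(samples, features)` layout are assumptions, and the feature construction used in the paper (for example, which channels and time lags are stacked) is not reproduced here.

```python
import numpy as np

def fit_mlr(features, targets):
    """Least-squares fit of targets ~ features @ W + b.

    features : (samples, n_features) array (assumed layout)
    targets  : (samples, n_outputs) array, e.g. x, y, z position
    Returns a (n_features + 1, n_outputs) matrix; the last row is the intercept.
    """
    features = np.asarray(features, dtype=float)
    targets = np.asarray(targets, dtype=float)
    # Append a column of ones so the intercept is learned with the weights.
    design = np.hstack([features, np.ones((features.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return weights

def predict_mlr(weights, features):
    """Apply a fitted mLR model to new features."""
    features = np.asarray(features, dtype=float)
    return features @ weights[:-1] + weights[-1]
```

Because the model is linear in its inputs, it gives a continuous trajectory estimate at low cost, which is why it serves as the reference point for the deep learning decoders.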
