Subject-Independent 3D Hand Kinematics Reconstruction using Pre-Movement EEG Signals for Grasp And Lift Task


Sep 05, 2022

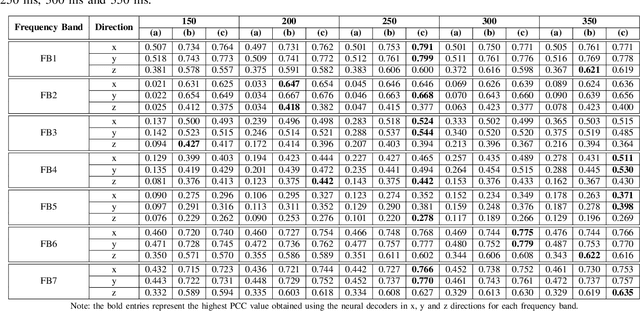

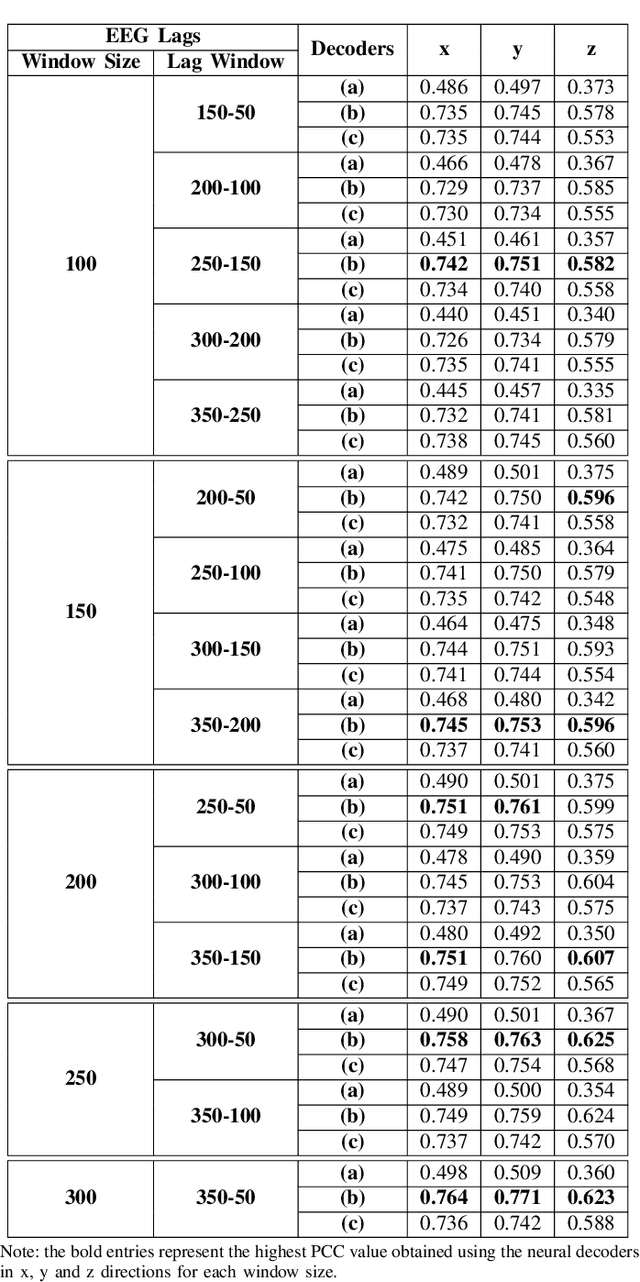

Brain-computer interface (BCI) systems can decode kinematics from scalp brain activation to control rehabilitation or power-augmenting devices. In this study, hand kinematics for a grasp-and-lift task are decoded in three-dimensional (3D) space using scalp electroencephalogram (EEG) signals. Data from twelve subjects in the publicly available WAY-EEG-GAL database are used. In particular, deep learning frameworks based on a multi-layer perceptron (MLP) and a convolutional neural network-long short-term memory (CNN-LSTM) architecture are proposed that exploit the motor-neural information encoded in pre-movement EEG data. Spectral features are analyzed for hand kinematics decoding using EEG data filtered in seven frequency ranges. The spectral features of the best-performing frequency band are then analyzed further with different EEG window sizes and lag windows. Appropriate lag windows relative to movement onset make the approach pre-movement in the true sense. Additionally, inter-subject hand trajectory decoding is analyzed using a leave-one-subject-out (LOSO) approach. The Pearson correlation coefficient and the hand trajectory are used as performance metrics to evaluate the neural decoders. This study explores, probably for the first time, the feasibility of inter-subject 3D hand trajectory decoding during a reach-and-grasp task using EEG signals only. The results may provide viable information for decoding 3D hand kinematics from pre-movement EEG signals in practical BCI applications such as exoskeletons/exosuits and prostheses.
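The evaluation ideas named in the abstract (lagged EEG windows, leave-one-subject-out splitting, and Pearson correlation per axis) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `lagged_windows`, `loso_splits`, and `pearson_per_axis`, the array shapes, and the sample-based definition of the lag are all assumptions made for illustration.

```python
import numpy as np


def lagged_windows(eeg, kin, window, lag):
    """Pair each kinematic sample with an EEG window that ends `lag` samples earlier.

    Assumed shapes (hypothetical): eeg is (n_samples, n_channels),
    kin is (n_samples, 3) holding x, y, z hand positions.
    """
    eeg = np.asarray(eeg, dtype=float)
    kin = np.asarray(kin, dtype=float)
    X, Y = [], []
    for t in range(window + lag - 1, len(eeg)):
        end = t - lag + 1  # exclusive end; last EEG sample used is t - lag
        X.append(eeg[end - window:end])
        Y.append(kin[t])
    return np.stack(X), np.stack(Y)


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx) for leave-one-subject-out."""
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test_idx = np.flatnonzero(subject_ids == subject)
        train_idx = np.flatnonzero(subject_ids != subject)
        yield subject, train_idx, test_idx


def pearson_per_axis(y_true, y_pred):
    """Pearson correlation between measured and decoded trajectories, per axis."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    yt = y_true - y_true.mean(axis=0)
    yp = y_pred - y_pred.mean(axis=0)
    denom = np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))
    return (yt * yp).sum(axis=0) / denom
```

In a LOSO run under these assumptions, a decoder would be fitted on the windows indexed by `train_idx` and scored with `pearson_per_axis` on the held-out subject's windows, so that no data from the test subject influences training.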
