Source Aware Deep Learning Framework for Hand Kinematic Reconstruction using EEG Signal


Apr 05, 2021

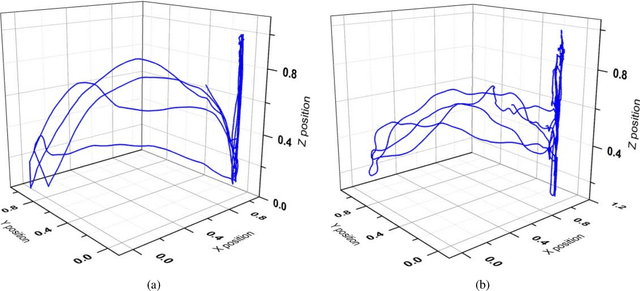

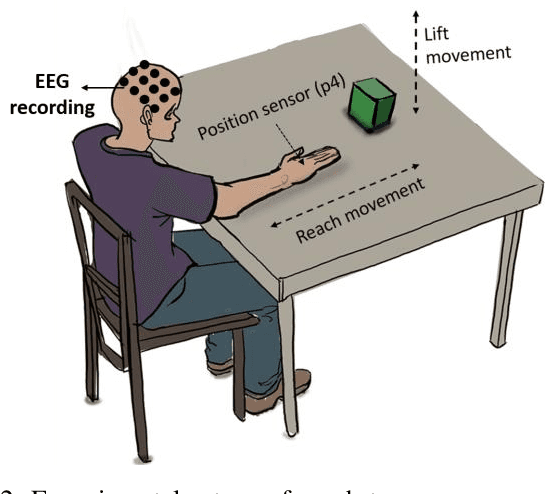

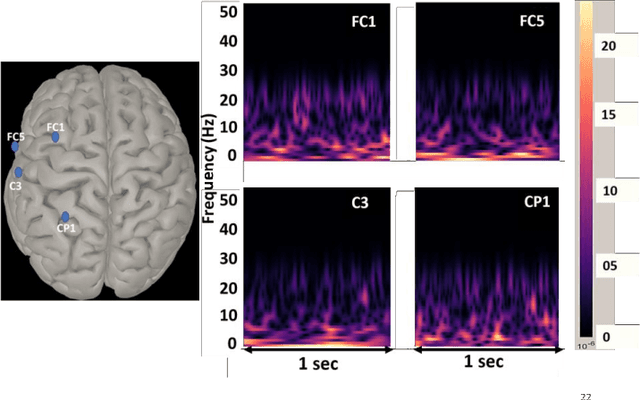

The ability to reconstruct the kinematic parameters of hand movement from non-invasive electroencephalography (EEG) is essential for strength and endurance augmentation using an exosuit or exoskeleton. In system development, the conventional classification-based brain-computer interface (BCI) controls external devices by providing discrete control signals to the actuator. Continuous kinematic reconstruction from the EEG signal is better suited to practical BCI applications. The state-of-the-art multi-variable linear regression (mLR) method provides a continuous estimate of hand kinematics, achieving a maximum correlation of up to 0.67 between the measured and the estimated hand trajectory. In this work, three novel source-aware deep learning models are proposed for motion trajectory prediction (MTP): a multilayer perceptron (MLP), a convolutional neural network with long short-term memory (CNN-LSTM), and a wavelet packet decomposition (WPD) CNN-LSTM. An additional novelty of the work is the use of brain source localization (using sLORETA) for reliable decoding of motor intention mapping (channel selection) and accurate EEG time segment selection. The performance of the proposed models is compared with the traditionally used mLR technique on the real grasp and lift (GAL) dataset. The effectiveness of the proposed framework is established using the Pearson correlation coefficient and trajectory analysis. A significant improvement in the correlation coefficient is observed compared with the state-of-the-art mLR model. Our work bridges the gap between the control and the actuator block, enabling real-time BCI implementation.
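To make the mLR baseline and the evaluation metric concrete, the following is a minimal sketch of lagged linear regression scored with the Pearson correlation coefficient. It is not the authors' implementation: the data are synthetic rather than taken from the GAL dataset, and the function names (`lagged_features`, `fit_mlr`, `predict_mlr`, `pearson_r`), the number of lags, the channel count, and the train/test split are all assumptions chosen for illustration.

```python
import numpy as np


def lagged_features(eeg, n_lags):
    """Stack the current sample and the previous n_lags samples of each channel.

    eeg: array of shape (n_samples, n_channels)
    returns: array of shape (n_samples - n_lags, n_channels * (n_lags + 1))
    """
    n_samples = eeg.shape[0]
    cols = [eeg[n_lags - k : n_samples - k] for k in range(n_lags + 1)]
    return np.hstack(cols)


def fit_mlr(X, y):
    """Ordinary least squares fit with an intercept (first weight)."""
    X1 = np.hstack([np.ones((X.shape[0], 1)), X])
    w, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return w


def predict_mlr(w, X):
    """Apply the fitted weights (intercept first) to a feature matrix."""
    X1 = np.hstack([np.ones((X.shape[0], 1)), X])
    return X1 @ w


def pearson_r(a, b):
    """Pearson correlation coefficient between two 1-D arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float(a @ b / np.sqrt((a @ a) * (b @ b)))


# Synthetic stand-in for EEG and one hand-position coordinate (assumption:
# the trajectory is a noisy linear function of the lagged channels).
rng = np.random.default_rng(0)
eeg = rng.standard_normal((2000, 4))      # 2000 samples, 4 channels
X = lagged_features(eeg, n_lags=3)        # shape (1997, 16)
true_w = rng.standard_normal(X.shape[1])
y = X @ true_w + 0.1 * rng.standard_normal(X.shape[0])

# Chronological split: fit on the first part, evaluate on the held-out rest.
split = 1500
w = fit_mlr(X[:split], y[:split])
y_hat = predict_mlr(w, X[split:])
r_test = pearson_r(y[split:], y_hat)
```

Here `r_test` plays the role of the correlation between the measured and the estimated trajectory. On real EEG the relationship is far noisier than in this synthetic setup, so values from this sketch are not comparable to those reported for the GAL dataset.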
