Anam Zahid

FairUDT: Fairness-aware Uplift Decision Trees

Feb 03, 2025

Abstract: Training data used for developing machine learning classifiers can exhibit biases against specific protected attributes. Such biases typically originate from historical discrimination or certain underlying patterns that disproportionately under-represent minority groups, such as those identified by their gender, religion, or race. In this paper, we propose a novel approach, FairUDT, a fairness-aware Uplift-based Decision Tree for discrimination identification. FairUDT demonstrates how the integration of uplift modeling with decision trees can be adapted to include fair splitting criteria. Additionally, we introduce a modified leaf relabeling approach for removing discrimination. We divide our dataset into favored and deprived groups based on a binary sensitive attribute, with the favored dataset serving as the treatment group and the deprived dataset as the control group. By applying FairUDT and our leaf relabeling approach to preprocess three benchmark datasets, we achieve an acceptable accuracy-discrimination tradeoff. We also show that FairUDT is inherently interpretable and can be utilized in discrimination detection tasks. The code for this project is available at https://github.com/ara-25/FairUDT

* Published in Knowledge-based Systems (2025)
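The favored/deprived framing in the FairUDT abstract can be illustrated with a minimal sketch. It is not the authors' implementation or their splitting criterion: it only computes the gap in positive-outcome rates between a favored (treatment) group and a deprived (control) group, which is the basic quantity uplift modeling compares. The function names `positive_rate` and `group_uplift` and the toy data are hypothetical, introduced here for illustration only.

```python
from typing import Sequence


def positive_rate(labels: Sequence[int]) -> float:
    """Fraction of positive (1) labels; 0.0 for an empty group."""
    return sum(labels) / len(labels) if labels else 0.0


def group_uplift(labels: Sequence[int], favored: Sequence[bool]) -> float:
    """Positive rate of the favored (treatment) group minus that of the
    deprived (control) group. Hypothetical helper, not FairUDT's criterion."""
    fav = [y for y, f in zip(labels, favored) if f]
    dep = [y for y, f in zip(labels, favored) if not f]
    return positive_rate(fav) - positive_rate(dep)


# Toy data (assumed): binary outcome and a binary sensitive attribute.
labels = [1, 1, 1, 0, 1, 0, 0, 0]
favored = [True, True, True, True, False, False, False, False]

gap = group_uplift(labels, favored)
print(f"favored-minus-deprived positive rate: {gap:.2f}")
```

A gap near zero would suggest similar outcome rates for both groups in this subset of the data; a large gap flags a region worth inspecting for discrimination.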

ViLBias: A Framework for Bias Detection using Linguistic and Visual Cues

Dec 22, 2024

Abstract: The integration of Large Language Models (LLMs) and Vision-Language Models (VLMs) opens new avenues for addressing complex challenges in multimodal content analysis, particularly in biased news detection. This study introduces ViLBias, a framework that leverages state-of-the-art LLMs and VLMs to detect linguistic and visual biases in news content, addressing the limitations of traditional text-only approaches. Our contributions include a novel dataset pairing textual content with accompanying visuals from diverse news sources and a hybrid annotation framework, combining LLM-based annotations with human review to enhance quality while reducing costs and improving scalability. We evaluate the efficacy of LLMs and VLMs in identifying biases, revealing their strengths in detecting subtle framing and text-visual inconsistencies. Empirical analysis demonstrates that incorporating visual cues alongside text enhances bias detection accuracy by 3 to 5%, showcasing the complementary strengths of LLMs in generative reasoning and Small Language Models (SLMs) in classification. This study offers a comprehensive exploration of LLMs and VLMs as tools for detecting multimodal biases in news content, highlighting both their potential and limitations. Our research paves the way for more robust, scalable, and nuanced approaches to media bias detection, contributing to the broader field of natural language processing and multimodal analysis. (The data and code will be made available for research purposes.)

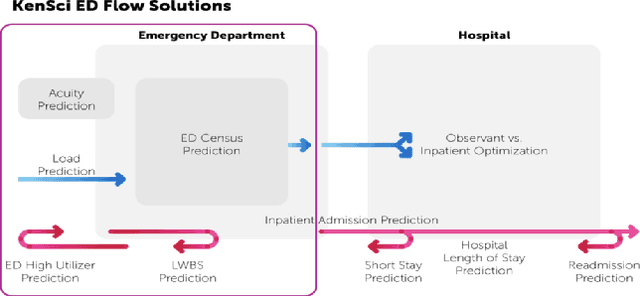

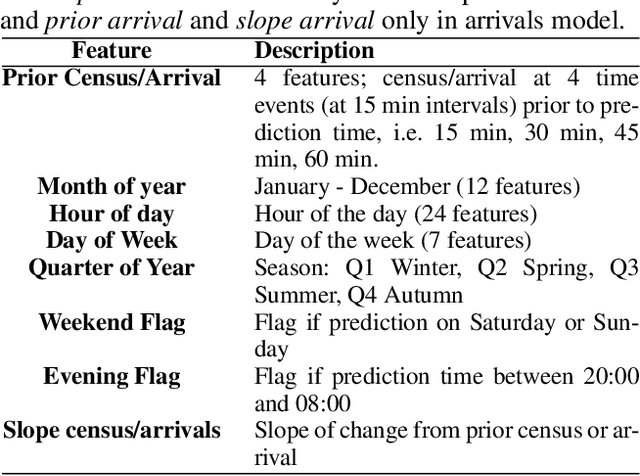

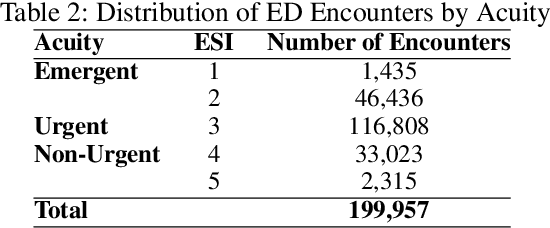

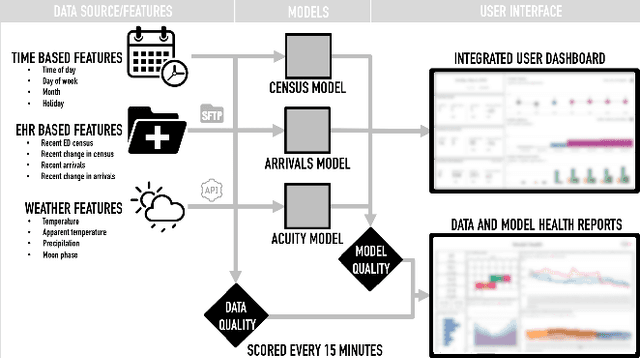

Emergency Department Optimization and Load Prediction in Hospitals

Feb 06, 2021

Abstract: Over the past several years, across the globe, there has been an increase in people seeking care in emergency departments (EDs). ED resources, including nurse staffing, are strained by such increases in patient volume. Accurate forecasting of incoming patient volume in EDs is crucial for efficient utilization and allocation of ED resources. Working with a suburban ED in the Pacific Northwest, we developed a tool powered by machine learning models to forecast ED arrivals and ED patient volume to assist end-users, such as ED nurses, in resource allocation. In this paper, we discuss the results from our predictive models, the challenges, and the learnings from users' experiences with the tool in active clinical deployment in a real-world setting.
