Allison Stevens

Robust joint registration of multiple stains and MRI for multimodal 3D histology reconstruction: Application to the Allen human brain atlas

May 04, 2021

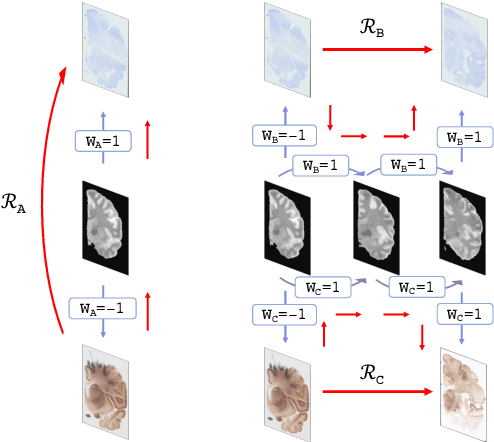

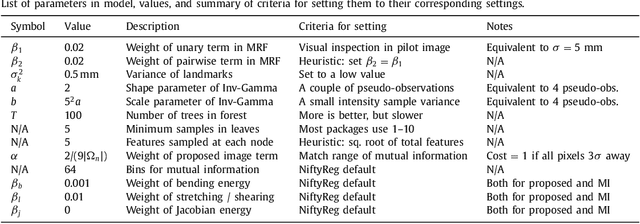

Abstract: Joint registration of a stack of 2D histological sections to recover 3D structure (3D histology reconstruction) finds application in areas such as atlas building and validation of in vivo imaging. Straightforward pairwise registration of neighbouring sections yields smooth reconstructions but has well-known problems such as the banana effect (straightening of curved structures) and z-shift (drift). While these problems can be alleviated with an external, linearly aligned reference (e.g., magnetic resonance images), registration is often inaccurate due to contrast differences and the strong nonlinear distortion of the tissue, including artefacts such as folds and tears. In this paper, we present a probabilistic model of spatial deformation that yields reconstructions for multiple histological stains that are jointly smooth, robust to outliers, and follow the reference shape. The model relies on a spanning tree of latent transforms connecting all the sections and slices, and assumes that the registration between any pair of images can be seen as a noisy version of the composition of (possibly inverted) latent transforms connecting the two images. Bayesian inference is used to compute the most likely latent transforms given a set of pairwise registrations between image pairs within and across modalities. Results on synthetic deformations on multiple MR modalities show that our method can accurately and robustly register multiple contrasts even in the presence of outliers. The 3D histology reconstruction of two stains (Nissl and parvalbumin) from the Allen human brain atlas shows its benefits on real data with severe distortions. We also provide the correspondence to MNI space, bridging the gap between two of the most used atlases in histology and MRI. Data is available at https://openneuro.org/datasets/ds003590 and code at https://github.com/acasamitjana/3dhirest.
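The idea of recovering latent transforms from redundant pairwise registrations can be illustrated with a deliberately simplified toy. The sketch below is not the authors' implementation (see the repository linked in the abstract for that). It assumes every transform is a scalar translation, so that composition reduces to addition and inversion to negation, and it assumes the spanning tree is a simple chain in which latent transform `n` maps image `n` to image `n + 1`. The function names `build_design_matrix` and `solve_latent` are hypothetical, and the robust option uses iteratively reweighted least squares to approximate an L1 fit, which is one common way to reduce the influence of outliers rather than the inference scheme described in the paper.

```python
import numpy as np


def build_design_matrix(pairs, n_latent):
    """Encode which latent transforms are composed for each image pair.

    Assumption: latent transform n maps image n to image n + 1 (a chain).
    Going from image i to image j > i composes transforms i..j-1 (+1 entries);
    going backwards uses their inverses (-1 entries).
    """
    W = np.zeros((len(pairs), n_latent))
    for k, (i, j) in enumerate(pairs):
        if i < j:
            W[k, i:j] = 1.0
        else:
            W[k, j:i] = -1.0
    return W


def solve_latent(W, R, robust=False, n_iter=100, eps=1e-6):
    """Estimate latent translations T from observed pairwise translations R.

    robust=False: ordinary least squares (Gaussian noise assumption).
    robust=True: iteratively reweighted least squares approximating an
    L1 fit, which down-weights observations with large residuals.
    """
    T, *_ = np.linalg.lstsq(W, R, rcond=None)
    if not robust:
        return T
    for _ in range(n_iter):
        resid = np.abs(R - W @ T)
        sw = np.sqrt(1.0 / np.maximum(resid, eps))  # square root of L1 weights
        T, *_ = np.linalg.lstsq(W * sw[:, None], R * sw, rcond=None)
    return T


if __name__ == "__main__":
    n_images = 5
    # Register each image to its next three neighbours (redundant observations).
    pairs = [(i, j) for i in range(n_images)
             for j in range(i + 1, min(i + 4, n_images))]
    W = build_design_matrix(pairs, n_images - 1)

    T_true = np.array([1.0, -2.0, 0.5, 3.0])
    R = W @ T_true
    R[0] += 50.0  # simulate one grossly failed pairwise registration

    print("least squares:", solve_latent(W, R))
    print("robust (IRLS):", solve_latent(W, R, robust=True))
```

In this toy, the redundancy among pairwise observations is what lets the robust fit discount the single failed registration; with only neighbour-to-neighbour pairs there would be nothing to contradict the outlier.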

Joint registration and synthesis using a probabilistic model for alignment of MRI and histological sections

Jan 16, 2018

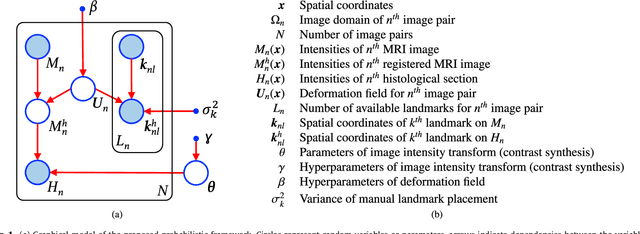

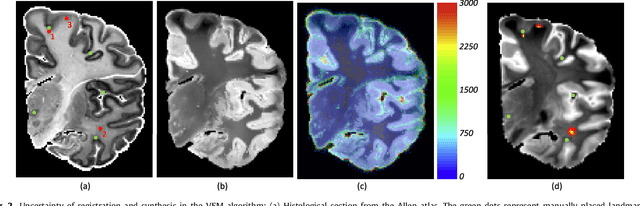

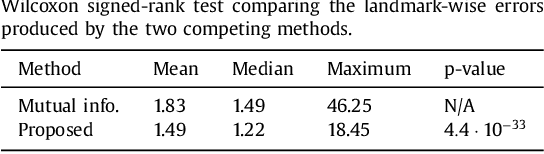

Abstract: Nonlinear registration of 2D histological sections with corresponding slices of MRI data is a critical step of 3D histology reconstruction. This task is difficult due to the large differences in image contrast and resolution, as well as the complex nonrigid distortions produced when sectioning the sample and mounting it on the glass slide. It has been shown in brain MRI registration that better spatial alignment across modalities can be obtained by synthesizing one modality from the other and then using intra-modality registration metrics, rather than by using mutual information (MI) as the metric. However, such an approach typically requires a database of aligned images from the two modalities, which is very difficult to obtain for histology/MRI. Here, we overcome this limitation with a probabilistic method that simultaneously solves for registration and synthesis directly on the target images, without any training data. In our model, the MRI slice is assumed to be a contrast-warped, spatially deformed version of the histological section. We use approximate Bayesian inference to iteratively refine the probabilistic estimate of the synthesis and the registration, while accounting for each other's uncertainty. Moreover, manually placed landmarks can be seamlessly integrated in the framework for increased performance. Experiments on a synthetic dataset show that, compared with MI, the proposed method makes it possible to use a much more flexible deformation model in the registration to improve its accuracy, without compromising robustness. Moreover, our framework also exploits information in manually placed landmarks more efficiently than MI, since landmarks inform both synthesis and registration, as opposed to registration alone. Finally, we show qualitative results on the public Allen atlas, in which the proposed method provides a clear improvement over MI-based registration.
