Aljaz Kramberger

A General Peg-in-Hole Assembly Policy Based on Domain Randomized Reinforcement Learning

Apr 05, 2025

Abstract:Generalization is important for peg-in-hole assembly, a fundamental industrial operation, to adapt to dynamic industrial scenarios and enhance manufacturing efficiency. While prior work has enhanced generalization ability for pose variations, spatial generalization to six degrees of freedom (6-DOF) is less researched, limiting application in real-world scenarios. This paper addresses this limitation by developing a general policy, GenPiH, using Proximal Policy Optimization (PPO) and dynamic simulation with domain randomization. The policy learning experiment demonstrates the policy's generalization ability with a nearly 100% insertion success rate across over eight thousand unique hole poses in parallel environments, and sim-to-real validation on a UR10e robot confirms the policy's performance through direct trajectory execution without task-specific tuning.

Robotic Task Success Evaluation Under Multi-modal Non-Parametric Object Pose Uncertainty

Mar 16, 2024

Abstract:Accurate 6D object pose estimation is essential for various robotic tasks. Uncertain pose estimates can lead to task failures; however, a certain degree of error in the pose estimates is often acceptable. Hence, by quantifying errors in the object pose estimate and acceptable errors for task success, robots can make informed decisions. This is a challenging problem as both the object pose uncertainty and acceptable error for the robotic task are often multi-modal and cannot be parameterized with commonly used uni-modal distributions. In this paper, we introduce a framework for evaluating robotic task success under object pose uncertainty, representing both the estimated error space of the object pose and the acceptable error space for task success using multi-modal non-parametric probability distributions. The proposed framework pre-computes the acceptable error space for task success using dynamic simulations and subsequently integrates the pre-computed acceptable error space over the estimated error space of the object pose to predict the likelihood of the task success. We evaluated the proposed framework on two mobile manipulation tasks. Our results show that by representing the estimated and the acceptable error space using multi-modal non-parametric distributions, we achieve higher task success rates and fewer failures.
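
The integration step described in this abstract can be illustrated with a small Monte Carlo sketch. This is not the paper's implementation: it assumes a one-dimensional pose error, a hypothetical table of simulated outcomes (`sim_errors`, `sim_success`), and a k-nearest-neighbour lookup as the non-parametric representation. All function names are invented for illustration.

```python
import random


def local_success_rate(sim_errors, sim_success, query, k=5):
    """Non-parametric (k-nearest-neighbour) success estimate at one pose error."""
    ranked = sorted(range(len(sim_errors)), key=lambda i: abs(sim_errors[i] - query))
    nearest = ranked[:k]
    return sum(1.0 for i in nearest if sim_success[i]) / len(nearest)


def predict_task_success(pose_error_samples, sim_errors, sim_success, k=5):
    """Average the pre-computed success rate over samples of the estimated pose error."""
    rates = [local_success_rate(sim_errors, sim_success, e, k) for e in pose_error_samples]
    return sum(rates) / len(rates)


# Hypothetical pre-computed acceptable error space: the task succeeds in two
# separate error regions, so the acceptable region is multi-modal.
sim_errors = [i / 10.0 for i in range(-30, 31)]
sim_success = [abs(e) < 0.5 or abs(e - 2.0) < 0.3 for e in sim_errors]

# Hypothetical multi-modal pose estimate: a mixture of two Gaussian error modes.
rng = random.Random(0)
pose_error_samples = [
    rng.gauss(0.0, 0.2) if rng.random() < 0.7 else rng.gauss(2.0, 0.2)
    for _ in range(2000)
]

if __name__ == "__main__":
    print(predict_task_success(pose_error_samples, sim_errors, sim_success))
```

Averaging over samples avoids fitting a uni-modal distribution to either error space; a real system would work with full 6D pose errors and outcomes from dynamic simulation.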

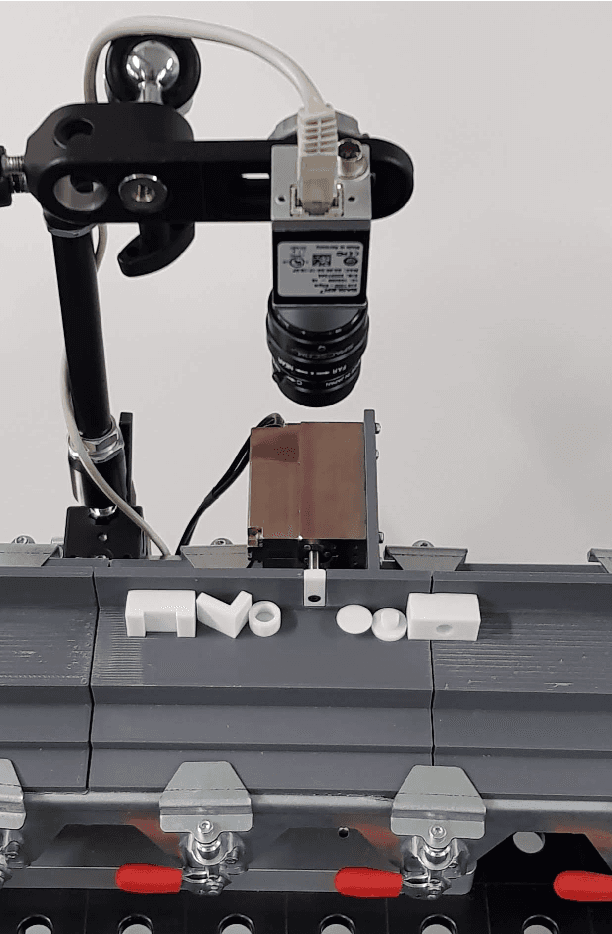

A Flexible and Robust Vision Trap for Automated Part Feeder Design

Jun 01, 2022

Abstract:Fast, robust, and flexible part feeding is essential for enabling automation of low volume, high variance assembly tasks. An actuated vision-based solution on a traditional vibratory feeder, referred to here as a vision trap, should in principle be able to meet these demands for a wide range of parts. However, in practice, the flexibility of such a trap is limited as an expert is needed to both identify manageable tasks and to configure the vision system. We propose a novel approach to vision trap design in which the identification of manageable tasks is automatic and the configuration of these tasks can be delegated to an automated feeder design system. We show that the trap's capabilities can be formalized in such a way that it integrates seamlessly into the ecosystem of automated feeder design. Our results on six canonical parts show great promise for autonomous configuration of feeder systems.

Dynamic Movement Primitives in Robotics: A Tutorial Survey

Feb 07, 2021

Abstract:Biological systems, including human beings, have the innate ability to perform complex tasks in a versatile and agile manner. Researchers in sensorimotor control have tried to understand and formally define this innate property. The idea, supported by several experimental findings, that biological systems are able to combine and adapt basic units of motion into complex tasks finally led to the formulation of the motor primitives theory. In this respect, Dynamic Movement Primitives (DMPs) represent an elegant mathematical formulation of the motor primitives as stable dynamical systems, and are well suited to generate motor commands for artificial systems like robots. In the last decades, DMPs have inspired researchers in different robotic fields including imitation and reinforcement learning, optimal control, physical interaction, and human-robot co-working, resulting in a considerable number of published papers. The goal of this tutorial survey is two-fold. On one side, we present the existing DMP formulations in rigorous mathematical terms, and discuss advantages and limitations of each approach as well as practical implementation details. In the tutorial vein, we also search for existing implementations of presented approaches and release several others. On the other side, we provide a systematic and comprehensive review of existing literature and categorize state-of-the-art work on DMPs. The paper concludes with a discussion on the limitations of DMPs and an outline of possible research directions.
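
To make the "stable dynamical system" idea concrete, here is a minimal sketch of one commonly used discrete DMP formulation: a critically damped spring-damper transformation system driven by a phase-dependent forcing term. The gains, the basis-width heuristic, and the function name `dmp_rollout` are assumptions for illustration and are not taken from the survey.

```python
import math


def dmp_rollout(y0, g, weights=None, tau=1.0, dt=0.001, duration=1.0,
                alpha_z=25.0, alpha_x=4.0):
    """Integrate a one-dimensional discrete DMP with explicit Euler steps.

    tau * dz = alpha_z * (beta_z * (g - y) - z) + f(x)
    tau * dy = z
    tau * dx = -alpha_x * x
    """
    beta_z = alpha_z / 4.0  # critical damping of the spring-damper part
    weights = list(weights) if weights else []
    n = len(weights)
    # Gaussian basis centres spread along the decaying phase variable x.
    centers = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    widths = [n ** 1.5 / c for c in centers]  # width heuristic (assumption)

    y, z, x = y0, 0.0, 1.0
    trajectory = [y]
    for _ in range(int(round(duration / dt))):
        psi = [math.exp(-h * (x - c) ** 2) for c, h in zip(centers, widths)]
        total = sum(psi)
        forcing = 0.0
        if total > 1e-12:
            forcing = sum(p * w for p, w in zip(psi, weights)) / total * x * (g - y0)
        dz = (alpha_z * (beta_z * (g - y) - z) + forcing) / tau
        dy = z / tau
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        trajectory.append(y)
    return trajectory


if __name__ == "__main__":
    path = dmp_rollout(y0=0.0, g=1.0)
    print(path[-1])  # ends near the goal g when the forcing term is zero
```

With no weights the forcing term is zero and the system simply converges to the goal `g`. Fitting the weights to a demonstration changes the shape of the motion while keeping that convergence, because the forcing term is scaled by the decaying phase `x`.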
