Thorbjørn Mosekjær Iversen

Global Optimization of Stochastic Black-Box Functions with Arbitrary Noise Distributions using Wilson Score Kernel Density Estimation

Sep 11, 2025

Abstract: Many optimization problems in robotics involve the optimization of time-expensive black-box functions, such as those involving complex simulations or evaluation of real-world experiments. Furthermore, these functions are often stochastic, as repeated experiments are subject to unmeasurable disturbances. Bayesian optimization can be used to optimize such functions efficiently by deploying a probabilistic function estimator that estimates the function with a given confidence, so that regions of the search space can be pruned away. Consequently, the success of Bayesian optimization depends on the function estimator's ability to provide informative confidence bounds. Existing function estimators require many function evaluations to infer the underlying confidence or depend on modeling of the disturbances. In this paper, it is shown that the confidence bounds provided by the Wilson Score Kernel Density Estimator (WS-KDE) are applicable as excellent bounds to any stochastic function with an output confined to the closed interval [0, 1], regardless of the distribution of the output. This finding opens up the use of WS-KDE for stable global optimization on a wider range of cost functions. The properties of WS-KDE in the context of Bayesian optimization are demonstrated in simulation and applied to the problem of automated trap design for vibrational part feeders.
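For readers unfamiliar with the Wilson score, the following is a minimal sketch of the classical Wilson score interval for a binomial proportion, which is the kind of confidence bound the abstract refers to. It is not the WS-KDE estimator from the paper: the kernel weighting over the search space is not shown, and the function name `wilson_interval` and the default `z = 1.96` (roughly 95% confidence) are assumptions made for illustration.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Classical Wilson score interval for a proportion.

    successes: number (or weighted sum) of positive outcomes, 0 <= successes <= n
    n:         number (or weighted sum) of trials
    z:         standard-normal quantile (1.96 is roughly 95% confidence; assumed)
    """
    if n <= 0:
        # No data: the only safe bounds on a [0, 1] output are trivial ones.
        return 0.0, 1.0
    p_hat = successes / n
    denom = 1.0 + z * z / n
    center = (p_hat + z * z / (2.0 * n)) / denom
    half = (z / denom) * math.sqrt(p_hat * (1.0 - p_hat) / n + z * z / (4.0 * n * n))
    # Clamp to [0, 1] to guard against floating-point overshoot.
    return max(0.0, center - half), min(1.0, center + half)


lower, upper = wilson_interval(5, 10)
print(f"5/10 successes: [{lower:.4f}, {upper:.4f}]")
```

In an optimization loop, a region whose upper bound falls below the best lower bound found so far could be pruned; how WS-KDE combines such bounds with kernel-weighted samples is described in the paper itself.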

Robotic Task Success Evaluation Under Multi-modal Non-Parametric Object Pose Uncertainty

Mar 16, 2024

Abstract: Accurate 6D object pose estimation is essential for various robotic tasks. Uncertain pose estimates can lead to task failures; however, a certain degree of error in the pose estimates is often acceptable. Hence, by quantifying errors in the object pose estimate and acceptable errors for task success, robots can make informed decisions. This is a challenging problem as both the object pose uncertainty and acceptable error for the robotic task are often multi-modal and cannot be parameterized with commonly used uni-modal distributions. In this paper, we introduce a framework for evaluating robotic task success under object pose uncertainty, representing both the estimated error space of the object pose and the acceptable error space for task success using multi-modal non-parametric probability distributions. The proposed framework pre-computes the acceptable error space for task success using dynamic simulations and subsequently integrates the pre-computed acceptable error space over the estimated error space of the object pose to predict the likelihood of the task success. We evaluated the proposed framework on two mobile manipulation tasks. Our results show that by representing the estimated and the acceptable error space using multi-modal non-parametric distributions, we achieve higher task success rates and fewer failures.
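The integration step described in this abstract can be illustrated with a small sketch. It assumes the estimated pose error is available as weighted samples and the pre-computed acceptable error space as a membership test; the function name `task_success_probability`, the one-dimensional error, and the toy numbers are all hypothetical and are not taken from the paper.

```python
def task_success_probability(error_samples, weights, is_acceptable):
    """Weighted fraction of pose-error samples that fall in the acceptable set.

    error_samples: samples from the estimated pose-error distribution
    weights:       non-negative sample weights (need not be normalized)
    is_acceptable: callable returning True if an error still lets the task succeed
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    accepted = sum(w for e, w in zip(error_samples, weights) if is_acceptable(e))
    return accepted / total


# Toy bimodal 1D error distribution: one mode near 0.0, one near 0.2 (made-up values).
samples = [-0.02, 0.0, 0.01, 0.20, 0.21]
weights = [0.1, 0.3, 0.2, 0.2, 0.2]

# Hypothetical acceptable error space: the task succeeds if |error| <= 0.05.
p_success = task_success_probability(samples, weights, lambda e: abs(e) <= 0.05)
print(f"Predicted task success probability: {p_success:.2f}")
```

Because both quantities are represented non-parametrically, the second mode is counted as a likely failure and is not averaged away as it would be under a single Gaussian fit.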

Fixture calibration with guaranteed bounds from a few correspondence-free surface points

Mar 04, 2024

Abstract: Calibration of fixtures in robotic work cells is essential but also time-consuming and error-prone, and poor calibration can easily lead to wasted debugging time in downstream tasks. Contact-based calibration methods let the user measure points on the fixture's surface with a tool tip attached to the robot's end effector. Most such methods require the user to manually annotate correspondences on the CAD model; however, this is error-prone and makes for a cumbersome user experience. We propose a correspondence-free alternative: The user simply measures a few points from the fixture's surface, and our method provides a tight superset of the poses which could explain the measured points. This naturally detects ambiguities related to symmetry and uninformative points and conveys this uncertainty to the user. Perhaps more importantly, it provides guaranteed bounds on the pose. The computation of such bounds is made tractable by the use of a hierarchical grid on SE(3). Our method is evaluated both in simulation and on a real collaborative robot, showing great potential for easier and less error-prone fixture calibration. Project page at https://sites.google.com/view/ttpose

SpyroPose: Importance Sampling Pyramids for Object Pose Distribution Estimation in SE(3)

Mar 09, 2023

Abstract: Object pose estimation is a core computer vision problem and often an essential component in robotics. Pose estimation is usually approached by seeking the single best estimate of an object's pose, but this approach is ill-suited for tasks involving visual ambiguity. In such cases it is desirable to estimate the uncertainty as a pose distribution to allow downstream tasks to make informed decisions. Pose distributions can have arbitrary complexity, which motivates estimating unparameterized distributions; however, until now they have only been used for orientation estimation on SO(3) due to the difficulty in training on and normalizing over SE(3). We propose a novel method for pose distribution estimation on SE(3). We use a hierarchical grid, a pyramid, which enables efficient importance sampling during training and sparse evaluation of the pyramid at inference, allowing real-time 6D pose distribution estimation. Our method outperforms state-of-the-art methods on SO(3), and to the best of our knowledge, we provide the first quantitative results on pose distribution estimation on SE(3). Code will be available at spyropose.github.io

Multi-view object pose estimation from correspondence distributions and epipolar geometry

Oct 03, 2022

Abstract: In many automation tasks involving manipulation of rigid objects, the poses of the objects must be acquired. Vision-based pose estimation using a single RGB or RGB-D sensor is especially popular due to its broad applicability. However, single-view pose estimation is inherently limited by depth ambiguity and ambiguities imposed by various phenomena like occlusion, self-occlusion, reflections, etc. Aggregation of information from multiple views can potentially resolve these ambiguities, but the current state-of-the-art multi-view pose estimation method only uses multiple views to aggregate single-view pose estimates, and thus relies on obtaining good single-view estimates. We present a multi-view pose estimation method which aggregates learned 2D-3D distributions from multiple views for both the initial estimate and optional refinement. Our method performs probabilistic sampling of 3D-3D correspondences under epipolar constraints using learned 2D-3D correspondence distributions which are implicitly trained to respect visual ambiguities such as symmetry. Evaluation on the T-LESS dataset shows that our method reduces pose estimation errors by 80-91% compared to the best single-view method, and we present state-of-the-art results on T-LESS with four views, even compared with methods using five and eight views.

Ki-Pode: Keypoint-based Implicit Pose Distribution Estimation of Rigid Objects

Sep 20, 2022

Abstract: The estimation of 6D poses of rigid objects is a fundamental problem in computer vision. Traditionally, pose estimation is concerned with the determination of a single best estimate. However, a single estimate is unable to express visual ambiguity, which in many cases is unavoidable due to object symmetries or occlusion of identifying features. Inability to account for ambiguities in pose can lead to failure in subsequent methods, which is unacceptable when the cost of failure is high. Estimates of full pose distributions are, contrary to single estimates, well suited for expressing uncertainty on pose. Motivated by this, we propose a novel pose distribution estimation method. An implicit formulation of the probability distribution over object pose is derived from an intermediary representation of an object as a set of keypoints. This ensures that the pose distribution estimates have a high level of interpretability. Furthermore, our method is based on conservative approximations, which lead to reliable estimates. The method has been evaluated on the task of rotation distribution estimation on the YCB-V and T-LESS datasets and performs reliably on all objects.

Self-supervised deep visual servoing for high precision peg-in-hole insertion

Jun 17, 2022

Abstract: Many industrial assembly tasks involve peg-in-hole-like insertions with sub-millimeter tolerances, which are challenging even in highly calibrated robot cells. Visual servoing can be employed to increase the robustness towards uncertainties in the system; however, state-of-the-art methods either rely on accurate 3D models for synthetic renderings or manual involvement in acquisition of training data. We present a novel self-supervised visual servoing method for high precision peg-in-hole insertion, which is fully automated and does not rely on synthetic data. We demonstrate its applicability for insertion of electronic components into a printed circuit board with tight tolerances. We show that peg-in-hole insertion can be drastically sped up by preceding a robust but slow force-based insertion strategy with our proposed visual servoing method, the configuration of which is fully autonomous.

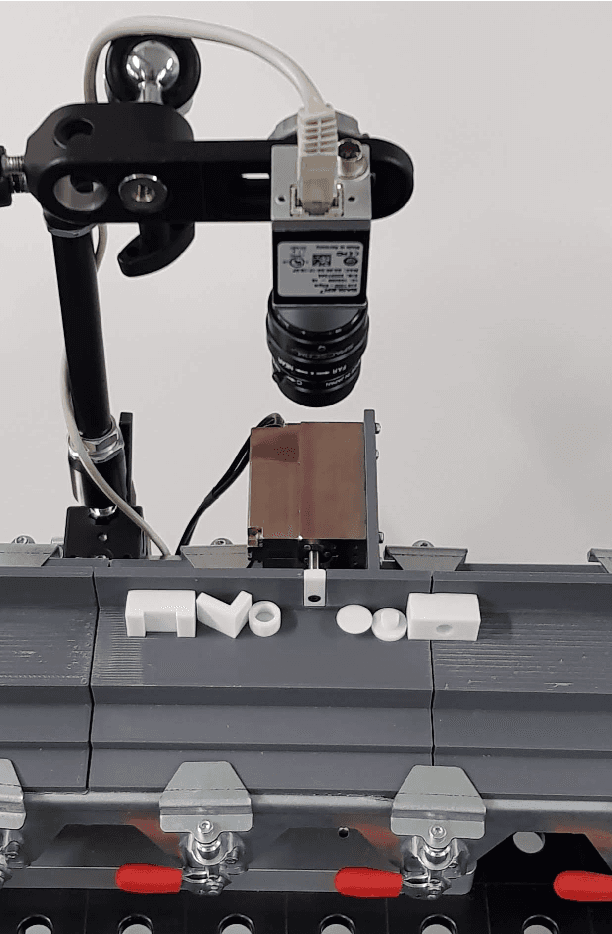

A Flexible and Robust Vision Trap for Automated Part Feeder Design

Jun 01, 2022

Abstract: Fast, robust, and flexible part feeding is essential for enabling automation of low-volume, high-variance assembly tasks. An actuated vision-based solution on a traditional vibratory feeder, referred to here as a vision trap, should in principle be able to meet these demands for a wide range of parts. However, in practice, the flexibility of such a trap is limited as an expert is needed to both identify manageable tasks and to configure the vision system. We propose a novel approach to vision trap design in which the identification of manageable tasks is automatic and the configuration of these tasks can be delegated to an automated feeder design system. We show that the trap's capabilities can be formalized in such a way that it integrates seamlessly into the ecosystem of automated feeder design. Our results on six canonical parts show great promise for autonomous configuration of feeder systems.
