A Flexible and Robust Vision Trap for Automated Part Feeder Design

Jun 01, 2022

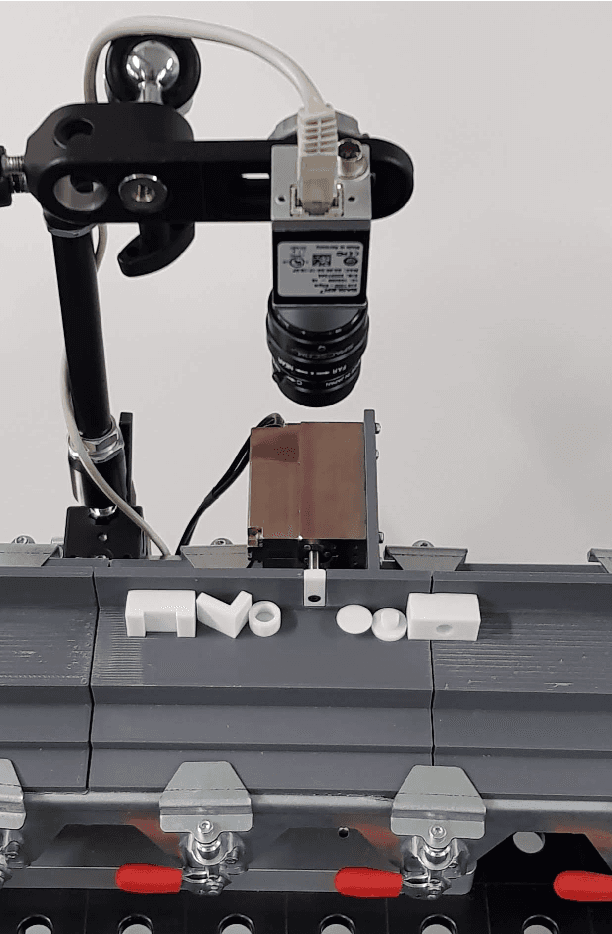

Fast, robust, and flexible part feeding is essential for automating low-volume, high-variance assembly tasks. An actuated vision-based solution on a traditional vibratory feeder, referred to here as a vision trap, should in principle be able to meet these demands for a wide range of parts. In practice, however, the flexibility of such a trap is limited because an expert is needed both to identify manageable tasks and to configure the vision system. We propose a novel approach to vision trap design in which manageable tasks are identified automatically and their configuration can be delegated to an automated feeder design system. We show that the trap's capabilities can be formalized so that it integrates seamlessly into the ecosystem of automated feeder design. Our results on six canonical parts show great promise for autonomous configuration of feeder systems.
