Alex Zyner

End-to-End Velocity Estimation For Autonomous Racing

Mar 15, 2020

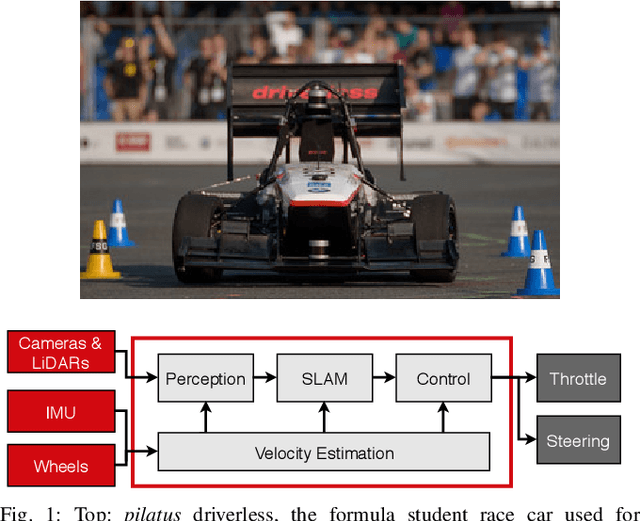

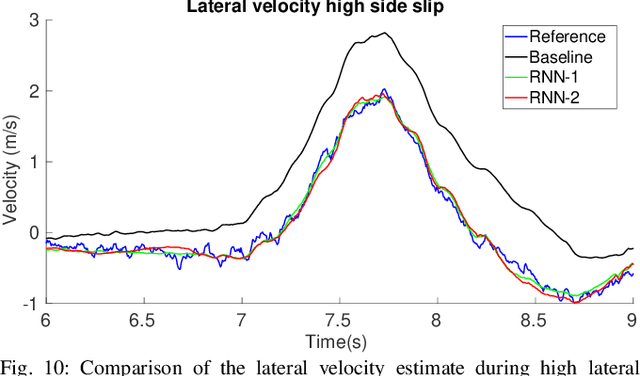

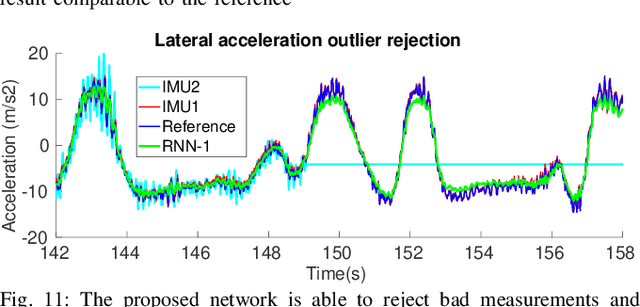

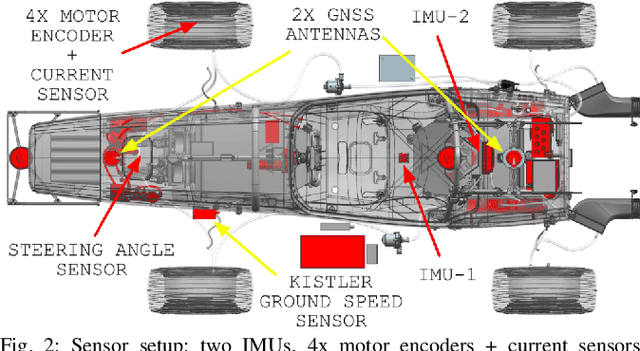

Abstract: Velocity estimation plays a central role in driverless vehicles, but standard and affordable methods struggle to cope with extreme scenarios such as aggressive maneuvers, where high sideslip is present. To solve this, autonomous race cars are usually equipped with expensive external velocity sensors. In this paper, we present an end-to-end recurrent neural network that takes the available raw sensor signals as input (IMU, wheel odometry, and motor currents) and outputs velocity estimates. The results are compared to two state-of-the-art Kalman filters, one that includes the expensive velocity sensors and one that excludes them. All methods have been extensively tested on a Formula Student driverless race car with very high sideslip ($10^\circ$ at the rear axle) and slip ratio (~20%), operating close to the limits of handling. The proposed network is able to estimate lateral velocity up to 15x better than the Kalman filter with the equivalent sensor input and matches (0.06 m/s RMSE) the Kalman filter with the expensive velocity sensor setup.
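The quantities named in the abstract (sideslip angle, slip ratio, and RMSE) can be made concrete with a minimal sketch. The function names below are hypothetical and are not taken from the paper; the slip ratio uses one common convention (normalizing by the longitudinal velocity), and other conventions exist.

```python
import math


def sideslip_angle_deg(v_x, v_y):
    """Sideslip angle in degrees at a point on the vehicle.

    v_x: longitudinal velocity at that point (m/s)
    v_y: lateral velocity at that point (m/s)
    """
    return math.degrees(math.atan2(v_y, v_x))


def slip_ratio(wheel_speed_rad_s, wheel_radius_m, v_x):
    """Longitudinal slip ratio, assuming the convention (omega * r - v_x) / v_x."""
    if v_x <= 0.0:
        raise ValueError("this convention assumes forward motion (v_x > 0)")
    return (wheel_speed_rad_s * wheel_radius_m - v_x) / v_x


def rmse(estimates, references):
    """Root-mean-square error between two equally long sequences."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))
```

For example, a wheel whose circumferential speed is 12 m/s while the car travels at 10 m/s has a slip ratio of 0.2 under this convention, which is the order of magnitude quoted in the abstract.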

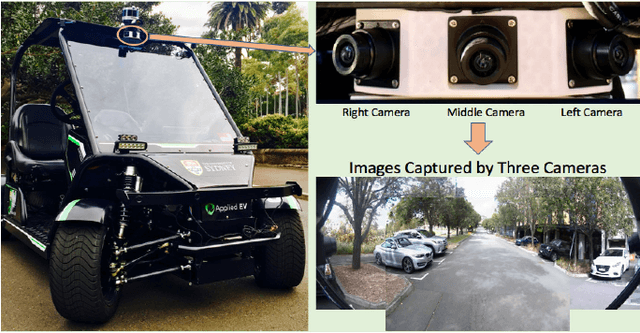

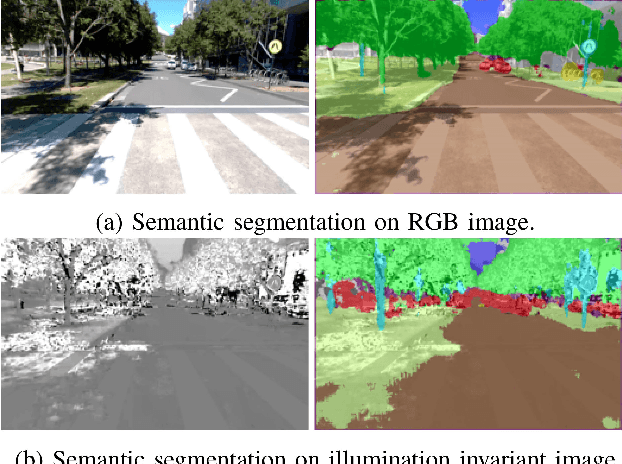

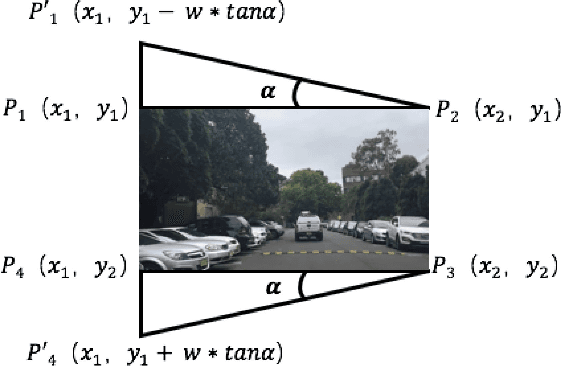

Adapting Semantic Segmentation Models for Changes in Illumination and Camera Perspective

Sep 13, 2018

Abstract: Semantic segmentation using deep neural networks has been widely explored to generate high-level contextual information for autonomous vehicles. To acquire a complete $180^\circ$ semantic understanding of the forward surroundings, we propose to stitch semantic images from multiple cameras with varying orientations. However, previously trained semantic segmentation models showed unacceptable performance after significant changes to the camera orientations and the lighting conditions. To avoid time-consuming hand labeling, we explore and evaluate the use of data augmentation techniques, specifically skew and gamma correction, from a practical real-world standpoint to extend the existing model and provide more robust performance. The experimental results show significant improvements under varying illumination and camera perspective changes.
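A minimal sketch of the two augmentations named in the abstract is given below. It is an illustration under stated assumptions, not the authors' pipeline: the skew is approximated by a simple horizontal shear, images are assumed to be `uint8` arrays, and the function names and the ignore label value `255` are hypothetical. The key point is that a geometric transform must be applied identically to the image and its label map, while gamma correction touches only the image.

```python
import numpy as np


def gamma_correct(image, gamma):
    """Apply gamma correction to a uint8 image: out = 255 * (in / 255) ** gamma."""
    normalized = image.astype(np.float64) / 255.0
    corrected = np.rint(255.0 * normalized ** gamma)
    return np.clip(corrected, 0, 255).astype(np.uint8)


def shear_horizontal(array, shear, fill):
    """Shift each row sideways in proportion to its distance from the centre row.

    Uses nearest-neighbour copying, so it is safe for integer label maps.
    Pixels shifted in from outside the frame are set to `fill`.
    """
    height, width = array.shape[:2]
    out = np.full_like(array, fill)
    cols = np.arange(width)
    for row in range(height):
        shift = int(round(shear * (row - (height - 1) / 2)))
        src = cols - shift
        valid = (src >= 0) & (src < width)
        out[row, cols[valid]] = array[row, src[valid]]
    return out


def augment(image, labels, gamma, shear, ignore_label=255):
    """Return an augmented (image, labels) pair with a shared geometric transform."""
    skewed_image = shear_horizontal(image, shear, fill=0)
    skewed_labels = shear_horizontal(labels, shear, fill=ignore_label)
    return gamma_correct(skewed_image, gamma), skewed_labels
```

Filling the uncovered label pixels with an ignore value keeps them out of the training loss, assuming the loss is configured to skip that value.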

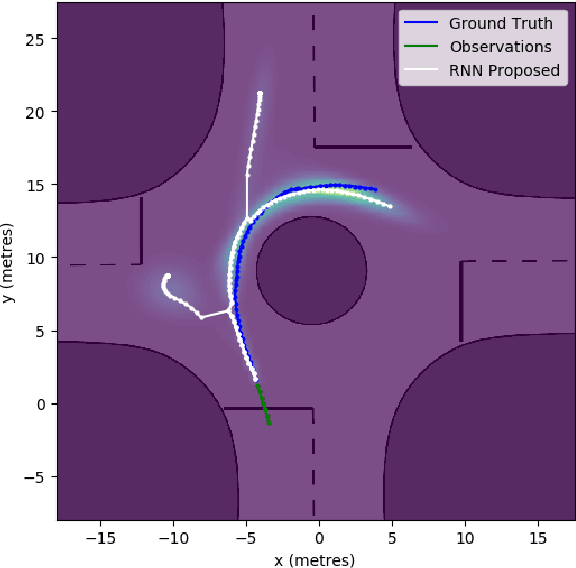

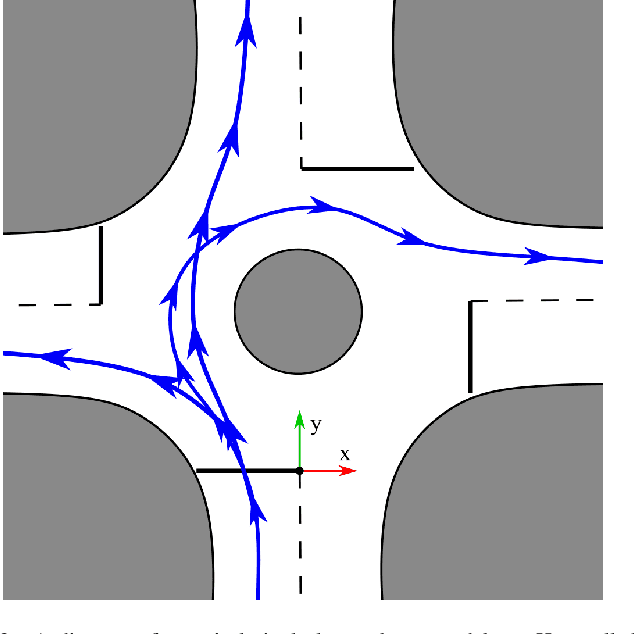

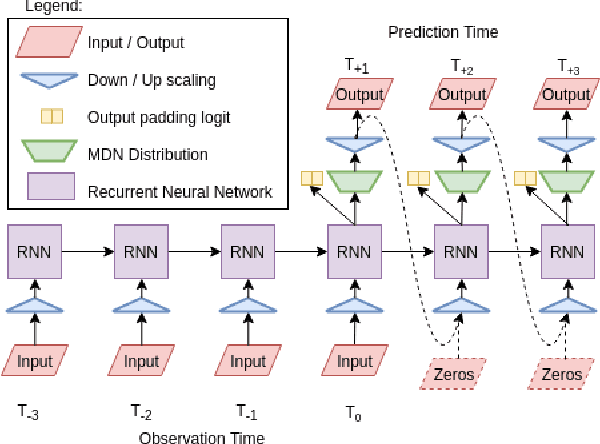

Naturalistic Driver Intention and Path Prediction using Recurrent Neural Networks

Jul 26, 2018

Abstract: Understanding the intentions of drivers at intersections is a critical component for autonomous vehicles. Urban intersections that do not have traffic signals are a common epicentre of highly variable vehicle movement and interactions. We present a method for predicting driver intent at urban intersections through multi-modal trajectory prediction with uncertainty. Our method is based on recurrent neural networks combined with a mixture density network output layer. To consolidate the multi-modal nature of the output probability distribution, we introduce a clustering algorithm that extracts the set of possible paths that exist in the prediction output and ranks them according to likelihood. To verify the method's performance and generalizability, we present a real-world dataset that consists of over 23,000 vehicles traversing five different intersections, collected using a vehicle-mounted, lidar-based tracking system. An array of metrics is used to demonstrate the performance of the model against several baselines.
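To illustrate what a mixture density output represents, the sketch below evaluates the log-likelihood of a 2D position under a mixture of axis-aligned Gaussians and orders the components by mixture weight. This is a simplified illustration only: the paper's clustering algorithm is not described in the abstract and is not reproduced here, and the function names and the diagonal-covariance assumption are hypothetical.

```python
import math


def gaussian_mixture_log_likelihood(point, weights, means, stds):
    """Log-density of a 2D point under a mixture of axis-aligned Gaussians.

    weights: mixture weights, positive and summing to 1
    means:   list of (mean_x, mean_y) per component
    stds:    list of (std_x, std_y) per component, all positive
    """
    x, y = point
    log_terms = []
    for w, (mx, my), (sx, sy) in zip(weights, means, stds):
        log_norm = -math.log(2.0 * math.pi * sx * sy)
        quad = -0.5 * (((x - mx) / sx) ** 2 + ((y - my) / sy) ** 2)
        log_terms.append(math.log(w) + log_norm + quad)
    # Log-sum-exp keeps the sum numerically stable for small densities.
    peak = max(log_terms)
    return peak + math.log(sum(math.exp(t - peak) for t in log_terms))


def rank_components(weights):
    """Indices of the mixture components, most probable first."""
    return sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
```

Training a mixture density network typically minimizes the negative of this log-likelihood over the observed future positions; ranking by weight is only a crude stand-in for ranking extracted paths by likelihood.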
