Alessandro Corbetta

Understanding complex crowd dynamics with generative neural simulators

Dec 03, 2024

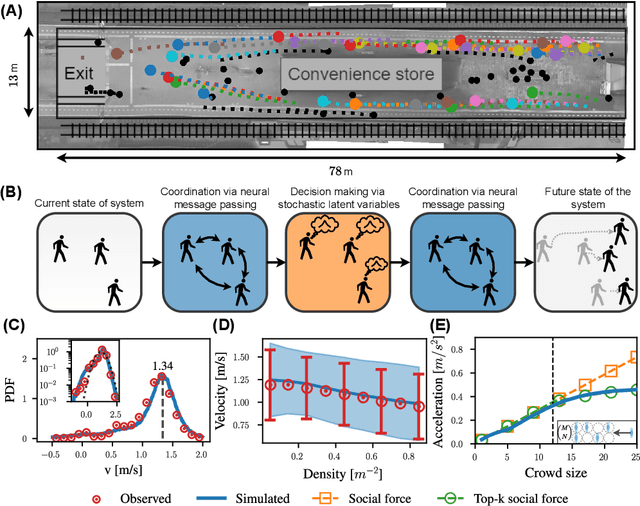

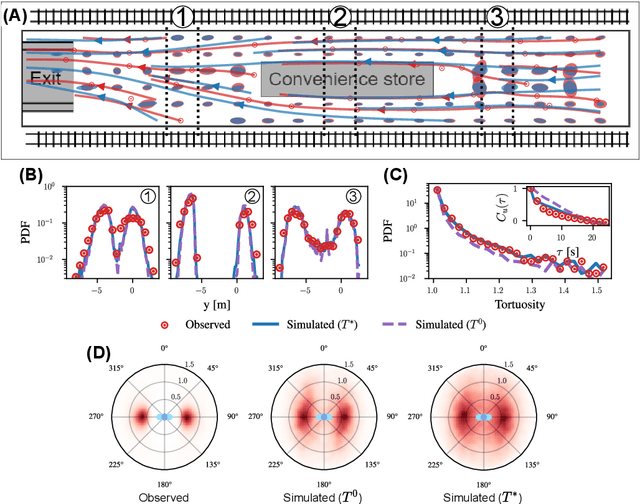

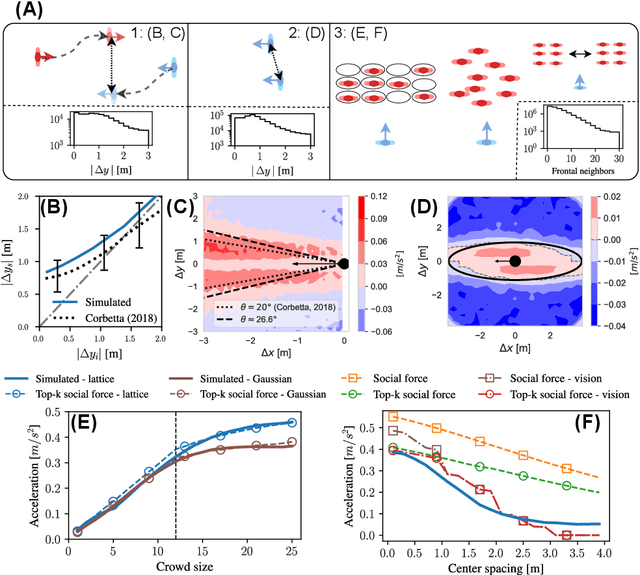

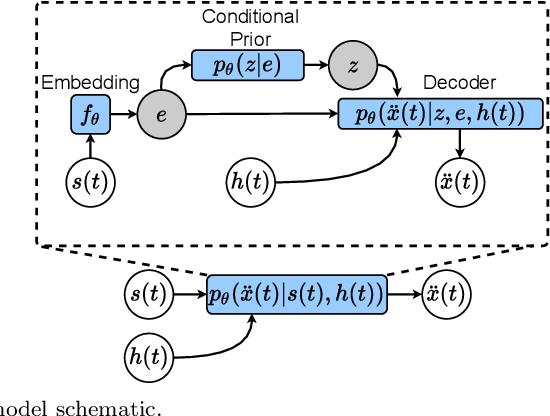

Abstract: Understanding the dynamics of pedestrian crowds is an outstanding challenge crucial for designing efficient urban infrastructure and ensuring safe crowd management. To this end, both small-scale laboratory and large-scale real-world measurements have been used. However, these approaches respectively lack statistical resolution and parametric controllability, both essential to discovering physical relationships underlying the complex stochastic dynamics of crowds. Here, we establish an investigation paradigm that offers laboratory-like controllability, while ensuring the statistical resolution of large-scale real-world datasets. Using our data-driven Neural Crowd Simulator (NeCS), which we train on large-scale data and validate against key statistical features of crowd dynamics, we show that we can perform effective surrogate crowd dynamics experiments without training on specific scenarios. We not only reproduce known experimental results on pairwise avoidance, but also uncover the vision-guided and topological nature of N-body interactions. These findings show how virtual experiments based on neural simulation enable data-driven scientific discovery.

Enhancing lattice kinetic schemes for fluid dynamics with Lattice-Equivariant Neural Networks

May 22, 2024

Abstract: We present a new class of equivariant neural networks, hereby dubbed Lattice-Equivariant Neural Networks (LENNs), designed to satisfy local symmetries of a lattice structure. Our approach develops within a recently introduced framework aimed at learning neural network-based surrogate models of Lattice Boltzmann collision operators. Whenever neural networks are employed to model physical systems, respecting symmetries and equivariance properties has been shown to be key for accuracy, numerical stability, and performance. Here, hinging on ideas from group representation theory, we define trainable layers whose algebraic structure is equivariant with respect to the symmetries of the lattice cell. Our method naturally allows for efficient implementations, both in terms of memory usage and computational costs, supporting scalable training/testing for lattices in two spatial dimensions and higher, as the size of the symmetry group grows. We validate and test our approach considering 2D and 3D flowing dynamics, both in laminar and turbulent regimes. We compare with group-averaged symmetric networks and with plain, non-symmetric networks, showing how our approach unlocks the (a-posteriori) accuracy and training stability of the former models, and the training/inference speed of the latter networks (LENNs are about one order of magnitude faster than group-averaged networks in 3D). Our work opens the way to practical utilization of machine learning-augmented Lattice Boltzmann CFD in real-world simulations.
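To make the comparison with group-averaged networks concrete, the following is a minimal sketch of group averaging, not the LENN construction itself. It assumes a 3x3 grid as a stand-in for a 2D lattice cell, the eight symmetries of the square (rotations and reflections) as the symmetry group, and a made-up, deliberately non-symmetric map `plain_map` in place of a trained network. All function names are hypothetical.

```python
import math

def rot90(g):
    """Rotate a square grid by 90 degrees counter-clockwise."""
    n = len(g)
    return [[g[j][n - 1 - i] for j in range(n)] for i in range(n)]

def flip(g):
    """Mirror a grid left-to-right."""
    return [row[::-1] for row in g]

def power(op, k):
    """Return the operation that applies `op` k times."""
    def apply(g):
        for _ in range(k):
            g = op(g)
        return g
    return apply

def square_symmetries():
    """All 8 symmetries of the square, each paired with its inverse."""
    elems = []
    for k in range(4):
        inv_k = (4 - k) % 4
        elems.append((power(rot90, k), power(rot90, inv_k)))
        elems.append((lambda g, k=k: flip(power(rot90, k)(g)),
                      lambda g, inv_k=inv_k: power(rot90, inv_k)(flip(g))))
    return elems

def plain_map(x):
    """Hypothetical non-symmetric map standing in for an unconstrained network."""
    n = len(x)
    return [[math.tanh((1 + i + 3 * j) * x[i][j] + x[0][1]) for j in range(n)]
            for i in range(n)]

def group_averaged(f):
    """Symmetrize f: average g^{-1}(f(g(x))) over all group elements g."""
    elems = square_symmetries()
    def f_sym(x):
        n = len(x)
        acc = [[0.0] * n for _ in range(n)]
        for g, g_inv in elems:
            y = g_inv(f(g(x)))
            for i in range(n):
                for j in range(n):
                    acc[i][j] += y[i][j] / len(elems)
        return acc
    return f_sym

def max_abs_diff(a, b):
    """Largest entry-wise absolute difference between two grids."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

x = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
f_sym = group_averaged(plain_map)
# Equivariance check: transforming the input transforms the output the same way.
worst = max(max_abs_diff(f_sym(h(x)), h(f_sym(x))) for h, _ in square_symmetries())
print("equivariance error of averaged map:", worst)
print("equivariance error of plain map:",
      max_abs_diff(plain_map(rot90(x)), rot90(plain_map(x))))
```

In this sketch the averaged map evaluates `plain_map` once per group element, so its cost grows with the size of the symmetry group. This is the overhead that layers equivariant by construction are meant to avoid.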

How neural networks learn to classify chaotic time series

Jun 04, 2023

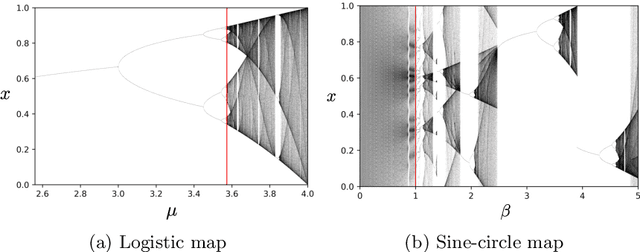

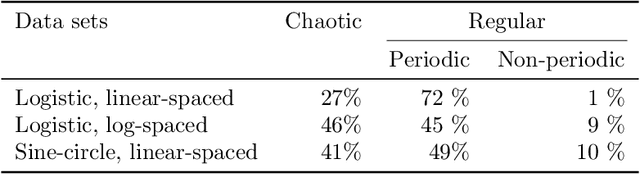

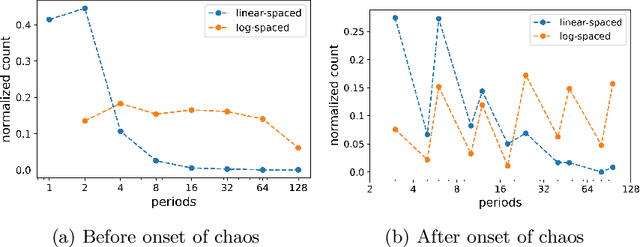

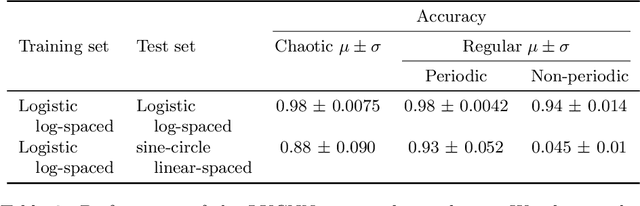

Abstract: Neural networks are increasingly employed to model, analyze and control non-linear dynamical systems ranging from physics to biology. Owing to their universal approximation capabilities, they regularly outperform state-of-the-art model-driven methods in terms of accuracy, computational speed, and/or control capabilities. On the other hand, neural networks are very often taken as black boxes whose explainability is challenged, among other things, by the huge number of trainable parameters. In this paper, we tackle the outstanding issue of analyzing the inner workings of neural networks trained to classify regular-versus-chaotic time series. This setting, well-studied in dynamical systems, enables thorough formal analyses. We focus specifically on a family of networks dubbed Large Kernel Convolutional Neural Networks (LKCNN), recently introduced by Boullé et al. (2021). These non-recursive networks have been shown to outperform other established architectures (e.g. residual networks, shallow neural networks and fully convolutional networks) at this classification task. Furthermore, they outperform "manual" classification approaches based on direct reconstruction of the Lyapunov exponent. We find that LKCNNs use qualitative properties of the input sequence. In particular, we show that the relation between input periodicity and activation periodicity is key for the performance of LKCNN models. Low-performing models show, in fact, periodic activations analogous to those of random untrained models. This could give very general criteria for identifying, a priori, trained models that have poor accuracy.
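As a point of reference for the "manual" baseline mentioned above, here is a minimal sketch of classifying a trajectory as regular or chaotic from the sign of an estimated Lyapunov exponent. The logistic map is an assumed example system, and the function names, the initial condition and the iteration counts are illustrative choices rather than anything taken from the paper. The sketch also uses the known map derivative, which is simpler than reconstructing the exponent from a time series alone.

```python
import math

def logistic_lyapunov(r, x0=0.3, n_transient=1000, n_steps=20000):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x).

    The exponent is the long-time average of log|f'(x)| along the orbit,
    with f'(x) = r*(1 - 2x).
    """
    x = x0
    # Discard the transient so the average is taken on the attractor.
    for _ in range(n_transient):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_steps):
        deriv = abs(r * (1.0 - 2.0 * x))
        # Guard against log(0) if the orbit lands exactly on x = 0.5.
        total += math.log(max(deriv, 1e-300))
        x = r * x * (1.0 - x)
    return total / n_steps

def classify(r):
    """Label the dynamics as chaotic if the estimated exponent is positive."""
    return "chaotic" if logistic_lyapunov(r) > 0.0 else "regular"

for r in (3.2, 3.9):
    print(r, round(logistic_lyapunov(r), 3), classify(r))
```

A negative estimate indicates nearby trajectories converge (regular, here a period-2 orbit at r = 3.2), while a positive one indicates exponential divergence (chaotic, here r = 3.9).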

Towards a Numerical Proof of Turbulence Closure

Feb 18, 2022

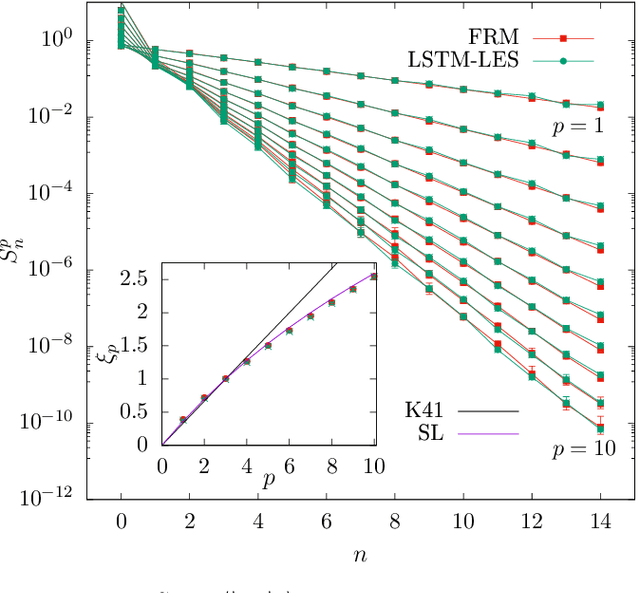

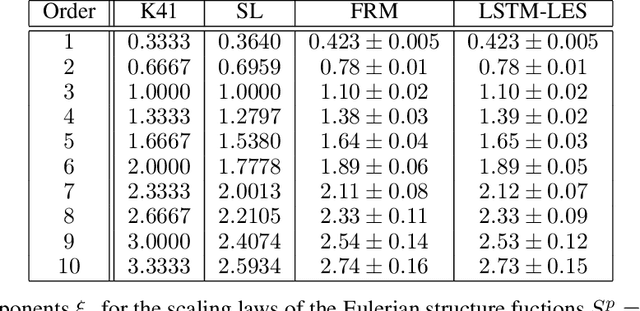

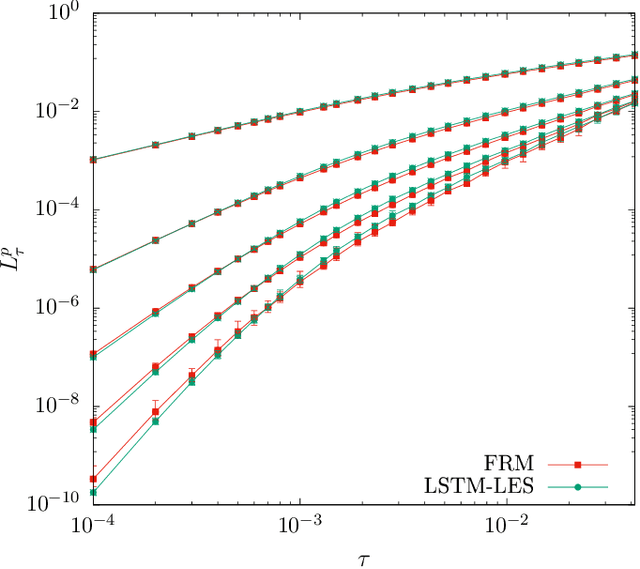

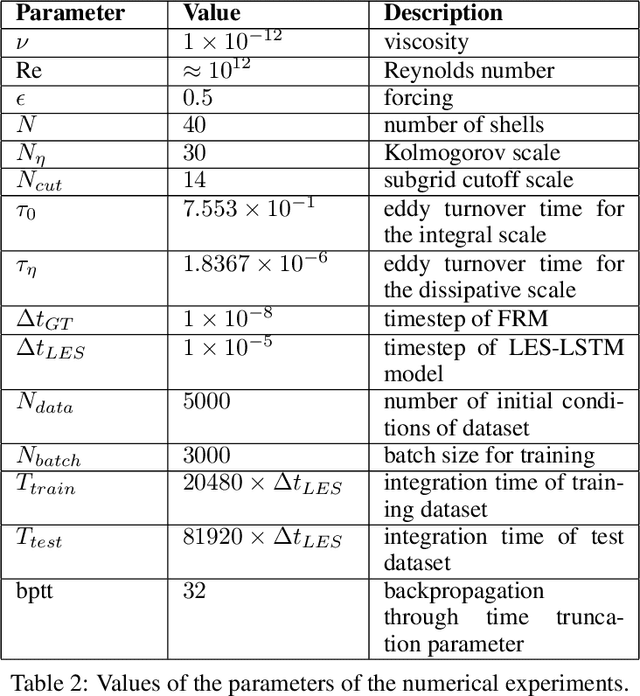

Abstract: The development of turbulence closure models, parametrizing the influence of small non-resolved scales on the dynamics of large resolved ones, is an outstanding theoretical challenge with vast applicative relevance. We present a closure, based on deep recurrent neural networks, that quantitatively reproduces, within statistical errors, Eulerian and Lagrangian structure functions and the intermittent statistics of the energy cascade, including those of subgrid fluxes. To achieve high-order statistical accuracy, and thus a stringent statistical test, we employ shell models of turbulence. Our results encourage the development of similar approaches for 3D Navier-Stokes turbulence.

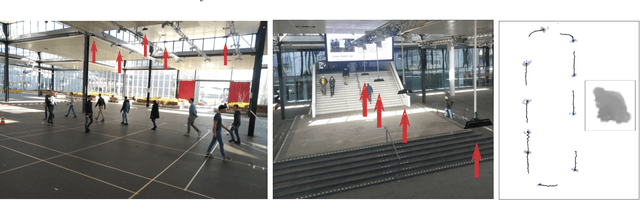

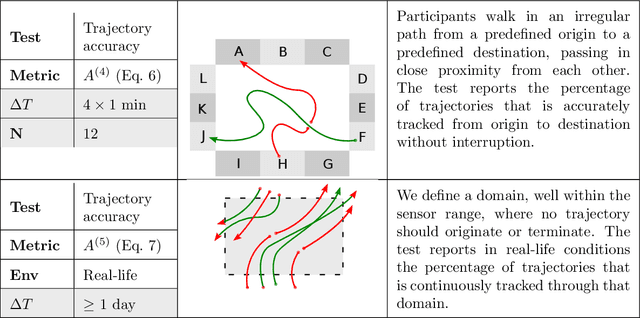

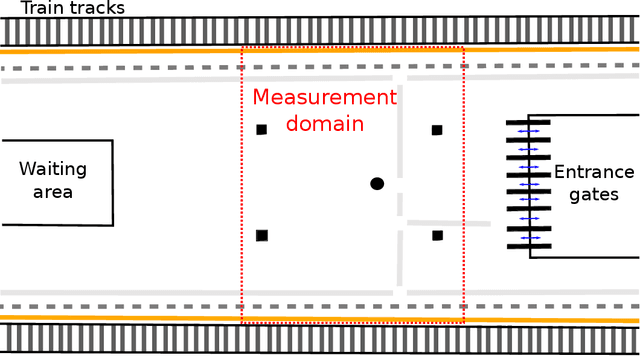

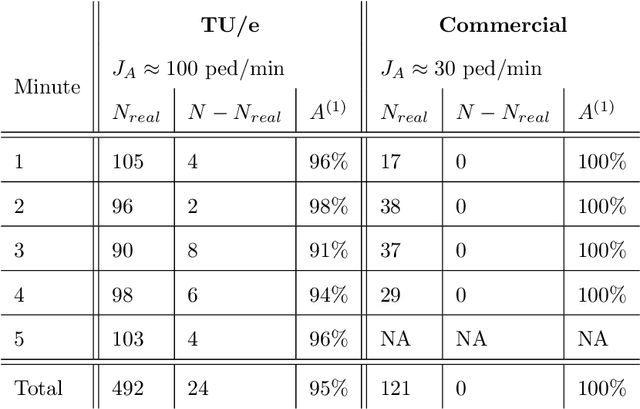

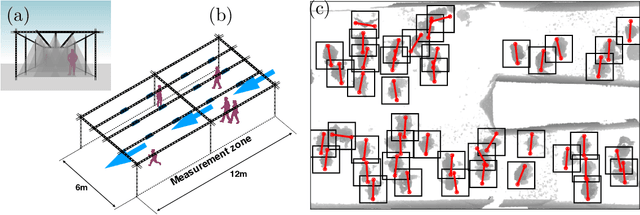

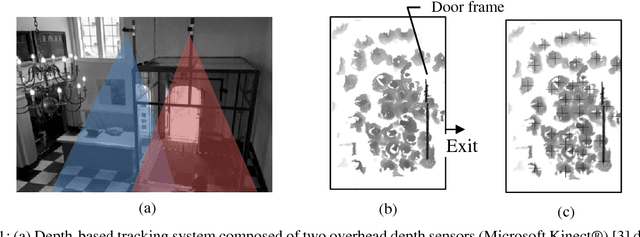

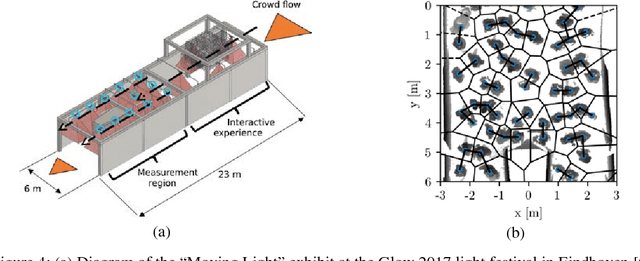

Benchmarking high-fidelity pedestrian tracking systems for research, real-time monitoring and crowd control

Aug 26, 2021

Abstract: High-fidelity pedestrian tracking in real-life conditions has been an important tool in fundamental crowd dynamics research, making it possible to quantify the statistics of relevant observables including walking velocities, mutual distances and body orientations. As this technology advances, it is also becoming increasingly useful in society. In fact, continued urbanization is overwhelming existing pedestrian infrastructures such as transportation hubs and stations, generating an urgent need for real-time, highly accurate usage data, aimed both at flow monitoring and at understanding the dynamics. To successfully employ pedestrian tracking techniques in research and technology, it is crucial to validate and benchmark them for accuracy. This is not only necessary to guarantee data quality, but also to identify systematic errors. In this contribution, we present and discuss a benchmark suite, towards an open standard in the community, for privacy-respectful pedestrian tracking techniques. The suite is technology-independent and is applicable to academic and commercial pedestrian tracking systems, operating both in lab environments and real-life conditions. The benchmark suite consists of 5 tests addressing specific aspects of pedestrian tracking quality, including accurate crowd flux estimation, density estimation, position detection and trajectory accuracy. The outputs of the tests are quality factors expressed as single numbers. We provide the benchmark results for two tracking systems, both operating in real-life conditions: one commercial, and the other based on overhead depth-maps and developed at TU Eindhoven. We discuss the results on the basis of the quality factors and report on the typical sensor and algorithmic performance. This enables us to highlight the current state of the art and its limitations, and to provide installation recommendations, with specific attention to multi-sensor setups and data stitching.

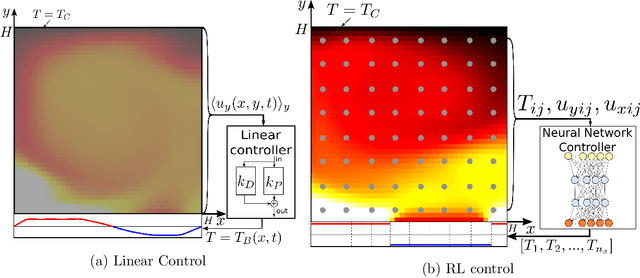

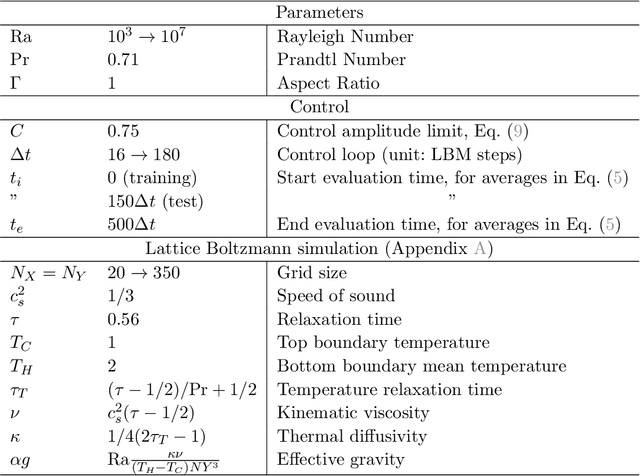

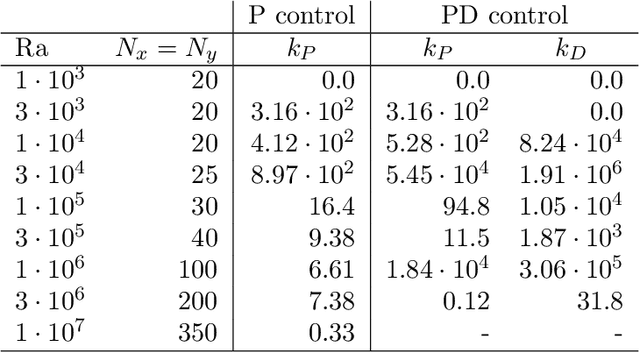

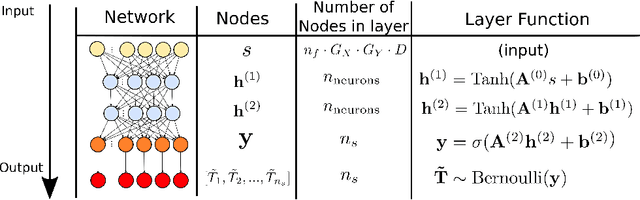

Controlling Rayleigh-Bénard convection via Reinforcement Learning

Mar 31, 2020

Abstract: Thermal convection is ubiquitous in nature as well as in many industrial applications. The identification of effective control strategies to, e.g., suppress or enhance the convective heat exchange under fixed external thermal gradients is an outstanding fundamental and technological issue. In this work, we explore a novel approach, based on a state-of-the-art Reinforcement Learning (RL) algorithm, which is capable of significantly reducing the heat transport in a two-dimensional Rayleigh-Bénard system by applying small temperature fluctuations to the lower boundary of the system. By using numerical simulations, we show that our RL-based control is able to stabilize the conductive regime and bring the onset of convection up to a Rayleigh number $Ra_c \approx 3 \cdot 10^4$, whereas in the uncontrolled case $Ra_c = 1708$. Additionally, for $Ra > 3 \cdot 10^4$, our approach outperforms other state-of-the-art control algorithms, reducing the heat flux by a factor of about $2.5$. In the last part of the manuscript, we address theoretical limits connected to controlling unstable and chaotic dynamics such as the one considered here. We show that controllability is hindered by observability and/or the capabilities of actuating actions, which can be quantified in terms of characteristic time delays. When these delays become comparable with the Lyapunov time of the system, control becomes impossible.

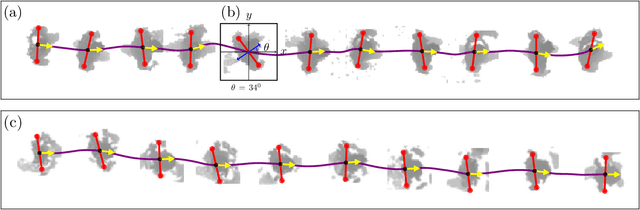

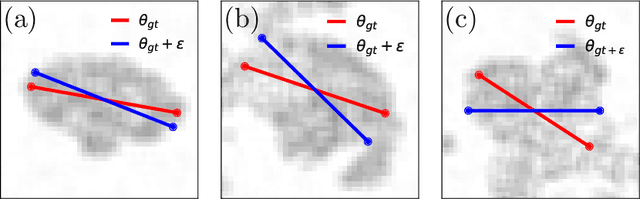

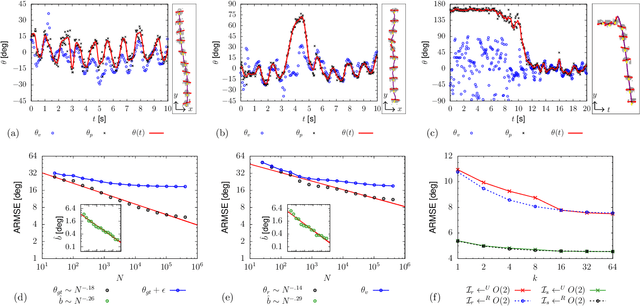

Pedestrian orientation dynamics from high-fidelity measurements

Jan 14, 2020

Abstract: We investigate in real-life conditions and with very high accuracy the dynamics of body rotation, or yawing, of walking pedestrians, a highly complex task due to the wide variety in shapes, postures and walking gestures. We propose a novel measurement method based on a deep neural architecture that we train on the basis of generic physical properties of the motion of pedestrians. Specifically, we leverage the strong statistical correlation between individual velocity and body orientation: the velocity direction is typically orthogonal to the shoulder line. We make the reasonable assumption that this approximation, although instantaneously slightly imperfect, is correct on average. This enables us to use velocity data as training labels for a highly accurate point-estimator of individual orientation, which we can train with no dedicated annotation labor. We discuss the measurement accuracy and show the error scaling, both on synthetic and real-life data: we show that our method is capable of estimating orientation with an error as low as 7.5 degrees. This tool opens up new possibilities in the studies of human crowd dynamics where orientation is key. By analyzing the dynamics of body rotation in real-life conditions, we show that the instantaneous velocity direction can be described by the combination of orientation and a random delay, where randomness is provided by an Ornstein-Uhlenbeck process centered on an average delay of 100 ms. Quantifying these dynamics was only possible thanks to a tool as precise as the one proposed.
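The two ingredients described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' pipeline: `shoulder_line_angle` turns a velocity vector into an orientation label using the orthogonality assumption, and `simulate_ou_delay` integrates an Ornstein-Uhlenbeck process around a 100 ms mean delay with a simple Euler-Maruyama step. The relaxation rate, noise amplitude, time step and function names are hypothetical values chosen for the example. Only the 100 ms mean comes from the abstract.

```python
import math
import random

def shoulder_line_angle(vx, vy):
    """Shoulder-line angle in [0, pi), assumed orthogonal to the velocity.

    The shoulder line has no preferred direction, so the angle is taken
    modulo pi.
    """
    heading = math.atan2(vy, vx)
    return (heading + math.pi / 2.0) % math.pi

def simulate_ou_delay(n_steps, dt=0.01, mean=0.1, rate=5.0, sigma=0.02,
                      start=0.1, seed=0):
    """Euler-Maruyama path of d(tau) = rate*(mean - tau)*dt + sigma*dW.

    `mean` is the average delay in seconds (100 ms); `rate` and `sigma`
    are hypothetical parameters, not values from the paper.
    """
    rng = random.Random(seed)
    tau = start
    path = [tau]
    for _ in range(n_steps):
        noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        tau += rate * (mean - tau) * dt + noise
        path.append(tau)
    return path

# A pedestrian walking along +x has a shoulder line along y (angle pi/2).
print(shoulder_line_angle(1.0, 0.0))
# Sample delay path fluctuating around 0.1 s.
delays = simulate_ou_delay(1000)
print(sum(delays) / len(delays))
```

Setting `sigma=0` reduces the process to a deterministic relaxation towards the mean delay, which is a quick way to sanity-check the integrator.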

Deep learning velocity signals allows to quantify turbulence intensity

Nov 14, 2019

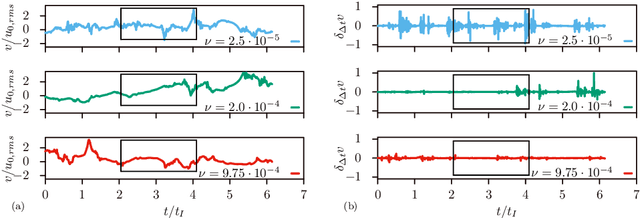

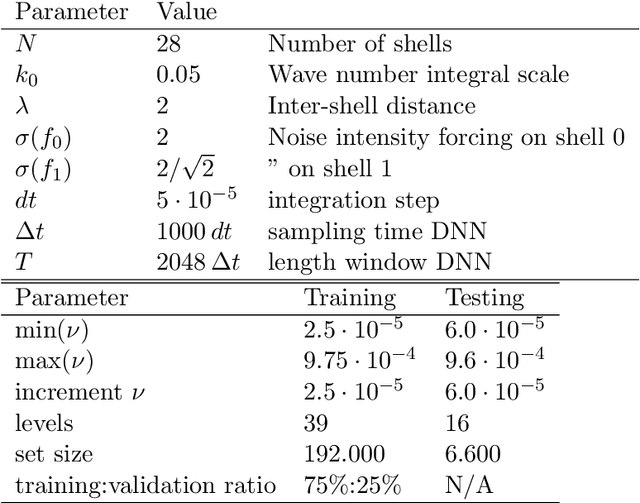

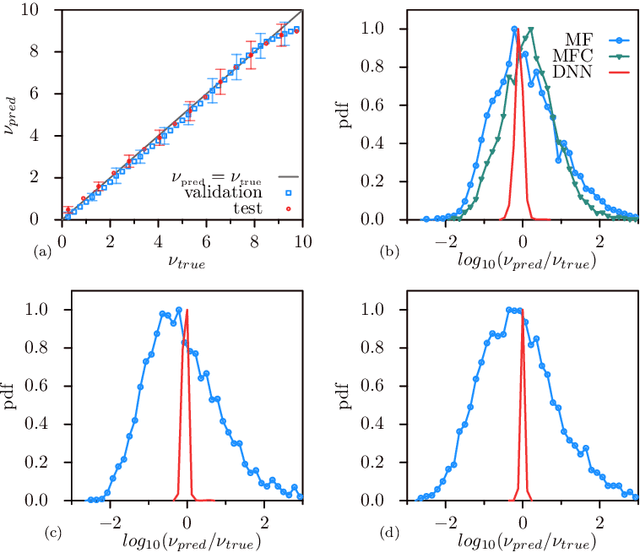

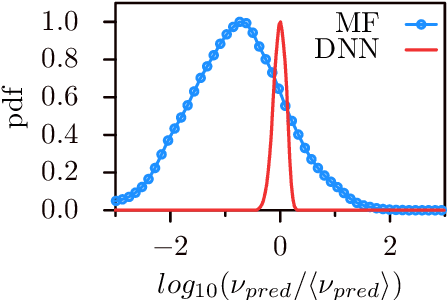

Abstract: Turbulence, the ubiquitous and chaotic state of fluid motions, is characterized by strong and statistically non-trivial fluctuations of the velocity field, over a wide range of length- and time-scales, and it can be quantitatively described only in terms of statistical averages. Strong non-stationarities hinder the possibility to achieve statistical convergence, making it impossible to define the turbulence intensity and, in particular, its basic dimensionless estimator, the Reynolds number. Here we show that by employing Deep Neural Networks (DNN) we can accurately estimate the Reynolds number within $15\%$ accuracy, from a statistical sample as small as two large-scale eddy-turnover times. In contrast, physics-based statistical estimators are limited by the rate of convergence of the central limit theorem, and provide, for the same statistical sample, an error at least $100$ times larger. Our findings open up new perspectives in the possibility to quantitatively define and, therefore, study highly non-stationary turbulent flows as ordinarily found in nature as well as in industrial processes.

StampNet: unsupervised multi-class object discovery

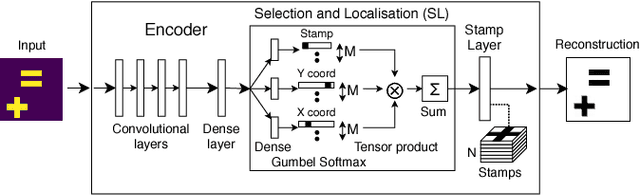

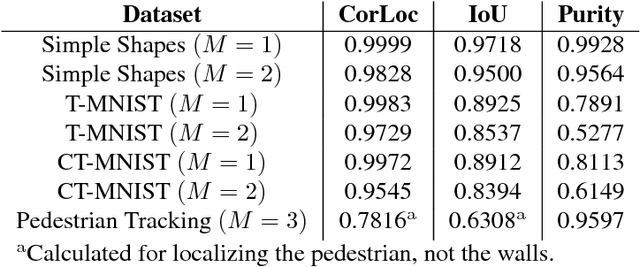

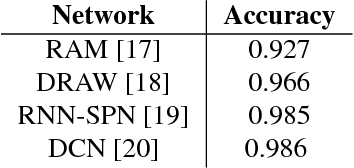

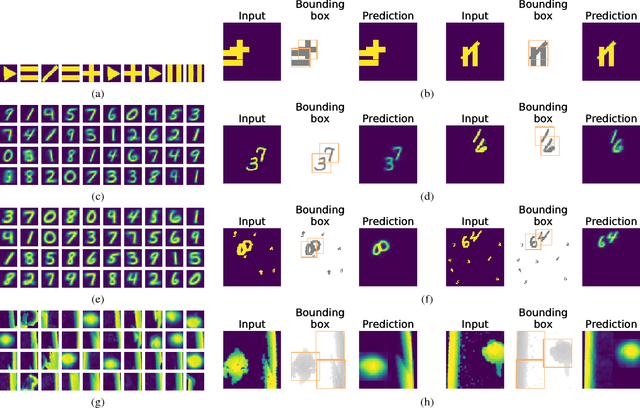

Feb 07, 2019

Abstract: Unsupervised object discovery in images involves uncovering recurring patterns that define objects and discriminate them from the background. This is more challenging than image clustering, as the size and the location of the objects are not known: this adds degrees of freedom and increases the problem complexity. In this work, we propose StampNet, a novel autoencoding neural network that localizes shapes (objects) over a simple background in images and categorizes them simultaneously. StampNet consists of a discrete latent space that is used to categorize objects and to determine the location of the objects. The object categories are formed during training, resulting in the discovery of a fixed set of objects. We present a set of experiments demonstrating that StampNet is able to localize and cluster multiple overlapping shapes of varying complexity, including the digits from the MNIST dataset. We also present an application of StampNet to the localization of pedestrians in overhead depth-maps.

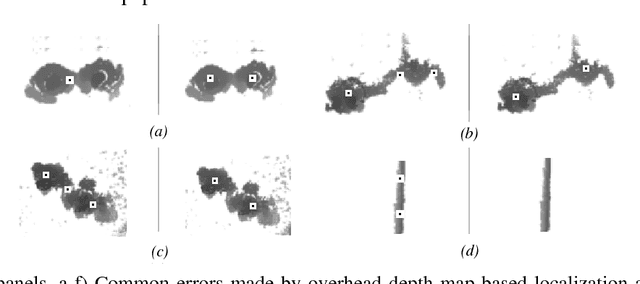

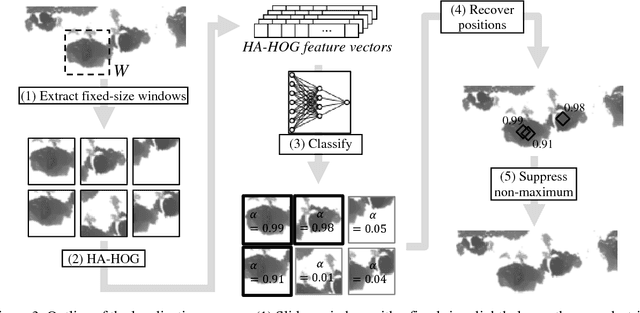

Accurate pedestrian localization in overhead depth images via Height-Augmented HOG

May 31, 2018

Abstract: We tackle the challenge of reliably and automatically localizing pedestrians in real-life conditions through overhead depth imaging at unprecedentedly high densities. Leveraging a combination of Histogram of Oriented Gradients-like feature descriptors, neural networks, data augmentation and custom data annotation strategies, this work contributes a robust and scalable machine learning-based localization algorithm, which delivers near-human localization performance in real time, even with a local pedestrian density of about 3 ped/m², a case in which most state-of-the-art algorithms degrade significantly in performance.
